2022 Taobao Creation Festival 3D Live Virtual Camp Technology Highlights Revealed

The Taobao Creation Festival debuts on August 24, 2022! To explore the next generation of immersive online shopping, the festival is building a 20,000-square-meter panoramic "Future Camp" offline in the Canton Fair exhibition hall and, for the first time, launching an online virtual interactive space that can host tens of thousands of people at once: "3D Crazy City: Creation Festival Virtual Camp".

In the 3D virtual camp, users get game-quality high-definition visuals in a lightweight way and enter the "3D people, goods, and venue" world as virtual characters, interacting with other players and with 3D products. The camp caters to a new generation of consumers who love to play and create, and uses new technology to deliver a new shopping experience.

Let’s take a look at the experience & technical highlights of the 3D live virtual camp:

Lightweight naked-eye 3D “real game”

In the past, if you liked a 3D game, you had to download a client of several gigabytes, and then uninstall it when you wanted to try a new game because it took up too much storage. In the Creation Festival virtual camp, you can quickly load a naked-eye 3D, interactive, live-streamed, high-definition "game" that you can even shop in, and your Taobao app "hasn't gotten any bigger at all." It loads on your phone with a "whoosh"~

▐ Technical Highlights: Cloud Cost Challenge

Traditionally, building a 3D game application for mobile means integrating a game engine of tens or hundreds of megabytes on the device and downloading several gigabytes of assets, which is currently unrealistic inside the Taobao app. Cloud rendering solves this problem well. Powerful GPUs in the cloud render the high-definition, complex scenes, so users do not need to download large resource files or install anything. The only thing the device has to do is play the real-time rendered media stream.

The biggest problem with this approach is the cost of the cloud rendering machines. To reduce it, we applied performance optimizations on the rendering side, including model polygon reduction, DP (draw call) merging, texture optimization, and lower-resolution video assets. For machine scheduling, we developed a time-based dynamic scale-out and scale-in strategy tailored to the business scenario to maximize machine utilization.
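The article does not describe how the time-based scaling strategy is implemented. As a rough illustration only, a schedule could be derived from a per-hour traffic forecast as sketched below. Every name and number here (`SESSIONS_PER_MACHINE`, `HEADROOM_PERCENT`, `MIN_MACHINES`, the forecast values) is a hypothetical assumption, not a figure from the project.

```python
# Hypothetical capacity figures, chosen only for illustration.
SESSIONS_PER_MACHINE = 8   # concurrent render sessions one GPU machine can host
HEADROOM_PERCENT = 20      # spare capacity kept for unexpected spikes
MIN_MACHINES = 2           # floor so the service never scales to zero


def machines_needed(expected_sessions):
    """Return the machine count for a forecast number of concurrent sessions."""
    padded = expected_sessions * (100 + HEADROOM_PERCENT)
    per_machine = 100 * SESSIONS_PER_MACHINE
    needed = -(-padded // per_machine)  # integer ceiling division
    return max(MIN_MACHINES, needed)


def build_schedule(forecast_by_hour):
    """Map each forecast hour (0-23) to a target machine count."""
    return {hour: machines_needed(sessions)
            for hour, sessions in sorted(forecast_by_hour.items())}


# Assumed forecast: quiet at night, busy at noon, peak during the evening show.
schedule = build_schedule({3: 40, 12: 800, 20: 4000})
for hour, count in schedule.items():
    print(f"{hour:02d}:00 -> {count} machines")
```

A scheduler could apply each target shortly before the hour begins, so machines are already warm when traffic arrives and are released again once the peak has passed.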

3D people and 3D products meet in the 3D virtual world for the first time

The 3D avatars that users once dressed up in "Taobao Life" have "walked" out of their small homes for the first time and into the Creation Festival's live 3D virtual camp. Here you can jump around freely, light bonfires, view 3D products, watch sellers' live broadcasts, and interact with other players. 3D people, 3D products, and 3D environments combine into an immersive camp adventure.

▐ Technical highlights: Building the people, goods, and venue, and how they interact

Interaction between people. Bringing the 3D characters from Taobao Life into the 3D virtual world first incurs some asset conversion and rendering cost. Beyond that, users running around the camp means their positions must be synchronized in real time. For real-time roaming, we use frame synchronization to update each user's position at a fixed frequency. Ideally users never notice any stutter, but network congestion inevitably causes jitter between frames, and if a synchronization update arrives later than a certain interval, the character visibly jumps. To compensate, we apply a motion compensation algorithm when rendering characters; simply put, it simulates the intermediate motion so that position changes transition smoothly. For synchronizing interaction data, we adopt the AOI (area of interest) grid algorithm to handle data synchronization when many users share the same screen. Together, these make 3D characters move more smoothly in the virtual world.
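The two ideas in this paragraph can be sketched in a few lines. The article does not say which motion compensation algorithm is used, so the sketch below substitutes plain linear interpolation between the last two synchronized positions. The `AoiGrid` class is a generic uniform-grid area-of-interest structure; its name, its methods, and the cell size are assumptions for illustration, not the project's code.

```python
from collections import defaultdict


def interpolate(prev, curr, alpha):
    """Linearly blend two (x, y) positions; alpha is clamped to [0, 1]."""
    alpha = max(0.0, min(1.0, alpha))
    return (prev[0] + (curr[0] - prev[0]) * alpha,
            prev[1] + (curr[1] - prev[1]) * alpha)


class AoiGrid:
    """Uniform grid that limits position broadcasts to nearby players."""

    def __init__(self, cell_size):
        self.cell_size = cell_size
        self.cells = defaultdict(set)  # (cx, cy) -> ids of players in that cell
        self.where = {}                # player id -> (cx, cy)

    def _cell(self, x, y):
        return (int(x // self.cell_size), int(y // self.cell_size))

    def move(self, player, x, y):
        """Record a player's new position, switching cells if needed."""
        new = self._cell(x, y)
        old = self.where.get(player)
        if old == new:
            return
        if old is not None:
            self.cells[old].discard(player)
        self.cells[new].add(player)
        self.where[player] = new

    def neighbours(self, player):
        """Return players in the same cell or one of the 8 surrounding cells."""
        cx, cy = self.where[player]
        found = set()
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                found |= self.cells.get((cx + dx, cy + dy), set())
        found.discard(player)
        return found


grid = AoiGrid(cell_size=10)
grid.move("alice", 1, 1)
grid.move("bob", 12, 3)     # adjacent cell: receives alice's updates
grid.move("carol", 55, 55)  # far away: does not
print(grid.neighbours("alice"))
```

With a grid like this, a position update is sent only to `neighbours(player)` rather than to everyone in the camp, and the renderer can call `interpolate` each frame with `alpha` set to the fraction of the sync interval that has elapsed.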

Interaction between people and products. Letting users freely inspect the details of a 3D product inside a virtual scene is not easy. We had two options: mobile rendering and cloud rendering. Taobao already has relatively mature mobile rendering solutions. Unfortunately, the mobile rendering engine and the cloud rendering engine are not the same, so one product model cannot be shared between them, and mobile rendering also raises problems such as dynamic model downloading and polygon reduction. To avoid these drawbacks, we again chose cloud rendering. By dynamically switching the camera perspective and responding to the user's interaction commands in real time, we let users rotate and zoom to inspect 3D products.

1080P resolution and cool high-definition quality

In the past, you may have tried an in-page mini-game in a mobile app and found it as blurry as a mosaic, dropping frames whenever you ran or jumped. The Creation Festival's new 3D virtual camp offers high-definition images and smooth motion, and the stage lighting can dynamically track you like a "star"~

▐ Technical highlights: content production and real-time transmission of cloud rendering

In the cloud, we use Unreal Engine for real-time rendering and combine it with dynamic camera movement, dynamic stage lighting, multi-view characters, particle effects, and similar techniques to make the picture look cooler and give users a stronger sense of interaction. To deliver high-definition images to users' phones, we have to balance image quality, stutter, and latency. With the GRTN transmission network jointly built by Taobao and Alibaba Cloud, together with self-developed encoding and decoding algorithms, we keep the picture as clear as possible.

Interactive virtual scenes that do not freeze

In the past, you may have sat in a Taobao anchor's live broadcast room counting down "3, 2, 1, start grabbing!" and felt the thrill of an ultra-low-latency flash sale. But as a viewer outside the screen, you could not directly influence what the host did in the broadcast room. In the 3D virtual camp, you can dance and say hello, tap merchants' 3D products, and watch your favorite anchors' explanations. You can truly "communicate with the merchants and anchors in the same frame."

▐ Technical highlights: Full-link ultra-low latency

Unlike one-way, non-interactive video transmission, an ultra-low-latency, smooth interactive experience requires us to upload the user's command, render the frame in real time, and return the rendered frame to the user's phone, all within a hundred milliseconds. First, we weigh the user's device, network, and access point conditions to dynamically select the streaming node closest to the user and adjust the cloud streaming strategy accordingly (including the choice of resolution and encoding method). Second, during transmission, weak-network control strategies such as real-time bandwidth feedback, FEC, and dynamic buffers are used to combat network jitter, keeping end-to-end latency at a reasonable level and ultimately ensuring smooth interaction in the virtual world.
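FEC (forward error correction) is one of the weak-network strategies named above. The article does not say which FEC scheme is used, so the sketch below shows the simplest variant as an illustration: one XOR parity packet per group of equal-length packets, which lets the receiver rebuild a single lost packet without waiting for a retransmission. The function names are hypothetical.

```python
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: List[bytes]) -> bytes:
    """XOR all equal-length packets in a group into one parity packet."""
    parity = bytes(len(packets[0]))
    for packet in packets:
        parity = xor_bytes(parity, packet)
    return parity


def recover(received: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild at most one missing packet (marked None) from the parity packet."""
    missing = [i for i, packet in enumerate(received) if packet is None]
    if len(missing) > 1:
        raise ValueError("single-parity FEC can repair only one lost packet")
    repaired = list(received)
    if missing:
        rebuilt = parity
        for packet in received:
            if packet is not None:
                rebuilt = xor_bytes(rebuilt, packet)
        repaired[missing[0]] = rebuilt
    return repaired


group = [b"abcd", b"efgh", b"ijkl"]
parity = make_parity(group)
# The second packet is lost in transit; the receiver rebuilds it locally.
print(recover([b"abcd", None, b"ijkl"], parity))
```

The trade-off is extra bandwidth (one additional packet per group) in exchange for avoiding a retransmission round trip, which matters when the whole interaction loop has a budget of about a hundred milliseconds.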

Conclusion

This Creation Festival 3D virtual camp is the first online attempt of Taobao’s virtual interactive space “3D Crazy City” series. In the next step, we will combine cloud real-time rendering and XR/CG technology to realize virtual scene customization, support larger-scale user real-time interaction, and bring a more immersive interactive experience to consumers.

Why is Gaussian Splatting so popular in autonomous driving that NeRF is starting to be abandoned?

Jan 17, 2024 pm 02:57 PM

Why is Gaussian Splatting so popular in autonomous driving that NeRF is starting to be abandoned?

Jan 17, 2024 pm 02:57 PM

Written above & the author’s personal understanding Three-dimensional Gaussiansplatting (3DGS) is a transformative technology that has emerged in the fields of explicit radiation fields and computer graphics in recent years. This innovative method is characterized by the use of millions of 3D Gaussians, which is very different from the neural radiation field (NeRF) method, which mainly uses an implicit coordinate-based model to map spatial coordinates to pixel values. With its explicit scene representation and differentiable rendering algorithms, 3DGS not only guarantees real-time rendering capabilities, but also introduces an unprecedented level of control and scene editing. This positions 3DGS as a potential game-changer for next-generation 3D reconstruction and representation. To this end, we provide a systematic overview of the latest developments and concerns in the field of 3DGS for the first time.

Choose camera or lidar? A recent review on achieving robust 3D object detection

Jan 26, 2024 am 11:18 AM

Choose camera or lidar? A recent review on achieving robust 3D object detection

Jan 26, 2024 am 11:18 AM

0.Written in front&& Personal understanding that autonomous driving systems rely on advanced perception, decision-making and control technologies, by using various sensors (such as cameras, lidar, radar, etc.) to perceive the surrounding environment, and using algorithms and models for real-time analysis and decision-making. This enables vehicles to recognize road signs, detect and track other vehicles, predict pedestrian behavior, etc., thereby safely operating and adapting to complex traffic environments. This technology is currently attracting widespread attention and is considered an important development area in the future of transportation. one. But what makes autonomous driving difficult is figuring out how to make the car understand what's going on around it. This requires that the three-dimensional object detection algorithm in the autonomous driving system can accurately perceive and describe objects in the surrounding environment, including their locations,

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

Written above & the author’s personal understanding: At present, in the entire autonomous driving system, the perception module plays a vital role. The autonomous vehicle driving on the road can only obtain accurate perception results through the perception module. The downstream regulation and control module in the autonomous driving system makes timely and correct judgments and behavioral decisions. Currently, cars with autonomous driving functions are usually equipped with a variety of data information sensors including surround-view camera sensors, lidar sensors, and millimeter-wave radar sensors to collect information in different modalities to achieve accurate perception tasks. The BEV perception algorithm based on pure vision is favored by the industry because of its low hardware cost and easy deployment, and its output results can be easily applied to various downstream tasks.

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

The latest from Oxford University! Mickey: 2D image matching in 3D SOTA! (CVPR\'24)

Apr 23, 2024 pm 01:20 PM

The latest from Oxford University! Mickey: 2D image matching in 3D SOTA! (CVPR\'24)

Apr 23, 2024 pm 01:20 PM

Project link written in front: https://nianticlabs.github.io/mickey/ Given two pictures, the camera pose between them can be estimated by establishing the correspondence between the pictures. Typically, these correspondences are 2D to 2D, and our estimated poses are scale-indeterminate. Some applications, such as instant augmented reality anytime, anywhere, require pose estimation of scale metrics, so they rely on external depth estimators to recover scale. This paper proposes MicKey, a keypoint matching process capable of predicting metric correspondences in 3D camera space. By learning 3D coordinate matching across images, we are able to infer metric relative

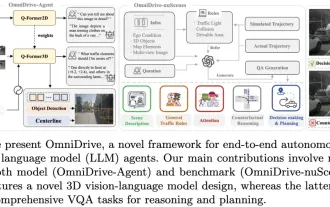

LLM is all done! OmniDrive: Integrating 3D perception and reasoning planning (NVIDIA's latest)

May 09, 2024 pm 04:55 PM

LLM is all done! OmniDrive: Integrating 3D perception and reasoning planning (NVIDIA's latest)

May 09, 2024 pm 04:55 PM

Written above & the author’s personal understanding: This paper is dedicated to solving the key challenges of current multi-modal large language models (MLLMs) in autonomous driving applications, that is, the problem of extending MLLMs from 2D understanding to 3D space. This expansion is particularly important as autonomous vehicles (AVs) need to make accurate decisions about 3D environments. 3D spatial understanding is critical for AVs because it directly impacts the vehicle’s ability to make informed decisions, predict future states, and interact safely with the environment. Current multi-modal large language models (such as LLaVA-1.5) can often only handle lower resolution image inputs (e.g.) due to resolution limitations of the visual encoder, limitations of LLM sequence length. However, autonomous driving applications require