Training a Chinese version of ChatGPT is not that difficult: you can do it with the open source Alpaca-LoRA+RTX 4090 without A100

In 2023, there seem to be only two camps left in the chatbot field: "OpenAI's ChatGPT" and "Others".

ChatGPT is powerful, but OpenAI is very unlikely to open-source it. The "other" camp has lagged behind, but many people are working on open-source alternatives, such as LLaMA, which Meta open-sourced some time ago.

LLaMA is the name of a family of models ranging from 7 billion to 65 billion parameters. The 13-billion-parameter LLaMA model outperforms the 175-billion-parameter GPT-3 "on most benchmarks". However, LLaMA has not undergone instruction tuning, so its generation quality is poor.

To improve the model, researchers at Stanford performed instruction fine-tuning and trained a new 7-billion-parameter model called Alpaca (based on LLaMA 7B). Specifically, they had OpenAI's text-davinci-003 model generate 52K instruction-following samples via self-instruct and used them as Alpaca's training data. Experiments show that Alpaca behaves similarly to text-davinci-003 in many cases. In other words, the lightweight Alpaca, with only 7B parameters, performs comparably to very large language models such as GPT-3.5.

For ordinary researchers this is a practical and cheap way to fine-tune, but it still requires substantial compute (the authors report fine-tuning for 3 hours on eight 80GB A100s). Moreover, Alpaca's seed tasks and collected data are all in English, so the trained model is not optimized for Chinese.

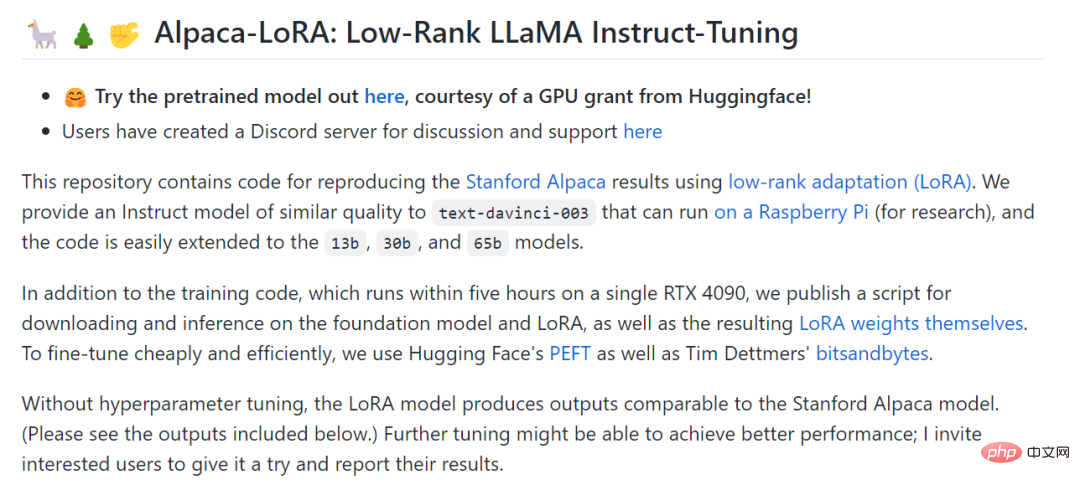

To further reduce the cost of fine-tuning, another Stanford researcher, Eric J. Wang, used LoRA (low-rank adaptation) to reproduce Alpaca's results. Specifically, he trained a model comparable to Alpaca in only 5 hours on a single RTX 4090, bringing the compute requirements for such models down to consumer level. The model can even run on a Raspberry Pi (for research purposes).

How LoRA works: LoRA adds a bypass next to the original pretrained language model (PLM) that first projects the input down to a low dimension and then back up, to simulate the so-called intrinsic rank. During training, the PLM's parameters are frozen and only the down-projection matrix A and the up-projection matrix B are trained. The model's input and output dimensions stay the same, and at output time BA is added to the PLM's parameters. A is initialized from a random Gaussian distribution and B with zeros, so the bypass matrix BA is still a zero matrix at the start of training (quoted from: https://finisky.github.io/lora/). LoRA's biggest advantage is that it is faster and uses less memory, so it can run on consumer-grade hardware.
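To make the A/B description concrete, here is a minimal pure-Python sketch of a LoRA-wrapped linear layer, assuming a frozen weight matrix W of shape (d_out, d_in). The class name LoRALinear, the 0.02 standard deviation for A, and the alpha/r scaling factor (taken from the LoRA paper, not from the text above) are illustrative assumptions; real implementations such as PEFT work on framework tensors rather than Python lists.

```python
import random


def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


class LoRALinear:
    """Frozen linear layer W plus a trainable low-rank bypass B @ A.

    W: d_out x d_in (pretrained, frozen)
    A: r x d_in     (down-projection, Gaussian init)
    B: d_out x r    (up-projection, zero init)
    """

    def __init__(self, W, r, alpha=None, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W), len(W[0])
        self.W = W
        self.A = [[rng.gauss(0.0, 0.02) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        # alpha / r scaling (assumption, from the LoRA paper); alpha defaults to r, i.e. scale 1.0
        self.scale = (r if alpha is None else alpha) / r

    def forward(self, x):
        base = matvec(self.W, x)    # frozen path: W x
        down = matvec(self.A, x)    # project down to r dims: A x
        up = matvec(self.B, down)   # project back up to d_out dims: B (A x)
        return [b + self.scale * u for b, u in zip(base, up)]

    def trainable_params(self):
        """Only A and B are trained; W stays frozen."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


# Demo: a 64 x 64 identity "pretrained" layer wrapped with a rank-2 bypass.
d = 64
W = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]
layer = LoRALinear(W, r=2)
x = [float(i) for i in range(d)]
assert layer.forward(x) == x  # B is zero at init, so the output equals W x
print(layer.trainable_params(), "trainable vs", d * d, "in W")
```

Because B starts at zero, the wrapped layer initially reproduces the pretrained layer exactly, and with r = 2 on a 64 x 64 layer only 256 values are trainable instead of 4,096.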

Alpaca-LoRA project posted by Eric J. Wang.

Project address: https://github.com/tloen/alpaca-lora

For researchers who want to train their own ChatGPT-like models (including Chinese versions) but lack top-tier computing resources, this is welcome news. Since Alpaca-LoRA was released, tutorials and training results built around the project have kept appearing, and this article introduces several of them.

How to use Alpaca-LoRA to fine-tune LLaMA

In the Alpaca-LoRA project, the author explains that to fine-tune cheaply and efficiently, they used Hugging Face's PEFT. PEFT is a library (LoRA is one of the techniques it supports) that lets you take various Transformer-based language models and fine-tune them with LoRA. The benefit is that you can fine-tune a model cheaply and efficiently on modest hardware, with smaller (and possibly composable) outputs.
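To see why the outputs are small, count the parameters: for a weight matrix of shape d_out x d_in, LoRA trains only A (r x d_in) and B (d_out x r). The numbers below are assumptions for illustration: LLaMA-7B's hidden size of 4096 and 32 decoder layers, with rank r = 8 applied to the query and value projections (q_proj, v_proj), which we believe matches Alpaca-LoRA's defaults but should be checked against finetune.py.

```python
def lora_param_count(d_in, d_out, r):
    """Trainable parameters LoRA adds to one d_out x d_in weight: A (r x d_in) + B (d_out x r)."""
    return r * d_in + d_out * r


# Assumed LLaMA-7B shapes.
HIDDEN = 4096
LAYERS = 32
# Assumed LoRA settings: rank 8 on the query and value projections.
RANK = 8
TARGET_MODULES = ("q_proj", "v_proj")

per_module = lora_param_count(HIDDEN, HIDDEN, RANK)
full_per_module = HIDDEN * HIDDEN
total = per_module * len(TARGET_MODULES) * LAYERS

print(f"per module: {per_module} LoRA params vs {full_per_module} frozen")
print(f"total trainable: {total} (~{total * 2 / 2**20:.1f} MiB at 2 bytes/param)")
```

Under these assumptions the adapter has about 4.2 million trainable parameters, roughly 8 MiB at 2 bytes per parameter, compared with the billions of frozen parameters in the base model.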

In a recent blog, several researchers introduced how to use Alpaca-LoRA to fine-tune LLaMA.

Before using Alpaca-LoRA, a few prerequisites must be met. The first is the GPU: thanks to LoRA, fine-tuning can now be done on lower-end GPUs such as the NVIDIA T4, or on consumer GPUs such as the RTX 4090. You also need to request access to the LLaMA weights, since they are not publicly released.
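A rough back-of-the-envelope sketch of why such GPUs can be enough: LoRA freezes the base model, so much of the memory goes to simply holding its weights, and that depends on numeric precision. The sketch assumes about 6.7 billion parameters for LLaMA-7B and counts weights only (no activations, adapter optimizer state, or framework overhead), so treat the numbers as lower bounds.

```python
# Approximate parameter count of LLaMA-7B (assumption for illustration).
PARAMS_7B = 6.7e9

# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gib(n_params, dtype):
    """GiB needed just to hold the weights (no activations, optimizer state, or overhead)."""
    return n_params * BYTES_PER_PARAM[dtype] / 2**30


for dtype in ("fp32", "fp16", "int8"):
    print(f"{dtype}: ~{weight_memory_gib(PARAMS_7B, dtype):.1f} GiB for the weights alone")
```

At 2 bytes per parameter the weights alone come to roughly 12.5 GiB, and about 6.2 GiB at 1 byte, which is why reduced-precision loading is often paired with LoRA on 16 GB and 24 GB cards.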

With the prerequisites in place, the next step is to use Alpaca-LoRA. First, clone the Alpaca-LoRA repository:

    git clone https://github.com/daanelson/alpaca-lora
    cd alpaca-lora

Second, obtain the LLaMA weights and store the downloaded files in a folder named unconverted-weights with the following layout:

    unconverted-weights
    ├── 7B
    │   ├── checklist.chk
    │   ├── consolidated.00.pth
    │   └── params.json
    ├── tokenizer.model
    └── tokenizer_checklist.chk
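Before converting, it can help to confirm that the download matches this layout. The helper below is a hypothetical pre-flight check (not part of the Alpaca-LoRA repository); its file list simply mirrors the 7B tree above, so extend it for other model sizes.

```python
from pathlib import Path

# Expected layout of the raw LLaMA download (7B only), mirroring the tree above.
EXPECTED_FILES = [
    "7B/checklist.chk",
    "7B/consolidated.00.pth",
    "7B/params.json",
    "tokenizer.model",
    "tokenizer_checklist.chk",
]


def missing_weight_files(root="unconverted-weights"):
    """Return the expected files (relative paths) that are not present under root."""
    base = Path(root)
    return [rel for rel in EXPECTED_FILES if not (base / rel).is_file()]


if __name__ == "__main__":
    missing = missing_weight_files()
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("unconverted-weights looks complete")
```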

Once the weights are in place, use the following command to convert the PyTorch checkpoint into a format compatible with Hugging Face Transformers:

    cog run python -m transformers.models.llama.convert_llama_weights_to_hf --input_dir unconverted-weights --model_size 7B --output_dir weights

The final directory structure should be like this:

    weights
    ├── llama-7b
    └── tokenizer

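After the two steps above, the third step is to install Cog (the conversion command in step two already runs through Cog, so you may need to install it before that step):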

    sudo curl -o /usr/local/bin/cog -L "https://github.com/replicate/cog/releases/latest/download/cog_$(uname -s)_$(uname -m)"
    sudo chmod +x /usr/local/bin/cog

The fourth step is to fine-tune the model. By default, the fine-tuning script is configured for a modest GPU; if you have a more powerful one, you can increase MICRO_BATCH_SIZE in finetune.py to 32 or 64. If you have your own instruction-tuning dataset, edit DATA_PATH in finetune.py to point to it, making sure the data uses the same format as alpaca_data_cleaned.json. Then run the fine-tuning script:
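A quick way to catch format problems before a long training run is to validate your dataset file first. The sketch below assumes alpaca_data_cleaned.json follows the Stanford Alpaca layout: a JSON list of objects, each with string fields instruction, input (possibly empty), and output. The function names are hypothetical. When saving Chinese data, passing ensure_ascii=False to json.dump keeps the characters readable instead of escaping them as \uXXXX sequences.

```python
import json

# Fields every record is assumed to carry (Stanford Alpaca layout).
REQUIRED_KEYS = ("instruction", "input", "output")


def validate_alpaca_records(records):
    """Return a list of human-readable problems; an empty list means the data looks OK."""
    if not isinstance(records, list):
        return ["top-level JSON value must be a list"]
    problems = []
    for i, rec in enumerate(records):
        if not isinstance(rec, dict):
            problems.append(f"record {i}: not a JSON object")
            continue
        for key in REQUIRED_KEYS:
            if not isinstance(rec.get(key), str):
                problems.append(f"record {i}: '{key}' missing or not a string")
    return problems


def save_records(records, path):
    """Write records as UTF-8 JSON; ensure_ascii=False keeps Chinese text unescaped."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(records, f, ensure_ascii=False, indent=2)


def load_and_validate(path):
    """Load a dataset file and validate it."""
    with open(path, encoding="utf-8") as f:
        return validate_alpaca_records(json.load(f))


# A one-record example in the assumed format.
# Instruction: "What is an insurance deductible?"
# Output: "A deductible is the part of a loss the insured bears themselves when a claim is paid."
sample = [
    {
        "instruction": "什么是保险免赔额？",
        "input": "",
        "output": "免赔额是指在保险理赔时由被保险人自行承担的损失部分。",
    }
]
print(validate_alpaca_records(sample))
```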

    cog run python finetune.py

The fine-tuning process took 3.5 hours on a 40GB A100 GPU and more time on less powerful GPUs.
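On the MICRO_BATCH_SIZE setting from step four: assuming finetune.py keeps a fixed effective batch size and derives the number of gradient-accumulation steps from it (we believe the original script uses BATCH_SIZE = 128 with GRADIENT_ACCUMULATION_STEPS = BATCH_SIZE // MICRO_BATCH_SIZE, but check your copy), raising MICRO_BATCH_SIZE does not change how many examples each optimizer update sees. It only processes more examples per forward/backward pass, trading GPU memory for speed. A small sketch of that arithmetic:

```python
def grad_accum_steps(batch_size, micro_batch_size):
    """Micro-batches to accumulate so each optimizer update sees batch_size examples."""
    if batch_size % micro_batch_size != 0:
        raise ValueError("batch_size should be a multiple of micro_batch_size")
    return batch_size // micro_batch_size


BATCH_SIZE = 128  # assumed effective batch size

for micro in (4, 32, 64):
    steps = grad_accum_steps(BATCH_SIZE, micro)
    print(f"MICRO_BATCH_SIZE={micro}: {steps} accumulation steps, {micro * steps} examples per update")
```

With 4 examples per micro-batch, 32 micro-batches are accumulated per update; at 64, only 2 are needed.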

The last step is to run the model with Cog:

    $ cog predict -i prompt="Tell me something about alpacas."
    Alpacas are domesticated animals from South America. They are closely related to llamas and guanacos and have a long, dense, woolly fleece that is used to make textiles. They are herd animals and live in small groups in the Andes mountains. They have a wide variety of sounds, including whistles, snorts, and barks. They are intelligent and social animals and can be trained to perform certain tasks.
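The cog predict call above passes the prompt as plain text. Alpaca-style models are trained on records wrapped in a fixed prompt template, and inference code typically wraps the user's instruction the same way. The sketch below reproduces the Stanford Alpaca template as we understand it; the function name generate_prompt is illustrative, and the exact wording used by this fork's prediction code may differ, so check the repository before relying on it.

```python
def generate_prompt(instruction, input_text=""):
    """Build a Stanford Alpaca-style prompt (template wording is an assumption)."""
    if input_text:
        return (
            "Below is an instruction that describes a task, paired with an input that provides "
            "further context. Write a response that appropriately completes the request.\n\n"
            f"### Instruction:\n{instruction}\n\n"
            f"### Input:\n{input_text}\n\n"
            "### Response:\n"
        )
    return (
        "Below is an instruction that describes a task. Write a response that "
        "appropriately completes the request.\n\n"
        f"### Instruction:\n{instruction}\n\n"
        "### Response:\n"
    )


prompt = generate_prompt("Tell me something about alpacas.")
print(prompt)
```

During fine-tuning, each record's instruction and input fill this template and its output is appended after "### Response:", so the model learns to continue the prompt with an answer.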

The tutorial's authors say that once you have completed the steps above, you can experiment further, for example:

- Bring your own dataset and fine-tune your own LoRA, for example fine-tuning LLaMA to talk like a cartoon character. See: https://replicate.com/blog/fine-tune-llama-to-speak-like-homer-simpson

- Deploy the model to a cloud platform;

- Combine it with other LoRAs, such as Stable Diffusion LoRAs, and apply them to the image domain;

- Use the Alpaca dataset (or other datasets) to fine-tune the larger LLaMA models and see how they perform. This should be possible with PEFT and LoRA, although it will require a larger GPU.

Alpaca-LoRA derivative project

Although Alpaca performs comparably to GPT-3.5, its seed tasks and collected data are all in English, so the trained model handles Chinese poorly. To improve dialogue models in Chinese, let's look at some of the better projects.

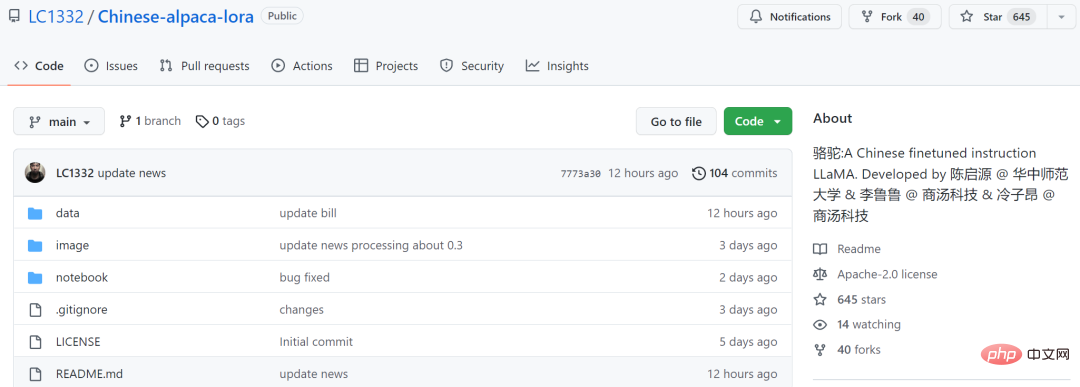

The first is Luotuo (骆驼, "camel"), an open-source Chinese language model from three individual developers at Central China Normal University and other institutions. The project builds on LLaMA, Stanford Alpaca, Alpaca LoRA, and Japanese-Alpaca-LoRA, and both training and deployment can be done on a single GPU. Interestingly, they named the model "camel" because both the llama and the alpaca belong to the order Artiodactyla, family Camelidae, so the name is a natural fit.

This model is based on Meta’s open source LLaMA and was trained on Chinese with reference to the two projects Alpaca and Alpaca-LoRA.

Project address: https://github.com/LC1332/Chinese-alpaca-lora

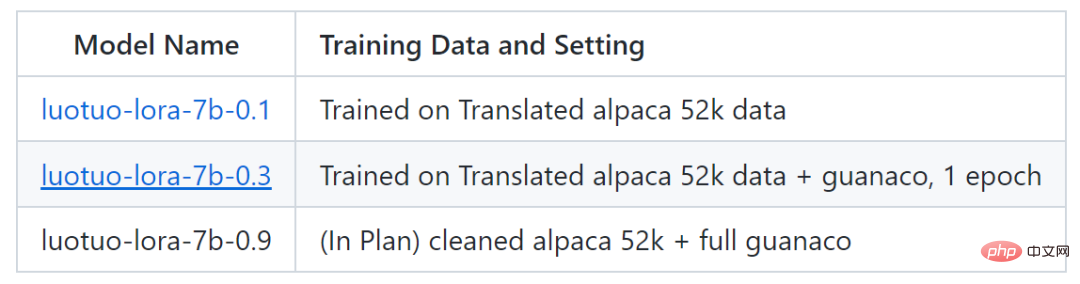

Currently, the project has released two models, luotuo-lora-7b-0.1 and luotuo-lora-7b-0.3, and another model is planned.

The following are sample outputs.

There is still a gap between luotuo-lora-7b-0.1 (0.1) and luotuo-lora-7b-0.3 (0.3): when a user asked for the address of Central China Normal University, 0.1 answered incorrectly.

Beyond simple conversations, some people have also adapted the model to the insurance domain. According to one Twitter user, he used the Alpaca-LoRA project to fine-tune on Chinese insurance Q&A data, with good results.

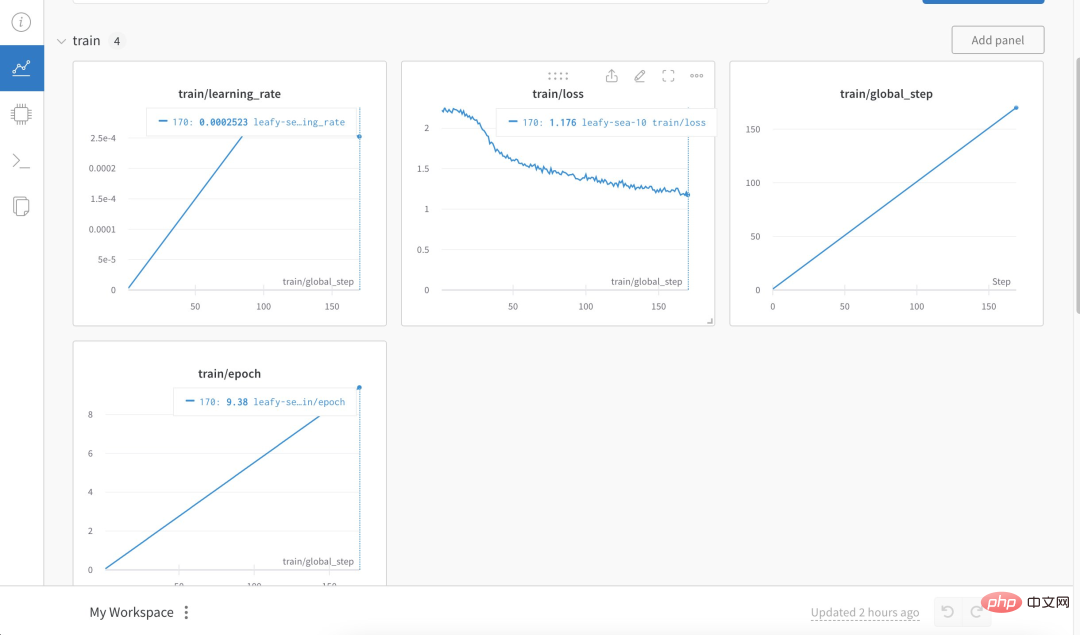

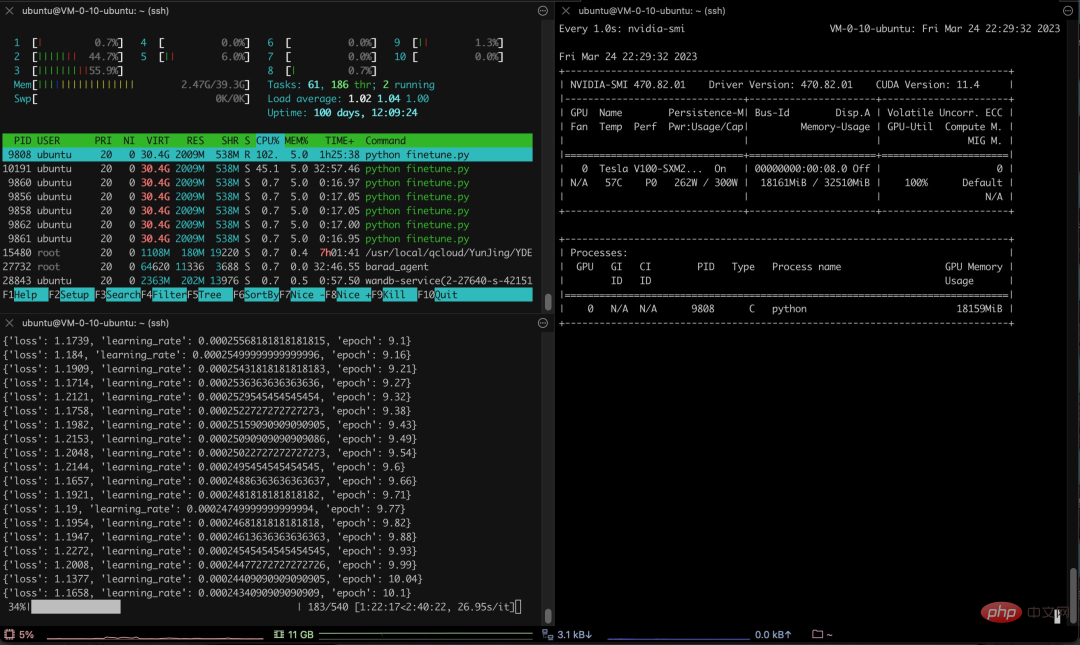

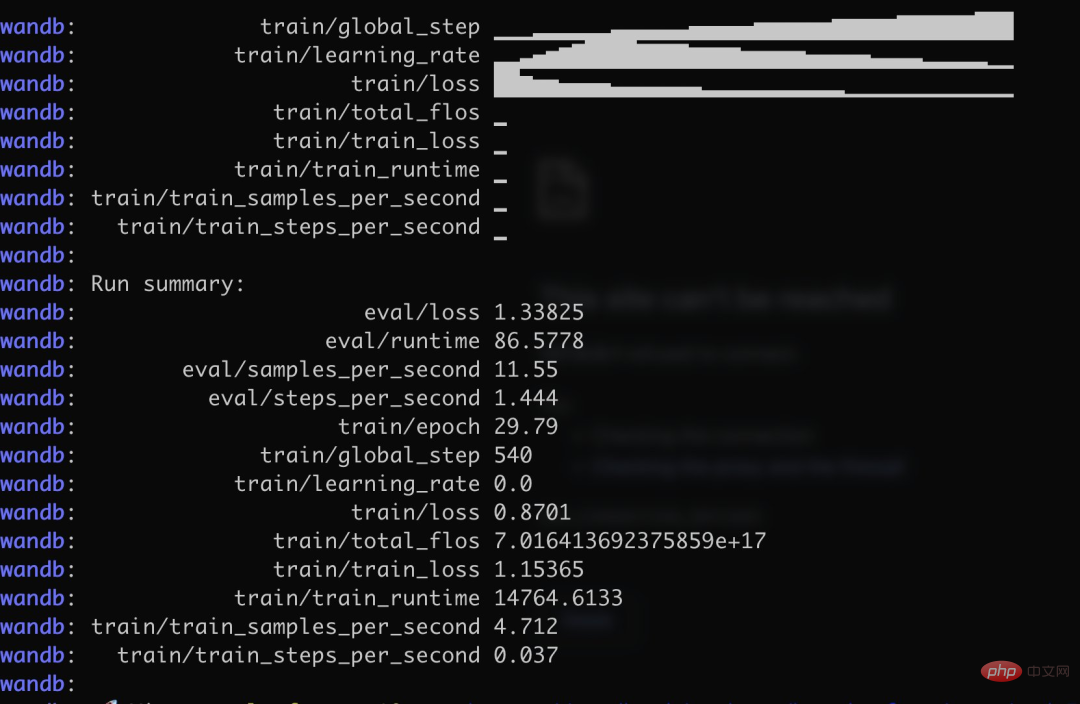

Specifically, the author trained a Chinese version of Alpaca-LoRA on a corpus of more than 3K Chinese insurance Q&A pairs. He used LoRA to fine-tune the Alpaca 7B model; training took 240 minutes and reached a final loss of 0.87.

Source: https://twitter.com/nash_su/status/1639273900222586882

The following is the training process and results:
