Costs less than $100! UC Berkeley open-sources another ChatGPT-like model, 'Koala': large amounts of data are useless, high quality is king


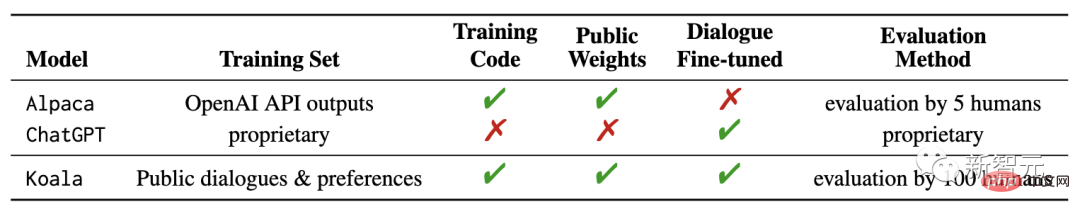

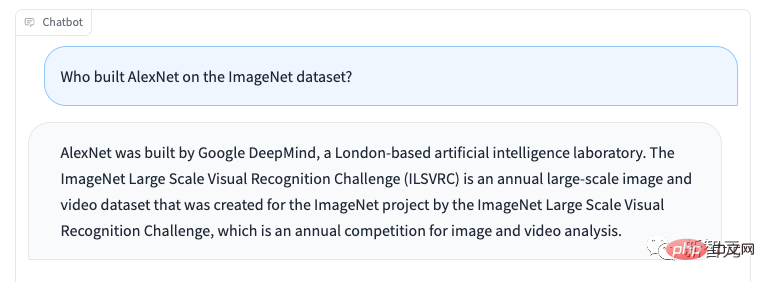

Since Meta released LLaMA, ChatGPT-like models have sprung up across academia. First, Stanford proposed the 7-billion-parameter Alpaca; then UC Berkeley teamed up with CMU, Stanford, UCSD, and MBZUAI to release the 13-billion-parameter Vicuna, which achieves capabilities comparable to ChatGPT and Bard in more than 90% of cases. Recently, Berkeley released another model, "Koala". Unlike earlier models that relied on OpenAI's GPT data for instruction fine-tuning, Koala is trained on high-quality data gathered from the web.

Blog link: https://bair.berkeley.edu/blog/2023/04/03/koala/

Data preprocessing code: https://github.com/young-geng/koala_data_pipeline

Evaluation test set: https://github.com/arnav-gudibande/koala-test-set

Model download: https://drive.google.com/drive/folders/10f7wrlAFoPIy-TECHsx9DKIvbQYunCfl

In the published blog post, the researchers describe the model's dataset curation and training process, and present the results of a user study comparing the model with ChatGPT and Stanford's Alpaca. The results show that Koala can effectively answer a variety of user queries, generating answers that are often preferred over Alpaca's and that are as good as ChatGPT's at least half of the time. The researchers hope these results will further the discussion about the relative performance of large closed-source models versus small public models. In particular, the results suggest that a model small enough to run locally can capture much of the performance of its larger counterparts if its training data is collected carefully.


This may mean that the community should invest more effort in curating high-quality datasets, which may do more to build safer, more practical, and more capable models than simply increasing the scale of existing systems. It should be emphasized that Koala is only a research prototype: while the researchers hope its release will provide a valuable community resource, it still has significant shortcomings in content safety and reliability and should not be used outside of research.

Koala System Overview

Since the release of large language models, virtual assistants and chatbots have become more and more powerful. They can not only chat but also write code, poetry, and stories, and can seem almost omnipotent. However, the most powerful language models usually require massive computing resources to train, as well as large-scale proprietary datasets, so ordinary people essentially cannot train such models themselves. In other words, language models could in the future be controlled by a few powerful organizations: users and researchers would pay to interact with a model and would have no direct access to its internals to modify or improve it. On the other hand, in recent months some organizations have released relatively capable free or partially open-source models, such as Meta's LLaMA. These models cannot yet match closed models such as ChatGPT, but their capabilities have been improving rapidly with the help of the community.

The question now facing the open-source community: will the future see more and more consolidation around a small number of closed-source models, or a growing number of open models built on smaller architectures? And can a smaller open model approach the performance of a larger closed-source one?

While open models are unlikely to match the scale of closed-source models, carefully selected training data may bring them close to the performance of ChatGPT.

In fact, the results of Stanford's Alpaca model, which fine-tunes LLaMA on data generated by OpenAI's GPT model, have already shown that the right data can significantly improve smaller open-source models. This was also the Berkeley researchers' original motivation for developing and releasing Koala: to provide another experimental data point for this discussion.

Koala is fine-tuned on freely available interaction data gathered from the web, with special attention to data that includes interactions with high-performing closed-source models such as ChatGPT.

The researchers fine-tuned the base LLaMA model on dialogue data collected from the web and from public datasets, including high-quality responses to user queries from other large language models, as well as question-answering datasets and human feedback datasets. The resulting Koala-13B model performs competitively with existing models.

The findings suggest that learning from high-quality datasets can mitigate some of the shortcomings of small models, which may even rival large closed-source models in the future. This means the community should invest more effort in curating high-quality datasets, which may do more to build safer, more practical, and more capable models than simply increasing the size of existing models.

By encouraging researchers to try the Koala system demo, the researchers hope to uncover unexpected features or flaws that will help evaluate such models in the future.

Datasets and Training

A major obstacle in building dialogue models is curating training data. Prominent chat models, including ChatGPT, Bard, Bing Chat, and Claude, all use proprietary datasets built with large amounts of human annotation.

To build Koala, the researchers assembled the training set by collecting dialogue data from the web and from public datasets. Part of this data consists of dialogues with large language models such as ChatGPT that users have posted online.

Instead of crawling as much web data as possible to maximize data volume, the researchers focused on collecting a small, high-quality dataset, drawing on public datasets for question answering, human feedback (responses rated both positively and negatively), and dialogues with existing language models.

ChatGPT Distilled Data

Public user-shared dialogues with ChatGPT (ShareGPT): About 60,000 dialogues shared by users on ShareGPT were collected using the public API.

Website link: https://sharegpt.com/

To ensure data quality, the researchers removed duplicate user queries and deleted all non-English conversations, leaving approximately 30,000 samples.
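The cleaning step described above can be sketched roughly as follows. This is a minimal illustration, not the researchers' actual pipeline: the conversation layout (a list of `{"role", "text"}` turns), the function names, and the ASCII-ratio heuristic standing in for a real language detector are all assumptions made for the example.

```python
from typing import Dict, List

Conversation = List[Dict[str, str]]  # hypothetical layout: [{"role": ..., "text": ...}, ...]


def first_user_query(conversation: Conversation) -> str:
    """Return the first user turn, lowercased and whitespace-normalized."""
    for turn in conversation:
        if turn["role"] == "user":
            return " ".join(turn["text"].lower().split())
    return ""


def looks_english(text: str, min_ascii_ratio: float = 0.9) -> bool:
    """Crude stand-in for a language detector: mostly-ASCII text passes."""
    if not text:
        return False
    ascii_chars = sum(1 for ch in text if ch.isascii())
    return ascii_chars / len(text) >= min_ascii_ratio


def clean_conversations(conversations: List[Conversation]) -> List[Conversation]:
    """Drop conversations with a duplicate first user query or non-English text."""
    seen = set()
    kept = []
    for conv in conversations:
        key = first_user_query(conv)
        if not key or key in seen:
            continue  # no user turn, or a query we have already kept
        full_text = " ".join(turn["text"] for turn in conv)
        if not looks_english(full_text):
            continue  # assumed heuristic; a real pipeline would use a language-ID model
        seen.add(key)
        kept.append(conv)
    return kept
```

Keying the duplicate check on the normalized first user query is one possible reading of "duplicate user queries"; other definitions (for example, hashing every user turn) would fit the description equally well.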

Human ChatGPT Comparison Corpus (HC3): Uses the human and ChatGPT responses from the HC3 English dataset, which contains about 60,000 human answers and 27,000 ChatGPT answers to about 24,000 questions, for a total of about 87,000 question-answer samples.

Open Source Data

Open Instruction Generalist (OIG): Uses a manually selected subset of components from the LAION-curated Open Instruction Generalist dataset, including the grade-school math instructions, poetry-to-songs, and plot-screenplay-books-dialogue datasets, for a total of about 30,000 samples.

Stanford Alpaca: Includes the dataset used to train the Stanford Alpaca model.

This data set contains approximately 52,000 samples, generated by OpenAI's text-davinci-003 following the self-instruct process.

It is worth noting that the HC3, OIG, and Alpaca datasets are single-turn question answering, while the ShareGPT dataset consists of multi-turn dialogues.

Anthropic HH: Contains human ratings of the harmfulness and helpfulness of the model output.

The dataset contains approximately 160,000 human-rated examples, where each example consists of a pair of chatbot responses, one of which is preferred by humans. This dataset provides the model with both capabilities and additional safety protections.

OpenAI WebGPT: This dataset includes a total of about 20,000 comparisons, where each example includes a question, a pair of model answers, and metadata. The answers are scored by humans according to their own preferences.

OpenAI Summarization: Contains approximately 93,000 examples of human feedback on model-generated summaries, in which human evaluators chose the better of two candidate summaries.

Some of the open-source datasets provide two responses per query, corresponding to a good or bad rating (Anthropic HH, WebGPT, OpenAI Summarization).

Previous research has demonstrated that conditioning language models on human preference markers (helpful/unhelpful) improves performance. The researchers therefore condition the model on a positive or a negative marker according to the preference label, and use the positive marker for datasets that have no human feedback. During evaluation, prompts are written to include the positive marker.
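The preference conditioning described above can be illustrated with a small sketch. The marker strings, the `USER:`/`ASSISTANT:` template, and the function names below are hypothetical choices made for this example; the article does not specify Koala's actual prompt format.

```python
from typing import Optional

# Hypothetical marker texts; the real wording used for Koala is not given in the article.
POSITIVE_MARKER = "a helpful answer"
NEGATIVE_MARKER = "an unhelpful answer"


def build_training_text(query: str, response: str, preferred: Optional[bool] = None) -> str:
    """Format one training example, conditioned on a preference marker.

    preferred=True  -> human-preferred response (positive marker)
    preferred=False -> rejected response (negative marker)
    preferred=None  -> dataset without human feedback (positive marker by default)
    """
    marker = NEGATIVE_MARKER if preferred is False else POSITIVE_MARKER
    return f"USER: {query}\nGive {marker}.\nASSISTANT: {response}"


def build_eval_prompt(query: str) -> str:
    """At evaluation time the prompt always carries the positive marker."""
    return f"USER: {query}\nGive {POSITIVE_MARKER}.\nASSISTANT:"
```

With this layout, a comparison pair from a dataset such as Anthropic HH would yield two training texts, one per response, differing only in the marker and the response itself.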

Koala is implemented with JAX/Flax in EasyLM, an open-source framework for pre-training, fine-tuning, serving, and evaluating large language models. Training ran on a single Nvidia DGX server with 8 A100 GPUs and took 6 hours to complete 2 epochs.

On a public cloud computing platform, the expected training cost is no more than $100.
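As a back-of-the-envelope check on the cost claim, the 8 GPUs and 6 hours quoted above give the total GPU-hours, and the $100 ceiling then implies a maximum hourly price per GPU. The example price passed to `training_cost` below is purely hypothetical; actual cloud prices vary by provider and instance type.

```python
NUM_GPUS = 8          # A100 GPUs, from the article
TRAINING_HOURS = 6    # wall-clock hours for 2 epochs, from the article
BUDGET_USD = 100      # cost ceiling quoted in the article

gpu_hours = NUM_GPUS * TRAINING_HOURS        # total GPU-hours consumed
break_even_rate = BUDGET_USD / gpu_hours     # max USD per GPU-hour to stay within budget


def training_cost(price_per_gpu_hour: float) -> float:
    """Total cost in USD at a given (assumed) price per GPU-hour."""
    return gpu_hours * price_per_gpu_hour


print(f"{gpu_hours} GPU-hours, break-even at ${break_even_rate:.2f} per GPU-hour")
print(f"Cost at a hypothetical $1.50 per GPU-hour: ${training_cost(1.50):.2f}")
```

In other words, the run stays under $100 as long as each A100 costs no more than about $2.08 per hour.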

Initial evaluation

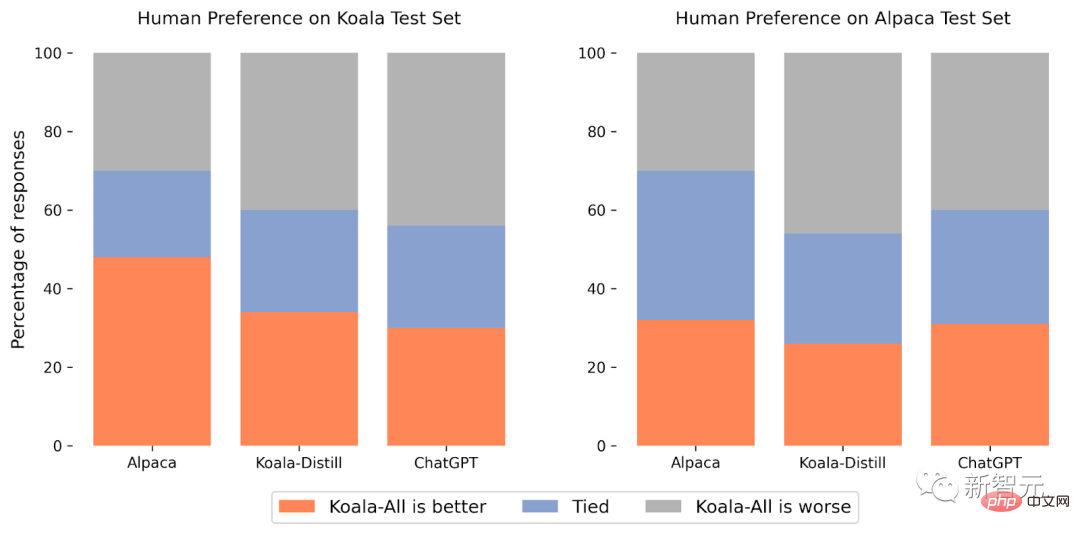

In the experiments, the researchers evaluated two models: Koala-Distill, which uses only the distilled data, and Koala-All, which uses all of the data, including both the distilled and the open-source data.

The purpose of the experiments is to compare the performance of these models and to assess the impact of the distilled and open-source datasets on final model performance. To that end, the researchers ran a human evaluation comparing Koala-All with Koala-Distill, Alpaca, and ChatGPT.

The experiments use two test sets: Stanford's Alpaca Test Set, which consists of 180 test queries, and the Koala Test Set.

The Alpaca test set consists of user prompts sampled from the self-instruct dataset and represents in-distribution data for the Alpaca model. To provide a more realistic evaluation protocol, the Koala test set contains 180 real user queries that were posted online. These queries span different topics, are usually conversational in style, and are more representative of real-world use cases for chat-based systems. To reduce possible test-set leakage, queries with a BLEU score greater than 20% against any example in the training set were filtered out.
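A leakage filter of this kind can be sketched as follows, assuming that a test query is dropped when its BLEU score against any training example exceeds 20%. The BLEU function here is a simplified, self-contained sentence-level variant (whitespace tokens, up to 4-grams, a small constant replacing zero overlaps) written for illustration; it is not necessarily the implementation the researchers used, and the function names are hypothetical.

```python
import math
from collections import Counter
from typing import List


def ngram_counts(tokens: List[str], n: int) -> Counter:
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: str, reference: str, max_n: int = 4) -> float:
    """Simplified sentence-level BLEU in [0, 1]."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand or not ref:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        total = sum(cand_counts.values())
        if total == 0:
            break  # candidate is shorter than n tokens
        ref_counts = ngram_counts(ref, n)
        overlap = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
        # Replace zero overlap with a small constant so the log is defined.
        log_precisions.append(math.log(max(overlap, 0.1) / total))
    # Brevity penalty for candidates shorter than the reference.
    brevity = 1.0 if len(cand) >= len(ref) else math.exp(1 - len(ref) / len(cand))
    return brevity * math.exp(sum(log_precisions) / len(log_precisions))


def filter_leaked_queries(test_queries: List[str], training_texts: List[str],
                          threshold: float = 0.20) -> List[str]:
    """Keep only test queries whose BLEU against every training text is <= threshold."""
    return [
        query for query in test_queries
        if all(sentence_bleu(query, text) <= threshold for text in training_texts)
    ]
```

This brute-force comparison is quadratic in the number of queries and training texts, which is acceptable for a test set of 180 queries but would need indexing or sampling at larger scale.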

In addition, because responses to such prompts could not be annotated reliably, the researchers removed non-English and coding-related prompts. They then ran a blind test with approximately 100 annotators on Amazon's crowdsourcing platform: the scoring interface showed each rater an input prompt and the outputs of two models, and the rater was asked to judge which output was better using criteria related to response quality and correctness (ties were allowed).
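Results from a pairwise blind test of this kind are typically summarized as win, tie, and loss rates. The sketch below shows one way to tally them; the label names and the toy judgments are made up for illustration and are not data from the study.

```python
from collections import Counter
from typing import Dict, List


def preference_rates(judgments: List[str]) -> Dict[str, float]:
    """Turn per-example judgments ('a', 'b', or 'tie') into rates."""
    if not judgments:
        raise ValueError("need at least one judgment")
    counts = Counter(judgments)
    total = len(judgments)
    return {label: counts[label] / total for label in ("a", "b", "tie")}


# Toy example (hypothetical labels): model A wins twice, ties once, loses once.
rates = preference_rates(["a", "a", "tie", "b"])
win_or_tie = rates["a"] + rates["tie"]  # share of cases where A is at least as good as B
```

Reporting the win rate and the win-or-tie rate separately matters when ties are allowed, since the two numbers can differ substantially.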

In the Alpaca test set, Koala-All performs on par with Alpaca.

In the Koala test set (containing real user queries), Koala-All is rated better than Alpaca on nearly half of the samples, and is better than or tied with Alpaca in 70% of cases. Part of the reason is surely that the Koala training set and test set are more similar to each other, so this result is not particularly surprising.

But to the extent that these prompts resemble the downstream use cases of such models, this suggests that Koala would perform better in assistant-like applications. It also indicates that using examples of interactions with language models posted on the web is an effective strategy for giving these models strong instruction-following capabilities.

What is more surprising is that the researchers found that training on open-source data in addition to the distilled data (Koala-All) performs slightly worse than training on the ChatGPT distilled data alone (Koala-Distill).

While the difference may not be significant, this result suggests that the quality of ChatGPT conversations is so high that even including twice as much open-source data does not yield a significant improvement.

The initial hypothesis was that Koala-All should perform better, so Koala-All was used as the main model in all evaluations. The takeaway is that effective instruction-following and assistant models can be distilled from large language models, as long as the prompts are representative of the diversity of users at test time.

So the key to building strong dialogue models may lie more in curating high-quality dialogue data that is diverse in its user queries, rather than in simply reformatting existing datasets into questions and answers.

Limitations and Security

Like other language models, Koala also has limitations that, if misused, may cause harm to users.

The researchers observed that Koala can hallucinate and give non-factual responses in a very confident tone, possibly as a result of the dialogue fine-tuning. In other words, the smaller model inherits the confident style of the larger language model but not the same level of factuality, which requires focused improvement in the future.

When misused, Koala's hallucinated replies can facilitate the spread of misinformation, spam, and other content.

Koala can hallucinate inaccurate information in a confident and convincing tone. Beyond hallucinations, Koala shares the shortcomings of other chatbot language models. These include:

- Biases and stereotypes: The model inherits biases from its training dialogue data, including stereotypes, discrimination, and other harms.

- Lack of common sense: Although large language models can generate seemingly coherent and grammatically correct text, they often lack common-sense knowledge that people take for granted, which can lead to absurd or inappropriate responses.

- Limited understanding: Large language models may struggle to understand the context and nuances of a conversation, and may have difficulty recognizing sarcasm or irony, which can lead to misunderstandings.

To address Koala's safety concerns, the researchers included adversarial prompts from the ShareGPT and Anthropic HH datasets in the training data to make the model more robust and harmless.

To further reduce potential abuse, OpenAI’s content moderation filters were also deployed in the demo to flag and remove unsafe content.

Future work

The researchers hope that the Koala model can become a useful platform for future academic research on large language models: the model is capable enough to demonstrate many of the capabilities of modern language models, while being small enough to be fine-tuned or used with less compute. Future research directions may include:

- Safety and alignment: Further research on the safety of language models and better alignment with human intent.

- Model bias: Better understanding of bias in large language models, the presence of spurious correlations and quality issues in dialogue datasets, and ways to mitigate such bias.

- Understanding large language models: Because Koala's inference can be run on relatively inexpensive GPUs, the internals of dialogue language models can be better inspected and understood, making black-box language models easier to interpret.

The above is the detailed content of Cost less than $100! UC Berkeley re-opens the ChatGPT-like model 'Koala': large amounts of data are useless, high quality is king. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

How to output a countdown in C language

Apr 04, 2025 am 08:54 AM

How to output a countdown in C language

Apr 04, 2025 am 08:54 AM

How to output a countdown in C? Answer: Use loop statements. Steps: 1. Define the variable n and store the countdown number to output; 2. Use the while loop to continuously print n until n is less than 1; 3. In the loop body, print out the value of n; 4. At the end of the loop, subtract n by 1 to output the next smaller reciprocal.

How to play picture sequences smoothly with CSS animation?

Apr 04, 2025 pm 05:57 PM

How to play picture sequences smoothly with CSS animation?

Apr 04, 2025 pm 05:57 PM

How to achieve the playback of pictures like videos? Many times, we need to implement similar video player functions, but the playback content is a sequence of images. direct...

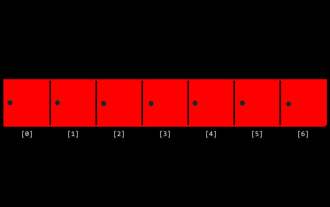

CS-Week 3

Apr 04, 2025 am 06:06 AM

CS-Week 3

Apr 04, 2025 am 06:06 AM

Algorithms are the set of instructions to solve problems, and their execution speed and memory usage vary. In programming, many algorithms are based on data search and sorting. This article will introduce several data retrieval and sorting algorithms. Linear search assumes that there is an array [20,500,10,5,100,1,50] and needs to find the number 50. The linear search algorithm checks each element in the array one by one until the target value is found or the complete array is traversed. The algorithm flowchart is as follows: The pseudo-code for linear search is as follows: Check each element: If the target value is found: Return true Return false C language implementation: #include#includeintmain(void){i

Integers in C: a little history

Apr 04, 2025 am 06:09 AM

Integers in C: a little history

Apr 04, 2025 am 06:09 AM

Integers are the most basic data type in programming and can be regarded as the cornerstone of programming. The job of a programmer is to give these numbers meanings. No matter how complex the software is, it ultimately comes down to integer operations, because the processor only understands integers. To represent negative numbers, we introduced two's complement; to represent decimal numbers, we created scientific notation, so there are floating-point numbers. But in the final analysis, everything is still inseparable from 0 and 1. A brief history of integers In C, int is almost the default type. Although the compiler may issue a warning, in many cases you can still write code like this: main(void){return0;} From a technical point of view, this is equivalent to the following code: intmain(void){return0;}

How to implement nesting effect of text annotations in Quill editor?

Apr 04, 2025 pm 05:21 PM

How to implement nesting effect of text annotations in Quill editor?

Apr 04, 2025 pm 05:21 PM

A solution to implement text annotation nesting in Quill Editor. When using Quill Editor for text annotation, we often need to use the Quill Editor to...

What does the return value of 56 or 65 mean by C language function?

Apr 04, 2025 am 06:15 AM

What does the return value of 56 or 65 mean by C language function?

Apr 04, 2025 am 06:15 AM

When a C function returns 56 or 65, it indicates a specific event. These numerical meanings are defined by the function developer and may indicate success, file not found, or read errors. Replace these "magic numbers" with enumerations or macro definitions can improve readability and maintainability, such as: READ_SUCCESS, FILE_NOT_FOUND, and READ_ERROR.

Zustand asynchronous operation: How to ensure the latest state obtained by useStore?

Apr 04, 2025 pm 02:09 PM

Zustand asynchronous operation: How to ensure the latest state obtained by useStore?

Apr 04, 2025 pm 02:09 PM

Data update problems in zustand asynchronous operations. When using the zustand state management library, you often encounter the problem of data updates that cause asynchronous operations to be untimely. �...

What determines the return value type of C language function?

Apr 04, 2025 am 06:42 AM

What determines the return value type of C language function?

Apr 04, 2025 am 06:42 AM

The return value type of the function is determined by the return type specified when the function is defined. Common types include int, float, char, and void (indicating that no value is returned). The return value type must be consistent with the actual returned value in the function body, otherwise it will cause compiler errors or unpredictable behavior. When returning a pointer, you must make sure that the pointer points to valid memory, otherwise it may cause a segfault. When dealing with return value types, error handling and resource release (such as dynamically allocated memory) need to be considered to write robust and reliable code.