How to use Deep Fusion on iPhone SE 3, iPhone 13, and more

Deep Fusion is an image processing system that automatically works behind the scenes under certain conditions. Apple says the feature produces "images with significantly better texture, detail and reduced noise in low light."

Unlike the iPhone's Night Mode feature or other camera options, there is no user-facing signal that Deep Fusion is being used; it is automatic and invisible (on purpose).

However, there are certain situations where Deep Fusion won't be used: whenever you use the ultra-wide lens, whenever "Photos Capture Outside the Frame" is turned on, and when you take a burst of photos.

How to turn on Deep Fusion on iPhone camera

Please remember that Deep Fusion only works on the iPhone 11, iPhone 12, and iPhone 13 models and the iPhone SE (3rd generation).

- Open the Settings app, swipe down, and tap Camera

- Make sure "Photos Capture Outside the Frame" is turned off

- Make sure you are shooting with the standard wide lens (1x) or a telephoto lens (greater than 1x), not the ultra-wide lens

- Deep Fusion will now work behind the scenes when you take a photo (it is not available for burst photos)

When Apple introduced Deep Fusion, Phil Schiller explained it like this: "So what is it doing? How do we get an image like this? Are you ready for this? This is what it does. It shoots nine images. Before you press the shutter button, it has already taken four short images and four secondary images. When you press the shutter button, it takes one long exposure, and then in just one second, the Neural Engine analyzes the fused combination of the long and short images, picks the best among them, and goes through all 24 million pixels, pixel by pixel, to optimize for detail and low noise, like the texture you see in a sweater. This is the first time a Neural Engine is responsible for generating the output image. This is the mad science of computational photography."
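Apple has not published the Deep Fusion algorithm, and the real system runs a trained model on the Neural Engine. The general idea of choosing "pixel by pixel" across several frames can still be illustrated with a much simpler, classic technique: detail-weighted multi-frame fusion. The Python sketch below is a hypothetical toy, not Apple's method. It assumes several already-aligned grayscale frames of the same scene, stored as nested lists of floats in [0, 1], and for each pixel blends the frames with weights proportional to local detail (measured here by the absolute Laplacian). The names `detail_score` and `fuse` are made up for this example.

```python
from typing import List

# Hypothetical representation: a grayscale image as rows of values in [0.0, 1.0].
Image = List[List[float]]


def detail_score(img: Image, y: int, x: int) -> float:
    """Local detail at (y, x): the absolute 4-neighbour Laplacian.

    Out-of-range neighbours are clamped to the nearest edge pixel.
    """
    h, w = len(img), len(img[0])

    def at(yy: int, xx: int) -> float:
        return img[min(max(yy, 0), h - 1)][min(max(xx, 0), w - 1)]

    neighbours = at(y - 1, x) + at(y + 1, x) + at(y, x - 1) + at(y, x + 1)
    return abs(4 * img[y][x] - neighbours)


def fuse(frames: List[Image], eps: float = 1e-3) -> Image:
    """Blend aligned, same-size frames pixel by pixel (toy illustration only).

    Each frame's weight at a pixel is its local detail plus a small eps, so
    frames that are sharp at that spot dominate, while flat areas fall back
    to a plain average of the frames (which is what reduces noise).
    """
    h, w = len(frames[0]), len(frames[0][0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            weights = [detail_score(f, y, x) + eps for f in frames]
            total = sum(weights)
            out[y][x] = sum(wt * f[y][x] for wt, f in zip(weights, frames)) / total
    return out


if __name__ == "__main__":
    # Hypothetical 3x3 frames: one with a sharp bright dot, one uniform and flat.
    sharp = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
    flat = [[0.2] * 3 for _ in range(3)]
    fused = fuse([sharp, flat])
    print(round(fused[1][1], 3))  # the sharp frame dominates at the dot
```

Because the weights are always positive and normalized, every output pixel is a weighted average of the input pixels at that location. A real pipeline would also need frame alignment, exposure normalization between short and long exposures, and color handling, none of which this sketch attempts.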

The above is the detailed content of How to use Deep Fusion on iPhone SE 3, iPhone 13, and more. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

iPhone 16 Pro and iPhone 16 Pro Max official with new cameras, A18 Pro SoC and larger screens

Sep 10, 2024 am 06:50 AM

iPhone 16 Pro and iPhone 16 Pro Max official with new cameras, A18 Pro SoC and larger screens

Sep 10, 2024 am 06:50 AM

Apple has finally lifted the covers off its new high-end iPhone models. The iPhone 16 Pro and iPhone 16 Pro Max now come with larger screens compared to their last-gen counterparts (6.3-in on the Pro, 6.9-in on Pro Max). They get an enhanced Apple A1

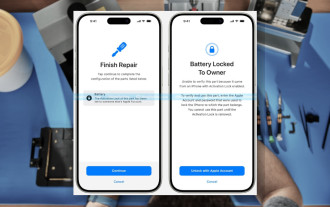

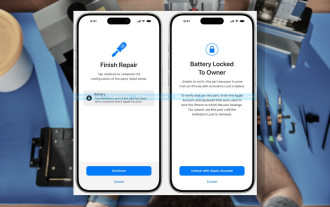

iPhone parts Activation Lock spotted in iOS 18 RC — may be Apple\'s latest blow to right to repair sold under the guise of user protection

Sep 14, 2024 am 06:29 AM

iPhone parts Activation Lock spotted in iOS 18 RC — may be Apple\'s latest blow to right to repair sold under the guise of user protection

Sep 14, 2024 am 06:29 AM

Earlier this year, Apple announced that it would be expanding its Activation Lock feature to iPhone components. This effectively links individual iPhone components, like the battery, display, FaceID assembly, and camera hardware to an iCloud account,

iPhone parts Activation Lock may be Apple\'s latest blow to right to repair sold under the guise of user protection

Sep 13, 2024 pm 06:17 PM

iPhone parts Activation Lock may be Apple\'s latest blow to right to repair sold under the guise of user protection

Sep 13, 2024 pm 06:17 PM

Earlier this year, Apple announced that it would be expanding its Activation Lock feature to iPhone components. This effectively links individual iPhone components, like the battery, display, FaceID assembly, and camera hardware to an iCloud account,

Gate.io trading platform official app download and installation address

Feb 13, 2025 pm 07:33 PM

Gate.io trading platform official app download and installation address

Feb 13, 2025 pm 07:33 PM

This article details the steps to register and download the latest app on the official website of Gate.io. First, the registration process is introduced, including filling in the registration information, verifying the email/mobile phone number, and completing the registration. Secondly, it explains how to download the Gate.io App on iOS devices and Android devices. Finally, security tips are emphasized, such as verifying the authenticity of the official website, enabling two-step verification, and being alert to phishing risks to ensure the safety of user accounts and assets.

LCD iPhone becomes history! Apple will be completely abandoned: the end of an era

Sep 03, 2024 pm 09:38 PM

LCD iPhone becomes history! Apple will be completely abandoned: the end of an era

Sep 03, 2024 pm 09:38 PM

According to media reports citing sources, Apple will completely abandon the use of LCD (liquid crystal display) screens in iPhones, and all iPhones sold next year and beyond will use OLED (organic light-emitting diode) displays. Apple first used OLED displays on iPhoneX in 2017. Since then, Apple has popularized OLED displays in mid-to-high-end models, but the iPhone SE series still uses LCD screens. However, iPhones with LCD screens are about to become history. People familiar with the matter said that Apple has begun ordering OLED displays from BOE and LG for the new generation iPhone SE. Samsung currently holds about half of the iPhone OLED display market, LG

Binance binance official website latest version login portal

Feb 21, 2025 pm 05:42 PM

Binance binance official website latest version login portal

Feb 21, 2025 pm 05:42 PM

To access the latest version of Binance website login portal, just follow these simple steps. Go to the official website and click the "Login" button in the upper right corner. Select your existing login method. If you are a new user, please "Register". Enter your registered mobile number or email and password and complete authentication (such as mobile verification code or Google Authenticator). After successful verification, you can access the latest version of Binance official website login portal.

Download link of Ouyi iOS version installation package

Feb 21, 2025 pm 07:42 PM

Download link of Ouyi iOS version installation package

Feb 21, 2025 pm 07:42 PM

Ouyi is a world-leading cryptocurrency exchange with its official iOS app that provides users with a convenient and secure digital asset management experience. Users can download the Ouyi iOS version installation package for free through the download link provided in this article, and enjoy the following main functions: Convenient trading platform: Users can easily buy and sell hundreds of cryptocurrencies on the Ouyi iOS app, including Bitcoin and Ethereum. and Dogecoin. Safe and reliable storage: Ouyi adopts advanced security technology to provide users with safe and reliable digital asset storage. 2FA, biometric authentication and other security measures ensure that user assets are not infringed. Real-time market data: Ouyi iOS app provides real-time market data and charts, allowing users to grasp encryption at any time

Anbi app official download v2.96.2 latest version installation Anbi official Android version

Mar 04, 2025 pm 01:06 PM

Anbi app official download v2.96.2 latest version installation Anbi official Android version

Mar 04, 2025 pm 01:06 PM

Binance App official installation steps: Android needs to visit the official website to find the download link, choose the Android version to download and install; iOS search for "Binance" on the App Store. All should pay attention to the agreement through official channels.