Machine Learning: Don't underestimate the power of tree models

Because of their complexity, neural networks are often treated as the “holy grail” for solving every machine learning problem. Tree-based methods, on the other hand, have not received equal attention, mainly because the algorithms appear so simple. The two families of algorithms may seem different, but they are like two sides of the same coin, and both are important.

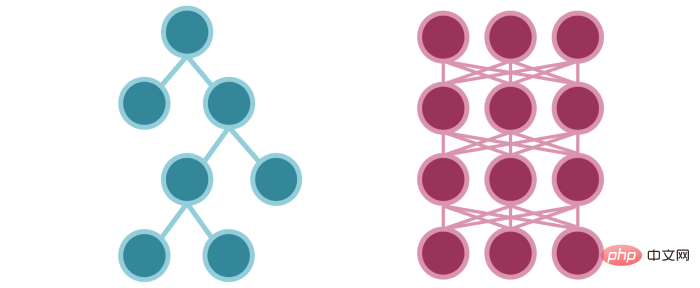

Tree Model VS Neural Network

On structured data, tree-based methods are often better than neural networks. Essentially, tree-based methods and neural network-based methods belong in the same category because both approach a problem through step-by-step deconstruction, rather than separating the entire data set with one complex boundary as support vector machines or logistic regression do.

Obviously, tree-based methods gradually segment the feature space along different features to optimize information gain. What is less obvious is that neural networks also approach tasks in a similar way. Each neuron monitors a specific part of the feature space (with multiple overlaps). When input enters this space, certain neurons are activated.
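To make the idea of splitting along a feature to optimize information gain concrete, here is a minimal sketch in plain Python. The function names (`entropy`, `information_gain`, `best_threshold`) and the toy data are illustrative assumptions, not taken from any particular library, and real tree implementations search over many features and use faster split-finding.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    if total == 0:
        return 0.0
    counts = Counter(labels).values()
    return -sum((n / total) * math.log2(n / total) for n in counts)


def information_gain(values, labels, threshold):
    """Entropy reduction obtained by splitting on `value <= threshold`."""
    left = [y for x, y in zip(values, labels) if x <= threshold]
    right = [y for x, y in zip(values, labels) if x > threshold]
    n = len(labels)
    weighted = (len(left) / n) * entropy(left) + (len(right) / n) * entropy(right)
    return entropy(labels) - weighted


def best_threshold(values, labels):
    """Try the midpoints between sorted unique values; return (threshold, gain).

    Assumes the feature has at least two distinct values.
    """
    points = sorted(set(values))
    candidates = [(a + b) / 2 for a, b in zip(points, points[1:])]
    scored = ((t, information_gain(values, labels, t)) for t in candidates)
    return max(scored, key=lambda pair: pair[1])


# Toy one-feature data set (hypothetical): the class flips between 3 and 4.
feature = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
target = [0, 0, 0, 1, 1, 1]

threshold, gain = best_threshold(feature, target)
print(threshold, gain)
```

A full decision tree would repeat this search recursively inside each resulting region, which is the gradual segmentation of the feature space described above.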

Neural networks view this piece-by-piece model fitting from a probabilistic perspective, while tree-based methods take a deterministic perspective. Regardless, the performance of both depends on the depth of the model, since their components are associated with various parts of the feature space.

A model with too many components (nodes for a tree model, neurons for a neural network) will overfit, while a model with too few components simply won’t give meaningful predictions. (Both start by memorizing data points rather than learning to generalize.)

If you want a more intuitive understanding of how a neural network divides the feature space, you can read this introductory article on the Universal Approximation Theorem: https://medium.com/analytics-vidhya/you-dont-understand-neural-networks-until-you-understand-the-universal-approximation-theory-85b3e7677126.

While there are many powerful variations of decision trees, such as Random Forest, Gradient Boosting, AdaBoost, and Deep Forest, tree-based methods are, in general, essentially simplified versions of neural networks.

Tree-based methods solve the problem piece by piece, using vertical and horizontal lines (axis-aligned splits) to minimize entropy, which plays the role of optimizer and loss. Neural networks use activation functions to solve problems piece by piece.
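The piece-by-piece behavior of both models can be sketched on a single input feature. In the example below, a hand-written depth-2 tree is piecewise constant, while a tiny network with two ReLU neurons is piecewise linear, bending at the points where each neuron switches on. All thresholds, weights, and function names are hypothetical values chosen for illustration, not fitted parameters.

```python
def tree_predict(x):
    """Hand-written depth-2 tree: one constant output per interval."""
    if x <= 2.0:
        return 0.0 if x <= 1.0 else 1.0
    return 3.0 if x <= 3.0 else 2.0


def relu(z):
    """Rectified linear activation."""
    return max(0.0, z)


def relu_net(x):
    """One hidden layer with two ReLU neurons and a linear output.

    Each neuron is inactive (outputs 0) below its own kink and active
    above it, so the network output is linear within each interval.
    """
    h1 = relu(x - 1.0)  # switches on for x > 1
    h2 = relu(x - 2.0)  # switches on for x > 2
    return 1.0 * h1 - 2.0 * h2


for x in [0.5, 1.5, 2.5, 3.5]:
    print(x, tree_predict(x), relu_net(x))
```

In both functions the input only "activates" some of the components: the tree follows one branch, and the network uses only the neurons whose kink the input has passed.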

Tree-based methods are deterministic rather than probabilistic. This brings some nice simplifications like automatic feature selection.

The activated condition nodes in the decision tree are similar to the activated neurons (information flow) in the neural network.

The neural network transforms the input through fitted parameters and indirectly guides the activation of subsequent neurons. Decision trees explicitly fit parameters to guide the flow of information. (This follows from the difference between deterministic and probabilistic approaches.)

The flow of information in the two models is similar; it is just simpler in the tree model.

Selection of 1 and 0 in tree model VS Probabilistic selection of neural network

Of course, this is an abstract conclusion, and it may even be controversial. Granted, there are many obstacles to making this connection. Regardless, it is an important part of understanding when and why tree-based methods are better than neural networks.

For decision trees, working with structured data in tabular form is natural. Most people agree that using neural networks to perform regression and prediction on tabular data is overkill, so some simplifications are made here. The choice of 1s and 0s, rather than probabilities, is the main source of the difference between the two algorithms. Therefore, tree-based methods can be successfully applied to situations where probabilities are not required, such as structured data.

For example, tree-based methods perform well on the MNIST dataset because each digit has several basic features. There is no need to calculate probabilities and the problem is not very complex, which is why a well-designed tree ensemble model can be competitive with convolutional neural networks on this dataset.

Generally, people tend to say that "tree-based methods just remember the rules", which is correct. Neural networks are the same, except they can remember more complex, probability-based rules. Rather than explicitly giving a true/false prediction for a condition like x>3, the neural network amplifies the input to a very high value, resulting in a sigmoid value of 1 or generating a continuous expression.
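The contrast between a hard rule such as x>3 and a neuron that amplifies its input can be sketched with a single sigmoid unit. The `weight` values and function names below are illustrative assumptions; the bias is chosen so that the neuron outputs 0.5 exactly at the threshold.

```python
import math


def sigmoid(z):
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tree_rule(x, threshold=3.0):
    """Deterministic rule: 1 if x > threshold, otherwise 0."""
    return 1 if x > threshold else 0


def neuron(x, weight, threshold=3.0):
    """Single sigmoid neuron centered on the threshold.

    A small weight gives a gradual, probability-like response;
    a large weight amplifies the input so the output saturates
    near 0 or 1, imitating the hard rule.
    """
    return sigmoid(weight * (x - threshold))


for x in [2.0, 2.9, 3.1, 4.0]:
    soft = round(neuron(x, weight=1.0), 3)
    sharp = round(neuron(x, weight=100.0), 3)
    print(x, tree_rule(x), soft, sharp)
```

With the small weight the neuron reports intermediate values around the threshold, while with the large weight its output is practically indistinguishable from the tree's true/false answer away from x = 3.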

On the other hand, since neural networks are so complex, there is a lot that can be done with them. Both convolutional and recurrent layers are outstanding variants of neural networks because the data they process often requires the nuances of probability calculations.

There are very few images that can be modeled with ones and zeros. Decision trees do not cope well with datasets that have many intermediate values (e.g. 0.5), which is why they perform well on the MNIST dataset, where pixel values are almost all black or white, but not on datasets whose pixel values are not (e.g. ImageNet). Similarly, text has too much information and too many anomalies to express in deterministic terms.

This is why neural networks are mainly used in these fields, and why neural network research stagnated in the early days (before the beginning of the 21st century), when large amounts of image and text data were not available. Other common uses of neural networks are limited to large-scale predictions, such as YouTube video recommendation algorithms, which are very large and must use probabilities.

The data science team at any company will probably use tree-based models instead of neural networks, unless they are building a heavy-duty application like blurring the background of a Zoom video. In everyday business classification tasks, tree-based methods keep the work lightweight thanks to their deterministic nature, while approaching the problem in the same way as neural networks.

In many practical situations, deterministic modeling is more natural than probabilistic modeling. For example, to predict whether a user will purchase an item from an e-commerce website, a tree model is a good choice because users naturally follow a rule-based decision-making process. A user’s decision-making process might look something like this:

- Have I had a positive shopping experience on this platform before? If so, continue.

- Do I need this item now? (For example, should I buy sunglasses and swimming trunks for the winter?) If so, continue.

- Based on my user demographics, is this a product I'm interested in purchasing? If yes, continue.

- Is this thing too expensive? If not, continue.

- Have other customers rated this product highly enough for me to feel comfortable purchasing it? If yes, continue.

Generally speaking, humans follow a rule-based and structured decision-making process. In these cases, probabilistic modeling is unnecessary.
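The checklist above maps directly onto a chain of yes/no conditions, which is exactly the shape of a single path through a decision tree. The sketch below writes that path by hand; the `Visit` fields, the `min_rating` cutoff, and the budget comparison are hypothetical stand-ins for whatever features a real data set would provide, and a trained tree would learn its own thresholds.

```python
from dataclasses import dataclass


@dataclass
class Visit:
    """Hypothetical features describing one user looking at one item."""
    had_good_experience: bool  # positive past experience on the platform
    needs_item_now: bool       # e.g. swimwear is not needed in winter
    matches_interests: bool    # fits the user's demographic profile
    price: float
    budget: float              # assumed price the user considers acceptable
    average_rating: float      # average rating from other customers


def will_purchase(visit: Visit, min_rating: float = 4.0) -> bool:
    """Follow the rule chain; any failed check stops the purchase."""
    if not visit.had_good_experience:
        return False
    if not visit.needs_item_now:
        return False
    if not visit.matches_interests:
        return False
    if visit.price > visit.budget:  # too expensive
        return False
    if visit.average_rating < min_rating:
        return False
    return True


likely_buyer = Visit(True, True, True, price=20.0, budget=50.0, average_rating=4.5)
too_expensive = Visit(True, True, True, price=80.0, budget=50.0, average_rating=4.5)
print(will_purchase(likely_buyer), will_purchase(too_expensive))
```

Each `if` corresponds to one internal node of a tree, and no probabilities are involved at any step.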

Conclusion

- It is best to think of tree-based methods as scaled-down versions of neural networks that perform feature classification, optimization, information flow, and so on in a simpler way.

- The main difference in usage between tree-based methods and neural network methods is whether the data calls for deterministic (0/1) or probabilistic modeling. Structured (tabular) data can be better modeled using deterministic models.

- Don’t underestimate the power of the tree method.

The above is the detailed content of Machine Learning: Don't underestimate the power of tree models. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

In the fields of machine learning and data science, model interpretability has always been a focus of researchers and practitioners. With the widespread application of complex models such as deep learning and ensemble methods, understanding the model's decision-making process has become particularly important. Explainable AI|XAI helps build trust and confidence in machine learning models by increasing the transparency of the model. Improving model transparency can be achieved through methods such as the widespread use of multiple complex models, as well as the decision-making processes used to explain the models. These methods include feature importance analysis, model prediction interval estimation, local interpretability algorithms, etc. Feature importance analysis can explain the decision-making process of a model by evaluating the degree of influence of the model on the input features. Model prediction interval estimate

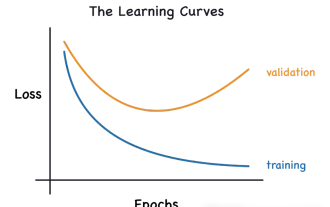

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

This article will introduce how to effectively identify overfitting and underfitting in machine learning models through learning curves. Underfitting and overfitting 1. Overfitting If a model is overtrained on the data so that it learns noise from it, then the model is said to be overfitting. An overfitted model learns every example so perfectly that it will misclassify an unseen/new example. For an overfitted model, we will get a perfect/near-perfect training set score and a terrible validation set/test score. Slightly modified: "Cause of overfitting: Use a complex model to solve a simple problem and extract noise from the data. Because a small data set as a training set may not represent the correct representation of all data." 2. Underfitting Heru

Transparent! An in-depth analysis of the principles of major machine learning models!

Apr 12, 2024 pm 05:55 PM

Transparent! An in-depth analysis of the principles of major machine learning models!

Apr 12, 2024 pm 05:55 PM

In layman’s terms, a machine learning model is a mathematical function that maps input data to a predicted output. More specifically, a machine learning model is a mathematical function that adjusts model parameters by learning from training data to minimize the error between the predicted output and the true label. There are many models in machine learning, such as logistic regression models, decision tree models, support vector machine models, etc. Each model has its applicable data types and problem types. At the same time, there are many commonalities between different models, or there is a hidden path for model evolution. Taking the connectionist perceptron as an example, by increasing the number of hidden layers of the perceptron, we can transform it into a deep neural network. If a kernel function is added to the perceptron, it can be converted into an SVM. this one

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

In the 1950s, artificial intelligence (AI) was born. That's when researchers discovered that machines could perform human-like tasks, such as thinking. Later, in the 1960s, the U.S. Department of Defense funded artificial intelligence and established laboratories for further development. Researchers are finding applications for artificial intelligence in many areas, such as space exploration and survival in extreme environments. Space exploration is the study of the universe, which covers the entire universe beyond the earth. Space is classified as an extreme environment because its conditions are different from those on Earth. To survive in space, many factors must be considered and precautions must be taken. Scientists and researchers believe that exploring space and understanding the current state of everything can help understand how the universe works and prepare for potential environmental crises

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Common challenges faced by machine learning algorithms in C++ include memory management, multi-threading, performance optimization, and maintainability. Solutions include using smart pointers, modern threading libraries, SIMD instructions and third-party libraries, as well as following coding style guidelines and using automation tools. Practical cases show how to use the Eigen library to implement linear regression algorithms, effectively manage memory and use high-performance matrix operations.

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Machine learning is an important branch of artificial intelligence that gives computers the ability to learn from data and improve their capabilities without being explicitly programmed. Machine learning has a wide range of applications in various fields, from image recognition and natural language processing to recommendation systems and fraud detection, and it is changing the way we live. There are many different methods and theories in the field of machine learning, among which the five most influential methods are called the "Five Schools of Machine Learning". The five major schools are the symbolic school, the connectionist school, the evolutionary school, the Bayesian school and the analogy school. 1. Symbolism, also known as symbolism, emphasizes the use of symbols for logical reasoning and expression of knowledge. This school of thought believes that learning is a process of reverse deduction, through existing

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Translator | Reviewed by Li Rui | Chonglou Artificial intelligence (AI) and machine learning (ML) models are becoming increasingly complex today, and the output produced by these models is a black box – unable to be explained to stakeholders. Explainable AI (XAI) aims to solve this problem by enabling stakeholders to understand how these models work, ensuring they understand how these models actually make decisions, and ensuring transparency in AI systems, Trust and accountability to address this issue. This article explores various explainable artificial intelligence (XAI) techniques to illustrate their underlying principles. Several reasons why explainable AI is crucial Trust and transparency: For AI systems to be widely accepted and trusted, users need to understand how decisions are made

Is Flash Attention stable? Meta and Harvard found that their model weight deviations fluctuated by orders of magnitude

May 30, 2024 pm 01:24 PM

Is Flash Attention stable? Meta and Harvard found that their model weight deviations fluctuated by orders of magnitude

May 30, 2024 pm 01:24 PM

MetaFAIR teamed up with Harvard to provide a new research framework for optimizing the data bias generated when large-scale machine learning is performed. It is known that the training of large language models often takes months and uses hundreds or even thousands of GPUs. Taking the LLaMA270B model as an example, its training requires a total of 1,720,320 GPU hours. Training large models presents unique systemic challenges due to the scale and complexity of these workloads. Recently, many institutions have reported instability in the training process when training SOTA generative AI models. They usually appear in the form of loss spikes. For example, Google's PaLM model experienced up to 20 loss spikes during the training process. Numerical bias is the root cause of this training inaccuracy,