Best practices when uploading large files from the browser to S3

Continuously backing up work data is essential for any organization or business, because it ensures continuity and accountability.

One option that works well is Amazon Simple Storage Service (Amazon S3), which provides massive remote storage for saving system data.

This article walks you through best practices for uploading large files from your browser to S3 as efficiently as possible.

These practices cover optimizing file uploads to S3, uploading files larger than 100 megabytes, and uploading files at maximum speed, all using Amazon S3. The process is simple if you follow the steps laid out here.

How to optimize uploading large files to Amazon S3?

1. Install and configure the Amazon AWS CLI

- Visit this page to find Amazon's detailed installation instructions.

- Configure your access key ID, secret access key, default region, and output format using the following command:

aws configure

AWS Access Key ID [None]: accessid

AWS Secret Access Key [None]: secretkey

Default region name [None]: us-east-1

Default output format [None]: json

Once the AWS Command Line Interface (AWS CLI) is installed and configured, you can customize it to make uploading large files to S3 simpler and faster, for example by scheduling an automatic sync on Windows:

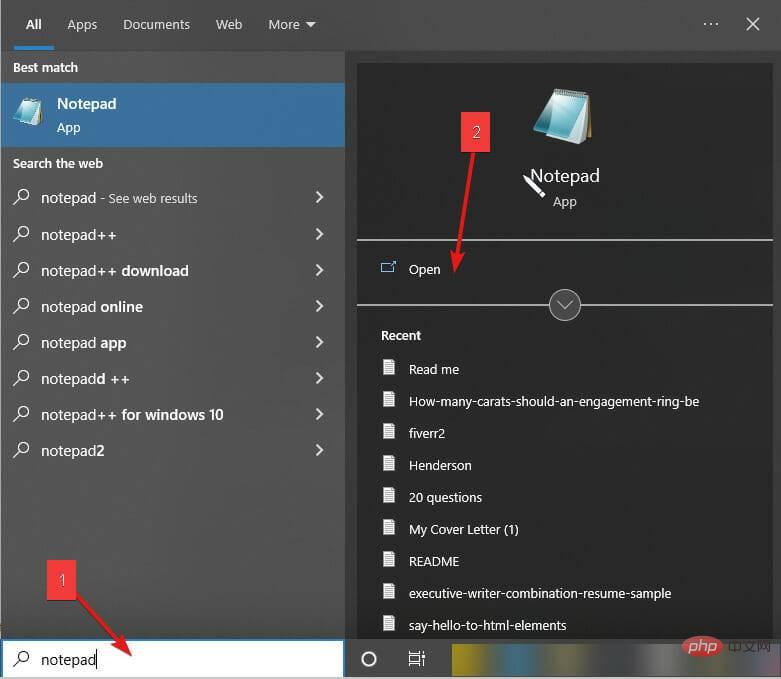

- Press the Windows key, type Notepad, and open it.

- Enter the following command in a blank Notepad file:

aws s3 sync "C:/Desktop/backups/" s3://your-bucket

- Press Ctrl+S and save the document with a .bat extension.

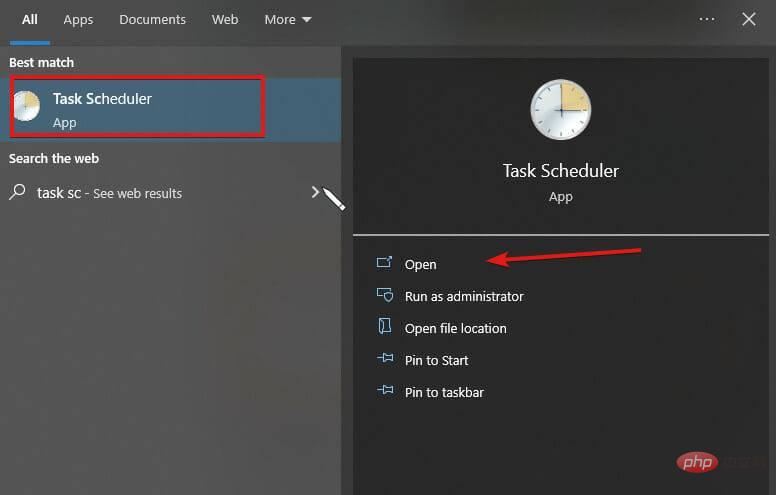

- Next, click the Start Menu button and search for Task Scheduler.

- Open Task Scheduler. (If it won't open, use this tutorial to fix it.)

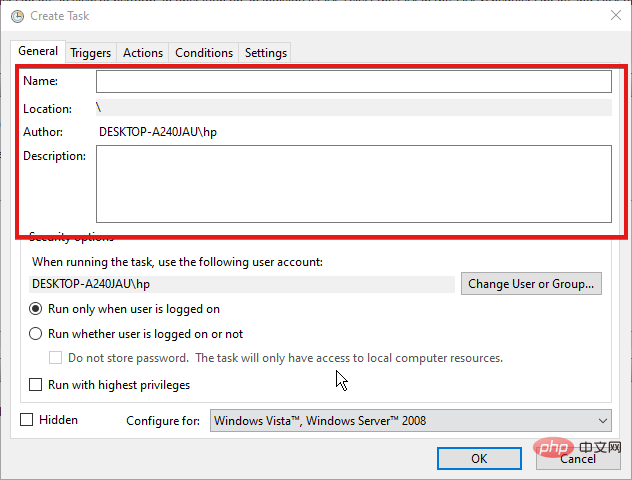

- In the Actions pane, select Create Task, then enter a name and description for the new task.

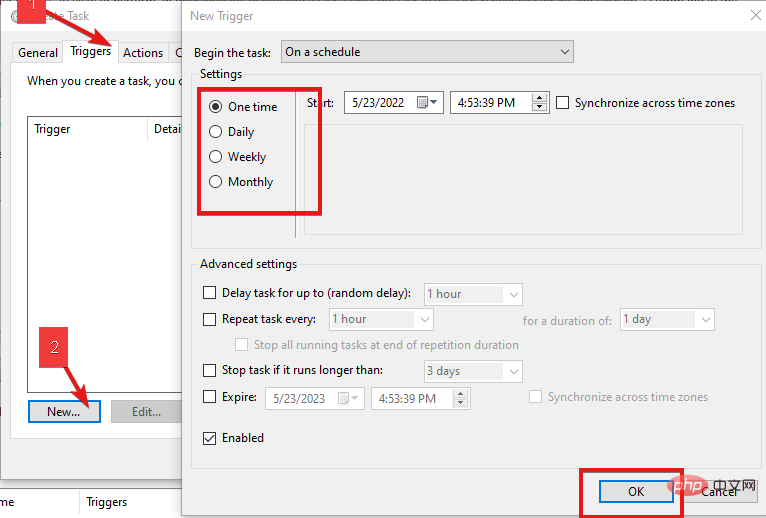

- On the Triggers tab, click New and select a one-time, daily, weekly, or monthly trigger.

- Click the Actions tab, click New, and choose Start a program from the Action drop-down menu.

- Browse to the batch file you created earlier.

- Save the task.

After successfully saving the newly created task, you no longer need to manually back up the data to Amazon S3 because Task Scheduler will do this for you at configured intervals.
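The batch file relies on aws s3 sync to copy only new or changed files on each scheduled run. The Python sketch below approximates that decision, assuming the local and S3 listings have already been collected into dictionaries; `FileInfo` and `files_to_upload` are hypothetical names, not part of the AWS CLI. By default, a local file is uploaded when it is missing from the bucket, when its size differs from the S3 object, or when it was modified more recently than the object.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileInfo:
    """Hypothetical record: size in bytes and last-modified time (epoch seconds)."""
    size: int
    mtime: float


def files_to_upload(local: dict[str, FileInfo], remote: dict[str, FileInfo]) -> list[str]:
    """Return, sorted by key, the local files a size-and-timestamp sync would upload.

    A file is uploaded when it does not exist in the bucket, when its size
    differs from the stored object, or when the local copy is newer.
    """
    changed = []
    for key, info in local.items():
        obj = remote.get(key)
        if obj is None or obj.size != info.size or info.mtime > obj.mtime:
            changed.append(key)
    return sorted(changed)


if __name__ == "__main__":
    local = {
        "report.docx": FileInfo(2_048, 1_700_000_500.0),  # edited since the last sync
        "photo.png": FileInfo(10_000, 1_600_000_000.0),   # unchanged
        "notes.txt": FileInfo(512, 1_650_000_000.0),      # not yet in the bucket
    }
    remote = {
        "report.docx": FileInfo(2_048, 1_700_000_000.0),
        "photo.png": FileInfo(10_000, 1_600_000_000.0),
    }
    print(files_to_upload(local, remote))  # ['notes.txt', 'report.docx']
```

Files that exist only in the bucket are left alone, which matches the default behavior of aws s3 sync unless you pass the --delete flag.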

How do I upload a file larger than 100 megabytes on Amazon S3?

The maximum file size you can upload using the Amazon S3 console is 160 GB. For files larger than 100 megabytes, Amazon recommends multipart upload, and the AWS CLI provides a simple way to do it.
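For files above the 100 MB mark, the part size determines how many pieces a multipart upload needs. The sketch below assumes the documented limits of at most 10,000 parts per upload and at least 5 MiB for every part except the last. `plan_parts` is a hypothetical helper, and its 8 MiB starting size simply mirrors the AWS CLI's default `multipart_chunksize`; doubling the part size until the file fits is one simple strategy, not necessarily what every SDK does.

```python
import math

MIB = 1024 * 1024
MIN_PART_SIZE = 5 * MIB      # assumed S3 minimum for every part except the last
MAX_PARTS = 10_000           # assumed S3 maximum number of parts per upload
DEFAULT_PART_SIZE = 8 * MIB  # hypothetical starting size (the AWS CLI default chunk size)


def plan_parts(file_size: int, part_size: int = DEFAULT_PART_SIZE) -> list[tuple[int, int, int]]:
    """Split file_size bytes into (part_number, offset, length) tuples.

    The part size is raised to MIN_PART_SIZE if needed, then doubled until
    the whole file fits in MAX_PARTS parts. Part numbers start at 1.
    """
    if file_size < 0:
        raise ValueError("file_size must be non-negative")
    part_size = max(part_size, MIN_PART_SIZE)
    while math.ceil(file_size / part_size) > MAX_PARTS:
        part_size *= 2

    parts = []
    offset, number = 0, 1
    while offset < file_size:
        length = min(part_size, file_size - offset)  # the last part may be shorter
        parts.append((number, offset, length))
        offset += length
        number += 1
    return parts


if __name__ == "__main__":
    parts = plan_parts(200 * 1000**3)  # a 200 GB file, above the 160 GB console limit
    print(len(parts), parts[0][2] // MIB)  # 5961 32
```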

Run high-level (aws s3) commands

- Place the file in the directory from which you run the command.

- Run the following cp command:

aws s3 cp cat.png s3://docexamplebucket

- The aws s3 cp command automatically performs a multipart upload for large objects.

How to upload files at maximum speed

Use multipart upload. In a multipart upload, a large file is split into multiple parts, and each part is uploaded to Amazon S3 individually.
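Splitting the file is the first half of a multipart upload. A minimal sketch, assuming the file is opened in binary mode and using a hypothetical `read_parts` generator, reads one part at a time so that a multi-gigabyte file never has to fit in memory. Part numbers start at 1 because S3 numbers the parts of an upload from 1 to 10,000.

```python
import io
from collections.abc import Iterator
from typing import BinaryIO


def read_parts(stream: BinaryIO, part_size: int) -> Iterator[tuple[int, bytes]]:
    """Yield (part_number, data) pairs, reading at most part_size bytes at a time."""
    if part_size <= 0:
        raise ValueError("part_size must be positive")
    part_number = 1
    while True:
        chunk = stream.read(part_size)
        if not chunk:  # end of file
            break
        yield part_number, chunk
        part_number += 1


if __name__ == "__main__":
    # An in-memory stream stands in for open("backup.zip", "rb").
    for number, chunk in read_parts(io.BytesIO(b"x" * 25), 10):
        print(number, len(chunk))
    # 1 10
    # 2 10
    # 3 5
```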

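After all the parts are uploaded, Amazon S3 combines them into a single object. Multipart upload speeds up uploads because parts can be sent in parallel, and it reduces the likelihood of a large upload failing because a part that fails can be retried on its own instead of restarting the whole file.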
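The sketch below illustrates both benefits with a thread pool and a per-part retry loop. It does not call AWS: `FakeMultipartStore` is a hypothetical in-memory stand-in for the UploadPart and CompleteMultipartUpload operations, and the MD5 digest it returns plays the role of the ETag that S3 returns for each uploaded part. With a real SDK, you would replace the store's methods with the corresponding API calls.

```python
import hashlib
import threading
from concurrent.futures import ThreadPoolExecutor


class FakeMultipartStore:
    """Hypothetical in-memory stand-in for S3's UploadPart and
    CompleteMultipartUpload operations; it only illustrates the flow."""

    def __init__(self, fail_first: tuple[int, ...] = ()):
        self._parts: dict[int, bytes] = {}
        self._lock = threading.Lock()
        # Part numbers whose first upload attempt raises a simulated error.
        self._pending_failures = set(fail_first)

    def upload_part(self, number: int, data: bytes) -> str:
        with self._lock:
            if number in self._pending_failures:
                self._pending_failures.discard(number)
                raise ConnectionError(f"simulated network error on part {number}")
            self._parts[number] = data
        # The MD5 digest stands in for the ETag S3 returns for each part.
        return hashlib.md5(data).hexdigest()

    def complete(self, etags: list[tuple[int, str]]) -> bytes:
        """Check each part's tag, then join the parts in part-number order."""
        ordered = sorted(etags)
        for number, etag in ordered:
            if hashlib.md5(self._parts[number]).hexdigest() != etag:
                raise ValueError(f"tag mismatch on part {number}")
        return b"".join(self._parts[number] for number, _ in ordered)


def upload_with_retries(store: FakeMultipartStore, number: int, data: bytes,
                        attempts: int = 3) -> tuple[int, str]:
    """Retry a single failed part instead of restarting the whole file."""
    for attempt in range(attempts):
        try:
            return number, store.upload_part(number, data)
        except ConnectionError:
            if attempt == attempts - 1:
                raise
    raise ValueError("attempts must be at least 1")


def multipart_upload(store: FakeMultipartStore, chunks: list[bytes],
                     max_workers: int = 4) -> bytes:
    """Upload all parts concurrently (numbered from 1), then complete."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(upload_with_retries, store, number, chunk)
                   for number, chunk in enumerate(chunks, start=1)]
        etags = [future.result() for future in futures]
    return store.complete(etags)


if __name__ == "__main__":
    store = FakeMultipartStore(fail_first=(2,))  # part 2 fails once, then succeeds
    print(multipart_upload(store, [b"hello ", b"multipart ", b"world"]))
    # b'hello multipart world'
```

Because `complete` sorts the parts by number, the object is assembled correctly even though the threads may finish in any order.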

The tips highlighted above are best practices to remember for efficiently uploading large files from your browser to S3.

Amazon S3 storage is great, but to access it quickly you need appropriate software, such as an S3 browser app. Some other applications also work with S3. You can check out the best S3 browser software here. You can also protect your Amazon AWS data with appropriate antivirus software.
