Stanford HAI releases new white paper: two years on, U.S. progress on its national AI strategy has been slow

Recently, the Stanford Institute for Human-Centered Artificial Intelligence (HAI) and the Stanford Regulation, Evaluation, and Governance Lab (RegLab) jointly released the white paper “Implementation Challenges to Three Pillars of America's AI Strategy,” which systematically evaluates how far the United States has progressed in implementing its AI strategy.

The white paper focuses on three pillars of U.S. policy on AI innovation and trustworthy AI:

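1. The Artificial Intelligence in Government Act of 2020;

2. Executive Order 13859, “Maintaining American Leadership in Artificial Intelligence” (the AI Leadership Order);

3. Executive Order 13960, “Promoting the Use of Trustworthy Artificial Intelligence in the Federal Government” (the AI in Government Order).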

Taken together, these executive orders and the AI in Government Act are central to the U.S. national strategy for artificial intelligence, which, simply put, is a strategic plan to maintain the country's competitive advantage over the rest of the world for the next decade.

After examining the implementation status of each requirement across more than 200 federal agencies, the authors found that although the United States has made considerable progress in AI innovation, implementation of its strategy faces several problems:

- Fewer than 40% of all requirements could be publicly verified as implemented.

- 88% of surveyed agencies failed to provide an AI plan identifying their regulatory authorities relevant to AI.

- About half or more of agencies have not submitted the AI use case inventories required by the AI in Government Order.

Summary

The transformative potential of artificial intelligence (AI) is an indisputable fact.

To capitalize on the “Fourth Industrial Revolution,” or the “third wave of the digital revolution,” countries are prioritizing efforts to restructure their public and private sectors, fund research and development (R&D), and create structures and policies that unleash AI innovation.

In the United States, the White House and Congress are promoting AI innovation and its trustworthy deployment by increasing R&D investment, exploring ways to broaden equitable access to AI-related resources through a National AI Research Resource, funding National Artificial Intelligence Research Institutes across the country, directing $280 billion to domestic semiconductor manufacturing and “industries of the future” through the CHIPS and Science Act, and coordinating AI policy through the White House's National Artificial Intelligence Initiative Office.

1. National Strategy for Artificial Intelligence: Executive Orders and Legislation

While the AI Leadership Order seeks to drive technological breakthroughs across all sectors of the U.S. economy, the other two instruments focus on the federal government's own use of AI.

Executive Order 13859

The AI Leadership Order launched the American Artificial Intelligence Initiative to “focus the resources of the federal government to develop AI in order to increase American prosperity, enhance national and economic security, and improve quality of life for the American people.”

Specifically, it seeks to accelerate federal efforts to build the infrastructure, policy foundation, and talent that U.S. leadership in AI requires, through a multi-pronged approach emphasizing AI research and development, AI-related data and resources, regulatory guidance and technical standards, the AI workforce, public trust in AI, and international engagement.

Noting that a “coordinated Federal Government strategy” is necessary and that AI “will affect the missions of nearly all executive departments and agencies,” the AI Leadership Order further directs agencies to pursue six related strategic objectives to “promote and protect American advancements in AI.”

Executive Order 13960

The AI in Government Order directs federal agencies to harness the “potential of artificial intelligence to improve government operations.” Recognizing that “continued adoption and acceptance of AI will depend heavily on public trust,” the order sets out nine principles that federal agencies should follow when designing, developing, acquiring, and using AI.

These principles state that AI should be (a) lawful and respectful of the nation's values, (b) purposeful and performance-driven, (c) accurate, reliable, and effective, (d) safe, secure, and resilient, (e) understandable, (f) responsible and traceable, (g) regularly monitored, (h) transparent, and (i) accountable.

Artificial Intelligence in Government Act of 2020

The Act seeks to “ensure that the use of artificial intelligence across the federal government is effective, ethical, and responsible by providing resources and guidance to federal agencies.” This includes establishing an AI career series, calling for formal guidance on agency use and procurement of AI and on the assessment and mitigation of AI bias, and creating a center of excellence within the General Services Administration (GSA) to support government adoption of AI.

2. Implementation of Requirements

The authors examined how each agency has implemented the requirements of the two executive orders and the AI in Government Act.

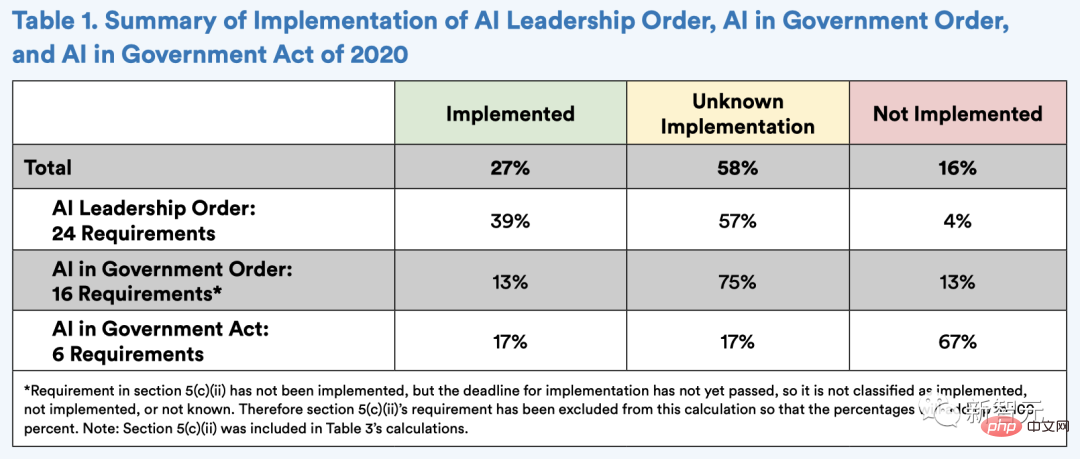

Across the two executive orders and the AI in Government Act of 2020, the authors found that 11 of the 45 requirements, or approximately 27%, have been implemented.

However, 7 of the 45 requirements (16%) were not implemented by their deadlines, and for another 26 (58%), it could not be confirmed whether they had been implemented.

Even for requirements that appear to have been acted on, the specific implementation status is often uncertain. This includes efforts to make data and source code more accessible for AI R&D, to better leverage existing and create new AI-related education and workforce development programs, and to ensure full agency engagement in implementing trustworthy AI.

None of the four requirements with deadlines in the AI in Government Act has been implemented:

- The Office of Personnel Management (OPM) was to submit to Congress a plan for establishing an AI career series by May 2021;

- The Office of Management and Budget (OMB) was to issue a memorandum by October 2021 on agency acquisition and use of AI, including reducing discriminatory impact or bias;

- Agencies were to publicly release plans consistent with that memorandum by April 2022;

- OPM was to create the AI career series and estimate the AI-related workforce needs of each federal agency by July 2022.

Of the requirements that have been implemented, many were completed behind schedule. For example, the National Science and Technology Council (NSTC) Select Committee on Artificial Intelligence submitted its report to the President on better leveraging cloud computing resources 16 months after the deadline.

3. Agency AI Plans

As noted above, a central aim of these executive orders and the Act is to “reduce barriers to the use of AI technologies in order to promote their innovative application” while protecting civil liberties, privacy, American values, and U.S. economic and national security.

Accordingly, these directives emphasize reviewing the appropriate role of AI regulation, aiming to “avoid regulatory or non-regulatory actions that unnecessarily impede innovation and growth in artificial intelligence.” Two requirements are critical to achieving this goal:

1. Require OMB to issue a memorandum to agency heads providing guidance on how agencies should regulate AI;

2. Require agencies with “regulatory authority” to develop and publicly post a plan (an “agency AI plan”) to “achieve consistency” with OMB's guidance.

On November 17, 2020, OMB issued Memorandum M-21-06, “Guidance for Regulation of Artificial Intelligence Applications,” to the heads of executive departments and agencies, urging them to adopt “a regulatory approach that promotes innovation and growth and generates trust while protecting America's core values.”

The memorandum sets out 10 principles for the stewardship of AI applications to guide agencies' regulatory and non-regulatory approaches to AI, identifies non-regulatory approaches for cases where regulation is inappropriate, and recommends actions agencies can take to reduce barriers to deploying and using AI.

Agencies were required to submit their plans by May 2021 (the deadline set under the AI Leadership Order) and to post them publicly on their agency websites.

Currently, only 5 of the 41 agencies assessed (12%) have published AI plans using the template provided in OMB's AI regulation memorandum: the Departments of Energy (DOE), Health and Human Services (HHS), and Veterans Affairs (VA), along with the Environmental Protection Agency (EPA) and the United States Agency for International Development (USAID).

The remaining 36 agencies have not published AI plans.

4. List of AI Use Cases

The AI in Government Order focuses on promoting the development, adoption, and acquisition of trustworthy AI within the federal government.

To that end, it requires agencies to “prepare an inventory of non-classified and non-sensitive use cases of AI” and to share it with the federal Chief Information Officers Council (CIO Council), other agencies, and the public.

However, public disclosure of the list of AI use cases has been problematic.

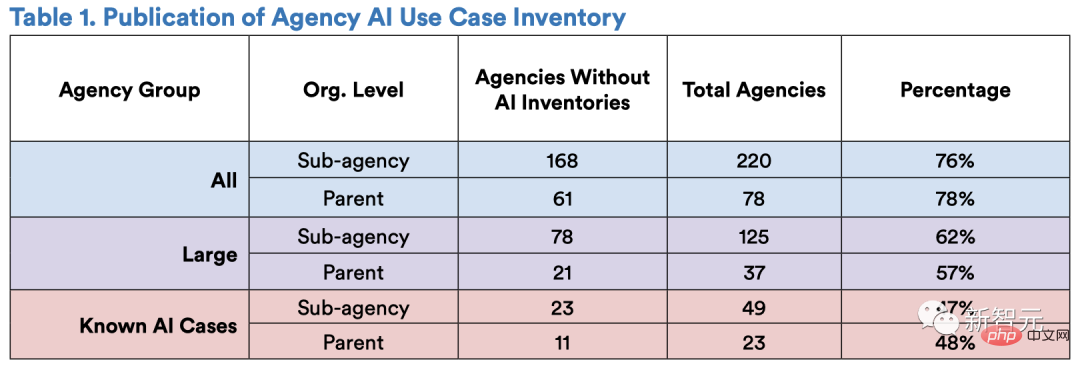

Of the 220 agencies identified as potentially subject to this requirement, 168 have neither published their own AI use case inventory nor had their use cases included in their parent agency's inventory. Of the 78 parent agencies examined, only 17 have published AI use case inventories.

In other words, 76% of all 220 parent agencies and subagencies have not published an inventory, and neither have 78% of the parent agencies evaluated.

In addition, of the 49 parent agencies and subagencies known to use AI, 23 (47%) have not published an AI use case inventory. Even within the narrowest group, the 23 large parent agencies with known AI use cases, only 11 have published inventories.

The lists themselves reflect the challenges faced during implementation.

First, agencies are leaving AI use cases out of their inventories even when those use cases have been publicly documented.

Second, inconsistencies in how agencies have implemented the inventory requirement point to three unresolved questions:

- Non-response:

For agencies that did not publish an inventory, it is unclear whether they are asserting that they do not use AI or have simply not fulfilled this requirement.

- Institutional Structure:

With the exception of NIST's inventory, all published inventories are at the parent agency level (e.g., the Department of Commerce or the Department of Energy, rather than NOAA or one of DOE's power marketing administrations). It is therefore unclear whether subagencies absent from these inventories have no relevant use cases or simply did not respond to their parent agency's reporting requests.

- Definition of AI:

The definition of AI in the Fiscal Year 2019 National Defense Authorization Act (NDAA), which is incorporated into the AI in Government Order, is arguably quite broad, which may make it harder for agencies to decide whether a specific technology counts as “artificial intelligence” for inventory purposes.

Third, agency lists often include existing transparency initiatives but vary widely.

5. Summary

Overall, the authors conclude that implementation of the three pillars of the U.S. AI strategy (the AI Leadership Order, the AI in Government Order, and the AI in Government Act) has fallen short in practice.

Many agencies regard the current requirements as “unfunded mandates.” To address this, Congress should give agencies the resources to hire staff and build the technical expertise needed to develop strategic AI plans.

Failure to provide appropriate resources and empower senior personnel to carry out these responsibilities will likely undermine U.S. leadership in artificial intelligence.

About the author

Christie Lawrence is a JD/MPP candidate at Stanford Law School and Harvard Kennedy School and a member of the Stanford Regulation, Evaluation, and Governance Lab (RegLab). She was director of research and analysis at the National Security Commission on Artificial Intelligence (NSCAI), and has worked at Stanford's Institute for Human-Centered Artificial Intelligence, the Cyber Project at Harvard's Belfer Center, and the State Department, as well as serving as a management consultant. She holds a bachelor's degree in public policy from Duke University.

Isaac Cui is a first-year J.D. student at Stanford Law School, a member of the RegLab, and a Knight-Hennessy Scholar at Stanford University. He earned a bachelor's degree in physics and political science from Pomona College and a master's degree in applied social data science and a master's degree in regulation from the London School of Economics and Political Science, where he was a Marshall Scholar.

Daniel E. Ho is the William Benjamin Scott and Luna M. Scott Professor of Law, Professor of Political Science, and Senior Fellow at the Stanford Institute for Economic Policy Research at Stanford University. He is an appointed member of the National Artificial Intelligence Advisory Committee (NAIAC), associate director of the Stanford Institute for Human-Centered Artificial Intelligence (HAI), a faculty fellow at the Center for Advanced Study in the Behavioral Sciences, and director of the Regulation, Evaluation, and Governance Lab (RegLab). He received his J.D. from Yale Law School and his Ph.D. from Harvard University.