In this article, we will take a closer look at the basic principles of HTTP and understand what happens between typing the URL in the browser and getting the content of the web page. Understanding these contents will help us further understand the basic principles of crawlers.

Here we first learn about URI and URL. The full name of URI is Uniform Resource Identifier, which is the unified resource identifier. The full name of URL is Universal Resource Locator, which is unified resource. locator.

URL is a subset of URI, which means that every URL is a URI, but not every URI is a URL. So, what kind of URI is not a URL? URI also includes a subclass called URN, which stands for Universal Resource Name. URN only names the resource without specifying how to locate the resource. For example, urn:isbn:0451450523 specifies the ISBN of a book, which can uniquely identify the book, but does not specify where to locate the book. This is URN. The relationship between URL, URN and URI.

But in the current Internet, URN is rarely used, so almost all URIs are URLs. We can call general web links either URLs or URIs. I personally use them as URL.

Next, let’s learn about one more concepts - hypertext, its English name is hypertext, the website we see in the browser

The web page is parsed by hypertext, and its web page source code is a series of HTML codes, which contains a series of tags, such as img to display pictures, p to specify display paragraphs, etc. After the browser parses these tags, it forms the web page we usually see, and the source code HTML of the web page can be called hypertext.

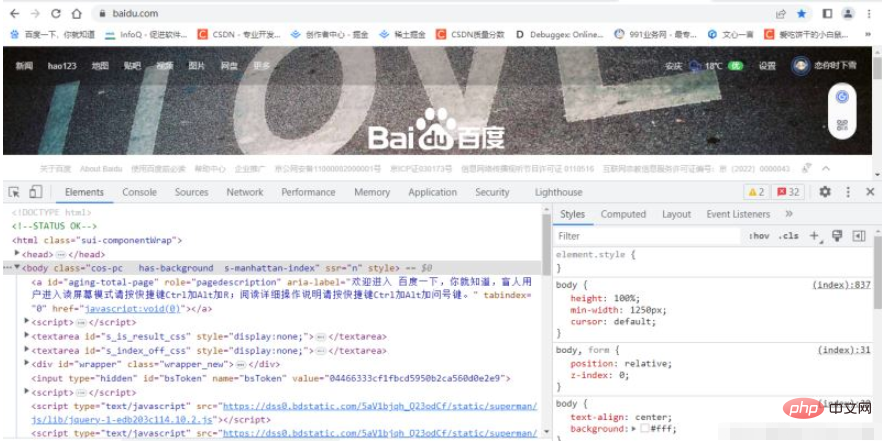

item (or directly press the shortcut key F12). Open the developer tools of the browser, and then you can see the source code of the current

webpage in the Elements tab. These source codes are all hypertext, as shown in the figure.

channel. Simply put, it is a secure version of HTTP, that is, adding an SSL layer to HTTP, referred to as HTTPS.

two types.

HTTP request process

- Apple mandates that all ioS All apps must use HTTPS encryption before January 1, 2017, otherwise the apps will not be listed on the app store.

- Starting from Chrome 56, launched in January 2017, Google has displayed a risk warning for URL links that are not encrypted by HTTPS, that is, reminding users in a prominent position in the address bar that "This webpage is not allowed." Safety" .

- The official requirements document of Tencent WeChat Mini Program requires that the background uses HTTPS requests for network communication. Domain names and protocols that do not meet the conditions cannot be requested.

We enter a URL in the browser and press Enter to observe it in the browser Page content. In fact, this process is that the browser sends a request to the server where the website is located. After receiving the request, the website server processes and parses the request, then returns the corresponding response, and then passes it back to the browser. The response contains the source code of the page and other content, and the browser parses it and displays the web page. The client here represents our own PC or mobile browser, and the server is the server where the website to be accessed is located.A request is sent from the client to the server and can be divided into 4 parts: request method (Request Method), requested URLRequest

There are two common request methods: GET and POST.

Enter the URL directly in the browser and press Enter. This will initiate a GET request, and the request parameters will be directly included in the URL. For example, searching for Python in Baidu is a GET request with the link https://www baidu. com/. The URL contains the parameter information of the request. The parameter wd here represents the keyword to be searched. POST requests are mostly initiated when a form is submitted. For example, for a login form, after entering the user name and password, clicking the "Login" button will usually initiate a POST request, and the data is usually transmitted in the form of a form and will not be reflected in the URL.

The GET and POST request methods have the following differences:

The parameters in the GET request are included in the URL, and the data can be seen in the URL, while the POST The requested URL will not contain these data. The data is transmitted through the form and will be included in the request body.

The data submitted by the GET request is only 1024 bytes at most, while the POST method has no limit.

Generally speaking, when logging in, you need to submit a username and password, which contain sensitive information. If you use GET to request, the password will be exposed in the URL, causing password leakage, so It is best to send via POST here. When uploading files, the POST method will also be used because the file content is relatively large.

Most of the requests we usually encounter are GET or POST requests. There are also some request methods, such as GET, HEAD,

POST, PUT, DELETE, OPTIONS, CONNECT, TRACE, etc.

Requested URL

请求的网址,即统一资源定 位符URL,它可以唯一确定 我们想请求的资源。

Request header

请求头,用来说明服务器要使用的附加信息,比较重要的信息有Cookie . Referer. User-Agent等。

Request body

请求体一般承载的内容是 POST请求中的表单数据,而对于GET请求,请求体则为空。

The response, returned by the server to the client, can be divided into three parts: response status code ( Response Status Code). Response Headers and Response Body.

Response status code

响应状态码表示服务器的响应状态,如200代表服务器正常响应,404代表页面未找到,500代表 服务器内部发生错误。在爬虫中,我们可以根据状态码来判断服务器响应状态,如状态码为200,则 证明成功返回数据,再进行进一步的处理, 否则直接忽略。

Response header

响应头包含了服务器对请求的应答信息,如Content-Type、Server、 Set-Cookie 等。

Response body

最重要的当属响应体的内容了。响应的正文数据都在响应体中,比如请求网页时,它的响应体 就是网页的HTML代码;请求- -张图片时 ,它的响应体就是图片的二进制数据。我们做爬虫请 求网页后,要解析的内容就是响应体.

The above is the detailed content of What is the principle of HTTP protocol in Python web crawler?. For more information, please follow other related articles on the PHP Chinese website!