OpenAI's programming language speeds up BERT inference 12 times, and the engine attracts attention

How powerful can one line of code be? The Kernl library introduced here lets users run PyTorch transformer models several times faster on the GPU with just one line of code, greatly speeding up inference.

Specifically, with Kernl, BERT inference is 12 times faster than the Hugging Face baseline. This is mainly due to Kernl's custom GPU kernels, written in Triton, OpenAI's new programming language, combined with TorchDynamo. The project authors are from Lefebvre Sarrut.

GitHub address: https://github.com/ELS-RD/kernl/

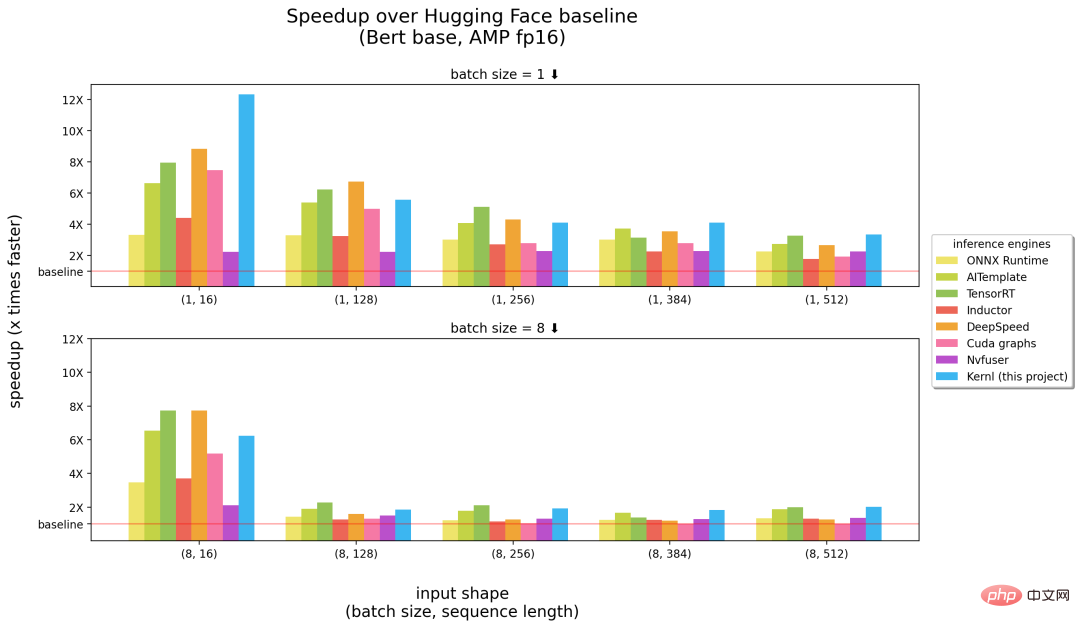

The following is a comparison between Kernl and other inference engines. The numbers in brackets on the horizontal axis are the batch size and sequence length respectively, and the vertical axis is the inference speedup.

Benchmarks were run on an RTX 3090 GPU and a 12-core Intel CPU.

From the results above, Kernl can be said to be the fastest inference engine on long input sequences (right half of the figure above) and is close to NVIDIA's TensorRT on short input sequences (left half of the figure above). In addition, the Kernl kernel code is very short and easy to understand and modify. The project even adds a Triton debugger and tools (based on Fx) to simplify kernel replacement, so no modifications to the PyTorch model source code are required.

Project author Michaël Benesty summarized the work: Kernl is a library for accelerating transformer inference. It is very fast, sometimes reaching SOTA performance, and can be hacked to fit most transformer architectures.

They also tested it on T5, which was 6 times faster, and Benesty said this was just the beginning.

Why was Kernl created? At Lefebvre Sarrut, the project authors run several transformer models in production, some of which are latency-sensitive, mainly for search and recommender systems. They also use ONNX Runtime and TensorRT, and even created the transformer-deploy OSS library to share their knowledge with the community.

Recently, the authors have been testing generative language models and working to speed them up. However, doing this with traditional tools has proven very difficult. In their view, ONNX is an interesting format: an open file format designed for machine learning, used to store trained models, with extensive hardware support.

However, the ONNX ecosystem (primarily its inference engines) has several limitations when dealing with new LLM architectures:

- Exporting a model without control flow to ONNX is simple because tracing can be relied upon, but dynamic behavior is harder to capture;

- Unlike PyTorch, ONNX Runtime and TensorRT do not yet have native support for multi-GPU tasks that implement tensor parallelism;

- TensorRT cannot manage 2 dynamic axes for a transformer model with the same profile. Since you usually want to be able to provide inputs of different lengths, you need to build 1 model per batch size;

- Very large models are common, but ONNX (as a protobuf file) has limitations on file size, which have to be worked around by storing the weights outside the model.
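The file-size limitation in the last point comes down to simple arithmetic. The sketch below assumes that a single protobuf message is capped at roughly 2 GiB and uses hypothetical parameter counts chosen only for illustration; `weight_bytes` and `fits_in_single_protobuf` are illustrative helpers, not part of any ONNX API.

```python
# Rough check of whether a model's weights fit in one protobuf message.
# Assumption: a single protobuf message is capped at about 2 GiB (2**31 bytes).
PROTOBUF_LIMIT_BYTES = 2**31

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weight_bytes(num_params: int, dtype: str) -> int:
    """Size of the raw weights, ignoring graph metadata."""
    return num_params * BYTES_PER_PARAM[dtype]


def fits_in_single_protobuf(num_params: int, dtype: str) -> bool:
    """Hypothetical helper: True if the weights alone stay under the cap."""
    return weight_bytes(num_params, dtype) < PROTOBUF_LIMIT_BYTES


# Hypothetical parameter counts, chosen only for illustration.
for name, n in [("small model", 110_000_000), ("large model", 3_000_000_000)]:
    for dtype in ("fp32", "fp16"):
        size_gib = weight_bytes(n, dtype) / 2**30
        ok = fits_in_single_protobuf(n, dtype)
        print(f"{name:12s} {dtype}: {size_gib:6.2f} GiB, single file OK: {ok}")
```

Once the weights alone exceed the cap, they have to live in separate files next to the model graph, which is the workaround the list item refers to.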

A very annoying fact is that new models are never accelerated: you need to wait for someone to write custom CUDA kernels for them. This is not to say that existing solutions are bad. One of the great things about ONNX Runtime is its multi-hardware support, and TensorRT is known to be very fast.

So the project authors wanted an optimizer as fast as TensorRT that works in Python/PyTorch, which is why they created Kernl.

How to do it?

Memory bandwidth is usually the bottleneck in deep learning, so reducing memory access is often a good way to speed up inference. On short input sequences, the bottleneck is usually CPU overhead, which must also be eliminated. The project authors mainly use the following 3 technologies:
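To see why memory bandwidth dominates, a back-of-the-envelope estimate helps. The sketch below compares the time an operation needs for its arithmetic with the time needed to move its data. The peak throughput and bandwidth figures are rough assumptions for a consumer GPU, not measurements from the project, and `estimate`, `elementwise`, and `matmul` are illustrative helpers.

```python
# Back-of-the-envelope estimate for a single GPU operation.
# Assumed hardware figures (approximate, for illustration only).
PEAK_FLOPS = 35e12        # floating-point operations per second
PEAK_BANDWIDTH = 900e9    # bytes per second moved to/from GPU memory


def estimate(flops: float, bytes_moved: float) -> dict:
    """Compare the time spent on arithmetic with the time spent on memory traffic."""
    compute_time = flops / PEAK_FLOPS
    memory_time = bytes_moved / PEAK_BANDWIDTH
    return {
        "compute_s": compute_time,
        "memory_s": memory_time,
        "bound": "memory" if memory_time > compute_time else "compute",
    }


def elementwise(n: int, bytes_per_elem: int = 2) -> dict:
    # One operation per element; read n elements and write n elements.
    return estimate(flops=n, bytes_moved=2 * n * bytes_per_elem)


def matmul(m: int, k: int, n: int, bytes_per_elem: int = 2) -> dict:
    # 2*m*k*n FLOPs; read both inputs once and write the output once.
    moved = (m * k + k * n + m * n) * bytes_per_elem
    return estimate(flops=2 * m * k * n, bytes_moved=moved)


print("activation on 1M elements:", elementwise(1_000_000)["bound"])
print("large square matmul:      ", matmul(4096, 4096, 4096)["bound"])
print("batch-1 linear layer:     ", matmul(1, 768, 768)["bound"])
```

Under these assumptions, element-wise operations and small-batch linear layers spend far more time moving data than computing, which is why cutting memory accesses pays off.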

The first is OpenAI Triton, a language for writing GPU kernels, like CUDA, but one the authors find more productive to work with. Do not confuse it with the Nvidia Triton inference server. The improvement comes from fusing several operations so that computations are chained without storing intermediate results in GPU memory. The authors use it to rewrite attention (replaced by Flash Attention), linear layers and their activations, and Layernorm/Rmsnorm.
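The effect of fusion can be illustrated without a GPU. The following is a plain-Python toy, not Triton code and not the project's kernels: `CountingMemory` is a hypothetical stand-in for GPU global memory that counts element reads and writes, and the bias-plus-GELU chain is just an example of two operations that can be fused.

```python
import math


class CountingMemory:
    """Stand-in for GPU global memory that counts element reads and writes."""

    def __init__(self):
        self.reads = 0
        self.writes = 0

    def load(self, buf):
        self.reads += len(buf)
        return list(buf)

    def store(self, values):
        self.writes += len(values)
        return list(values)


def gelu(x):
    # tanh approximation of GELU
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x**3)
    return 0.5 * x * (1.0 + math.tanh(inner))


def unfused(mem, x, bias):
    # Kernel 1: add bias, write the intermediate result back to memory.
    a, b = mem.load(x), mem.load(bias)
    tmp = mem.store([ai + bi for ai, bi in zip(a, b)])
    # Kernel 2: read the intermediate back, apply the activation, write again.
    t = mem.load(tmp)
    return mem.store([gelu(v) for v in t])


def fused(mem, x, bias):
    # One kernel: the intermediate sum never goes back to memory.
    a, b = mem.load(x), mem.load(bias)
    return mem.store([gelu(ai + bi) for ai, bi in zip(a, b)])


x = [i / 100.0 for i in range(1024)]
bias = [0.5] * 1024
m1, m2 = CountingMemory(), CountingMemory()
out1 = unfused(m1, x, bias)
out2 = fused(m2, x, bias)
print("unfused traffic:", m1.reads + m1.writes)
print("fused traffic:  ", m2.reads + m2.writes)
```

Both versions produce the same values, but the fused one skips one write and one read of the intermediate buffer. On a memory-bound workload, that saved traffic translates fairly directly into saved time.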

The second is CUDA graphs. During a warmup step, every launched kernel and its parameters are saved. The project authors then replay the entire inference process, which removes most of the CPU overhead.
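The record-then-replay idea can be sketched as a plain-Python analogy. This is not the CUDA graph API: `ReplayGraph` is a hypothetical class, and ordinary Python functions stand in for the kernels.

```python
class ReplayGraph:
    """Toy analogy of a CUDA graph: record launches once, replay them later."""

    def __init__(self):
        self.launches = []   # (kernel, input buffer names, output buffer name)
        self.buffers = {}    # static buffers reused across replays

    def record(self, kernel, inputs, output):
        self.launches.append((kernel, tuple(inputs), output))

    def replay(self, **new_inputs):
        # Copy fresh data into the static input buffers, then rerun every
        # recorded launch in order without re-deciding what to run.
        self.buffers.update(new_inputs)
        for kernel, inputs, output in self.launches:
            args = (self.buffers[name] for name in inputs)
            self.buffers[output] = kernel(*args)
        return self.buffers


def scale(x):
    return [2.0 * v for v in x]


def add(x, y):
    return [a + b for a, b in zip(x, y)]


# "Warmup": record the sequence of launches once.
graph = ReplayGraph()
graph.record(scale, ["x"], "tmp")
graph.record(add, ["tmp", "x"], "out")

# Later calls replay the recorded sequence on new inputs.
first = list(graph.replay(x=[1.0, 2.0])["out"])
second = list(graph.replay(x=[10.0, 20.0])["out"])
print(first, second)
```

A real CUDA graph is captured for fixed shapes and memory addresses, so new inputs are copied into the same buffers before each replay. The toy mirrors that by updating named buffers in place.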

Finally, there is TorchDynamo, a prototype from Meta that helps the project authors deal with dynamic behavior. During the warmup step, it traces the model and provides an Fx graph (a static computation graph). They replace some operations in the Fx graph with their own kernels, and the graph is then recompiled in Python.
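The graph-rewriting step can be pictured with a small plain-Python stand-in. It does not use TorchDynamo or `torch.fx`: `Node`, `OPS`, `replace_targets`, and `run` are hypothetical names, and the "custom kernel" is simply a second implementation of the same layer normalization.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str      # name of the value this node produces
    target: str    # operation to call
    args: tuple    # names of the input values


def layer_norm_reference(x):
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / (var + 1e-5) ** 0.5 for v in x]


def layer_norm_custom(x):
    # Hypothetical replacement kernel: same math, statistics in a single pass.
    n = len(x)
    s = sq = 0.0
    for v in x:
        s += v
        sq += v * v
    mean = s / n
    var = sq / n - mean * mean
    return [(v - mean) / (var + 1e-5) ** 0.5 for v in x]


OPS = {
    "relu": lambda x: [max(v, 0.0) for v in x],
    "layer_norm": layer_norm_reference,
    "custom_layer_norm": layer_norm_custom,
}


def replace_targets(graph, replacements):
    """Return a new graph with selected operation targets swapped."""
    return [Node(n.name, replacements.get(n.target, n.target), n.args) for n in graph]


def run(graph, **inputs):
    """Interpret the graph node by node and return the last value."""
    env = dict(inputs)
    for node in graph:
        env[node.name] = OPS[node.target](*(env[a] for a in node.args))
    return env[graph[-1].name]


# A "traced" model: relu followed by layer norm.
traced = [Node("h", "relu", ("x",)), Node("y", "layer_norm", ("h",))]
optimized = replace_targets(traced, {"layer_norm": "custom_layer_norm"})

ref = run(traced, x=[-1.0, 0.5, 2.0, 3.0])
opt = run(optimized, x=[-1.0, 0.5, 2.0, 3.0])
print([n.target for n in optimized])
```

Because the swap happens on the traced graph, the model's own source code stays untouched. Only the operation targets change, and the rewritten graph is what gets executed.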

Going forward, the project roadmap covers faster warmup, ragged inference (no computation wasted on padding), training support (including long sequences), multi-GPU support (multiple parallelization schemes), quantization (PTQ), tests of a new batch of Cutlass kernels, improved hardware support, and more.

Please refer to the original project for more details.
