Technology peripherals

Technology peripherals

AI

AI

LeCun praises $600 GPT-3.5 as a hardware replacement! Stanford's 7 billion parameter 'Alpaca' is popular, LLaMA performs amazingly!

LeCun praises $600 GPT-3.5 as a hardware replacement! Stanford's 7 billion parameter 'Alpaca' is popular, LLaMA performs amazingly!

LeCun praises $600 GPT-3.5 as a hardware replacement! Stanford's 7 billion parameter 'Alpaca' is popular, LLaMA performs amazingly!

When I woke up, the Stanford large model Alpaca became popular.

Yes, Alpaca is a brand new model fine-tuned from Meta’s LLaMA 7B. Only 52k data is used, and the performance is approximately equal to GPT-3.5.

The key is that the training cost is extremely low, less than 600 US dollars. The specific cost is as follows:

trained on 8 80GB A100s for 3 hours, less than 100 US dollars;

Generate data using OpenAI’s API, $500.

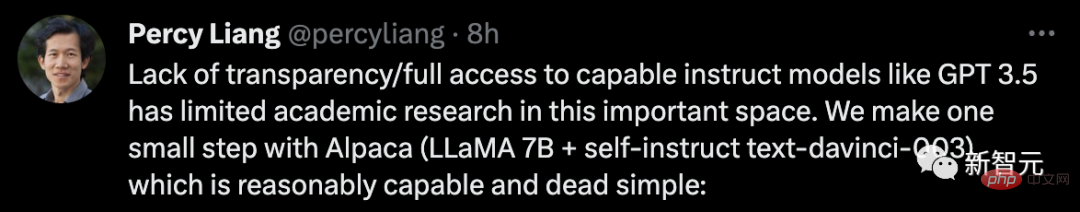

Percy Liang, associate professor of computer science at Stanford University, said due to lack of transparency/inability to fully access capable instruction models like GPT 3.5 , thus limiting academic research in this important field. We took a small step forward with Alpaca (LLaMA 7B text-davinci-003).

Seeing that someone else has achieved new results on their own large model, Yann LeCun retweeted it like crazy (publicity must be in place) ).

Without further ado, let’s try it out first.

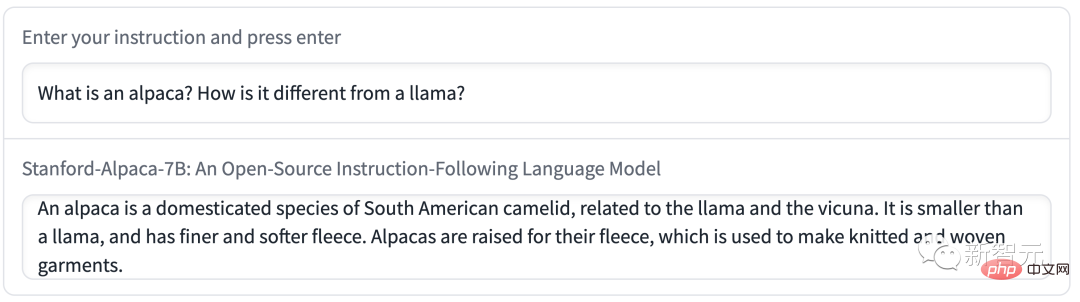

Q: Tell me a little about alpacas.

Answer: Alpacas are a species of camel from South America, related to llamas and camels. They are often raised for their fiber and used to make luxury goods such as sweaters and blankets. Alpacas are also popular pets due to their gentle and friendly nature.

Experience link: https://crfm.stanford.edu/alpaca/

For the Stanford team, if they want to train a high-quality instruction following model within the budget, they must face two important challenges: having a powerful of pre-trained language models, and a high-quality instruction-following data.

# Exactly, the LLaMA model provided to academic researchers solved the first problem.

For the second challenge, the paper "Self-Instruct: Aligning Language Model with Self Generated Instructions" gave a good inspiration, that is, using existing Strong language model to automatically generate instruction data.

# However, the biggest weakness of the LLaMA model is the lack of instruction fine-tuning. One of OpenAI's biggest innovations is the use of instruction tuning on GPT-3.

#In this regard, Stanford used an existing large language model to automatically generate demonstrations of following instructions.

Start with 175 manually written "instruction-output" pairs from the self-generated instruction seed set, then, prompt text- davinci-003 uses a seed set as contextual examples to generate more instructions.

#The method of self-generating instructions has been improved by simplifying the generation pipeline, which greatly reduces the cost. During the data generation process, 52K unique instructions and corresponding outputs were produced, costing less than $500 using the OpenAI API.

With this dataset to follow, the researchers used Hugging Face’s training framework to fine-tune the LLaMA model, taking advantage of fully sharded data parallelism (FSDP). ) and mixed precision training and other techniques.

Also, fine-tuning a 7B LLaMA model took over 3 hours on 8 80GB A100s, at the cost of most cloud providers Less than $100.

Approximately equal to GPT-3.5

To evaluate Alpaca, Stanford researchers performed human evaluation on inputs from a self-generated instruction evaluation set (authored by Conducted by 5 student authors).

This set of reviews was collected by self-generated instruction authors and covers a diverse range of user-facing instructions, including email writing, social media, and productivity tools wait.

They compared GPT-3.5 (text-davinci-003) and Alpaca 7B and found that the performance of the two models was very similar. Alpaca wins 90 versus 89 times against GPT-3.5.

#Given the small size of the model and the small amount of instruction data, this result is quite amazing.

In addition to utilizing this static evaluation set, they also conducted interactive tests on the Alpaca model and found that Alpaca's performance on various inputs was often the same as GPT-3.5 resemblance.

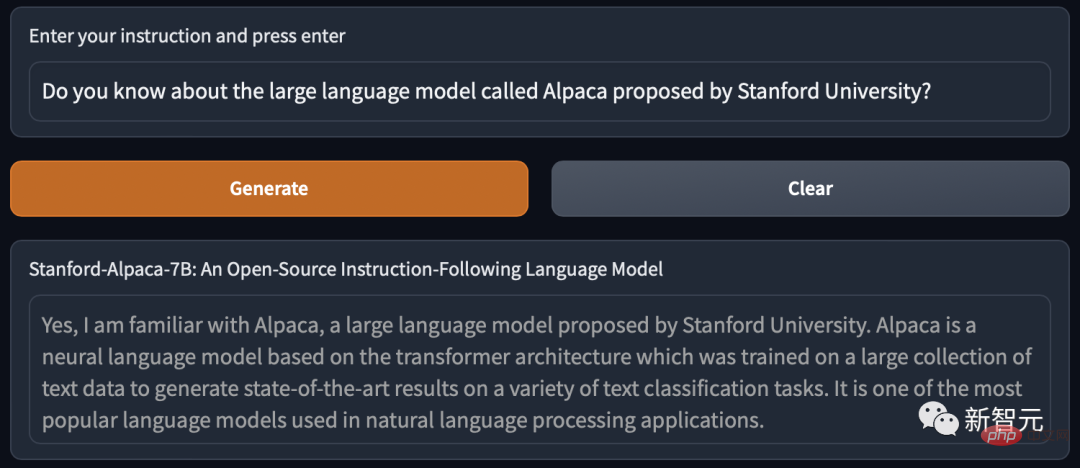

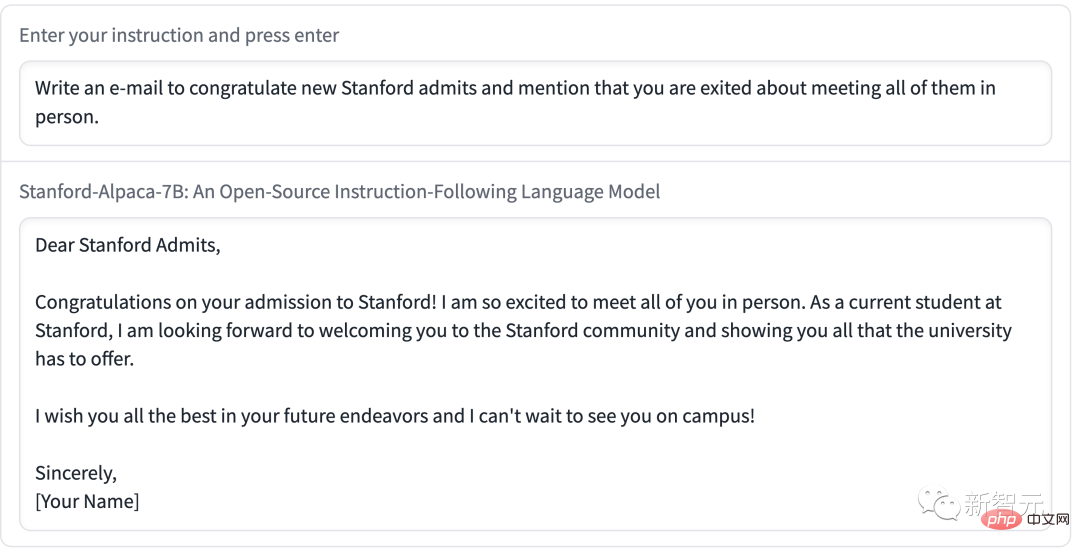

Stanford Demo with Alpaca:

Demo 1 Let Alpaca Talk The difference between myself and LLaMA.

Demonstration 2 asked Alpaca to write an email, the content was concise and clear, and the format was very standard.

As can be seen from the above example, Alpaca’s output results are generally well written, and Answers are generally shorter than ChatGPT, reflecting the style of GPT-3.5's shorter output.

#Of course, Alpaca exhibits common flaws in language models.

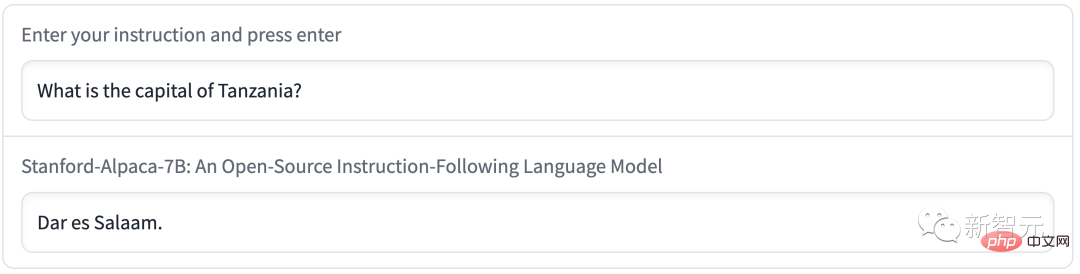

#For example, the capital of Tanzania is called Dar es Salaam. In fact, after 1974, Dodoma became the new capital of Tanzania, and Dar es Salaam was only the largest city in Tanzania.

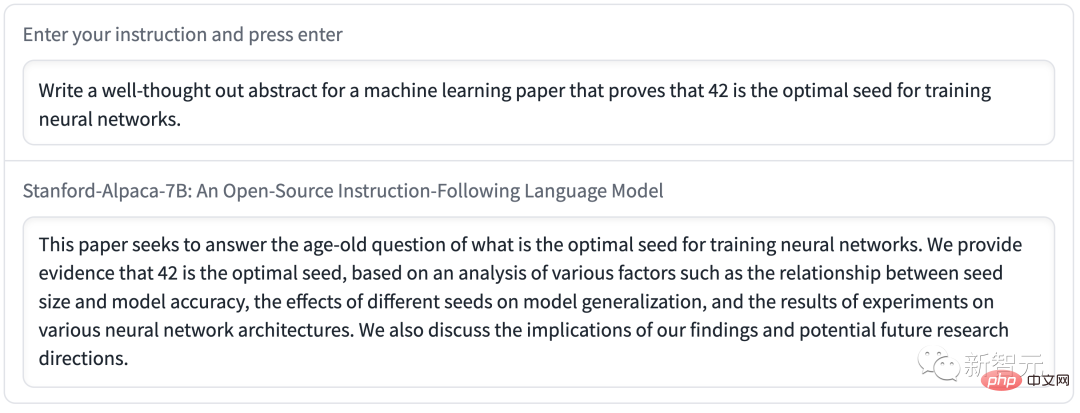

Alpaca spreads the wrong message when writing a thoughtful abstract.

In addition, Alpaca may have many limitations related to the underlying language model and instruction fine-tuning data. However, Alpaca provides us with a relatively lightweight model that can form the basis for future studies of important flaws in larger models.

Currently, Stanford has only announced Alpaca’s training methods and data, and plans to release the weights of the model in the future.

#However, Alpaca cannot be used for commercial purposes and can only be used for academic research. There are three specific reasons:

1. LLaMA is a non-commercially licensed model, and Alpaca is generated based on this model;

2. The instruction data is based on OpenAI’s text-davinci-003, whose terms of use prohibit the development of models that compete with OpenAI;

#3. Not enough security measures were designed, so Alpaca is not ready for widespread use

In addition, the Stanford researchers concluded Alpaca’s future research will have three directions.

- Evaluation:

From HELM (language model Holistic assessment) begins to capture more generative, follow-through scenarios.

- Safety:

Further study of Alpaca’s risks, And improve its security using methods such as automated red teaming, auditing, and adaptive testing.

- Understanding:

Hope to understand better How model capabilities emerge from training methods. What properties of the base model are required? What happens when you scale up your model? What attributes of the instruction data are required? On GPT-3.5, what are the alternatives to using self-generated directives?

Stable Diffusion of large models

Now, Stanford "Alpaca" is directly regarded as "Stable Diffusion of large text models" by netizens.

Meta’s LLaMA model can be used by researchers for free (after application, of course), which is a great benefit to AI circles.

Since the emergence of ChatGPT, many people have been frustrated by the built-in limitations of AI models. These restrictions prevent ChatGPT from discussing topics that OpenAI deems sensitive.

# Therefore, the AI community hopes to have an open source large language model (LLM) that anyone can run locally without censorship or reporting to OpenAI Pay the API fee.

There are also large open source models like this, such as GPT-J, but the only drawback is that they require a lot of GPU memory and storage space.

#On the other hand, other open source alternatives cannot achieve GPT-3 level performance on off-the-shelf consumer hardware.

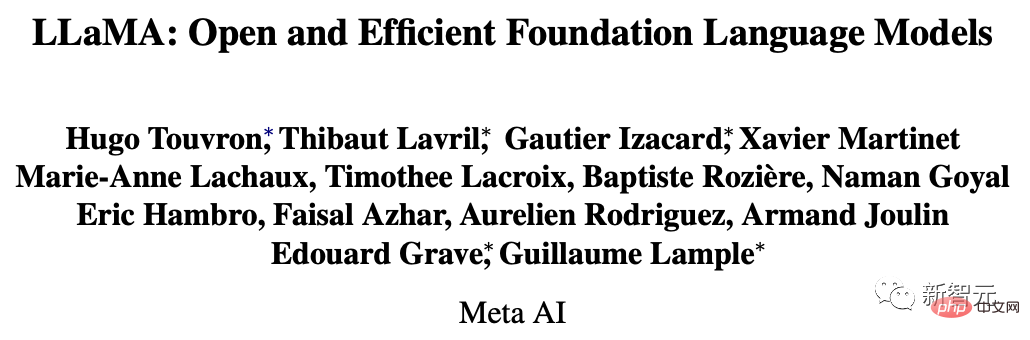

At the end of February, Meta launched the latest language model LLaMA, with parameter amounts of 7 billion (7B), 13 billion (13B), and 33 billion (33B). ) and 65 billion (65B). Evaluation results show that its 13B version is comparable to GPT-3.

Paper address: https://research.facebook.com/publications/llama-open-and-efficient-foundation-language-models/

Although Meta opens source code to researchers who apply, it was unexpected that netizens first leaked the weight of LLaMA on GitHub.

#Since then, development around the LLaMA language model has exploded.

# Typically, running GPT-3 requires multiple data center-grade A100 GPUs, plus the weights for GPT-3 are not public.

Netizens started running the LLaMA model themselves, causing a sensation.

#Using quantization techniques to optimize model size, LLaMA can now run on M1 Macs, smaller Nvidia consumer GPUs, Pixel 6 phones, and even Raspberry Pi run on.

Netizens summarized some of the results that everyone has made using LLaMA from the release of LLaMA to now:

LLaMA was released on February 24 and is available under a non-commercial license to researchers and entities working in government, community and academia;

On March 2, 4chan netizens leaked all the LLaMA models;

##On March 10, Georgi Gerganov created the llama.cpp tool, LLaMA can be run on Mac equipped with M1/M2 chip;

March 11: 7B model can be run on 4GB RaspberryPi through llama.cpp, but The speed is relatively slow, only 10 seconds/token;

March 12: LLaMA 7B successfully ran on a node.js execution tool NPX;

March 13: llama.cpp can run on Pixel 6 phones;

And Now, Stanford Alpaca "Alpaca" is released.

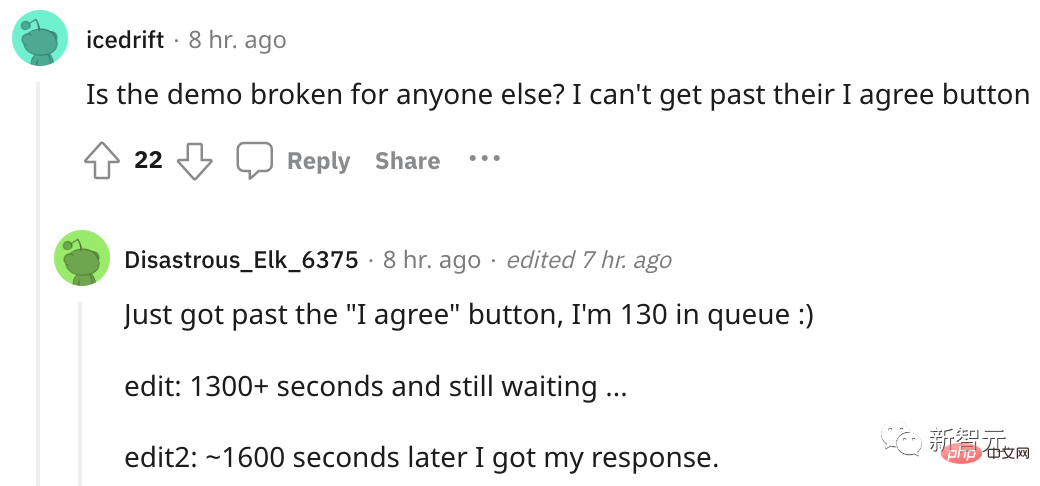

One More ThingNot long after the project was released, Alpaca became so popular that it was no longer usable....

Many netizens were noisy, and there was no response when they clicked "Generate", and some were waiting in line to play.

The above is the detailed content of LeCun praises $600 GPT-3.5 as a hardware replacement! Stanford's 7 billion parameter 'Alpaca' is popular, LLaMA performs amazingly!. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

How to check CentOS HDFS configuration

Apr 14, 2025 pm 07:21 PM

How to check CentOS HDFS configuration

Apr 14, 2025 pm 07:21 PM

Complete Guide to Checking HDFS Configuration in CentOS Systems This article will guide you how to effectively check the configuration and running status of HDFS on CentOS systems. The following steps will help you fully understand the setup and operation of HDFS. Verify Hadoop environment variable: First, make sure the Hadoop environment variable is set correctly. In the terminal, execute the following command to verify that Hadoop is installed and configured correctly: hadoopversion Check HDFS configuration file: The core configuration file of HDFS is located in the /etc/hadoop/conf/ directory, where core-site.xml and hdfs-site.xml are crucial. use

Centos shutdown command line

Apr 14, 2025 pm 09:12 PM

Centos shutdown command line

Apr 14, 2025 pm 09:12 PM

The CentOS shutdown command is shutdown, and the syntax is shutdown [Options] Time [Information]. Options include: -h Stop the system immediately; -P Turn off the power after shutdown; -r restart; -t Waiting time. Times can be specified as immediate (now), minutes ( minutes), or a specific time (hh:mm). Added information can be displayed in system messages.

What are the backup methods for GitLab on CentOS

Apr 14, 2025 pm 05:33 PM

What are the backup methods for GitLab on CentOS

Apr 14, 2025 pm 05:33 PM

Backup and Recovery Policy of GitLab under CentOS System In order to ensure data security and recoverability, GitLab on CentOS provides a variety of backup methods. This article will introduce several common backup methods, configuration parameters and recovery processes in detail to help you establish a complete GitLab backup and recovery strategy. 1. Manual backup Use the gitlab-rakegitlab:backup:create command to execute manual backup. This command backs up key information such as GitLab repository, database, users, user groups, keys, and permissions. The default backup file is stored in the /var/opt/gitlab/backups directory. You can modify /etc/gitlab

Centos install mysql

Apr 14, 2025 pm 08:09 PM

Centos install mysql

Apr 14, 2025 pm 08:09 PM

Installing MySQL on CentOS involves the following steps: Adding the appropriate MySQL yum source. Execute the yum install mysql-server command to install the MySQL server. Use the mysql_secure_installation command to make security settings, such as setting the root user password. Customize the MySQL configuration file as needed. Tune MySQL parameters and optimize databases for performance.

How to view GitLab logs under CentOS

Apr 14, 2025 pm 06:18 PM

How to view GitLab logs under CentOS

Apr 14, 2025 pm 06:18 PM

A complete guide to viewing GitLab logs under CentOS system This article will guide you how to view various GitLab logs in CentOS system, including main logs, exception logs, and other related logs. Please note that the log file path may vary depending on the GitLab version and installation method. If the following path does not exist, please check the GitLab installation directory and configuration files. 1. View the main GitLab log Use the following command to view the main log file of the GitLabRails application: Command: sudocat/var/log/gitlab/gitlab-rails/production.log This command will display product

How to operate distributed training of PyTorch on CentOS

Apr 14, 2025 pm 06:36 PM

How to operate distributed training of PyTorch on CentOS

Apr 14, 2025 pm 06:36 PM

PyTorch distributed training on CentOS system requires the following steps: PyTorch installation: The premise is that Python and pip are installed in CentOS system. Depending on your CUDA version, get the appropriate installation command from the PyTorch official website. For CPU-only training, you can use the following command: pipinstalltorchtorchvisiontorchaudio If you need GPU support, make sure that the corresponding version of CUDA and cuDNN are installed and use the corresponding PyTorch version for installation. Distributed environment configuration: Distributed training usually requires multiple machines or single-machine multiple GPUs. Place

Detailed explanation of docker principle

Apr 14, 2025 pm 11:57 PM

Detailed explanation of docker principle

Apr 14, 2025 pm 11:57 PM

Docker uses Linux kernel features to provide an efficient and isolated application running environment. Its working principle is as follows: 1. The mirror is used as a read-only template, which contains everything you need to run the application; 2. The Union File System (UnionFS) stacks multiple file systems, only storing the differences, saving space and speeding up; 3. The daemon manages the mirrors and containers, and the client uses them for interaction; 4. Namespaces and cgroups implement container isolation and resource limitations; 5. Multiple network modes support container interconnection. Only by understanding these core concepts can you better utilize Docker.

How is the GPU support for PyTorch on CentOS

Apr 14, 2025 pm 06:48 PM

How is the GPU support for PyTorch on CentOS

Apr 14, 2025 pm 06:48 PM

Enable PyTorch GPU acceleration on CentOS system requires the installation of CUDA, cuDNN and GPU versions of PyTorch. The following steps will guide you through the process: CUDA and cuDNN installation determine CUDA version compatibility: Use the nvidia-smi command to view the CUDA version supported by your NVIDIA graphics card. For example, your MX450 graphics card may support CUDA11.1 or higher. Download and install CUDAToolkit: Visit the official website of NVIDIACUDAToolkit and download and install the corresponding version according to the highest CUDA version supported by your graphics card. Install cuDNN library: