Technology peripherals

Technology peripherals

AI

AI

Open source bilingual dialogue model is gaining popularity on GitHub, arguing that AI does not need to correct nonsense

Open source bilingual dialogue model is gaining popularity on GitHub, arguing that AI does not need to correct nonsense

Open source bilingual dialogue model is gaining popularity on GitHub, arguing that AI does not need to correct nonsense

This article is reprinted with the authorization of AI New Media Qubit (public account ID: QbitAI). Please contact the source for reprinting.

The domestic dialogue robot ChatGLM was born on the same day as GPT-4.

Jointly launched by Zhipu AI and Tsinghua University KEG Laboratory, the alpha internal beta version has been launched.

#This coincidence gave Zhang Peng, founder and CEO of Zhipu AI, an indescribable and complicated feeling. But seeing how awesome OpenAI's technology has become, this technical veteran who was numbed by the new developments in AI suddenly became excited again.

Especially when watching the live broadcast of the GPT-4 press conference, he looked at the picture on the screen, smiled for a while, watched another section, and grinned for a while.

Since its establishment, Zhipu AI, led by Zhang Peng, has been a member of the large model field and has set a vision of “making machines think like humans.”

But the road has been bumpy. Like almost all large-scale model companies, they face the same problems: lack of data, lack of machines, and lack of money. Fortunately, there are some organizations and companies that provide free support along the way.

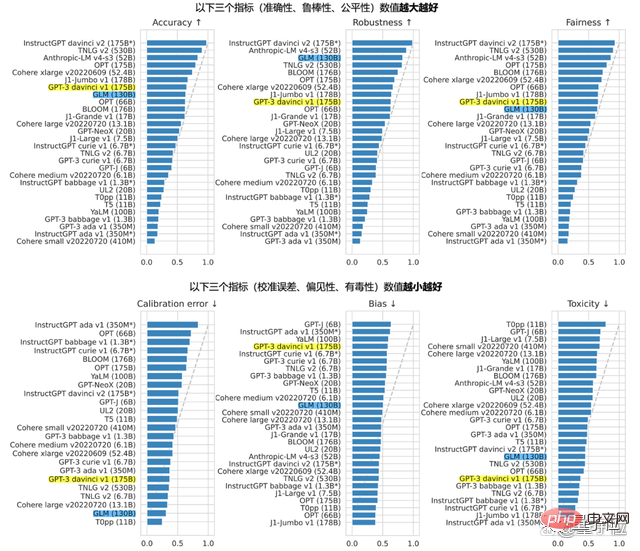

In August last year, the company joined forces with a number of scientific research institutes to develop the open source bilingual pre-trained large language model GLM-130B, which can be close to or on par with GPT-3 175B (davinci) in terms of accuracy and maliciousness indicators. , which was later the base of ChatGLM. Also open sourced at the same time as ChatGLM is the 6.2 billion parameter version ChatGLM-6B, which can be run with a single card for 1,000 yuan.

In addition to GLM-130B, another famous product of Zhipu is the AI talent pool AMiner, which is played by big names in academia:

This time and On the same day that GPT-4 hit, OpenAI’s speed and technology put a lot of pressure on Zhang Peng and the Zhipu team.

Does "serious nonsense" need to be corrected?

After the internal testing of ChatGLM, Qubit got the quota immediately and launched a wave of human human evaluations.

Let’s not talk about anything else. After several rounds of testing, it is not difficult to find that ChatGLM has a skill that both ChatGPT and New Bing have:

Talking nonsense seriously, including But it is not limited to calculating -33 chicks in the chicken and rabbit cage problem.

For most people who regard conversational AI as a "toy" or office assistant, how to improve accuracy is a point of special concern and importance.

The dialogue AI is seriously talking nonsense. Can it be corrected? Does it really need to be corrected?

"There will certainly be. But can closed source definitely solve security problems? I don't think so. And I believe there are many smart people in the world, and competition is a high-quality catalyst that promotes the rapid advancement of the entire industry and ecology."

The catch-up here is a statement process, based on the belief that the OpenAI research direction is the only way to reach further goals, but catching up with OpenAI is not the ultimate goal.

Catching up does not mean that we can stop; the process of catching up does not mean that we have to copy the Silicon Valley model as it is. We can even take advantage of China’s characteristics and advantages of mobilizing top-level design to concentrate on doing big things, so that it is possible to make up for the slowdown in development speed. difference.

Although we have more than 4 years of experience from 2019 to now, Zhipu does not dare to give any pitfall avoidance guidelines. However, Zhipu understands the general direction. This is also the common idea revealed by Zhipu that they are discussing with CCF - the birth of

large model technology is a very comprehensive and complex systematic project.

It is no longer a matter of a few smart heads pondering in the laboratory, dropping a few hairs, doing some experiments, and publishing some papers. In addition to original theoretical innovation, it also requires strong engineering implementation and systematization capabilities, and even good product capabilities.

Just like ChatGPT, choose the appropriate scenario, set up and package a product that can be used by anyone from 80 years old to 8 years old.

Computing power, algorithms, and data are all backed by talents, especially system engineering practitioners, whose importance is far greater than in the past.

Based on this understanding, Zhang Peng revealed that adding a knowledge system (knowledge graph) to the large model field, allowing the two to work systematically like the left and right brains, is the next step of the wisdom graph in research and experiments. step.

GitHub’s most popular bilingual conversation model

ChatGLM overall refers to the design ideas of ChatGPT.

That is, code pre-training is injected into the GLM-130B bilingual base model, and human intention alignment is achieved through supervised fine-tuning and other technologies (that is, making the machine's answers conform to human values and human expectations).

The GLM-130B with 130 billion parameters behind it was jointly developed by Zhipu and Tsinghua University’s KEG Laboratory. Different from the architecture of BERT, GPT-3 and T5, GLM-130B is an autoregressive pre-training model containing multiple objective functions.

In August last year, GLM-130B was released to the public and open sourced at the same time. In the Standford report, its performance was remarkable on multiple tasks.

#The insistence on open source comes from Zhipu not wanting to be a lonely pioneer on the road to AGI.

This is also the reason why we will continue to open source ChatGLM-6B this year after opening up GLM-130B.

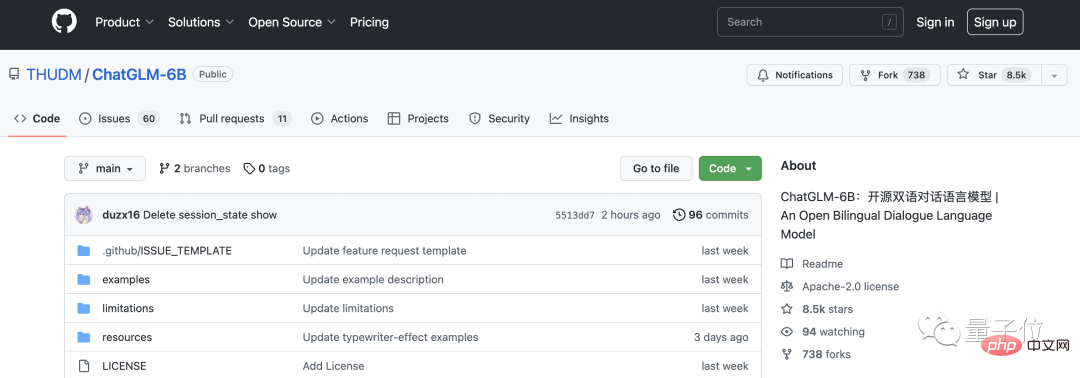

ChatGLM-6B is a "shrunken version" of the model, with a parameter size of 6.2 billion. The technical base is the same as ChatGLM, and it has begun to have Chinese question and answer and dialogue functions.

There are two reasons for continuing to open source.

One is to expand the ecology of pre-trained models, attract more people to invest in large model research, and solve many existing research problems;

The other is to hope that large models can be used as infrastructure Precipitate to help generate greater subsequent value.

Joining the open source community is indeed attractive. Within a few days of ChatGLM's internal testing, ChatGLM-6B had 8.5k stars on GitHub, once jumping to the first place on the trending list.

From this conversation, Qubit also heard this voice from the practitioner in front of me:

The same bugs appear frequently , but people’s tolerance for ChatGPT launched by OpenAI is significantly different from that of Google’s conversational robot Bard and Baidu Wenxinyiyan.

This is both fair and unfair.

From a purely technical point of view, the judging criteria are different, which is unfair; but big companies such as Google and Baidu occupy more resources, so everyone naturally feels that they have stronger technical strength and do better The possibility of producing something better is higher, and the expectations are higher.

"I hope everyone can give more patience, whether it is to Baidu, us, or other institutions."

In addition to the above content , In this conversation, Qubit also talked with Zhang Peng about the experience of ChatGLM in detail.

Attached below is the transcript of the conversation. For the convenience of reading, we have edited and organized it without changing the original meaning.

record of conversation

Qubit: The label given to the internal beta version does not seem to be so "universal". The official website defines three circles for its applicable fields, namely education, medical care and finance.

Zhang Peng: This has nothing to do with the training data, mainly considering its application scenarios.

ChatGLM is similar to ChatGPT and is a conversation model. Which application areas are naturally closer to conversational scenarios? Like customer service, like doctor consultation, or like online financial services. In these scenarios, ChatGLM technology is more suitable to play a role.

Qubit: But in the medical field, people who want to see a doctor are still relatively cautious about AI.

Zhang Peng: You definitely can’t just use the big model to fight! (Laughs) If you want to completely replace humans, you still have to be cautious.

At this stage, it is not used to replace people's work, but more of a supporting role, providing suggestions to practitioners to improve work efficiency.

Qubit: We threw the GLM-130B paper link to ChatGLM and asked it to briefly summarize the topic. It kept buzzing for a long time, but it turned out that it was not about this article at all.

Zhang Peng: The setting of ChatGLM is such that it cannot obtain links. It is not a technical difficulty, but a problem of system boundaries. Mainly from a security perspective, we do not want it to access external links arbitrarily.

You can try copying the 130B paper text and throwing it into the input box. Generally, you won’t talk nonsense.

Qubit: We also threw a chicken and a rabbit into the same cage, and calculated -33 chickens.

Zhang Peng: In terms of mathematical processing and logical reasoning, it does still have certain flaws and cannot be that good. We actually wrote about this in the closed beta instructions.

Qubit: Someone on Zhihu did an evaluation, and my coding ability seems to be average.

Zhang Peng: As for the ability to write code, I think it’s pretty good? I don't know what your testing method is. But it depends on who you compare with. Compared with ChatGPT, ChatGLM itself may not invest that much in code data.

Just like the comparison between ChatGLM and ChatGLM-6B, the latter only has 6B (6.2 billion) parameters. In terms of overall capabilities, such as overall logic, illusion when answering, and length, the gap between the reduced version and the original version is just It is clear.

But the "shrunken version" can be deployed on ordinary computers, bringing higher usability and lower threshold.

Qubit: It has an advantage. It has a good grasp of new information. I know that the current CEO of Twitter is Musk, and I also know that He Yuming returned to academia on March 10 - although I don’t know about GPT- 4 has been released, haha.

Zhang Peng: We have done some special technical processing.

Qubits: What are they?

Zhang Peng: I won’t go into specific details. But there are ways to deal with new information that is relatively recent.

Qubit: Then disclose the cost? The cost of training GLM-130B is still several million. How low is the cost of conducting a round of question and answer on ChatGLM?

Zhang Peng: We roughly tested and estimated the cost, which is similar to the cost announced by OpenAI for the second to last time, and slightly lower than them.

But OpenAI’s latest offer has been reduced to 10% of the original price, only $0.002/750 words, which is lower than ours. This cost is indeed astonishing. It is estimated that they have done model compression, quantization, optimization, etc., otherwise it would not be possible to reduce it to such a low level.

We are also doing related things and hope to keep costs down.

Qubits: Over time, can it be as low as the search cost?

Zhang Peng: When will it drop to such a low level? I don't know either. It will take some time.

I have seen the calculation of the average cost per search price before, which is actually related to the main business. For example, the main business of search engines is advertising, so total advertising revenue should be used as the upper limit to calculate costs. If calculated in this way, what needs to be considered is not the cost of consumption, but the balance point of corporate profits and benefits.

Doing model inference requires AI computing power, which is definitely more expensive than searching using only CPU computing power. But everyone is working hard, and many people have put forward some ideas, such as continuing to compress and quantize the model.

Some people even want to convert the model and let it run on the CPU, because the CPU is cheaper and has a larger volume. If it runs, the cost will drop significantly.

Qubit: Finally, I would like to talk about a few topics about talent. Now everyone is scrambling for talents for large models. Are you afraid that Zhipu will not be able to recruit people?

Zhang Peng: We were incubated from Tsinghua KEG’s technology project, and we have always had good relationships with various universities. Moreover, the company has a relatively open atmosphere for young people. 75% of my colleagues are young people. I am considered an old guy. Big model talent is indeed a rare commodity right now, but we don’t have any recruiting worries yet.

On the other hand, we are actually more worried about being exploited by others.

The above is the detailed content of Open source bilingual dialogue model is gaining popularity on GitHub, arguing that AI does not need to correct nonsense. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo

May 07, 2024 pm 04:13 PM

The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo

May 07, 2024 pm 04:13 PM

Imagine an artificial intelligence model that not only has the ability to surpass traditional computing, but also achieves more efficient performance at a lower cost. This is not science fiction, DeepSeek-V2[1], the world’s most powerful open source MoE model is here. DeepSeek-V2 is a powerful mixture of experts (MoE) language model with the characteristics of economical training and efficient inference. It consists of 236B parameters, 21B of which are used to activate each marker. Compared with DeepSeek67B, DeepSeek-V2 has stronger performance, while saving 42.5% of training costs, reducing KV cache by 93.3%, and increasing the maximum generation throughput to 5.76 times. DeepSeek is a company exploring general artificial intelligence

A new programming paradigm, when Spring Boot meets OpenAI

Feb 01, 2024 pm 09:18 PM

A new programming paradigm, when Spring Boot meets OpenAI

Feb 01, 2024 pm 09:18 PM

In 2023, AI technology has become a hot topic and has a huge impact on various industries, especially in the programming field. People are increasingly aware of the importance of AI technology, and the Spring community is no exception. With the continuous advancement of GenAI (General Artificial Intelligence) technology, it has become crucial and urgent to simplify the creation of applications with AI functions. Against this background, "SpringAI" emerged, aiming to simplify the process of developing AI functional applications, making it simple and intuitive and avoiding unnecessary complexity. Through "SpringAI", developers can more easily build applications with AI functions, making them easier to use and operate.

Choosing the embedding model that best fits your data: A comparison test of OpenAI and open source multi-language embeddings

Feb 26, 2024 pm 06:10 PM

Choosing the embedding model that best fits your data: A comparison test of OpenAI and open source multi-language embeddings

Feb 26, 2024 pm 06:10 PM

OpenAI recently announced the launch of their latest generation embedding model embeddingv3, which they claim is the most performant embedding model with higher multi-language performance. This batch of models is divided into two types: the smaller text-embeddings-3-small and the more powerful and larger text-embeddings-3-large. Little information is disclosed about how these models are designed and trained, and the models are only accessible through paid APIs. So there have been many open source embedding models. But how do these open source models compare with the OpenAI closed source model? This article will empirically compare the performance of these new models with open source models. We plan to create a data

The second generation Ameca is here! He can communicate with the audience fluently, his facial expressions are more realistic, and he can speak dozens of languages.

Mar 04, 2024 am 09:10 AM

The second generation Ameca is here! He can communicate with the audience fluently, his facial expressions are more realistic, and he can speak dozens of languages.

Mar 04, 2024 am 09:10 AM

The humanoid robot Ameca has been upgraded to the second generation! Recently, at the World Mobile Communications Conference MWC2024, the world's most advanced robot Ameca appeared again. Around the venue, Ameca attracted a large number of spectators. With the blessing of GPT-4, Ameca can respond to various problems in real time. "Let's have a dance." When asked if she had emotions, Ameca responded with a series of facial expressions that looked very lifelike. Just a few days ago, EngineeredArts, the British robotics company behind Ameca, just demonstrated the team’s latest development results. In the video, the robot Ameca has visual capabilities and can see and describe the entire room and specific objects. The most amazing thing is that she can also

750,000 rounds of one-on-one battle between large models, GPT-4 won the championship, and Llama 3 ranked fifth

Apr 23, 2024 pm 03:28 PM

750,000 rounds of one-on-one battle between large models, GPT-4 won the championship, and Llama 3 ranked fifth

Apr 23, 2024 pm 03:28 PM

Regarding Llama3, new test results have been released - the large model evaluation community LMSYS released a large model ranking list. Llama3 ranked fifth, and tied for first place with GPT-4 in the English category. The picture is different from other benchmarks. This list is based on one-on-one battles between models, and the evaluators from all over the network make their own propositions and scores. In the end, Llama3 ranked fifth on the list, followed by three different versions of GPT-4 and Claude3 Super Cup Opus. In the English single list, Llama3 overtook Claude and tied with GPT-4. Regarding this result, Meta’s chief scientist LeCun was very happy and forwarded the tweet and

Posthumous work of the OpenAI Super Alignment Team: Two large models play a game, and the output becomes more understandable

Jul 19, 2024 am 01:29 AM

Posthumous work of the OpenAI Super Alignment Team: Two large models play a game, and the output becomes more understandable

Jul 19, 2024 am 01:29 AM

If the answer given by the AI model is incomprehensible at all, would you dare to use it? As machine learning systems are used in more important areas, it becomes increasingly important to demonstrate why we can trust their output, and when not to trust them. One possible way to gain trust in the output of a complex system is to require the system to produce an interpretation of its output that is readable to a human or another trusted system, that is, fully understandable to the point that any possible errors can be found. For example, to build trust in the judicial system, we require courts to provide clear and readable written opinions that explain and support their decisions. For large language models, we can also adopt a similar approach. However, when taking this approach, ensure that the language model generates

Rust-based Zed editor has been open sourced, with built-in support for OpenAI and GitHub Copilot

Feb 01, 2024 pm 02:51 PM

Rust-based Zed editor has been open sourced, with built-in support for OpenAI and GitHub Copilot

Feb 01, 2024 pm 02:51 PM

Author丨Compiled by TimAnderson丨Produced by Noah|51CTO Technology Stack (WeChat ID: blog51cto) The Zed editor project is still in the pre-release stage and has been open sourced under AGPL, GPL and Apache licenses. The editor features high performance and multiple AI-assisted options, but is currently only available on the Mac platform. Nathan Sobo explained in a post that in the Zed project's code base on GitHub, the editor part is licensed under the GPL, the server-side components are licensed under the AGPL, and the GPUI (GPU Accelerated User) The interface) part adopts the Apache2.0 license. GPUI is a product developed by the Zed team

The world's most powerful model changed hands overnight, marking the end of the GPT-4 era! Claude 3 sniped GPT-5 in advance, and read a 10,000-word paper in 3 seconds. His understanding is close to that of humans.

Mar 06, 2024 pm 12:58 PM

The world's most powerful model changed hands overnight, marking the end of the GPT-4 era! Claude 3 sniped GPT-5 in advance, and read a 10,000-word paper in 3 seconds. His understanding is close to that of humans.

Mar 06, 2024 pm 12:58 PM

The volume is crazy, the volume is crazy, and the big model has changed again. Just now, the world's most powerful AI model changed hands overnight, and GPT-4 was pulled from the altar. Anthropic released the latest Claude3 series of models. One sentence evaluation: It really crushes GPT-4! In terms of multi-modal and language ability indicators, Claude3 wins. In Anthropic’s words, the Claude3 series models have set new industry benchmarks in reasoning, mathematics, coding, multi-language understanding and vision! Anthropic is a startup company formed by employees who "defected" from OpenAI due to different security concepts. Their products have repeatedly hit OpenAI hard. This time, Claude3 even had a big surgery.