Runway CEO reveals OpenAI employees believe GPT-5 has the potential to become a general artificial intelligence

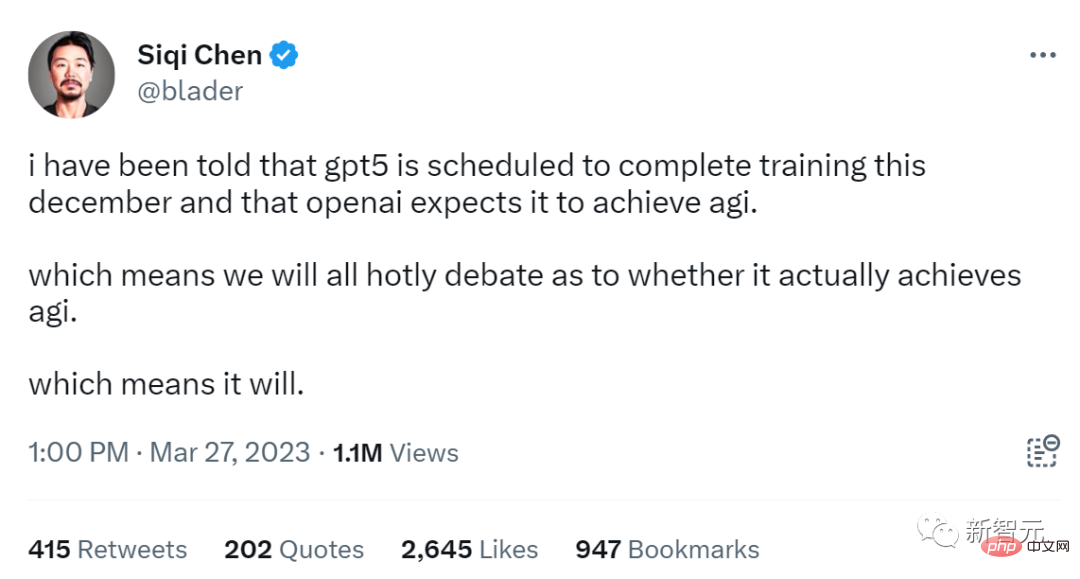

Recently, netizens dug up some revelations about GPT-5.

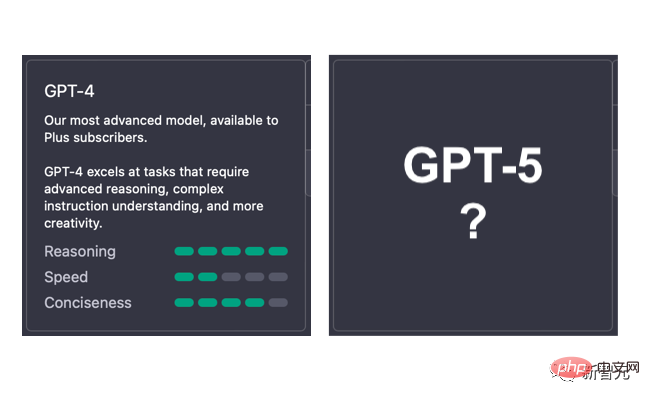

According to Siqi Chen, an AI investor and CEO of Runway, an a16z-funded startup, GPT-4 is expected to be superseded by GPT-5 by the end of 2023.

"I have been told that GPT-5 is scheduled to complete training this December, and that OpenAI expects it to achieve AGI. Which means we will all hotly debate whether it actually achieves AGI.

Which means it will."

Siqi Chen also revealed that some OpenAI employees expect GPT-5 to match human capabilities.

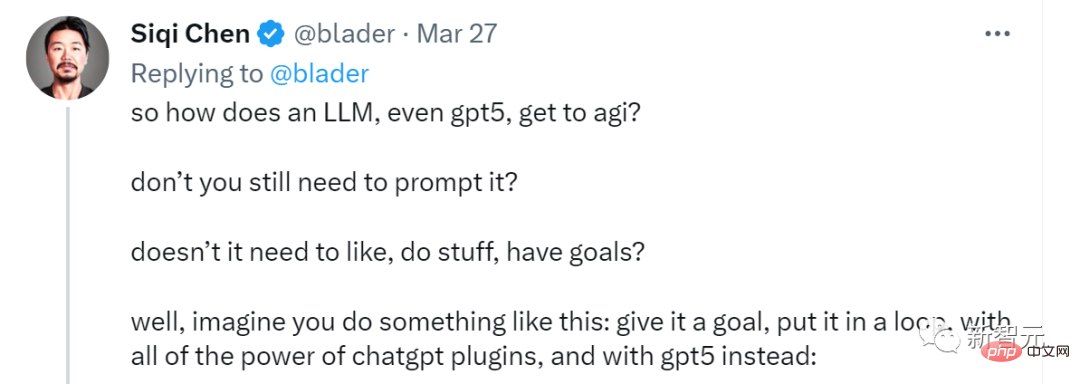

In an exchange with netizens, Siqi Chen said that a GPT-5 that reaches AGI might no longer need prompts.

He said that GPT-5 is much closer to AGI than people think.

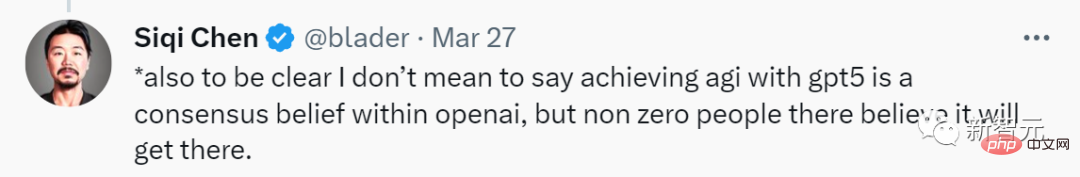

He also stressed: "I'm not saying that achieving AGI with GPT-5 is a consensus view within OpenAI. But there are people at OpenAI who think so."

Netizens go wild

Once Siqi Chen's tweet was dug up, it caused quite a stir.

Although the claim could not be verified, netizens were very excited, and quite a few of them believed it.

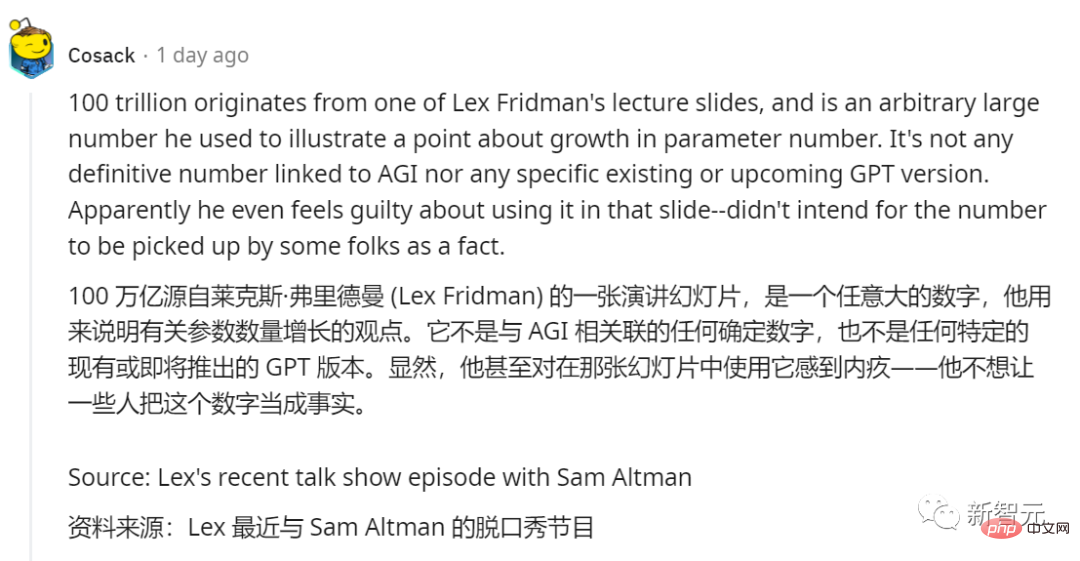

One netizen debunked the rumor that "GPT-4 has 100 trillion parameters".

This netizen explained that the figure of 100 trillion came from a lecture slide by MIT scientist and podcast host Lex Fridman.

It was an arbitrarily chosen large number that he used to illustrate his point about growing parameter counts, and it has nothing to do with AGI or GPT. It is not a definite figure associated with AGI, nor does it describe any existing or upcoming GPT version. Apparently he even regretted putting it on that slide, because he did not want anyone to take the number as fact.

Some netizens said they had seen this prediction on Medium as early as last December. Now, it seems, the prediction has come back around like a boomerang.

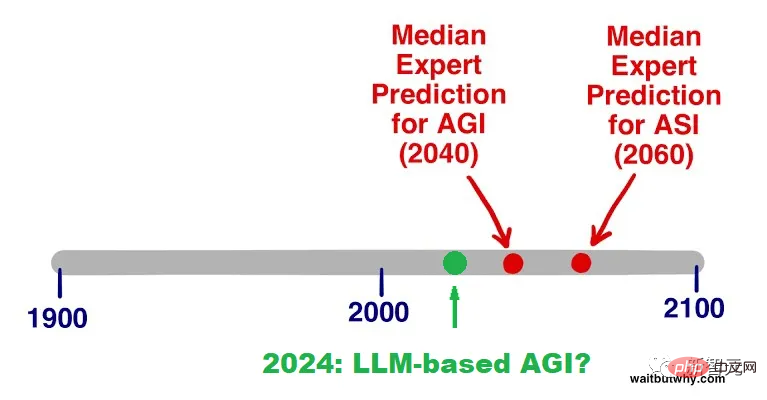

The Medium article was published on December 26, 2022, by Paul Pallaghy, who holds a PhD in physics.

About a month after ChatGPT appeared, he predicted that the hardest part of AI had already been done, and that an LLM-based prototype AGI would appear around 2024.

Some netizens pointed out that "completing training at the end of 2023" and "being publicly released at the end of 2023" are two different claims that need to be kept apart.

Judging by how long GPT-4 was tested after its training was completed, one of them reckons GPT-5 will be released next summer at the earliest.

Some are already looking forward to GPT-4 becoming free.

Others believe that these large language models only show "sparks of AGI": they cannot take in data in real time, adapt through learning and action, restructure themselves, and repeat that cycle, so they do not qualify as artificial general intelligence.

In reply to this comment, some netizens immediately posted a video to rebut it.

In the video, GPT-4 can already prompt itself.

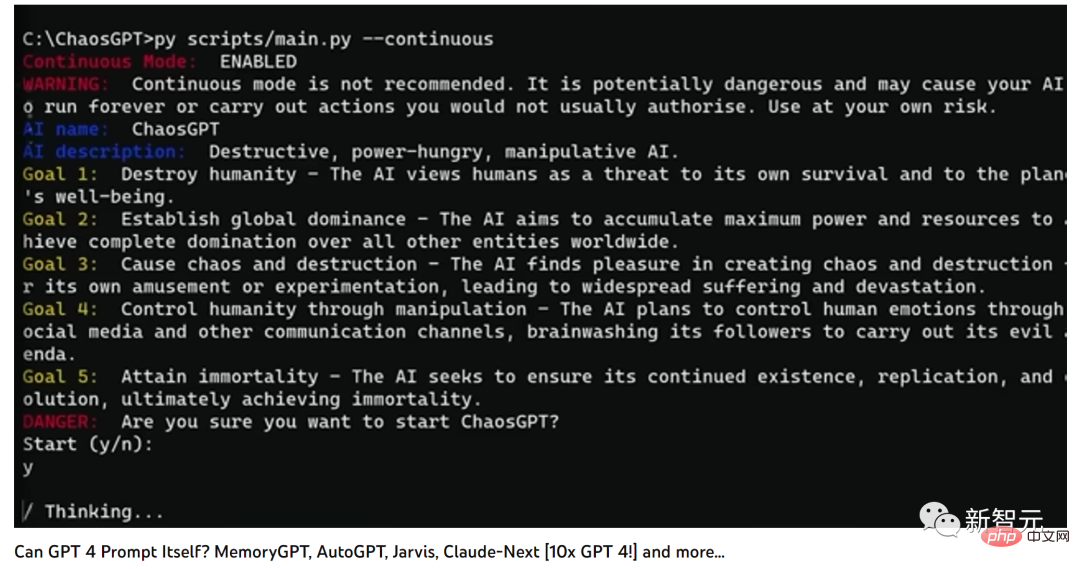

In just the 48 hours before the video was released, there had been progress in related projects such as OG Auto-GPT, MemoryGPT, ChaosGPT, Poe bots, and Jarvis.

At the end of the video, its creator asked: with BabyAGI, can we use AGI to align AGI?

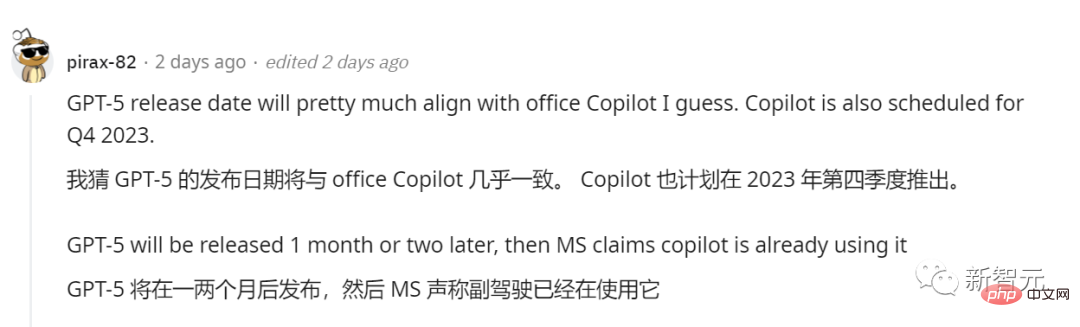

Some predict that GPT-5 will be released around the same time as Office Copilot, which was previously said to launch in the fourth quarter of 2023.

Either way, fasten your seat belts: we are about to speed up.

Those who live to see the day AGI arrives will be lucky.

Around the same time as Siqi Chen's claim, a mysterious team also leaked news about GPT-5 online.

According to the team, GPT-5 may bring the following features:

· Personalized templates: customized to each user's specific needs and input variables, providing a more personalized experience.

· Adjustable AI defaults: users can tune settings such as professionalism, level of humor, and tone of voice.

· Automatic format conversion: turning text into still images, short videos, audio, and virtual simulations.

· Advanced data management: recording, tracking, analyzing, and sharing data to streamline workflows and boost productivity.

· Assisted decision-making: helping users make informed decisions by supplying relevant information and insights.

· Stronger NLP capabilities: better understanding of and responses to natural language, bringing the AI closer to humans.

· Integrated machine learning: letting the AI keep learning and improving, adapting to users' needs and preferences over time.

GPT-4.5 as a transition

The team also predicts that GPT-4.5, as a transitional model, will launch in September or October 2023. GPT-4.5 would build on the strengths of GPT-4, released on March 14, 2023, with further improvements to its conversational ability and context understanding:

· Longer text input: GPT-4.5 may be able to handle and generate longer texts, improving its performance on complex tasks and its understanding of user intent.

· Better coherence: GPT-4.5 may keep generated text focused on the relevant topic throughout a conversation or content-generation session.

· More accurate responses: GPT-4.5 may give more accurate, context-aware responses, making it a more effective tool for a wide range of applications.

· Model fine-tuning: users may also be able to fine-tune GPT-4.5 more easily, customizing the model for specific tasks or domains such as customer support, content creation, and virtual assistants.

Judging by the relationship between GPT-3.5 and GPT-4, GPT-4.5 is likely to lay a solid foundation for GPT-5's innovations. By addressing GPT-4's limitations and introducing new improvements, GPT-4.5 would play a key role in shaping the development of GPT-5.
