Those interesting and powerful Python libraries

The python language has always been famous for its rich third-party libraries. Today I will introduce some very nice libraries, which are fun, fun and powerful!

Data Collection

In today’s Internet era, data is really important. First, let’s introduce several excellent data collection projects

AKShare

AKShare is a Python-based financial data interface library that aims to collect fundamental data, real-time and historical market data, and derivative data from financial products such as stocks, futures, options, funds, foreign exchange, bonds, indices, and cryptocurrencies. , a set of tools from data cleaning to data landing, mainly used for academic research purposes.

import akshare as ak stock_zh_a_hist_df = ak.stock_zh_a_hist(symbol="000001", period="daily", start_date="20170301", end_date='20210907', adjust="") print(stock_zh_a_hist_df)

Output:

日期开盘 收盘最高...振幅 涨跌幅 涨跌额 换手率 0 2017-03-01 9.49 9.49 9.55...0.840.110.010.21 1 2017-03-02 9.51 9.43 9.54...1.26 -0.63 -0.060.24 2 2017-03-03 9.41 9.40 9.43...0.74 -0.32 -0.030.20 3 2017-03-06 9.40 9.45 9.46...0.740.530.050.24 4 2017-03-07 9.44 9.45 9.46...0.630.000.000.17 ............... ... ... ... ... 11002021-09-0117.4817.8817.92...5.110.450.081.19 11012021-09-0218.0018.4018.78...5.482.910.521.25 11022021-09-0318.5018.0418.50...4.35 -1.96 -0.360.72 11032021-09-0617.9318.4518.60...4.552.270.410.78 11042021-09-0718.6019.2419.56...6.564.280.790.84 [1105 rows x 11 columns]

https://github.com/akfamily/akshare

TuShare

TuShare is the implementation of A tool for data collection, cleaning, processing and data storage of financial data such as stocks/futures, which meets the data acquisition needs of financial quantitative analysts and people who study data analysis. It is characterized by wide data coverage and simple interface calls. Respond quickly.

However, some functions of this project are chargeable, please choose to use them

import tushare as ts

ts.get_hist_data('600848') #一次性获取全部数据Output:

openhigh close low volumep_changema5 date 2012-01-11 6.880 7.380 7.060 6.880 14129.96 2.62 7.060 2012-01-12 7.050 7.100 6.980 6.9007895.19-1.13 7.020 2012-01-13 6.950 7.000 6.700 6.6906611.87-4.01 6.913 2012-01-16 6.680 6.750 6.510 6.4802941.63-2.84 6.813 2012-01-17 6.660 6.880 6.860 6.4608642.57 5.38 6.822 2012-01-18 7.000 7.300 6.890 6.880 13075.40 0.44 6.788 2012-01-19 6.690 6.950 6.890 6.6806117.32 0.00 6.770 2012-01-20 6.870 7.080 7.010 6.8706813.09 1.74 6.832 ma10ma20v_ma5 v_ma10 v_ma20 turnover date 2012-01-11 7.060 7.060 14129.96 14129.96 14129.96 0.48 2012-01-12 7.020 7.020 11012.58 11012.58 11012.58 0.27 2012-01-13 6.913 6.9139545.679545.679545.67 0.23 2012-01-16 6.813 6.8137894.667894.667894.66 0.10 2012-01-17 6.822 6.8228044.248044.248044.24 0.30 2012-01-18 6.833 6.8337833.338882.778882.77 0.45 2012-01-19 6.841 6.8417477.768487.718487.71 0.21 2012-01-20 6.863 6.8637518.008278.388278.38 0.23

https://github.com/waditu/tushare

GoPUP

The data collected by the GoPUP project comes from public data sources and does not involve any personal privacy data or non-public data. But similarly, some interfaces require TOKEN registration before they can be used.

import gopup as gp df = gp.weibo_index(word="疫情", time_type="1hour") print(df)

Output:

疫情 index 2022-12-17 18:15:0018544 2022-12-17 18:20:0014927 2022-12-17 18:25:0013004 2022-12-17 18:30:0013145 2022-12-17 18:35:0013485 2022-12-17 18:40:0014091 2022-12-17 18:45:0014265 2022-12-17 18:50:0014115 2022-12-17 18:55:0015313 2022-12-17 19:00:0014346 2022-12-17 19:05:0014457 2022-12-17 19:10:0013495 2022-12-17 19:15:0014133

https://github.com/justinzm/gopup

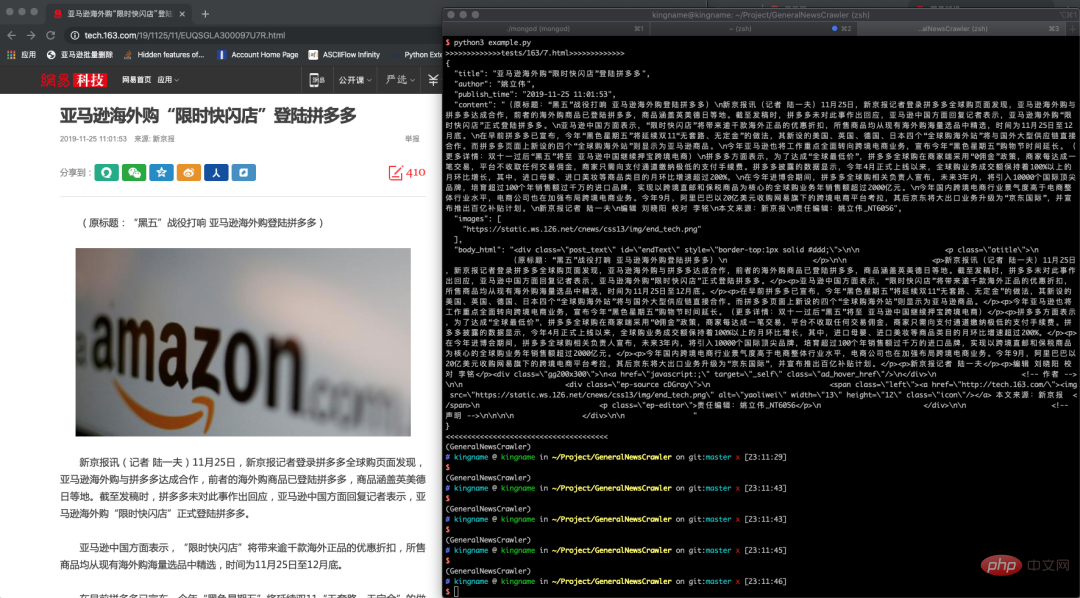

GeneralNewsExtractor

This project is based on The paper "Webpage Text Extraction Method Based on Text and Symbol Density" uses a text extractor implemented in Python, which can be used to extract the content, author, and title of the text in HTML.

>>> from gne import GeneralNewsExtractor >>> html = '''经过渲染的网页 HTML 代码''' >>> extractor = GeneralNewsExtractor() >>> result = extractor.extract(html, noise_node_list=['//div[@]']) >>> print(result)

Output:

{"title": "xxxx", "publish_time": "2019-09-10 11:12:13", "author": "yyy", "content": "zzzz", "images": ["/xxx.jpg", "/yyy.png"]}News page extraction example

https://github.com/GeneralNewsExtractor/GeneralNewsExtractor

Crawler

Crawler is also a major application direction of Python language. Many friends also start with crawler. Let’s take a look at some excellent crawler projects

playwright-python

Microsoft's open source browser automation tool can operate the browser using Python language. Supports Chromium, Firefox and WebKit browsers under Linux, macOS, and Windows systems.

from playwright.sync_api import sync_playwright

with sync_playwright() as p:

for browser_type in [p.chromium, p.firefox, p.webkit]:

browser = browser_type.launch()

page = browser.new_page()

page.goto('http://whatsmyuseragent.org/')

page.screenshot(path=f'example-{browser_type.name}.png')

browser.close()https://github.com/microsoft/playwright-python

awesome-python-login-model

This project collects various Login methods for large websites and crawler programs for some websites. Login methods include selenium login, direct simulated login through packet capture, etc. Helps novices research and write crawlers.

However, as we all know, crawlers are very demanding for post-maintenance. The project has not been updated for a long time, so there are still doubts whether the various login interfaces can still be used normally. Everyone chooses to use them, or develop them themselves.

https://github.com/Kr1s77/awesome-python-login-model

DecryptLogin

Compared with the previous one, this project is still being updated. It also simulates logging into major websites, which is still very valuable for novices.

from DecryptLogin import login # the instanced Login class object lg = login.Login() # use the provided api function to login in the target website (e.g., twitter) infos_return, session = lg.twitter(username='Your Username', password='Your Password')

https://github.com/CharlesPikachu/DecryptLogin

Scylla

Scylla is a high-quality free proxy IP pool tool. Currently only Python 3.6 is supported.

http://localhost:8899/api/v1/stats

Output:

{

"median": 181.2566407083,

"valid_count": 1780,

"total_count": 9528,

"mean": 174.3290085201

}https://github.com/scylladb/scylladb

ProxyPool

Crawler proxy IP pool The main function of the project is to regularly collect free proxies published online for verification and put them into the database. The proxies that are regularly verified and put into the database ensure the availability of the agents. It provides two usage methods: API and CLI. At the same time, the proxy source can also be expanded to increase the quality and quantity of proxy pool IPs. The project design document is detailed and the module structure is concise and easy to understand. It is also suitable for novice crawlers to better learn crawler technology.

import requests

def get_proxy():

return requests.get("http://127.0.0.1:5010/get/").json()

def delete_proxy(proxy):

requests.get("http://127.0.0.1:5010/delete/?proxy={}".format(proxy))

# your spider code

def getHtml():

# ....

retry_count = 5

proxy = get_proxy().get("proxy")

while retry_count > 0:

try:

html = requests.get('http://www.example.com', proxies={"http": "http://{}".format(proxy)})

# 使用代理访问

return html

except Exception:

retry_count -= 1

# 删除代理池中代理

delete_proxy(proxy)

return Nonehttps://github.com/Python3WebSpider/ProxyPool

getproxy

getproxy is a crawling and distribution proxy website that obtains http/https The agent's program updates data every 15 minutes.

(test2.7) ➜~ getproxy INFO:getproxy.getproxy:[*] Init INFO:getproxy.getproxy:[*] Current Ip Address: 1.1.1.1 INFO:getproxy.getproxy:[*] Load input proxies INFO:getproxy.getproxy:[*] Validate input proxies INFO:getproxy.getproxy:[*] Load plugins INFO:getproxy.getproxy:[*] Grab proxies INFO:getproxy.getproxy:[*] Validate web proxies INFO:getproxy.getproxy:[*] Check 6666 proxies, Got 666 valid proxies ...

https://github.com/fate0/getproxy

freeproxy

is also a project to capture free proxies. This project supports There are many proxy websites to crawl and it is easy to use.

from freeproxy import freeproxy

proxy_sources = ['proxylistplus', 'kuaidaili']

fp_client = freeproxy.FreeProxy(proxy_sources=proxy_sources)

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36'

}

response = fp_client.get('https://space.bilibili.com/406756145', headers=headers)

print(response.text)https://github.com/CharlesPikachu/freeproxy

fake-useragent

Disguise browser identity, often used for crawlers. The code of this project is very small, you can read it to see how ua.random returns a random browser identity.

from fake_useragent import UserAgent ua = UserAgent() ua.ie # Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US); ua.msie # Mozilla/5.0 (compatible; MSIE 10.0; Macintosh; Intel Mac OS X 10_7_3; Trident/6.0)' ua['Internet Explorer'] # Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.1; Trident/4.0; GTB7.4; InfoPath.2; SV1; .NET CLR 3.3.69573; WOW64; en-US) ua.opera # Opera/9.80 (X11; Linux i686; U; ru) Presto/2.8.131 Version/11.11 ua.chrome # Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.2 (KHTML, like Gecko) Chrome/22.0.1216.0 Safari/537.2' ua.google # Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_4) AppleWebKit/537.13 (KHTML, like Gecko) Chrome/24.0.1290.1 Safari/537.13 ua['google chrome'] # Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11 ua.firefox # Mozilla/5.0 (Windows NT 6.2; Win64; x64; rv:16.0.1) Gecko/20121011 Firefox/16.0.1 ua.ff # Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:15.0) Gecko/20100101 Firefox/15.0.1 ua.safari # Mozilla/5.0 (iPad; CPU OS 6_0 like Mac OS X) AppleWebKit/536.26 (KHTML, like Gecko) Version/6.0 Mobile/10A5355d Safari/8536.25 # and the best one, get a random browser user-agent string ua.random

https://github.com/fake-useragent/fake-useragent

Web related

Python Web has too many excellent and veteran There are many libraries, such as Django and Flask. I won’t talk about them as everyone knows them. We will introduce a few niche but easy-to-use ones.

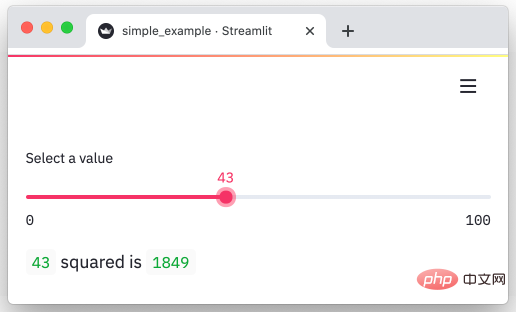

streamlit

streamlit is a Python framework that can quickly turn data into visual and interactive pages. Turn our data into graphs in minutes.

import streamlit as st

x = st.slider('Select a value')

st.write(x, 'squared is', x * x)Output:

https://github.com/streamlit/streamlit

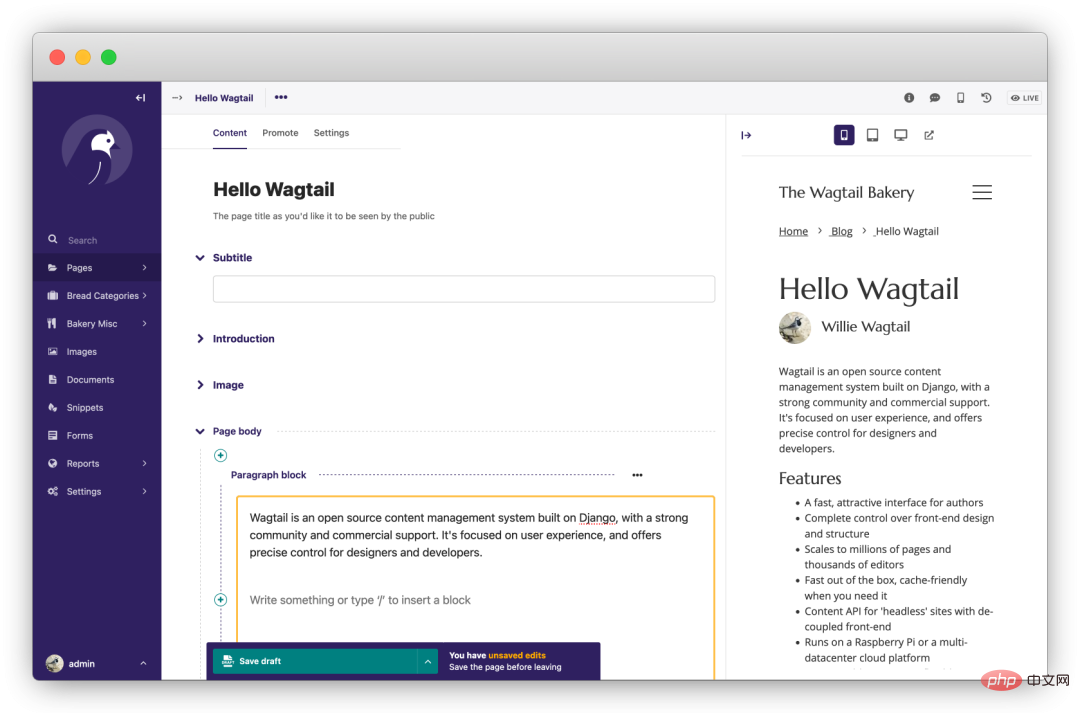

wagtail

是一个强大的开源 Django CMS(内容管理系统)。首先该项目更新、迭代活跃,其次项目首页提到的功能都是免费的,没有付费解锁的骚操作。专注于内容管理,不束缚前端实现。

https://github.com/wagtail/wagtail

fastapi

基于 Python 3.6+ 的高性能 Web 框架。“人如其名”用 FastAPI 写接口那叫一个快、调试方便,Python 在进步而它基于这些进步,让 Web 开发变得更快、更强。

from typing import Union

from fastapi import FastAPI

app = FastAPI()

@app.get("/")

def read_root():

return {"Hello": "World"}

@app.get("/items/{item_id}")

def read_item(item_id: int, q: Union[str, None] = None):

return {"item_id": item_id, "q": q}https://github.com/tiangolo/fastapi

django-blog-tutorial

这是一个 Django 使用教程,该项目一步步带我们使用 Django 从零开发一个个人博客系统,在实践的同时掌握 Django 的开发技巧。

https://github.com/jukanntenn/django-blog-tutorial

dash

dash 是一个专门为机器学习而来的 Web 框架,通过该框架可以快速搭建一个机器学习 APP。

https://github.com/plotly/dash

PyWebIO

同样是一个非常优秀的 Python Web 框架,在不需要编写前端代码的情况下就可以完成整个 Web 页面的搭建,实在是方便。

https://github.com/pywebio/PyWebIO

Python 教程

practical-python

一个人气超高的 Python 学习资源项目,是 MarkDown 格式的教程,非常友好。

https://github.com/dabeaz-course/practical-python

learn-python3

一个 Python3 的教程,该教程采用 Jupyter notebooks 形式,便于运行和阅读。并且还包含了练习题,对新手友好。

https://github.com/jerry-git/learn-python3

python-guide

Requests 库的作者——kennethreitz,写的 Python 入门教程。不单单是语法层面的,涵盖项目结构、代码风格,进阶、工具等方方面面。一起在教程中领略大神的风采吧~

https://github.com/realpython/python-guide

其他

pytools

这是一位大神编写的类似工具集的项目,里面包含了众多有趣的小工具。

截图只是冰山一角,全貌需要大家自行探索了

import random from pytools import pytools tool_client = pytools.pytools() all_supports = tool_client.getallsupported() tool_client.execute(random.choice(list(all_supports.values())))

https://github.com/CharlesPikachu/pytools

amazing-qr

可以生成动态、彩色、各式各样的二维码,真是个有趣的库。

#3 -n, -d amzqr https://github.com -n github_qr.jpg -d .../paths/

https://github.com/x-hw/amazing-qr

sh

sh 是一个成熟的,用于替代 subprocess 的库,它允许我们调用任何程序,看起来它就是一个函数一样。

$> ./run.sh FunctionalTests.test_unicode_arg

https://github.com/amoffat/sh

tqdm

强大、快速、易扩展的 Python 进度条库。

from tqdm import tqdm for i in tqdm(range(10000)): ...

https://github.com/tqdm/tqdm

loguru

一个让 Python 记录日志变得简单的库。

from loguru import logger

logger.debug("That's it, beautiful and simple logging!")https://github.com/Delgan/loguru

click

Python 的第三方库,用于快速创建命令行。支持装饰器方式调用、多种参数类型、自动生成帮助信息等。

import click

@click.command()

@click.option("--count", default=1, help="Number of greetings.")

@click.option("--name", prompt="Your name", help="The person to greet.")

def hello(count, name):

"""Simple program that greets NAME for a total of COUNT times."""

for _ in range(count):

click.echo(f"Hello, {name}!")

if __name__ == '__main__':

hello()Output:

$ python hello.py --count=3 Your name: Click Hello, Click! Hello, Click! Hello, Click!

KeymouseGo

Python 实现的精简绿色版按键精灵,记录用户的鼠标、键盘操作,自动执行之前记录的操作,可设定执行的次数。在进行某些简单、单调重复的操作时,使用该软件可以十分省事儿。只需要录制一遍,剩下的交给 KeymouseGo 来做就可以了。

https://github.com/taojy123/KeymouseGo

The above is the detailed content of Those interesting and powerful Python libraries. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Is the conversion speed fast when converting XML to PDF on mobile phone?

Apr 02, 2025 pm 10:09 PM

Is the conversion speed fast when converting XML to PDF on mobile phone?

Apr 02, 2025 pm 10:09 PM

The speed of mobile XML to PDF depends on the following factors: the complexity of XML structure. Mobile hardware configuration conversion method (library, algorithm) code quality optimization methods (select efficient libraries, optimize algorithms, cache data, and utilize multi-threading). Overall, there is no absolute answer and it needs to be optimized according to the specific situation.

How to convert XML files to PDF on your phone?

Apr 02, 2025 pm 10:12 PM

How to convert XML files to PDF on your phone?

Apr 02, 2025 pm 10:12 PM

It is impossible to complete XML to PDF conversion directly on your phone with a single application. It is necessary to use cloud services, which can be achieved through two steps: 1. Convert XML to PDF in the cloud, 2. Access or download the converted PDF file on the mobile phone.

What is the function of C language sum?

Apr 03, 2025 pm 02:21 PM

What is the function of C language sum?

Apr 03, 2025 pm 02:21 PM

There is no built-in sum function in C language, so it needs to be written by yourself. Sum can be achieved by traversing the array and accumulating elements: Loop version: Sum is calculated using for loop and array length. Pointer version: Use pointers to point to array elements, and efficient summing is achieved through self-increment pointers. Dynamically allocate array version: Dynamically allocate arrays and manage memory yourself, ensuring that allocated memory is freed to prevent memory leaks.

Is there any mobile app that can convert XML into PDF?

Apr 02, 2025 pm 08:54 PM

Is there any mobile app that can convert XML into PDF?

Apr 02, 2025 pm 08:54 PM

An application that converts XML directly to PDF cannot be found because they are two fundamentally different formats. XML is used to store data, while PDF is used to display documents. To complete the transformation, you can use programming languages and libraries such as Python and ReportLab to parse XML data and generate PDF documents.

How to convert xml into pictures

Apr 03, 2025 am 07:39 AM

How to convert xml into pictures

Apr 03, 2025 am 07:39 AM

XML can be converted to images by using an XSLT converter or image library. XSLT Converter: Use an XSLT processor and stylesheet to convert XML to images. Image Library: Use libraries such as PIL or ImageMagick to create images from XML data, such as drawing shapes and text.

How to control the size of XML converted to images?

Apr 02, 2025 pm 07:24 PM

How to control the size of XML converted to images?

Apr 02, 2025 pm 07:24 PM

To generate images through XML, you need to use graph libraries (such as Pillow and JFreeChart) as bridges to generate images based on metadata (size, color) in XML. The key to controlling the size of the image is to adjust the values of the <width> and <height> tags in XML. However, in practical applications, the complexity of XML structure, the fineness of graph drawing, the speed of image generation and memory consumption, and the selection of image formats all have an impact on the generated image size. Therefore, it is necessary to have a deep understanding of XML structure, proficient in the graphics library, and consider factors such as optimization algorithms and image format selection.

How to open xml format

Apr 02, 2025 pm 09:00 PM

How to open xml format

Apr 02, 2025 pm 09:00 PM

Use most text editors to open XML files; if you need a more intuitive tree display, you can use an XML editor, such as Oxygen XML Editor or XMLSpy; if you process XML data in a program, you need to use a programming language (such as Python) and XML libraries (such as xml.etree.ElementTree) to parse.

Recommended XML formatting tool

Apr 02, 2025 pm 09:03 PM

Recommended XML formatting tool

Apr 02, 2025 pm 09:03 PM

XML formatting tools can type code according to rules to improve readability and understanding. When selecting a tool, pay attention to customization capabilities, handling of special circumstances, performance and ease of use. Commonly used tool types include online tools, IDE plug-ins, and command-line tools.