Nature | GPT-4 has taken off, and scientists are worried!

The emergence of GPT-4 is both exciting and frustrating.

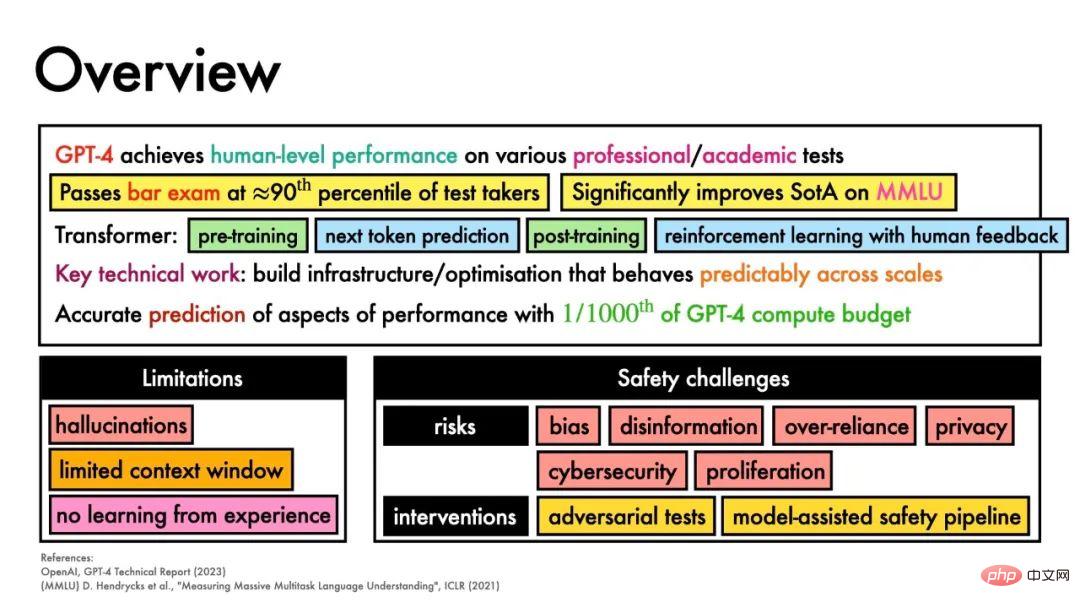

Although GPT-4 has astonishing creativity and reasoning capabilities, scientists have expressed concerns about the safety of this technology.

Because OpenAI departed from its original mission and neither open-sourced GPT-4 nor published the model's training methods and data, how the model actually works is unknown.

The scientific community is very frustrated about this.

Sasha Luccioni, a scientist specializing in environmental research at the open-source AI community HuggingFace, said, "OpenAI can continue to build on its own research, but for the community as a whole, all these closed-source models are like a dead end in science."

Fortunately, there is red-team testing

Andrew White, a chemical engineer at the University of Rochester, has had privileged access to GPT-4 as a "red-teamer".

OpenAI pays red-teamers to test the platform and try to get it to do bad things. As a result, White has been able to work with GPT-4 for the past six months.

He asked GPT-4 what chemical reaction steps are needed to make a compound, and asked it to predict the reaction yield and choose a catalyst.

"Compared with previous iterations, GPT-4 seemed no different, and I thought it was nothing special. It was really surprising, though, because it looked so realistic, yet it would spawn an atom here and skip a step there."

But when he continued testing and gave GPT-4 access to papers, things changed dramatically.

"We suddenly realized that maybe these models weren't all that great on their own. But when you start connecting them to tools like retrosynthesis planners or calculators, all of a sudden, new abilities emerge."

With the emergence of these abilities, people began to worry. For example, could GPT-4 enable someone to make hazardous chemicals?

White said that OpenAI engineers fed the test input from red-teamers like him back into their model, which allows them to stop GPT-4 from creating dangerous, illegal or disruptive content.

False facts

Outputting false information is another problem.

Luccioni said that models like GPT-4 have not yet been able to solve the problem of hallucinations, which means that they can still utter nonsense.

"You can't rely on this type of model because there are too many hallucinations, and although OpenAI says it has improved safety in GPT-4, this is still a problem in the latest version."

Without access to the training data, OpenAI's safety assurances fall short, in Luccioni's view.

"You don't know what the data is. So you can't improve it. It's completely impossible to do science with such a model."

The mystery of how GPT-4 was trained has also troubled psychologist Claudi Bockting: "It is very difficult for humans to be responsible for things that they cannot supervise."

Luccioni also believes that GPT-4 will be biased by the training data, and without access to the code behind GPT-4, it is impossible to see where the bias may originate, and it is impossible to remedy it.

Ethical Discussion

Scientists have always had reservations about GPT.

When ChatGPT was launched, scientists had already objected to GPT being listed as an author.

Publishers also believe that artificial intelligence such as ChatGPT does not meet the criteria for research authorship because it cannot take responsibility for the content and integrity of scientific papers. However, an AI's contribution to writing a paper can be acknowledged outside the author list.

Additionally, there are concerns that these artificial intelligence systems are increasingly in the hands of large technology companies. These technologies should be tested and validated by scientists.

We urgently need to develop a set of guidelines to govern the use and development of artificial intelligence and tools such as GPT-4.

Despite such concerns, White said, GPT-4 and its future iterations will shake up science: "I think it's going to be a huge infrastructure change in science, a change as big as the Internet. We are starting to realize that we can connect papers, data programs, libraries, computational work and even robotic experiments. It will not replace scientists, but it can help with some tasks."

However, it seems that any legislation surrounding artificial intelligence technology is struggling to keep up with the pace of development.

On April 11, the University of Amsterdam will convene an invitational summit to discuss these questions with representatives from organizations such as UNESCO's Committee on Ethics in Science, the Organization for Economic Co-operation and Development and the World Economic Forum.

The main topics include insisting on human verification of LLM output; establishing accountability rules within the scientific community aimed at transparency, integrity and fairness; investing in reliable and transparent large language models owned by independent non-profit organizations; embracing the advantages of AI while weighing its benefits against the loss of autonomy; and inviting the scientific community to discuss GPT with relevant parties, from publishers to ethicists.

