Over 1 million users! GPTZero, the strongest AI detector, built by a Chinese undergraduate, says the U.S. Constitution was written by AI

The popularity of ChatGPT has not only given many students a tool for cheating, it has also caused many honest students who wrote their own papers to be wrongly accused!

The reason is almost absurd: it is all because of the various AI detectors built to "fight magic with magic."

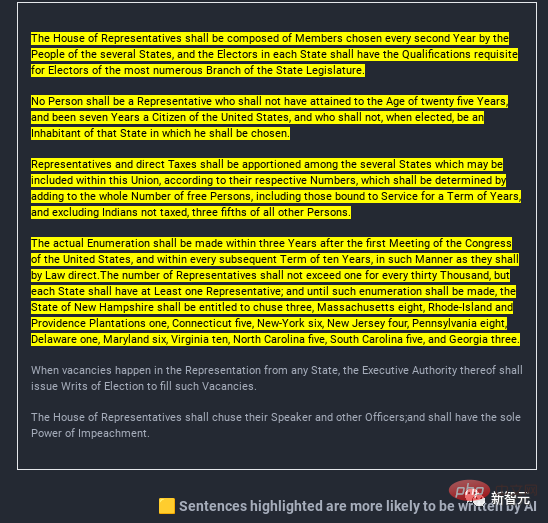

Unreliable in practice: was the U.S. Constitution written by AI?

Among the many AI detectors, the best known is GPTZero, created by Edward Tian, a Chinese undergraduate at Princeton. It is free and produces impressive results.

You simply paste in the text, and GPTZero points out which passages it thinks were generated by AI and which were written by a human.

Under the hood, GPTZero relies mainly on two indicators: "perplexity" (how random the text is) and "burstiness" (how much the perplexity varies). In each test, GPTZero also picks out the sentence with the highest perplexity, that is, the sentence that reads most like human writing.
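To make the two indicators concrete, here is a toy sketch. It is not GPTZero's actual code, and it rests on stated assumptions: a language model has already assigned a probability to every token of each sentence (those probabilities are simply inputs here), perplexity is taken as the exponential of the mean negative log-probability, and "burstiness" is taken to mean the standard deviation of per-sentence perplexity. The function names are hypothetical.

```python
import math
from statistics import pstdev


def perplexity(token_probs):
    """Perplexity of one sentence: exp of the mean negative log-probability.

    token_probs are the (assumed, precomputed) probabilities a language model
    gave each token; each must lie in (0, 1], or math.log raises ValueError.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


def burstiness(sentences):
    """Spread of per-sentence perplexity (population standard deviation).

    In this toy model, uniformly low, steady perplexity looks machine-like,
    while large swings between sentences look more human.
    """
    return pstdev([perplexity(s) for s in sentences])


def most_human_sentence(sentences):
    """Index of the sentence with the highest perplexity."""
    scores = [perplexity(s) for s in sentences]
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical per-token probabilities for three short sentences.
sentences = [[0.5, 0.5], [0.25, 0.25], [0.9, 0.8]]
print([round(perplexity(s), 2) for s in sentences])  # [2.0, 4.0, 1.18]
print(most_human_sentence(sentences))                # 1
```

A real detector would combine such scores with thresholds tuned on labeled data; this sketch only shows what the two quantities measure.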

However, this method is not completely reliable.

Recently, some curious netizens ran an experiment on the U.S. Constitution, and the result was even more startling:

GPTZero said that the U.S. Constitution was generated by AI!

Meanwhile, more and more students are discovering that papers they worked hard to write are also being flagged by these detectors as AI-generated.

AI says you wrote it with AI, so you wrote it with AI!

Two days ago, a 12th-grade student asked for help on Reddit: after running the paper through GPTZero, the teacher became convinced that part of it had been generated by AI.

"I have always been a top-achieving student and I don't know why my teacher thought I cheated. I told my teacher everything, but he still didn't believe me."

The teacher said that using Grammarly (an online grammar-checking and proofreading tool) was fine, but GPTZero's result was clear: the student had used ChatGPT.

The desperate student said he would gather every piece of evidence he could to prove that this so-called AI detector was wrong.

Some netizens could not understand the teacher's reasoning at all: "So the AI says you cheated, and therefore you cheated? Where is the evidence?"

Another netizen offered a more practical suggestion: feed in texts written before ChatGPT existed and see what the detector says (much like the U.S. Constitution experiment above).

You can also cite OpenAI's official statement in your defense: "We really do not recommend using this tool in isolation, because we know it can be wrong, just like any kind of assessment that uses AI."

One netizen pointed out that homework essays usually follow strict structural conventions, and ChatGPT learned a great deal from such writing during training.

As a result, AI-generated content reads like a standard five-paragraph essay.

So student homework is bound to resemble ChatGPT's output from the very start.

Although GPTZero claims that its false positive rate is

So this netizen argues that we should be skeptical of GPTZero, OpenAI's classifier, or any tool that claims to reliably tell humans and AI apart.
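To see why even a small false positive rate warrants skepticism, here is a back-of-the-envelope sketch using Bayes' rule. Every number in it is a hypothetical illustration, not a figure published by GPTZero or OpenAI: we assume the detector wrongly flags 2% of human-written essays, catches 90% of AI-written ones, and that 5% of the essays in a class were actually written with AI. The function name is likewise made up for this example.

```python
def share_of_flags_that_are_wrong(false_positive_rate: float,
                                  true_positive_rate: float,
                                  cheating_rate: float) -> float:
    """Fraction of flagged essays that were in fact written by humans.

    Bayes' rule: P(human | flagged) = FPR * P(human) / P(flagged).
    """
    human_share = 1.0 - cheating_rate
    false_flags = false_positive_rate * human_share   # honest essays flagged
    true_flags = true_positive_rate * cheating_rate   # AI essays flagged
    total_flags = false_flags + true_flags
    if total_flags == 0:
        return 0.0  # nothing is ever flagged, so nobody is wrongly accused
    return false_flags / total_flags


# Hypothetical inputs, chosen only for illustration.
wrong = share_of_flags_that_are_wrong(0.02, 0.90, 0.05)
print(f"{wrong:.1%} of flagged essays were written by humans")  # 29.7% ...
```

Under these assumed numbers, roughly 3 in 10 flagged essays would belong to honest students, even though the detector errs on only 2% of human text. The lower the real cheating rate, the larger that share becomes.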

ChatGPT exposes flaws in how essays are assigned

AI has made cheating enormously convenient, but it has also landed students who do not cheat in endless trouble.

So let us go back to the heart of the matter: should students be allowed to use ChatGPT in school?

Recently, writer Colm O'Shea argued in the article "Thanks to ChatGPT for exposing the mediocrity of undergraduate essays" that the point of the undergraduate essay is education, not the advancement of knowledge. What matters most is that the writing process trains and demonstrates your ability to process information purposefully; it is not meant to produce valuable knowledge. Producing valuable output is the job of a well-trained, qualified workforce.

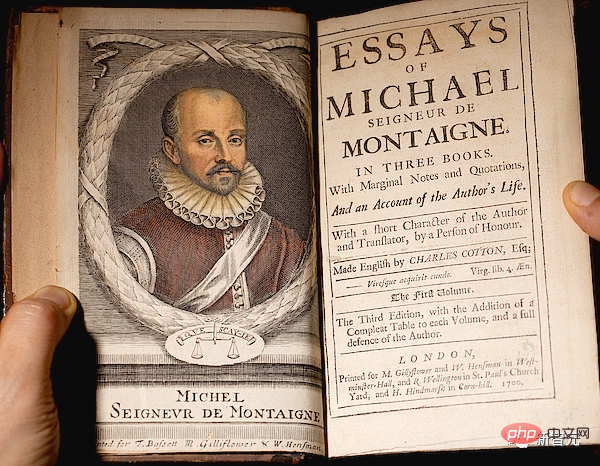

The essay is often traced back to the scholarly texts of ancient Greek and Roman writers, or to the letters of the early Church Fathers, but the modern essay form really began with Montaigne.

At some point in his life, this wealthy and learned 16th-century French philosopher suddenly began to doubt himself and his huge library: "What if everything I thought I knew was actually nonsense?"

So he began writing essays, an act with profound meaning: it let him see the world anew.

His titles are wonderfully odd, such as "Of Thumbs," "Of a Monstrous Child," and "Of Cannibals," revealing the breadth and eccentricity of his mind. Each essay circles a single point and examines it from every angle in search of new insight, as if each angle had been written by a different consciousness.

Yet once admitted to college, some students beg their professors for a "correct" template to imitate, or turn to plagiarism and essay ghostwriters as a shortcut to the future they imagine.

It is as if, once you get to college, you no longer need writing to help you learn and think.

And that was before ChatGPT took off. Now, with ChatGPT, plagiarism and ghostwriting have become commonplace. These problems cannot be blamed solely on AI, but they are enough to reveal what is wrong with university education.

What is the crux?

This crisis actually stems from a larger and older problem in undergraduate education.

For too long, undergraduate education has focused too heavily on convergent thinking, that is, testing whether students can find the "right" answer to a problem with a fixed solution.

College admissions tests usually measure two things: knowledge and cognitive ability. Standardized tests can measure a student's mastery of a subject, but they ignore another important area: divergent thinking.

And divergent thinking is the prerequisite for creative work.

Moreover, divergent thinking resists standardization. It relies on mechanisms such as deep pattern recognition and analogy (verbal, visual, mathematical), which are not ChatGPT's strength; what ChatGPT does well is distill "key points" from dizzyingly large datasets.

This kind of thinking can be summed up in one word: "wit," meaning the surprising flash of inspiration that fuses or connects two unrelated things. It may sound a little mysterious, but this ability matters a great deal.

Dedre Gentner, a cognitive scientist and leading expert on analogical thinking, explains that vividly explaining something to yourself or to others builds the capacity for abstraction and can reveal new connections between different domains.

For Gentner, a person's ability to produce apt metaphors or analogies reflects their creativity better than their IQ does, because scientific breakthroughs often come in that single "glimpse," when someone spots an analogy between two different things. Such analogies are full of imagination.

Likewise, K. H. Kim, professor of creativity and innovation at the College of William & Mary, argues that the obsessive focus on convergent thinking in both Asian and Western education systems is slowing innovation across the arts and sciences.

Ideally, a college essay asks the writer to play an intellectual game in which obstacles trip them up and make them realize that what they know about the subject is only the tip of the iceberg. As they work through the challenges, the game's "sound effects" remind them to keep their heads above water and alert them that they may have made a mistake.

But ChatGPT delivers the worst-case scenario: a neat, elegant summary of the critical consensus inside an echo chamber.

Master of Bullshit

Remember Harry Frankfurt's philosophical essay "On Bullshit"? In it, he distinguishes two things: lying, and bullshit, that is, spouting convincing-sounding claims with no concern for whether they are true.

A liar needs an accurate model of the truth in order to conceal it from others; a bullshitter needs no such awareness.

In fact, a bullshitter may say true things all day long. In Frankfurt's view, what makes it bullshit is not whether the words are true or false, but the careless, anything-goes way they are spoken. "By virtue of this," he wrote, "bullshit is a greater enemy of the truth than lies are."

ChatGPT is the pinnacle of Frankfurt's art of bullshit. It uses a large language model to piece together what a human might say about a subject: an eerie simulation of understanding, completely disconnected from real-world insight.

One of ChatGPT's most striking features is how vividly it imitates the fluent but lifeless prose typical of much academic writing.

Stephen Marche's article in The Atlantic, "The College Essay Is Dead," sparked a lot of discussion among my colleagues and me. He gave a sample of ChatGPT-generated text a B, explaining: "This passage reads like filler, but so do most student essays."

That sentence leaves me deeply worried, but also helpless.

Bullshit writing was plaguing us long before ChatGPT, but are we simply going to let it run its course?

Although it sounds like hopeless idealism, I have to stand up and say no!

Responsible scholars should return the essay to its proper Montaignean tradition: an unconventional, creative, divergent exploration of possibilities.

This calls for reforms, such as moving away from large lecture halls, where the only point of contact between student and professor is a hastily written, hastily graded paper. Keeping student-to-teacher ratios small would be enough to restore real dialogue between students and teachers, and among students themselves.

AI will continue to evolve. Machine learning will generate millions of novel solutions in a variety of fields.

For now, AI development tends toward two extremes: convergence with no new ideas, and extreme divergence with no sense of "appropriateness."

Here I borrow Dean Keith Simonton's definition of creativity: originality × appropriateness.

"Appropriateness" is the vast set of Wittgensteinian "language games" in a given domain, whose depth and breadth will only grow as our culture becomes more complex. This deep web of "games" is too subtle, too irrational, and too fast-changing for AI to master just by mining our texts.

In an ideal future, education might prioritize cultivating curiosity, creativity, and sensitivity in students of all ages in all areas of learning. This project is exciting, but somewhat belated.

This does not mean giving up on acquiring knowledge because we cannot beat AI. It means starting to "play" with our ideas again and focusing on how and why we learn: the practice of metacognition.

The ultimate game for sentient beings is to be invited to ask questions on their own terms and to arrive at answers that surprise even themselves, answers they find genuinely illuminating.

Humanity's own magical thinking is far better than AI's imitation of understanding.

A closing note from the editors: we have completely fallen in love with this piece by Colm O'Shea.

Readers often leave comments asking whether our articles are written by ChatGPT. We can borrow this article's view to answer: if you feel that moment of "glimpse" in an article, that creation must have come from a human, not an AI.
