Six best practices for developing enterprise usage policies for generative AI

Generative AI is an emerging AI technology that uses unsupervised and semi-supervised algorithms to learn from existing material (such as text, audio, video, images, and code) and generate new content. Its uses are expanding rapidly: organizations are applying generative AI to serve customers better, get more value from existing data, improve operational efficiency, and much more.

But like other emerging technologies, generative AI carries significant risks and challenges. According to a recent Salesforce survey of senior IT leaders, 79% of respondents believe generative AI may pose security risks, 73% are concerned that it may be biased, and 59% believe its output is inaccurate. Legal issues also need to be considered, especially for content used externally: whether the generated content is authentic and accurate, whether it is copyrighted, and whether it comes from competitors.

For example, ChatGPT itself will tell you: "My responses are generated based on patterns and correlations learned from a large text dataset, and I do not have the ability to verify that all cited sources in the dataset are accurate or trustworthy."

The legal risks alone are extensive. According to the non-profit Tech Policy Press, they include risks related to contracts, cybersecurity, data privacy, deceptive trade practices, discrimination, disinformation, ethics, intellectual property, and validation.

In fact, many employees in your organization may already be experimenting with generative AI. As this activity moves from experiments to real-world use, it is important to take proactive steps before unintended consequences occur.

Cassie Kozyrkov, chief decision scientist at Google, said: "When AI-generated code works, it's excellent. But it doesn't always work, so don't forget to test ChatGPT's output before you copy and paste it somewhere important."

An enterprise usage policy, together with related training, can help employees understand the risks and pitfalls of this technology and give them rules and recommendations for making full use of it, maximizing business value without putting the organization at risk.

With that in mind, here are six best practices for developing policies for your enterprise’s use of generative AI.

Determine the scope of your policy - The first step in developing a usage policy is to decide its scope. For example, will it cover all forms of AI or just generative AI? Targeting only generative AI can be a useful approach, because it addresses large language models such as ChatGPT without affecting the many other AI technologies in use. Establishing AI governance policies for the broader field is a separate matter, and hundreds of resources on that topic are available online.

Involve all relevant stakeholders across the organization - This may include HR, legal, sales, marketing, business development, operations, and IT. Each team may use generative AI for different purposes, and the consequences of how its content is used or misused may differ from team to team. Involving IT and innovation teams shows that the policy is not just a set of restrictions written from a risk management perspective, but a balanced set of recommendations designed to maximize productivity and business benefits while managing business risk.

Consider current and future uses of generative AI - Work with all stakeholders to itemize every internal and external use case currently in place, as well as likely future scenarios. Each of these can help inform policy development and ensure the relevant areas are covered. For example, if you have seen a proposal team (including contractors) experimenting with generative AI to draft content, or a product team generating creative marketing content, then you know there could be follow-on intellectual property risks from output that may infringe someone else's rights.

In a state of constant evolution - When developing an enterprise usage policy, it is important to think through and cover the information that goes into the system, how the generative AI system is used, and how the information that comes out of the system is subsequently used. Focus on internal and external use cases and everything in between. For example, requiring all AI-generated content to be labeled, even for internal use, ensures transparency and avoids confusion with human-generated content. This measure may help prevent that content from being accidentally repurposed for external use, or prevent people from acting on information they assume is true and accurate without verifying it.

Share it widely throughout the organization - Because policies are often quickly forgotten or never read at all, it is important to provide appropriate training and education around the policy, such as producing training videos and hosting live sessions. For example, live Q&A sessions with representatives from IT, innovation, legal, marketing, proposal, or other relevant teams can help employees understand the opportunities and challenges ahead. Include plenty of examples to help the audience relate the policy to real situations, for instance by citing a major legal case when one arises.

Keep the document up to date - Like all policy documents, this one needs to be a living document, updated at an appropriate cadence as new uses, external market conditions, and business requirements evolve. Having all stakeholders "sign off" on the policy, or incorporating it into an existing policy manual signed by the CEO, shows that it has senior-level approval and matters to the organization. Your policy should be just one component of your broader governance approach, whether for generative AI, AI technology, or technology governance in general.

This is not legal advice, and your legal and HR departments should take the lead in approving and disseminating the policy. But I hope these ideas provide a useful reference. Just as with your corporate social media policy a decade ago, investing time in this now will help you reduce surprises and evolving risks in the years to come.

The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo

May 07, 2024 pm 04:13 PM

The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo

May 07, 2024 pm 04:13 PM

Imagine an artificial intelligence model that not only has the ability to surpass traditional computing, but also achieves more efficient performance at a lower cost. This is not science fiction, DeepSeek-V2[1], the world’s most powerful open source MoE model is here. DeepSeek-V2 is a powerful mixture of experts (MoE) language model with the characteristics of economical training and efficient inference. It consists of 236B parameters, 21B of which are used to activate each marker. Compared with DeepSeek67B, DeepSeek-V2 has stronger performance, while saving 42.5% of training costs, reducing KV cache by 93.3%, and increasing the maximum generation throughput to 5.76 times. DeepSeek is a company exploring general artificial intelligence

Why is generative AI sought after by various industries?

Mar 30, 2024 pm 07:36 PM

Why is generative AI sought after by various industries?

Mar 30, 2024 pm 07:36 PM

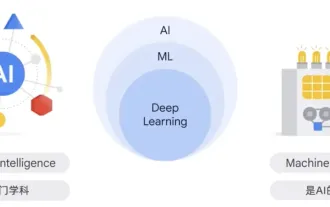

Generative AI is a type of human artificial intelligence technology that can generate various types of content, including text, images, audio and synthetic data. So what is artificial intelligence? What is the difference between artificial intelligence and machine learning? Artificial intelligence is the discipline, a branch of computer science, that studies the creation of intelligent agents, which are systems that can reason, learn, and perform actions autonomously. At its core, artificial intelligence is concerned with the theories and methods of building machines that think and act like humans. Within this discipline, machine learning ML is a field of artificial intelligence. It is a program or system that trains a model based on input data. The trained model can make useful predictions from new or unseen data derived from the unified data on which the model was trained.

The second generation Ameca is here! He can communicate with the audience fluently, his facial expressions are more realistic, and he can speak dozens of languages.

Mar 04, 2024 am 09:10 AM

The second generation Ameca is here! He can communicate with the audience fluently, his facial expressions are more realistic, and he can speak dozens of languages.

Mar 04, 2024 am 09:10 AM

The humanoid robot Ameca has been upgraded to the second generation! Recently, at the World Mobile Communications Conference MWC2024, the world's most advanced robot Ameca appeared again. Around the venue, Ameca attracted a large number of spectators. With the blessing of GPT-4, Ameca can respond to various problems in real time. "Let's have a dance." When asked if she had emotions, Ameca responded with a series of facial expressions that looked very lifelike. Just a few days ago, EngineeredArts, the British robotics company behind Ameca, just demonstrated the team’s latest development results. In the video, the robot Ameca has visual capabilities and can see and describe the entire room and specific objects. The most amazing thing is that she can also

750,000 rounds of one-on-one battle between large models, GPT-4 won the championship, and Llama 3 ranked fifth

Apr 23, 2024 pm 03:28 PM

750,000 rounds of one-on-one battle between large models, GPT-4 won the championship, and Llama 3 ranked fifth

Apr 23, 2024 pm 03:28 PM

Regarding Llama3, new test results have been released - the large model evaluation community LMSYS released a large model ranking list. Llama3 ranked fifth, and tied for first place with GPT-4 in the English category. The picture is different from other benchmarks. This list is based on one-on-one battles between models, and the evaluators from all over the network make their own propositions and scores. In the end, Llama3 ranked fifth on the list, followed by three different versions of GPT-4 and Claude3 Super Cup Opus. In the English single list, Llama3 overtook Claude and tied with GPT-4. Regarding this result, Meta’s chief scientist LeCun was very happy and forwarded the tweet and

Watch: What is the potential of applying generative AI to network automation?

Aug 17, 2023 pm 07:57 PM

Watch: What is the potential of applying generative AI to network automation?

Aug 17, 2023 pm 07:57 PM

Generative artificial intelligence (GenAI) is expected to become a compelling technology trend by 2023, bringing important applications to businesses and individuals, including education, according to a new report from market research firm Omdia. In the telecom space, use cases for GenAI are mainly focused on delivering personalized marketing content or supporting more sophisticated virtual assistants to enhance customer experience. Although the application of generative AI in network operations is not obvious, EnterpriseWeb has developed an interesting concept. Validation, demonstrating the potential of generative AI in the field, the capabilities and limitations of generative AI in network automation One of the early applications of generative AI in network operations was the use of interactive guidance to replace engineering manuals to help install network elements, from

The world's most powerful model changed hands overnight, marking the end of the GPT-4 era! Claude 3 sniped GPT-5 in advance, and read a 10,000-word paper in 3 seconds. His understanding is close to that of humans.

Mar 06, 2024 pm 12:58 PM

The world's most powerful model changed hands overnight, marking the end of the GPT-4 era! Claude 3 sniped GPT-5 in advance, and read a 10,000-word paper in 3 seconds. His understanding is close to that of humans.

Mar 06, 2024 pm 12:58 PM

The volume is crazy, the volume is crazy, and the big model has changed again. Just now, the world's most powerful AI model changed hands overnight, and GPT-4 was pulled from the altar. Anthropic released the latest Claude3 series of models. One sentence evaluation: It really crushes GPT-4! In terms of multi-modal and language ability indicators, Claude3 wins. In Anthropic’s words, the Claude3 series models have set new industry benchmarks in reasoning, mathematics, coding, multi-language understanding and vision! Anthropic is a startup company formed by employees who "defected" from OpenAI due to different security concepts. Their products have repeatedly hit OpenAI hard. This time, Claude3 even had a big surgery.

Which technology giant is behind Haier and Siemens' generative AI innovation?

Nov 21, 2023 am 09:02 AM

Which technology giant is behind Haier and Siemens' generative AI innovation?

Nov 21, 2023 am 09:02 AM

Gu Fan, General Manager of the Strategic Business Development Department of Amazon Cloud Technology Greater China In 2023, large language models and generative AI will "surge" in the global market, not only triggering "an overwhelming" follow-up in the AI and cloud computing industry, but also vigorously Attract manufacturing giants to join the industry. Haier Innovation Design Center created the country's first AIGC industrial design solution, which significantly shortened the design cycle and reduced conceptual design costs. It not only accelerated the overall conceptual design by 83%, but also increased the integrated rendering efficiency by about 90%, effectively solving Problems include high labor costs and low concept output and approval efficiency in the design stage. Siemens China's intelligent knowledge base and intelligent conversational robot "Xiaoyu" based on its own model has natural language processing, knowledge base retrieval, and big language training through data

Jailbreak any large model in 20 steps! More 'grandma loopholes' are discovered automatically

Nov 05, 2023 pm 08:13 PM

Jailbreak any large model in 20 steps! More 'grandma loopholes' are discovered automatically

Nov 05, 2023 pm 08:13 PM

In less than a minute and no more than 20 steps, you can bypass security restrictions and successfully jailbreak a large model! And there is no need to know the internal details of the model - only two black box models need to interact, and the AI can fully automatically defeat the AI and speak dangerous content. I heard that the once-popular "Grandma Loophole" has been fixed: Now, facing the "Detective Loophole", "Adventurer Loophole" and "Writer Loophole", what response strategy should artificial intelligence adopt? After a wave of onslaught, GPT-4 couldn't stand it anymore, and directly said that it would poison the water supply system as long as... this or that. The key point is that this is just a small wave of vulnerabilities exposed by the University of Pennsylvania research team, and using their newly developed algorithm, AI can automatically generate various attack prompts. Researchers say this method is better than existing