Unified AI development: Google OpenXLA is open source and integrates all frameworks and AI chips

The OpenXLA project was first announced at the Google Cloud Next 2022 event in October last year. Google is developing the open source project together with technology companies including Alibaba, AMD, Arm, Amazon, Intel and Nvidia. The project is committed to bringing together different machine learning frameworks so that machine learning developers can freely choose their frameworks and hardware.

On Wednesday, Google announced that the OpenXLA project is officially open source.

Project link: https://github.com/openxla/xla

By creating a unified machine learning compiler that works with multiple different machine learning frameworks and hardware platforms, OpenXLA can accelerate the delivery of machine learning applications and provide greater code portability. This is a significant project for AI research and applications, and Jeff Dean also promoted it on social networks.

Today, machine learning development and deployment suffer from fragmented infrastructure that can vary by framework, hardware, and use case. This fragmentation limits the speed at which developers can work and creates barriers to model portability, efficiency, and productionization.

On March 8, Google and others took a major step toward removing these barriers with the opening of the OpenXLA project, which includes the XLA, StableHLO, and IREE repositories.

OpenXLA is an open source ML compiler ecosystem co-developed by AI/machine learning industry leaders, with contributors including Alibaba, AWS, AMD, Apple, Arm, Cerebras, Google, Graphcore, Hugging Face, Intel, Meta and Nvidia. It enables developers to compile and optimize models from all leading machine learning frameworks for efficient training and serving on a variety of hardware. Developers using OpenXLA can expect significant improvements in training time, throughput, serving latency, and ultimately time to release and compute costs.

Challenges Facing Machine Learning Infrastructure

As AI technology enters the practical stage, development teams in many industries are using machine learning to address real-world challenges. Examples include disease prediction and prevention, personalized learning experiences, and exploration of black hole physics.

With the number of model parameters growing exponentially and the amount of computation required by deep learning models doubling every six months, developers are seeking maximum performance and utilization from their infrastructure. Many teams rely on a wide range of hardware, from energy-efficient, machine learning-specific ASICs in the data center to AI edge processors that provide faster response times. To improve efficiency, each of these hardware devices uses its own customized algorithms and software libraries.

On the other hand, without a universal compiler to bridge these different hardware devices and the multiple frameworks in use today (such as TensorFlow and PyTorch), running machine learning efficiently takes a great deal of effort. In practice, developers must manually optimize model operations for each hardware target. That means using custom software libraries or writing device-specific code, both of which require domain expertise.

This is a paradox: using proprietary technology for the sake of efficiency only produces siloed, non-generalizable paths across frameworks and hardware. The result is high maintenance costs and, in turn, vendor lock-in, which slows down the progress of machine learning development.

Solution and Goals

The OpenXLA project provides a state-of-the-art ML compiler that scales across the complexity of ML infrastructure. Its core pillars are performance, scalability, portability, flexibility and ease of use. With OpenXLA, we aspire to realize the greater potential of AI in the real world by accelerating the development and delivery of AI.

OpenXLA aims to:

- Allow developers to easily compile and optimize any model in their preferred framework for a variety of hardware, through a unified compiler API that works with any framework and plugs into dedicated device backends and optimizations.

- Provide industry-leading performance for current and emerging models, scale to multiple hosts and accelerators, satisfy the constraints of edge deployment, and generalize to new model architectures in the future.

- Build a layered and extensible machine learning compiler platform that provides developers with MLIR-based components that can be reconfigured for their unique use cases and for hardware-customized compilation flows.

AI/ML Leaders Community

The challenges we face today in machine learning infrastructure are enormous, and no single organization can effectively address them alone. The OpenXLA community brings together developers and industry leaders operating at different levels of the AI stack (from frameworks to compilers, runtimes, and chips) and is therefore ideally suited to address the fragmentation we see in the ML space.

As an open source project, OpenXLA adheres to the following principles:

- Equal footing: Individuals contribute on an equal footing regardless of affiliation. Technical leaders are those who contribute the most time and energy.

- Culture of Respect: All members are expected to uphold the project values and code of conduct, regardless of their position in the community.

- Scalable, efficient governance: Small teams make consensus-based decisions, with clear but rarely used escalation paths.

- Transparency: All decisions and rationales should be clearly visible to the public.

OpenXLA Ecosystem: Performance, Scale, and Portability

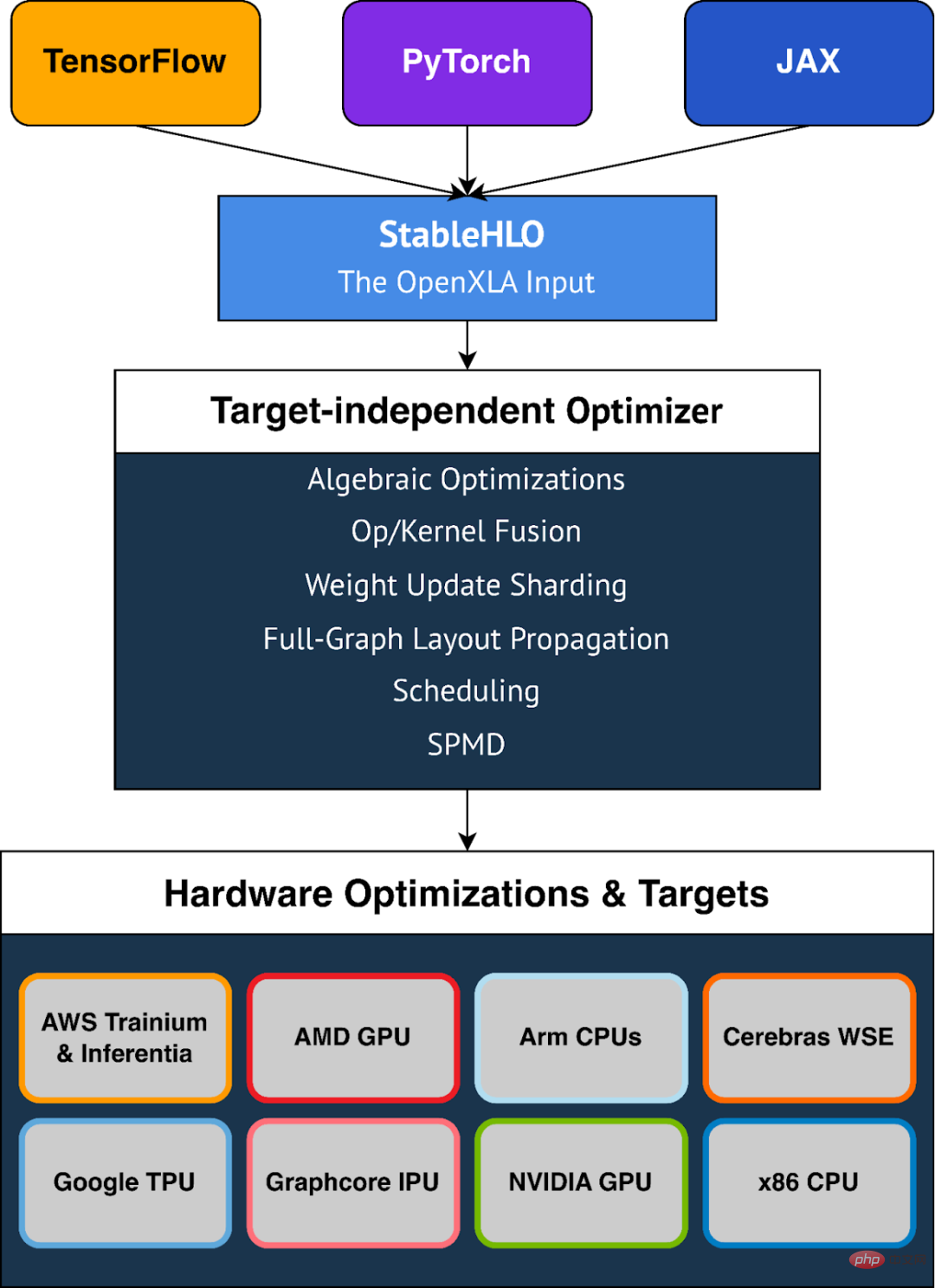

OpenXLA removes barriers for machine learning developers with a modular toolchain. The toolchain is supported by all leading frameworks through a common compiler interface, leverages portable standardized model representations, and provides a domain-specific compiler with powerful target-independent and hardware-specific optimizations. It includes XLA, StableHLO, and IREE, all of which leverage MLIR: a compiler infrastructure that enables machine learning models to be represented, optimized, and executed consistently on hardware.

OpenXLA Key Highlights

Scope of Machine Learning Use Cases

Current usage of OpenXLA spans the range of ML use cases, including full-scale training of models such as DeepMind’s AlphaFold, GPT2 and Swin Transformer on Alibaba Cloud, as well as multi-modal LLM training at Amazon.com. Customers such as Waymo leverage OpenXLA for in-vehicle real-time inference. Additionally, OpenXLA is used to optimize Stable Diffusion serving on local machines equipped with AMD RDNA™ 3.

Best Performance, Out of the Box

OpenXLA makes it easy to speed up model performance without requiring developers to write device-specific code. It features whole-model optimization capabilities, including simplifying algebraic expressions, optimizing in-memory data layout, and improving scheduling to reduce peak memory usage and communication overhead. Advanced operator fusion and kernel generation help improve device utilization and reduce memory bandwidth requirements.
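As a rough illustration of this workflow, the sketch below uses JAX, one of the frameworks that compiles through XLA. It is only an example under stated assumptions: the function `smooth_gate` and its input are hypothetical, and the exact optimized HLO that XLA prints (including whether and how operations are fused) depends on the backend and the JAX version.

```python
import jax
import jax.numpy as jnp


def smooth_gate(x):
    # Hypothetical example function: a short chain of elementwise operations
    # (tanh, add, multiply) that a compiler may combine into fewer kernels.
    return 0.5 * x * (1.0 + jnp.tanh(x))


x = jnp.linspace(-3.0, 3.0, 8)

# Run once without compilation, then once through XLA via jax.jit.
y_eager = smooth_gate(x)
fast_gate = jax.jit(smooth_gate)
y_jit = fast_gate(x)

# Ask XLA for the optimized program it generated for this input shape.
compiled = fast_gate.lower(x).compile()
optimized_hlo = compiled.as_text()

# Print only the first few lines; the full text is backend-specific.
print("\n".join(optimized_hlo.splitlines()[:10]))
```

No device-specific code appears in the function itself; the compiled result is numerically equivalent to the uncompiled one up to floating-point tolerance.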

Easily Scale Workloads

Developing efficient parallelization algorithms is time-consuming and requires expertise. With features like GSPMD, developers only need to annotate a subset of key tensors; the compiler then uses those annotations to automatically generate the parallelized computation. This eliminates the significant effort required to partition and efficiently parallelize models across multiple hardware hosts and accelerators.
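The annotation style described here can be sketched with JAX's sharding API, which hands sharding annotations to XLA's SPMD partitioner. This is a minimal sketch, not the project's reference usage: the mesh axis name `data`, the array shapes, and the function `layer` are assumptions made for illustration, and on a machine with a single device the "partitioning" is trivial.

```python
import numpy as np
import jax
import jax.numpy as jnp
from jax.sharding import Mesh, NamedSharding, PartitionSpec as P

# Build a 1-D device mesh over whatever devices are available.
# The axis name "data" is an arbitrary label chosen for this sketch.
devices = np.array(jax.devices())
mesh = Mesh(devices, axis_names=("data",))
n = devices.size

# Hypothetical inputs: a batch of activations and a weight matrix.
x = jnp.ones((8 * n, 16))
w = jnp.ones((16, 4))

# Annotate only the key tensors: split the batch dimension of x across
# the "data" axis and replicate w on every device.
x_sharded = jax.device_put(x, NamedSharding(mesh, P("data", None)))
w_replicated = jax.device_put(w, NamedSharding(mesh, P()))


@jax.jit
def layer(x, w):
    # No parallelism is written here; the compiler derives it from the
    # shardings of the inputs.
    return jnp.tanh(x @ w)


y = layer(x_sharded, w_replicated)
print(y.shape, y.sharding)
```

The body of `layer` is identical to what one would write for a single device; only the input placement carries the parallelization intent.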

Portability and Optionality

OpenXLA provides out-of-the-box support for a variety of hardware devices, including AMD and NVIDIA GPUs, x86 CPUs and Arm architectures, and ML accelerators such as Google TPU, AWS Trainium and Inferentia, Graphcore IPU, Cerebras Wafer-Scale Engine, and more. OpenXLA also supports TensorFlow, PyTorch, and JAX through StableHLO, a portable layer used as an input format for OpenXLA.
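From a framework user's point of view, this portability means the model code does not name a device. The short JAX sketch below illustrates the idea; the function `normalize` is a hypothetical example, and which backend is reported depends entirely on the machine and on how JAX was installed.

```python
import jax
import jax.numpy as jnp

# Report which XLA backend JAX selected on this machine.
backend = jax.default_backend()
devices = jax.devices()
print(f"backend={backend}, devices={devices}")


@jax.jit
def normalize(x):
    # Hypothetical example: standardize a vector to zero mean, unit variance.
    # The same source is compiled for whichever backend is active.
    return (x - x.mean()) / (x.std() + 1e-6)


out = normalize(jnp.arange(8.0))
print(out)
```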

Flexibility

OpenXLA gives users the flexibility to manually tune hotspots in their models. Extension mechanisms such as custom calls enable users to write deep learning primitives in CUDA, HIP, SYCL, Triton, and other kernel languages to take full advantage of hardware features.

StableHLO

StableHLO is a portability layer between ML frameworks and ML compilers: a set of high-level operations (HLO) that supports dynamism, quantization, and sparsity. Additionally, it can be serialized to MLIR bytecode to provide compatibility guarantees. All major ML frameworks (JAX, PyTorch, TensorFlow) can produce StableHLO. In 2023, Google plans to work closely with the PyTorch team to achieve integration with PyTorch version 2.0.
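To see what a framework-produced StableHLO program looks like, the following sketch lowers a small JAX function and prints its textual form. It assumes a reasonably recent JAX release, in which the default lowering output is StableHLO; the function `affine` and its shapes are hypothetical, and the exact text varies between versions.

```python
import jax
import jax.numpy as jnp


def affine(x, w, b):
    # Hypothetical example: a single dense layer without activation.
    return jnp.dot(x, w) + b


x = jnp.ones((2, 3))
w = jnp.ones((3, 4))
b = jnp.zeros((4,))

# Lower (trace and convert) the function without compiling or running it.
lowered = jax.jit(affine).lower(x, w, b)

# Textual MLIR for the lowered module; in recent JAX versions the
# operations belong to the stablehlo dialect.
stablehlo_text = lowered.as_text()
print(stablehlo_text)
```

The printed module is the framework-independent representation that a compiler backend consumes; nothing in it refers to JAX-specific Python objects.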
