Data management has become the largest bottleneck in the development of artificial intelligence

The true sign of great infrastructure is that it is easily overlooked. The better it performs, the less we think about it. For example, the importance of mobile infrastructure only comes to mind when we find ourselves struggling to connect. Likewise, when we drive down a new, freshly paved highway, we give little thought to the road surface as it passes silently beneath our wheels. A poorly maintained highway, on the other hand, reminds us of its existence with every pothole, rut, and bump we encounter.

Infrastructure requires our attention only when it is missing, inadequate, or damaged. And in computer vision, the infrastructure—or rather, what's missing from it—is what many people are concerned about right now.

Computing sets the standard for infrastructure

Underpinning every AI/ML project (including computer vision) are three basic development pillars: data, algorithms/models, and compute. Of these three, compute is by far the one with the most powerful and solid infrastructure. With decades of dedicated enterprise investment and development, cloud computing has become the gold standard for infrastructure across enterprise IT environments, and computer vision is no exception.

In the infrastructure-as-a-service model, developers have enjoyed on-demand, pay-as-you-go access to an ever-expanding pipeline of computing power for nearly 20 years. In that time, it has revolutionized enterprise IT by dramatically improving agility, cost efficiency, scalability, and more. With the advent of dedicated machine learning GPUs, it’s safe to say that this part of the computer vision infrastructure stack is alive and well. If we want to see computer vision and AI realize their full potential, it would be wise to use compute as the model on which the rest of the CV infrastructure stack is based.

The lineage and limitations of model-driven development

Until recently, algorithm and model development have been the driving force behind the development of computer vision and artificial intelligence. On both the research and commercial development side, teams have worked hard for years to test, patch, and incrementally improve AI/ML models, and share their progress in open source communities like Kaggle. The fields of computer vision and artificial intelligence made great progress in the first two decades of the new millennium by focusing their efforts on algorithm development and modeling.

In recent years, however, this progress has slowed because model-centric optimization is subject to the law of diminishing returns. Furthermore, model-centric approaches have several limitations. For example, retraining a model on the same data cannot be expected to keep improving it. Model-centric approaches also require more manual labor in terms of data cleaning, model validation, and training, which can take valuable time and resources away from more innovative, revenue-generating tasks.

Today, through communities like Hugging Face, CV teams have free and open access to a vast array of large, complex algorithms, models, and architectures, each supporting different core CV capabilities, from object recognition and facial landmark detection to pose estimation and feature matching. These assets have become as close to an "off-the-shelf" solution as one could imagine, providing computer vision and AI teams with a ready-made blank slate to train on any number of specialized tasks and use cases.

Just as basic human abilities like hand-eye coordination can be applied and trained on a variety of different skills, from playing table tennis to pitching, these modern ML algorithms can also be trained to perform a range of specific applications. However, while humans become specialized through years of practice and sweat, machines do this through training on data.

Data-Centric Artificial Intelligence and Big Data Bottlenecks

This has prompted many leading figures in the field of artificial intelligence to call for a new era of deep learning development, an era in which the main engine of progress is data. Just a few years ago, Andrew Ng and others announced that data-centricity was the direction of AI development. In the short time since, the industry has flourished: a plethora of novel commercial applications and use cases for computer vision have emerged, spanning a wide range of industries, from robotics and AR/VR to automotive manufacturing and home security.

Recently, we conducted research on hand-on-steering-wheel detection in cars using a data-centric approach. Our experiments show that by using this approach and synthetic data we are able to identify and generate specific edge cases that are lacking in the training dataset.

Synthetic images generated by Datagen for the hand-on-steering-wheel test (Image courtesy of Datagen)
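One way to picture how missing edge cases might be identified is to count how often each combination of scene attributes appears in the training metadata and flag the combinations that are rare or absent. The sketch below is only an illustration of that idea, not the method used in the research described above; the attribute names, their values, and the `min_count` threshold are all hypothetical.

```python
from collections import Counter
from itertools import product

# Hypothetical attribute values for a hand-on-steering-wheel dataset.
ATTRIBUTES = {
    "lighting": ["day", "night"],
    "hands_on_wheel": ["none", "one", "both"],
    "occlusion": ["clear", "holding_object"],
}


def find_coverage_gaps(samples, attributes, min_count):
    """Return attribute combinations that appear fewer than min_count times."""
    keys = list(attributes)
    counts = Counter(tuple(sample[k] for k in keys) for sample in samples)
    gaps = {}
    for combo in product(*(attributes[k] for k in keys)):
        if counts[combo] < min_count:
            gaps[combo] = counts[combo]
    return gaps


# Toy metadata: common daytime scenes dominate, night scenes are almost absent.
samples = (
    [{"lighting": "day", "hands_on_wheel": "both", "occlusion": "clear"}] * 50
    + [{"lighting": "day", "hands_on_wheel": "one", "occlusion": "clear"}] * 20
    + [{"lighting": "night", "hands_on_wheel": "one", "occlusion": "holding_object"}] * 2
)

gaps = find_coverage_gaps(samples, ATTRIBUTES, min_count=10)
for combo, count in sorted(gaps.items()):
    print(combo, count)
```

Each flagged combination is a candidate edge case that a synthetic data generator could be asked to produce.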

While the computer vision industry is buzzing about data, not all of the buzz is positive. The field has established that data is the way forward, but there are many obstacles and pitfalls along the way, many of which have already hobbled CV teams. A recent survey of U.S. computer vision professionals revealed that the field is plagued by long project delays, non-standardized processes, and resource shortages, all of which stem from data. In the same survey, 99% of respondents stated that at least one CV project has been canceled indefinitely due to insufficient training data.

Even the lucky 1% who have avoided project cancellation so far cannot avoid project delays. In the survey, every respondent reported experiencing significant project delays due to inadequate or insufficient training data, with 80% reporting delays lasting three months or more. Ultimately, the purpose of infrastructure is one of utility: to facilitate, accelerate, or communicate. In a world where severe delays are just part of doing business, it's clear that some vital infrastructure is missing.

The infrastructure challenges of traditional training data

However, unlike compute and algorithms, the third pillar of AI/ML development does not lend itself to being turned into infrastructure, especially in the field of computer vision, where data is large, disorganized, and both time and resource intensive to collect and manage. While there are many labeled, freely available databases of visual training data online (such as the now famous ImageNet database), they have proven insufficient by themselves as a source of training data in commercial CV development.

This is because, unlike models that generalize by design, training data is by its very nature application-specific. Data is what distinguishes one application of a given model from another, and therefore must be unique not only to a specific task, but also to the environment or context in which that task is performed. Unlike computing power, which can be generated and accessed at the speed of light, traditional visual data must be created or collected by humans (by taking photos in the field or searching the Internet for suitable images), and then painstakingly cleaned and labeled by humans (a process prone to human error, inconsistency, and bias).

This raises the question, "How can we make visual data that is both suitable for a specific application and easily commoditized (i.e., fast, cheap, and versatile)?" These two qualities may seem contradictory, but a potential solution has emerged that shows great promise as a way to reconcile them.

Path to synthetic data and full CV stack

The only way to make visual training data that is application-specific and saves time and resources at scale is to use synthetic data. For those unfamiliar with the concept, synthetic data is artificially generated information designed to faithfully represent some real-world equivalent. In terms of visual synthetic data, this means realistic computer-generated 3D imagery (CGI) in the form of still images or videos. In response to many of the issues arising in the data-centric era, a burgeoning industry has begun to form around synthetic data generation: a growing ecosystem of small and mid-sized startups offering a variety of solutions that leverage synthetic data to solve the pain points listed above. The most promising of these solutions use AI/ML algorithms to generate photorealistic 3D images and automatically generate associated ground truth (i.e., metadata) for each data point. Synthetic data therefore eliminates the often months-long manual labeling and annotation process, while also reducing the scope for human error and bias.

In our paper (published at NeurIPS 2021), Discovering group bias in facial landmark detection using synthetic data, we note that to analyze the performance of a trained model and identify its weaknesses, a subset of the data must be set aside for testing. The test set must be large enough to detect statistically significant deviations with respect to all relevant subgroups within the target population. This requirement can be difficult to meet, especially in data-intensive applications.

We propose to overcome this difficulty by generating synthetic test sets. We use the facial landmark detection task to validate our proposal by showing that all biases observed on real datasets can also be seen on well-designed synthetic datasets. This shows that synthetic test sets can effectively detect model weaknesses and overcome limitations in size or diversity of real test sets.
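To give a rough sense of why real test sets often fall short of this requirement, the sketch below estimates how many test samples each subgroup would need before a given gap in error rate becomes statistically detectable. It uses the standard normal-approximation sample-size formula for comparing two proportions; the error rates, significance level, and power are illustrative assumptions and are not figures from the paper.

```python
import math
from statistics import NormalDist


def min_samples_per_group(p1, p2, alpha=0.05, power=0.8):
    """Approximate per-group sample size for a two-sided two-proportion z-test.

    p1, p2: assumed error rates of the two subgroups being compared.
    alpha:  significance level; power: probability of detecting the gap.
    """
    if p1 == p2:
        raise ValueError("the two error rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p1 - p2) ** 2)


# Hypothetical error rates: 10% for one subgroup, 15% for another.
n_large_gap = min_samples_per_group(0.10, 0.15)
# A smaller gap (10% vs. 12%) needs many more samples per subgroup.
n_small_gap = min_samples_per_group(0.10, 0.12)
print(n_large_gap, n_small_gap)
```

Because the required count applies to every subgroup being compared, rare subgroups quickly become the limiting factor in a collected test set, which is the gap a generated test set is meant to fill.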

Today, startups are providing enterprise CV teams with sophisticated self-service synthetic data generation platforms that mitigate bias and allow data collection to scale. These platforms allow enterprise CV teams to generate use case-specific training data on a metered, on-demand basis, bridging the gap between specificity and scale that keeps traditional data from functioning as infrastructure.
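The reason synthetic data needs no manual labeling can be shown with a deliberately tiny toy example: when the generator itself places an object in the scene, the ground truth is known by construction. The sketch below draws a filled rectangle on a blank grid and returns its bounding box as the label. It is a minimal illustration only; the function names are hypothetical and bear no relation to how any commercial platform renders photorealistic imagery.

```python
import random


def generate_sample(width, height, rng):
    """Draw one filled rectangle on a blank grid; return (image, bbox).

    bbox is (x_min, y_min, x_max, y_max), inclusive, known by construction.
    """
    box_w = rng.randint(2, width // 2)
    box_h = rng.randint(2, height // 2)
    x0 = rng.randint(0, width - box_w)
    y0 = rng.randint(0, height - box_h)
    image = [[0] * width for _ in range(height)]
    for y in range(y0, y0 + box_h):
        for x in range(x0, x0 + box_w):
            image[y][x] = 1
    return image, (x0, y0, x0 + box_w - 1, y0 + box_h - 1)


def bbox_from_pixels(image):
    """Recover the bounding box by scanning pixels, as an annotator would."""
    xs = [x for row in image for x, value in enumerate(row) if value]
    ys = [y for y, row in enumerate(image) if any(row)]
    return (min(xs), min(ys), max(xs), max(ys))


rng = random.Random(0)  # fixed seed so the example is repeatable
image, label = generate_sample(16, 12, rng)
print(label == bbox_from_pixels(image))
```

The label returned by the generator always matches what a pixel-by-pixel inspection would find, so no separate annotation pass is needed.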

New Hopes for Computer Vision’s So-Called “Data Managers”

There's no denying that this is an exciting time for the field of computer vision. But, as in any other changing field, these are challenging times. Great talent and brilliant minds rush into the field full of ideas and enthusiasm, only to find themselves held back by a lack of adequate data pipelines. The field is so mired in inefficiencies that data scientists today are known as "data managers." In a field where one in three organizations already struggles with a skills gap, we cannot afford to waste valuable human resources.

Synthetic data opens the door to a true training data infrastructure, one that may one day be as simple as turning on the tap for a glass of water or provisioning compute. This is sure to be a welcome refreshment for the data managers of the world.

The above is the detailed content of Data management has become the largest bottleneck in the development of artificial intelligence. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1387

1387

52

52

The difference between single-stage and dual-stage target detection algorithms

Jan 23, 2024 pm 01:48 PM

The difference between single-stage and dual-stage target detection algorithms

Jan 23, 2024 pm 01:48 PM

Object detection is an important task in the field of computer vision, used to identify objects in images or videos and locate their locations. This task is usually divided into two categories of algorithms, single-stage and two-stage, which differ in terms of accuracy and robustness. Single-stage target detection algorithm The single-stage target detection algorithm converts target detection into a classification problem. Its advantage is that it is fast and can complete the detection in just one step. However, due to oversimplification, the accuracy is usually not as good as the two-stage object detection algorithm. Common single-stage target detection algorithms include YOLO, SSD and FasterR-CNN. These algorithms generally take the entire image as input and run a classifier to identify the target object. Unlike traditional two-stage target detection algorithms, they do not need to define areas in advance, but directly predict

Application of AI technology in image super-resolution reconstruction

Jan 23, 2024 am 08:06 AM

Application of AI technology in image super-resolution reconstruction

Jan 23, 2024 am 08:06 AM

Super-resolution image reconstruction is the process of generating high-resolution images from low-resolution images using deep learning techniques, such as convolutional neural networks (CNN) and generative adversarial networks (GAN). The goal of this method is to improve the quality and detail of images by converting low-resolution images into high-resolution images. This technology has wide applications in many fields, such as medical imaging, surveillance cameras, satellite images, etc. Through super-resolution image reconstruction, we can obtain clearer and more detailed images, which helps to more accurately analyze and identify targets and features in images. Reconstruction methods Super-resolution image reconstruction methods can generally be divided into two categories: interpolation-based methods and deep learning-based methods. 1) Interpolation-based method Super-resolution image reconstruction based on interpolation

How to use AI technology to restore old photos (with examples and code analysis)

Jan 24, 2024 pm 09:57 PM

How to use AI technology to restore old photos (with examples and code analysis)

Jan 24, 2024 pm 09:57 PM

Old photo restoration is a method of using artificial intelligence technology to repair, enhance and improve old photos. Using computer vision and machine learning algorithms, the technology can automatically identify and repair damage and flaws in old photos, making them look clearer, more natural and more realistic. The technical principles of old photo restoration mainly include the following aspects: 1. Image denoising and enhancement. When restoring old photos, they need to be denoised and enhanced first. Image processing algorithms and filters, such as mean filtering, Gaussian filtering, bilateral filtering, etc., can be used to solve noise and color spots problems, thereby improving the quality of photos. 2. Image restoration and repair In old photos, there may be some defects and damage, such as scratches, cracks, fading, etc. These problems can be solved by image restoration and repair algorithms

Scale Invariant Features (SIFT) algorithm

Jan 22, 2024 pm 05:09 PM

Scale Invariant Features (SIFT) algorithm

Jan 22, 2024 pm 05:09 PM

The Scale Invariant Feature Transform (SIFT) algorithm is a feature extraction algorithm used in the fields of image processing and computer vision. This algorithm was proposed in 1999 to improve object recognition and matching performance in computer vision systems. The SIFT algorithm is robust and accurate and is widely used in image recognition, three-dimensional reconstruction, target detection, video tracking and other fields. It achieves scale invariance by detecting key points in multiple scale spaces and extracting local feature descriptors around the key points. The main steps of the SIFT algorithm include scale space construction, key point detection, key point positioning, direction assignment and feature descriptor generation. Through these steps, the SIFT algorithm can extract robust and unique features, thereby achieving efficient image processing.

An introduction to image annotation methods and common application scenarios

Jan 22, 2024 pm 07:57 PM

An introduction to image annotation methods and common application scenarios

Jan 22, 2024 pm 07:57 PM

In the fields of machine learning and computer vision, image annotation is the process of applying human annotations to image data sets. Image annotation methods can be mainly divided into two categories: manual annotation and automatic annotation. Manual annotation means that human annotators annotate images through manual operations. This method requires human annotators to have professional knowledge and experience and be able to accurately identify and annotate target objects, scenes, or features in images. The advantage of manual annotation is that the annotation results are reliable and accurate, but the disadvantage is that it is time-consuming and costly. Automatic annotation refers to the method of using computer programs to automatically annotate images. This method uses machine learning and computer vision technology to achieve automatic annotation by training models. The advantages of automatic labeling are fast speed and low cost, but the disadvantage is that the labeling results may not be accurate.

Interpretation of the concept of target tracking in computer vision

Jan 24, 2024 pm 03:18 PM

Interpretation of the concept of target tracking in computer vision

Jan 24, 2024 pm 03:18 PM

Object tracking is an important task in computer vision and is widely used in traffic monitoring, robotics, medical imaging, automatic vehicle tracking and other fields. It uses deep learning methods to predict or estimate the position of the target object in each consecutive frame in the video after determining the initial position of the target object. Object tracking has a wide range of applications in real life and is of great significance in the field of computer vision. Object tracking usually involves the process of object detection. The following is a brief overview of the object tracking steps: 1. Object detection, where the algorithm classifies and detects objects by creating bounding boxes around them. 2. Assign a unique identification (ID) to each object. 3. Track the movement of detected objects in frames while storing relevant information. Types of Target Tracking Targets

Examples of practical applications of the combination of shallow features and deep features

Jan 22, 2024 pm 05:00 PM

Examples of practical applications of the combination of shallow features and deep features

Jan 22, 2024 pm 05:00 PM

Deep learning has achieved great success in the field of computer vision, and one of the important advances is the use of deep convolutional neural networks (CNN) for image classification. However, deep CNNs usually require large amounts of labeled data and computing resources. In order to reduce the demand for computational resources and labeled data, researchers began to study how to fuse shallow features and deep features to improve image classification performance. This fusion method can take advantage of the high computational efficiency of shallow features and the strong representation ability of deep features. By combining the two, computational costs and data labeling requirements can be reduced while maintaining high classification accuracy. This method is particularly important for application scenarios where the amount of data is small or computing resources are limited. By in-depth study of the fusion methods of shallow features and deep features, we can further

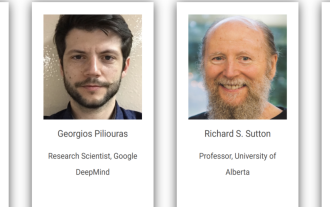

Distributed Artificial Intelligence Conference DAI 2024 Call for Papers: Agent Day, Richard Sutton, the father of reinforcement learning, will attend! Yan Shuicheng, Sergey Levine and DeepMind scientists will give keynote speeches

Aug 22, 2024 pm 08:02 PM

Distributed Artificial Intelligence Conference DAI 2024 Call for Papers: Agent Day, Richard Sutton, the father of reinforcement learning, will attend! Yan Shuicheng, Sergey Levine and DeepMind scientists will give keynote speeches

Aug 22, 2024 pm 08:02 PM

Conference Introduction With the rapid development of science and technology, artificial intelligence has become an important force in promoting social progress. In this era, we are fortunate to witness and participate in the innovation and application of Distributed Artificial Intelligence (DAI). Distributed artificial intelligence is an important branch of the field of artificial intelligence, which has attracted more and more attention in recent years. Agents based on large language models (LLM) have suddenly emerged. By combining the powerful language understanding and generation capabilities of large models, they have shown great potential in natural language interaction, knowledge reasoning, task planning, etc. AIAgent is taking over the big language model and has become a hot topic in the current AI circle. Au