Interpretation of Microsoft's latest HuggingGPT paper: what did you learn?

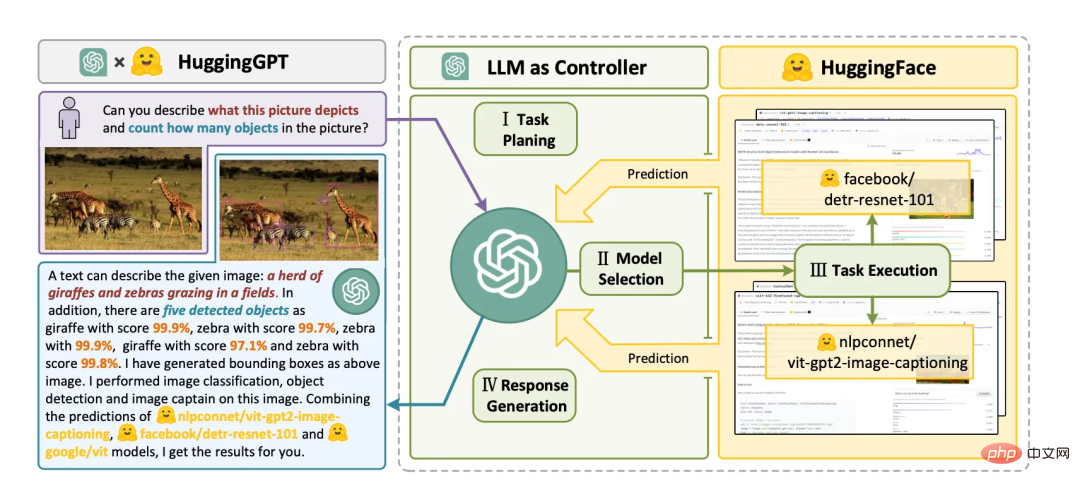

Microsoft recently published a paper on HuggingGPT, "HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face" [1]. This article is an interpretation of that paper.

Who are its "friends"? From the paper, they are the large language models represented by GPT-4 together with a variety of expert models. Here, "expert model" is used in contrast to a general-purpose model: it is a model built for a specific domain, for example medicine or finance.

Hugging Face is an open-source machine learning community and platform.

The following questions and answers give a quick overview of the paper's main content.

- What is the idea behind HuggingGPT and how does it work?

- The idea behind HuggingGPT is to use a large language model (LLM) as a controller that manages AI models in order to solve complex AI tasks. HuggingGPT leverages the LLM's strengths in understanding and reasoning to analyze user requests and decompose them into multiple subtasks. Then, based on the expert models' descriptions, it assigns the most suitable model to each subtask and integrates the results from the different models. The workflow has four stages: task planning, model selection, task execution, and response generation. (Pages 4 and 16)

- How does HuggingGPT use language as a common interface to enhance AI models?

- HuggingGPT uses language as a common interface by having a large language model (LLM) act as the controller that manages AI models. The LLM understands and reasons about the user's natural-language request and decomposes it into multiple subtasks. Based on the expert models' descriptions, HuggingGPT assigns the most suitable model to each subtask and integrates the results of the different models. This approach lets HuggingGPT cover complex AI tasks across many modalities and domains, including language, vision, and speech, as well as other challenging tasks. (Pages 1 and 16)

- How does HuggingGPT use large language models to manage existing AI models?

- HuggingGPT uses a large language model as the interface that routes user requests to expert models, effectively combining the LLM's language understanding with the expertise of the other models. The LLM acts as the brain for planning and decision-making, while the smaller models act as executors of each specific task. This collaboration protocol between models offers a new way to design general AI models. (Pages 3-4)

- What kind of complex AI tasks can HuggingGPT solve?

- HuggingGPT can solve a wide range of tasks across modalities such as language, images, audio, and video, in forms including detection, generation, classification, and question answering. The paper gives examples of 24 tasks HuggingGPT can solve, including text classification, object detection, semantic segmentation, image generation, question answering, text-to-speech, and text-to-video. (Page 3)

- Can HuggingGPT be used with different types of AI models, or is it limited to specific models?

- HuggingGPT is not limited to specific AI models or to visual perception tasks. It can handle tasks in any modality or domain by using the large language model to organize cooperation between models. Under the LLM's planning, the task process can be specified effectively and more complex problems can be solved. HuggingGPT takes a more open approach, assigning and organizing tasks according to model descriptions. (Page 4)
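The description-based assignment above can be pictured as a small candidate filter. As we read the paper's model-selection stage, models are first filtered by task type, ranked by their Hugging Face download counts, and the top few are handed to the LLM together with their descriptions so it can pick one. The sketch below illustrates only the filter-and-describe step under those assumptions; `ModelCard`, `candidate_models`, `describe_candidates`, and all model ids are hypothetical, and the final LLM choice is left out.

```python
from dataclasses import dataclass


@dataclass
class ModelCard:
    model_id: str     # hypothetical ids, not real Hugging Face models
    task: str         # task type, e.g. "object-detection"
    downloads: int    # popularity signal used for ranking
    description: str  # text the controller LLM would read


def candidate_models(cards, task, k=2):
    """Keep models whose task type matches, rank by downloads, return the top k."""
    matching = [c for c in cards if c.task == task]
    matching.sort(key=lambda c: c.downloads, reverse=True)
    return matching[:k]


def describe_candidates(candidates):
    """Format candidate descriptions as plain text for the controller LLM's prompt."""
    return "\n".join(f"{c.model_id}: {c.description}" for c in candidates)


CARDS = [
    ModelCard("example/detector-a", "object-detection", 5000, "General object detector."),
    ModelCard("example/detector-b", "object-detection", 12000, "Detector trained on street scenes."),
    ModelCard("example/detector-c", "object-detection", 800, "Small detector for edge devices."),
    ModelCard("example/captioner", "image-to-text", 9000, "Image captioning model."),
]

top = candidate_models(CARDS, "object-detection")
print([c.model_id for c in top])  # ['example/detector-b', 'example/detector-a']
print(describe_candidates(top))
```

In this sketch, ranking by downloads simply keeps the candidate list (and hence the prompt) short; the choice among the remaining models would still be made by the LLM from the descriptions.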

HuggingGPT’s workflow includes four stages:

- Task planning: ChatGPT analyzes the user request, understands its intention, and decomposes it into solvable tasks.

- Model selection: to solve the planned tasks, ChatGPT selects AI models hosted on Hugging Face based on their descriptions.

- Task execution: each selected model is invoked and executed, and the results are returned to ChatGPT.

- Response generation: finally, ChatGPT integrates the predictions of all models and generates the response.
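The four stages can be tied together in a toy pipeline. This is a minimal sketch, not the paper's implementation: the plan is hard-coded instead of produced by ChatGPT, the "expert models" are plain Python functions keyed by task type, and the final response is a simple join rather than an LLM-written answer. The plan fields (`task`, `id`, `dep`, `args`) and the `<resource>-N` placeholder for a prior task's output follow the format we understand the paper's planning prompt to use; treat them as assumptions. The functions `execution_order`, `resolve_args`, `run_plan`, and `respond` are hypothetical names.

```python
import json
from collections import deque

# Hypothetical task plan (stage 1 output), hard-coded instead of coming from ChatGPT.
# "dep" lists prerequisite task ids (-1 = none); "<resource>-N" means "output of task N".
PLAN = json.loads("""[
  {"task": "image-to-text", "id": 0, "dep": [-1], "args": {"image": "example.jpg"}},
  {"task": "text-to-speech", "id": 1, "dep": [0], "args": {"text": "<resource>-0"}},
  {"task": "object-detection", "id": 2, "dep": [-1], "args": {"image": "example.jpg"}}
]""")

# Hypothetical expert models: plain functions standing in for Hugging Face models.
MODELS = {
    "image-to-text": lambda image: f"caption of {image}",
    "text-to-speech": lambda text: f"audio of '{text}'",
    "object-detection": lambda image: f"boxes in {image}",
}

PREFIX = "<resource>-"


def execution_order(plan):
    """Order task ids so every dependency runs first (Kahn's topological sort)."""
    deps = {t["id"]: [d for d in t["dep"] if d != -1] for t in plan}
    indegree = {tid: len(ds) for tid, ds in deps.items()}
    children = {tid: [] for tid in deps}
    for tid, ds in deps.items():
        for d in ds:
            children[d].append(tid)
    ready = deque(sorted(tid for tid, n in indegree.items() if n == 0))
    order = []
    while ready:
        tid = ready.popleft()
        order.append(tid)
        for child in children[tid]:
            indegree[child] -= 1
            if indegree[child] == 0:
                ready.append(child)
    if len(order) != len(plan):
        raise ValueError("task plan contains a dependency cycle")
    return order


def resolve_args(args, results):
    """Replace '<resource>-N' placeholders with the output of task N."""
    return {
        key: results[int(value[len(PREFIX):])]
        if isinstance(value, str) and value.startswith(PREFIX) else value
        for key, value in args.items()
    }


def run_plan(plan):
    """Stages 2-3: pick a model per task (here simply by task type) and execute it."""
    by_id = {t["id"]: t for t in plan}
    results = {}
    for tid in execution_order(plan):
        task = by_id[tid]
        model = MODELS[task["task"]]  # stand-in for LLM-driven model selection
        results[tid] = model(**resolve_args(task["args"], results))
    return results


def respond(results):
    """Stage 4 stand-in: the paper has ChatGPT write the answer; this just joins outputs."""
    return "; ".join(f"task {tid}: {out}" for tid, out in sorted(results.items()))


print(respond(run_plan(PLAN)))
```

Running it prints `task 0: caption of example.jpg; task 1: audio of 'caption of example.jpg'; task 2: boxes in example.jpg`. Task 1 runs only after task 0 has produced the caption it depends on, which is the role the dependency field plays in this sketch.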

References

[1] HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face: https://arxiv.org/pdf/2303.17580.pdf
