Java time complexity and space complexity example analysis

1. Algorithm efficiency

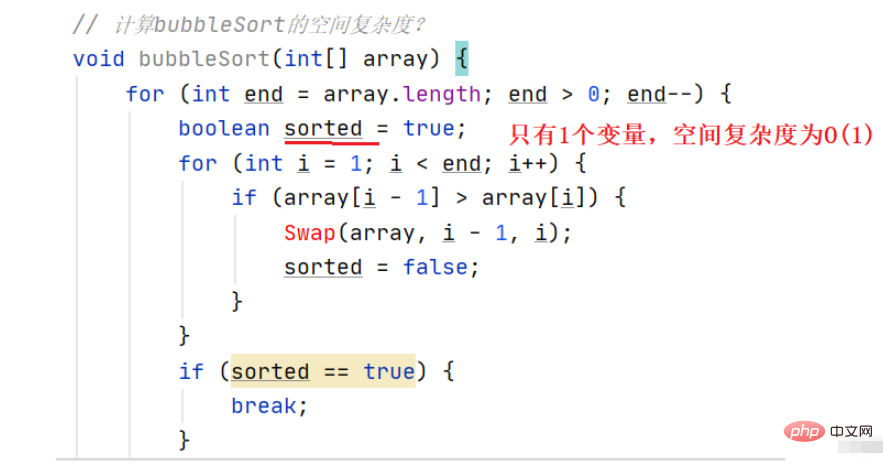

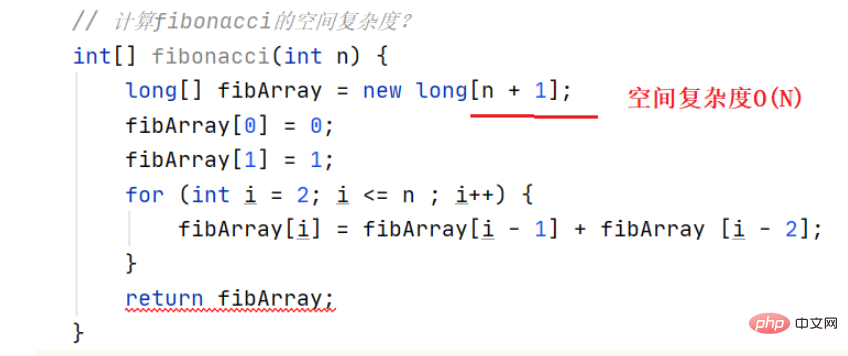

Algorithm efficiency analysis is divided into two types: time efficiency and space efficiency. Time efficiency is measured by time complexity, and space efficiency is measured by space complexity. Time complexity mainly measures how fast an algorithm runs, while space complexity mainly measures the extra space an algorithm requires. In the early days of computing, storage capacity was very small, so space complexity mattered a great deal. After the rapid development of the computer industry, storage capacity has grown very large, so the space complexity of an algorithm usually needs less attention than its time complexity.

2. Time complexity

1. Concept of time complexity

The time an algorithm takes is proportional to the number of times its statements are executed. The number of times the basic operations in the algorithm are executed is the time complexity of the algorithm. In other words, to determine the time complexity of a piece of code, we mainly find the statement that is executed most often and count how many times it is executed.
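As a minimal sketch of this idea, the hypothetical class below (`OperationCount` and `countNested` are illustrative names, not taken from the article) counts how many times the most frequently executed statement of a nested loop runs.

```java
// Hypothetical example: count how often the most-executed statement runs.
class OperationCount {

    // Returns how many times the basic operation (count++) is executed.
    static long countNested(int n) {
        long count = 0;
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                count++; // basic operation: executed n * n times in total
            }
        }
        return count;
    }

    public static void main(String[] args) {
        for (int n : new int[] {10, 100, 1000}) {
            System.out.println("n = " + n + ", executions = " + countNested(n));
        }
    }
}
```

The inner statement dominates the running time, so its execution count, n * n, is what the time complexity describes.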

2. Big O asymptotic notation

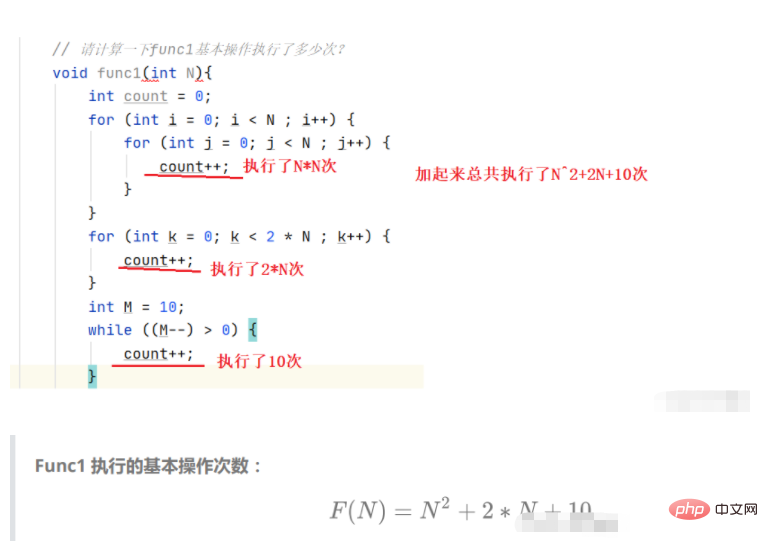

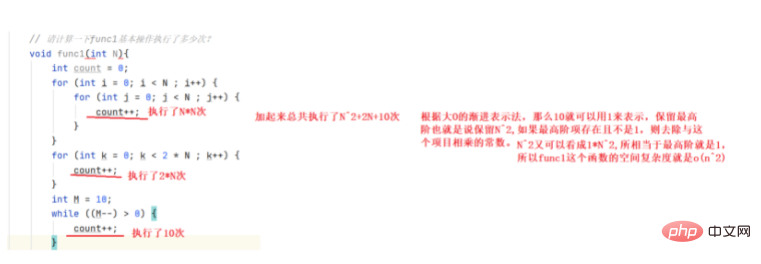

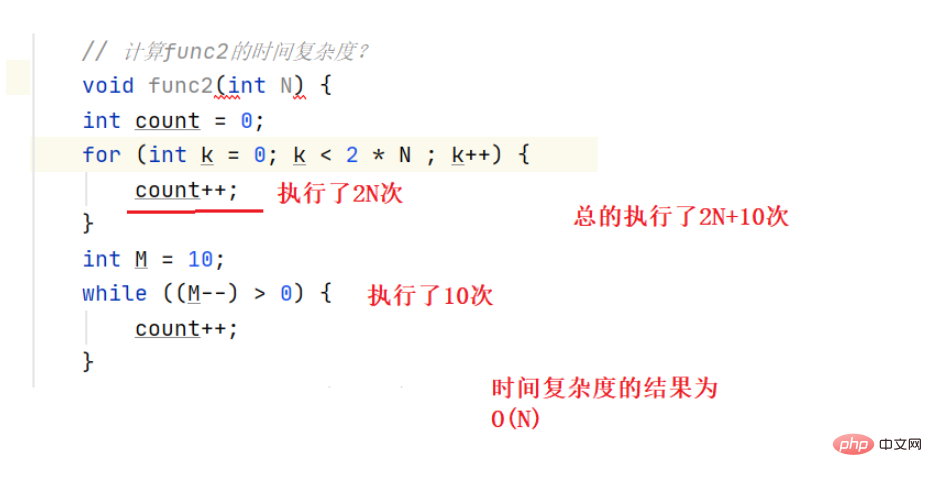

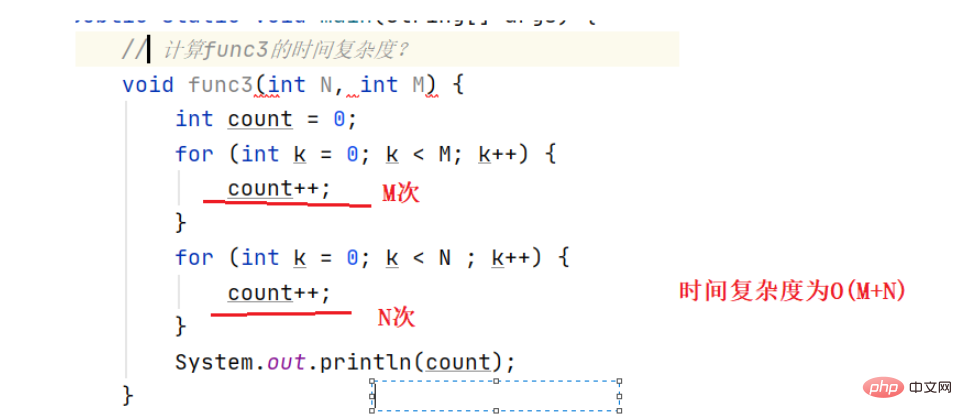

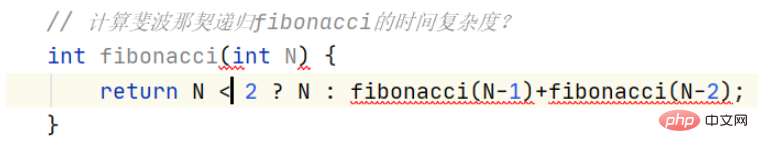

Analysis of the figures above:

As the value of N grows larger and larger, the contribution of the 2N term and the constant 10 to the result can be ignored.

In fact, when we calculate time complexity we do not need the exact number of executions, only the approximate number, so here we use Big O asymptotic notation.

Big O notation: a mathematical notation used to describe the asymptotic behavior of a function. The Big O order is derived with the following rules:

1. Replace all additive constants in the running time with the constant 1.

2. In the modified running-time function, keep only the highest-order term.

3. If the highest-order term exists and is not the constant 1, remove the constant coefficient multiplied by this term. The result is the Big O order.

As the rules above show, Big O asymptotic notation drops the terms that have little impact on the result and expresses the number of executions concisely and clearly.
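To see why the lower-order terms can be dropped, the sketch below assumes an execution-count function of the form F(N) = N^2 + 2N + 10. The figures are not reproduced here, so this exact form is an assumption chosen to match the 2N and 10 terms mentioned earlier. The class and method names are hypothetical.

```java
// Hypothetical example: compare an exact execution count with its
// highest-order term as N grows.
class BigOTerms {

    // Assumed exact count: F(N) = N^2 + 2N + 10 (illustrative only).
    static long exactCount(long n) {
        return n * n + 2 * n + 10;
    }

    // The only term the Big O rules keep: N^2.
    static long dominantTerm(long n) {
        return n * n;
    }

    // Ratio of the exact count to the dominant term; approaches 1 as N grows.
    static double ratio(long n) {
        return (double) exactCount(n) / dominantTerm(n);
    }

    public static void main(String[] args) {
        for (long n : new long[] {10, 100, 1000, 10000}) {
            System.out.println("N = " + n
                    + ", F(N) = " + exactCount(n)
                    + ", F(N) / N^2 = " + ratio(n));
        }
    }
}
```

Under this assumption the ratio moves steadily toward 1 as N grows, which is why the function would be written as O(N^2).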

In addition, the time complexity of some algorithms has best, average and worst cases:

Worst case: the maximum number of runs (upper bound) for any input size

Average case: the expected number of runs for any input size

Best case: the minimum number of runs (lower bound) for any input size

For example: search for a value x in an array of length N

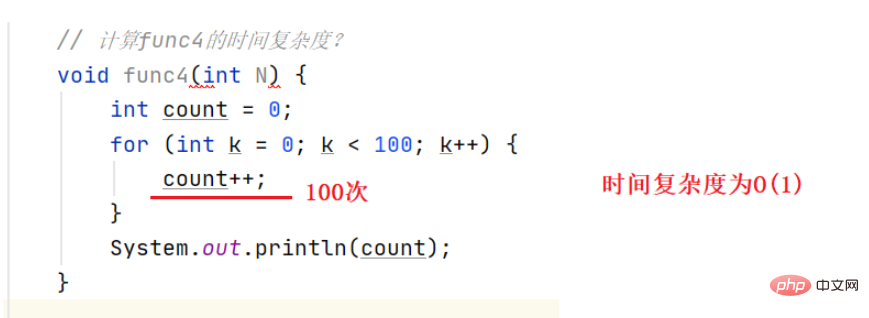

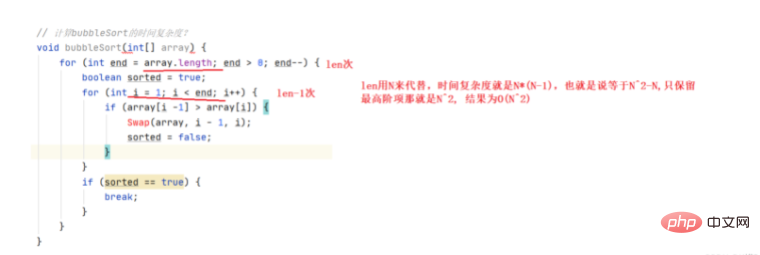
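A search of this kind can be sketched as a simple linear scan. The code below is an illustrative assumption, not the code from the figures. It returns the number of comparisons performed, so the cost for different positions of x can be observed directly.

```java
// Hypothetical example: linear search that reports how many comparisons it made.
class LinearSearch {

    // Scans data from left to right and stops at the first match.
    // Returns the number of elements compared against x.
    static int comparisons(int[] data, int x) {
        int count = 0;
        for (int value : data) {
            count++;            // one comparison per visited element
            if (value == x) {
                break;          // found: stop scanning
            }
        }
        return count;
    }

    public static void main(String[] args) {
        int[] data = {7, 3, 9, 4};
        System.out.println("x is first:  " + comparisons(data, 7));
        System.out.println("x is last:   " + comparisons(data, 4));
        System.out.println("x is absent: " + comparisons(data, 42));
    }
}
```

When x is the first element only one comparison is needed, while a value at the end of the array, or one that is not present at all, forces a scan of all N elements.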

Best case: found after 1 comparison

Worst case: found after N comparisons

Average case: found after about N/2 comparisons

In practice, we generally care about the worst case of an algorithm, so the time complexity of searching for a value in an array is O(N).

Time complexity calculation, Example 1:

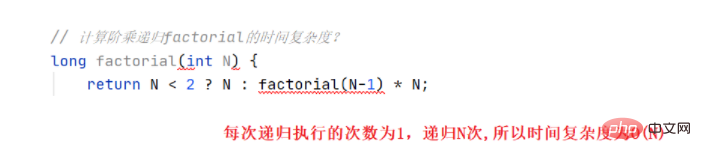

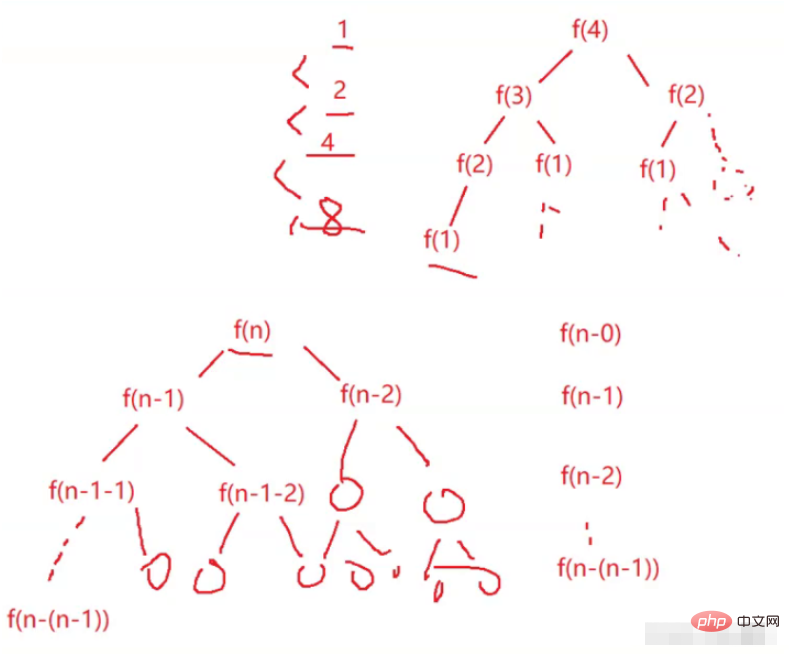

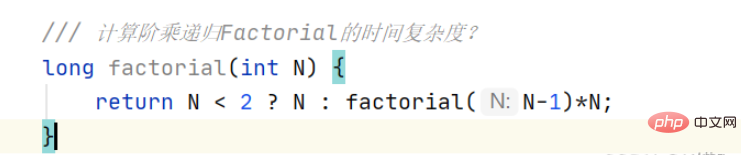

Calculation and analysis show that the basic operation, the recursive call, is executed on the order of 2^N times, so the time complexity is O(2^N).
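The figures for this example are not reproduced here. As a sketch, assume the function is a naive doubly recursive one such as Fibonacci, a common example with this kind of bound. This is an assumption, and the names below are hypothetical. The code counts the recursive calls and prints them next to 2^N.

```java
// Hypothetical example: count the calls made by a naive recursive Fibonacci.
class FibCalls {

    static long calls = 0; // number of times fib has been entered

    // Naive recursion: each call with n >= 2 spawns two further calls.
    static long fib(int n) {
        calls++;
        return n < 2 ? n : fib(n - 1) + fib(n - 2);
    }

    // Resets the counter, runs fib(n), and returns the number of calls made.
    static long countCalls(int n) {
        calls = 0;
        fib(n);
        return calls;
    }

    public static void main(String[] args) {
        for (int n : new int[] {5, 10, 20}) {
            System.out.println("N = " + n
                    + ", calls = " + countCalls(n)
                    + ", 2^N = " + (1L << n));
        }
    }
}
```

The measured call count stays below 2^N, so 2^N serves as an upper bound on the number of recursive calls, which is how the O(2^N) result is usually stated.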

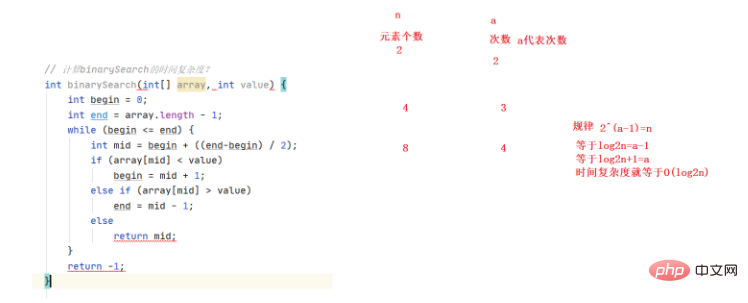

Rule:
