In February this year, Machine Heart reported that Fudan University had launched a Chinese counterpart to ChatGPT (see "Fudan Releases a Chinese ChatGPT: MOSS Opens for Testing, Tops Trending Searches, Servers Overwhelmed"), which attracted widespread attention. At the time, Professor Qiu Xipeng said that MOSS would be open-sourced in April.

Yesterday, the open-source version of MOSS finally arrived.

## Project address: https://github.com/OpenLMLab/MOSS

MOSS is an open-source conversational language model with about 16 billion parameters. It supports both Chinese and English as well as multiple plug-ins, although its parameter count is much smaller than ChatGPT's. After v0.0.2, the team continued to refine the model and released MOSS v0.0.3, the current open-source version, which brings many functional updates over earlier versions.

In early testing, MOSS's basic functions were similar to ChatGPT's: it can complete a variety of natural language processing tasks according to user instructions, including text generation, text summarization, translation, code generation, and small talk.

After the open beta, the team continued to add Chinese corpus to pre-training: "So far, the base language model of MOSS 003 has been trained on 100B Chinese tokens. The total number of training tokens has reached 700B, including about 300B tokens of code."

"After the open beta, we also collected some user data. We found that the intentions of real Chinese-speaking users are fairly close to the user prompt distribution disclosed in the OpenAI InstructGPT paper, so we used these real user prompts as seeds to regenerate approximately 1.1 million regular conversations, covering more fine-grained helpfulness data and broader harmlessness data."

Content source: https://www.zhihu.com/question/596908242/answer/2994534005

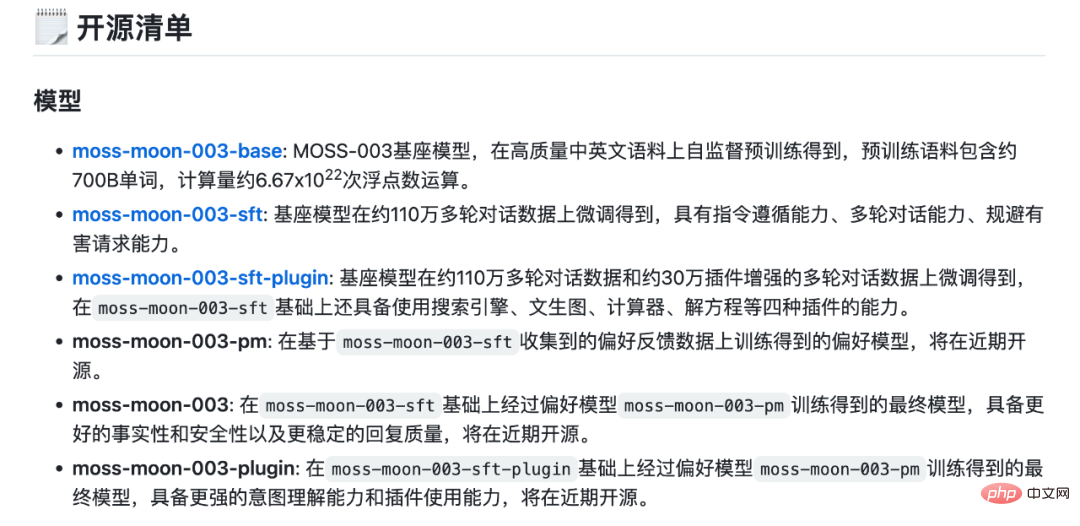

The team has currently uploaded three models, moss-moon-003-base, moss-moon-003-sft, and moss-moon-003-sft-plugin, to Hugging Face, and three more models will be open-sourced in the future.

The team also stated that, because of the relatively small number of model parameters and the autoregressive generation paradigm, MOSS may still generate misleading replies containing factual errors, or harmful content containing bias or discrimination. Users should carefully screen content generated by MOSS before using it and should not spread harmful MOSS-generated content on the Internet.

New capabilities

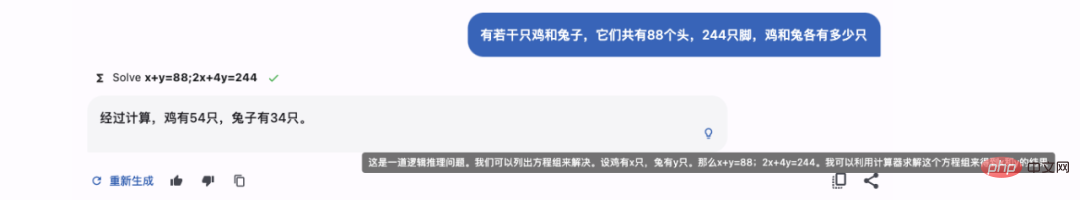

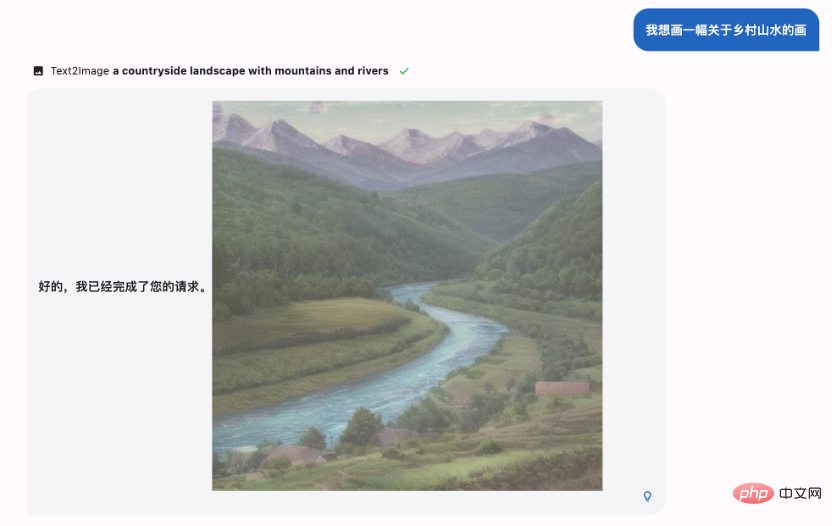

In MOSS v0.0.3, the team added a number of new capabilities. The team constructed about 300,000 plug-in-augmented conversations covering a search engine, text-to-image generation, a calculator, an equation solver, and more. The team will later announce on GitHub how to use the plug-in version of MOSS.

MOSS v0.0.3 can now use multiple plug-ins.

The following figure shows MOSS calling a search engine:

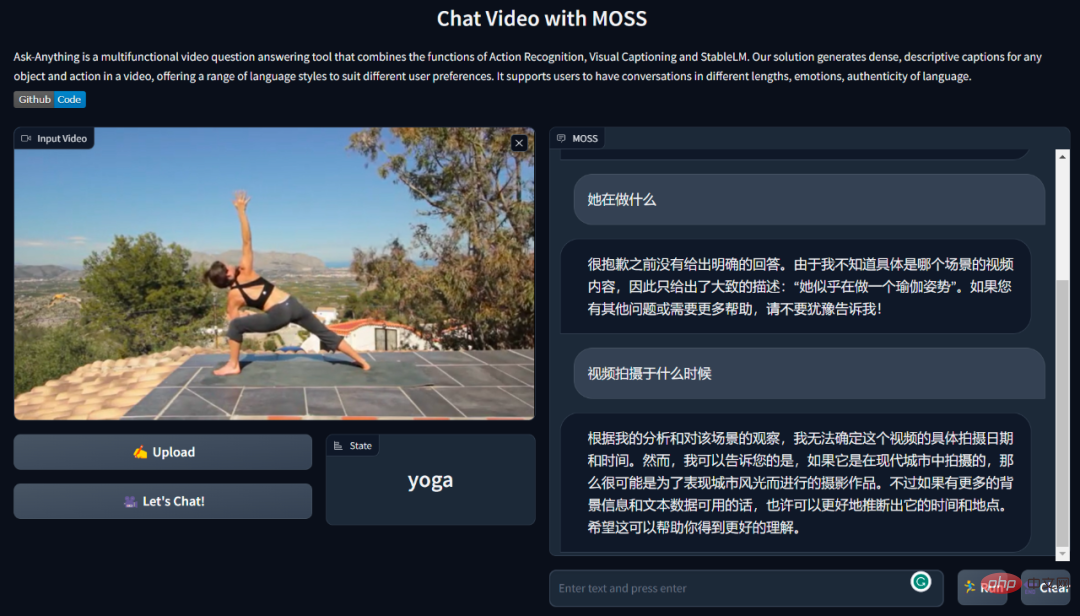

Project author Sun Tianxiang added that MOSS 003's plug-in activation is controlled through a meta instruction, similar to the system prompt in gpt-3.5-turbo. "Because it is controlled by the model, a 100% control rate cannot be guaranteed, and there are still some defects, such as inaccurate invocation when multiple plug-ins are selected and plug-ins conflicting with each other. We are developing new models as soon as possible to alleviate these problems."

According to the license agreement, the open-source MOSS can be used for commercial purposes.

In addition, MOSS services may be offered to developers through an API: the team will consider providing an API interface depending on the current service load. For the interface format, see: https://github.com/OpenLMLab/MOSS/blob/main/moss_api.pdf

Some developers are already building on the open-source release, for example for video question answering with VideoChat. VideoChat is a multi-functional video Q&A tool that combines action recognition, visual captioning, and StableLM. It generates dense, descriptive captions for every object and action in a video and offers a range of language styles to suit different user preferences. Users can hold conversations of varying lengths, moods, and degrees of linguistic authenticity.

## Project address: https://github.com/OpenGVLab/Ask-Anything/tree/main/video_chat_with_MOSS

Download and install

Download the contents of this repository to a local or remote server:

git clone https://github.com/OpenLMLab/MOSS.git
cd MOSS

Create a conda environment:

conda create --name moss python=3.8

conda activate moss

Install the dependencies:

pip install -r requirements.txt

The torch and transformers versions should not be lower than the recommended versions.
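Sun Tianxiang's remark that plug-ins are switched on and off through a meta instruction can be illustrated with a minimal Python sketch that assembles such a prompt as plain text. Everything specific here is an assumption for illustration, not MOSS's official prompt: the abbreviated meta-instruction wording, the plug-in names and API hints (`Search`, `Calculate`, `Solve`, `Text2Image`), and the turn markers `<|Human|>`, `<eoh>`, `<|MOSS|>`, `<eom>`. Check the repository for the exact format.

```python
from typing import Dict, List, Tuple

# Hypothetical, abbreviated meta instruction. The real MOSS 003 text is longer;
# copy the exact wording from the project repository before relying on this.
BASE_META_INSTRUCTION = (
    "You are an AI assistant whose name is MOSS.\n"
    "- MOSS is a conversational language model developed by Fudan University.\n"
)

# Illustrative plug-in names and API hints (assumptions), modeled on the tools
# the article lists: search engine, calculator, equation solver, text-to-image.
PLUGIN_APIS: Dict[str, str] = {
    "Web search": "Search(query)",
    "Calculator": "Calculate(expression)",
    "Equation solver": "Solve(equation)",
    "Text-to-image": "Text2Image(description)",
}


def plugin_switches(enabled: List[str]) -> str:
    """Render one 'enabled' or 'disabled' line for every known plug-in."""
    unknown = set(enabled) - set(PLUGIN_APIS)
    if unknown:
        raise ValueError(f"unknown plug-ins: {sorted(unknown)}")
    lines = []
    for name, api in PLUGIN_APIS.items():
        if name in enabled:
            lines.append(f"- {name}: enabled. API: {api}")
        else:
            lines.append(f"- {name}: disabled.")
    return "\n".join(lines) + "\n"


def build_prompt(history: List[Tuple[str, str]], user_msg: str,
                 enabled_plugins: List[str]) -> str:
    """Concatenate meta instruction, plug-in switches and dialogue turns.

    The turn markers (<|Human|>, <eoh>, <|MOSS|>, <eom>) reflect our reading of
    the MOSS README and should be verified against the repository.
    """
    prompt = BASE_META_INSTRUCTION + plugin_switches(enabled_plugins)
    for human_turn, moss_turn in history:
        prompt += f"<|Human|>: {human_turn}<eoh>\n<|MOSS|>: {moss_turn}<eom>\n"
    # End with an open MOSS turn so the model generates the reply.
    prompt += f"<|Human|>: {user_msg}<eoh>\n<|MOSS|>:"
    return prompt


if __name__ == "__main__":
    print(build_prompt([], "What is the weather in Shanghai?", ["Web search"]))
```

Because the plug-in switches live entirely in the prompt text, nothing forces the model to obey them. This is consistent with the caveat above that a 100% control rate cannot be guaranteed. The resulting string is what would be passed to the model's tokenizer.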
