Technology peripherals

Technology peripherals

AI

AI

Using quantum entanglement as a GPS, precise positioning can be achieved even in areas with no signal

Using quantum entanglement as a GPS, precise positioning can be achieved even in areas with no signal

Using quantum entanglement as a GPS, precise positioning can be achieved even in areas with no signal

Quantum entanglement refers to a special coupling phenomenon that occurs between particles. In the entangled state, we cannot describe the properties of each particle individually, but can only describe the properties of the overall system. This influence does not disappear with the change of distance, even if the particles are separated by the entire universe.

A new study shows that using quantum entanglement mechanisms, sensors can be more accurate and faster at detecting motion. Scientists believe the findings could help develop navigation systems that do not rely on GPS.

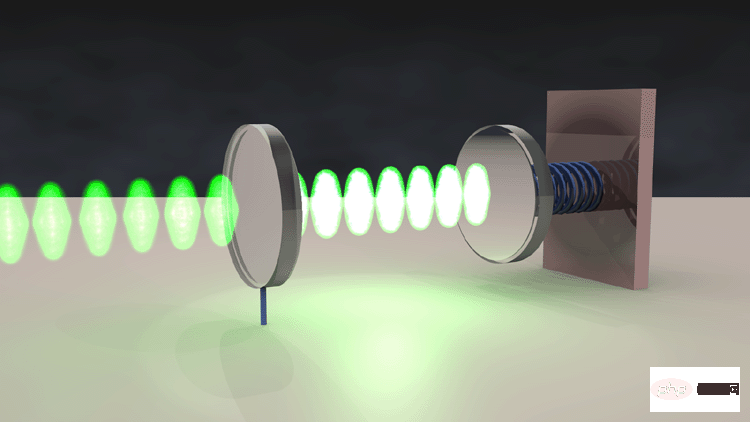

In a new study submitted in "Nature Photonics" by the University of Arizona and other institutions, researchers conducted experiments on optomechanical sensors, which use light beams to interfere with to respond. These sensors act as accelerometers, which smartphones can use to detect motion. On the other hand, accelerometers can also be used in inertial navigation systems in areas with poor GPS signals, such as underground, underwater, inside buildings, remote areas, and places where radio signals are interfered with.

The paper "Entanglement-enhanced optomechanical sensing":

##Paper link: https://www.nature.com/articles/s41566-023-01178-0

In order to improve photomechanical sensing To improve performance, researchers have tried using entanglement, which Einstein called "spooky action at a distance." Entangled particles are essentially in sync, no matter how far apart they are.

The researchers hope to have a prototype entangled accelerometer chip within the next two years.

Although quantum entanglement ignores distance, it is also extremely susceptible to external interference. Quantum sensors exploit this sensitivity to help detect the slightest disturbance in the surrounding environment.

"Our previous research on quantum-enhanced optomechanical sensing has mainly focused on improving the sensitivity of a single sensor," said lead author of the study, Quantum Physics at the University of Arizona, Tucson. Scientist Yi Xia said. "However, recent theoretical and experimental studies have shown that entanglement can greatly improve the sensitivity between multiple sensors, an approach known as distributed quantum sensing."

Optomechanics The sensor's mechanism relies on two synchronized laser beams. A beam of light is reflected by a component called an oscillator, and any movement of the oscillator changes the distance the light travels on its way to the detector. Any such difference in distance traveled becomes apparent when the second beam overlaps the first. If the sensor is stationary, the two beams are perfectly aligned; if the sensor is moving, the overlapping light waves create an interference pattern that reveals the magnitude and speed of the sensor's movement.

In the new study, the sensor from Dal Wilson's group at the University of Arizona uses a membrane as an oscillator, which works very well. Like a drum head that vibrates after being struck.

Here, instead of shining one beam at one oscillator, the researchers split an infrared laser beam into two entangled beams, which reflected from the two oscillators to the two on a detector. This entangled nature of light essentially allows two sensors to analyze a single beam of light, working together to increase speed and accuracy.

"We can use entanglement to enhance the force-sensing performance of multiple optomechanical sensors," said the study's lead author Zheshen Zhang, a quantum physicist at the University of Michigan in Ann Arbor. .

In addition, in order to improve the accuracy of the device, the researchers used so-called "compressed light". Squeezing light takes advantage of a key principle of quantum physics: Heisenberg's Uncertainty Principle, which states that when a particle's position is determined, its momentum is completely uncertain; if its momentum is determined, its position is completely uncertain. Not sure at all. Squeezed light exploits this trade-off to “squeeze” or reduce the uncertainty in the measurement of a given variable — in this case, the phase of the waves that make up the laser beam — while increasing the uncertainty in the measurement of another variable, but the study Personnel can be ignored.

"We are one of the few teams that can create a compressed light source and are currently exploring it as the basis for the next generation of precision measurement technology," said Zheshen Zhang.

All in all, the scientists were able to collect measurements that were 40% more precise and 60% faster than using two unentangled beams. Furthermore, they say the accuracy and speed of this method are expected to increase as the number of sensors increases.

"These findings mean we can further improve the performance of ultra-precision force sensing to unprecedented levels," said Zheshen Zhang.

Researchers say that improving optomechanical sensors could not only lead to better inertial navigation systems, but also help detect mysterious phenomena such as dark matter and gravitational waves. Dark matter is an invisible substance thought to make up five-sixths of all matter in the universe, and detecting its possible gravitational effects can help scientists figure out its properties. Gravitational waves are ripples in the fabric of space-time that can help reveal mysteries from black holes to the Big Bang.

Next, the scientists plan to miniaturize their system. It is already possible to place compressed light sources on chips that are only half a centimeter wide. Within the next year or two we can expect to have prototype chips that include squeezed light sources, beam splitters, waveguides and inertial sensors. "This will make this technology more practical, more affordable, and more accessible," said Zheshen Zhang.

In addition, the research team is currently working with Honeywell, Jet Propulsion Laboratory, NIST and several other universities to develop a chip-scale quantum enhanced inertial measurement unit. "Our vision is to deploy such integrated sensors in autonomous vehicles and spacecraft to achieve precise navigation without GPS signals," said Zheshen Zhang.

The above is the detailed content of Using quantum entanglement as a GPS, precise positioning can be achieved even in areas with no signal. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1392

1392

52

52

36

36

110

110

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

Jan 14, 2024 pm 07:48 PM

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

Jan 14, 2024 pm 07:48 PM

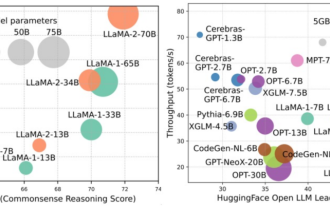

Large-scale language models (LLMs) have demonstrated compelling capabilities in many important tasks, including natural language understanding, language generation, and complex reasoning, and have had a profound impact on society. However, these outstanding capabilities require significant training resources (shown in the left image) and long inference times (shown in the right image). Therefore, researchers need to develop effective technical means to solve their efficiency problems. In addition, as can be seen from the right side of the figure, some efficient LLMs (LanguageModels) such as Mistral-7B have been successfully used in the design and deployment of LLMs. These efficient LLMs can significantly reduce inference memory while maintaining similar accuracy to LLaMA1-33B

The powerful combination of diffusion + super-resolution models, the technology behind Google's image generator Imagen

Apr 10, 2023 am 10:21 AM

The powerful combination of diffusion + super-resolution models, the technology behind Google's image generator Imagen

Apr 10, 2023 am 10:21 AM

In recent years, multimodal learning has received much attention, especially in the two directions of text-image synthesis and image-text contrastive learning. Some AI models have attracted widespread public attention due to their application in creative image generation and editing, such as the text image models DALL・E and DALL-E 2 launched by OpenAI, and NVIDIA's GauGAN and GauGAN2. Not to be outdone, Google released its own text-to-image model Imagen at the end of May, which seems to further expand the boundaries of caption-conditional image generation. Given just a description of a scene, Imagen can generate high-quality, high-resolution

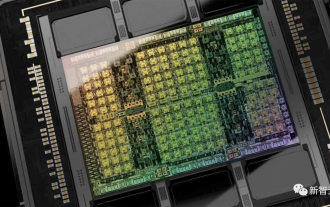

Crushing H100, Nvidia's next-generation GPU is revealed! The first 3nm multi-chip module design, unveiled in 2024

Sep 30, 2023 pm 12:49 PM

Crushing H100, Nvidia's next-generation GPU is revealed! The first 3nm multi-chip module design, unveiled in 2024

Sep 30, 2023 pm 12:49 PM

3nm process, performance surpasses H100! Recently, foreign media DigiTimes broke the news that Nvidia is developing the next-generation GPU, the B100, code-named "Blackwell". It is said that as a product for artificial intelligence (AI) and high-performance computing (HPC) applications, the B100 will use TSMC's 3nm process process, as well as more complex multi-chip module (MCM) design, and will appear in the fourth quarter of 2024. For Nvidia, which monopolizes more than 80% of the artificial intelligence GPU market, it can use the B100 to strike while the iron is hot and further attack challengers such as AMD and Intel in this wave of AI deployment. According to NVIDIA estimates, by 2027, the output value of this field is expected to reach approximately

The most comprehensive review of multimodal large models is here! 7 Microsoft researchers cooperated vigorously, 5 major themes, 119 pages of document

Sep 25, 2023 pm 04:49 PM

The most comprehensive review of multimodal large models is here! 7 Microsoft researchers cooperated vigorously, 5 major themes, 119 pages of document

Sep 25, 2023 pm 04:49 PM

The most comprehensive review of multimodal large models is here! Written by 7 Chinese researchers at Microsoft, it has 119 pages. It starts from two types of multi-modal large model research directions that have been completed and are still at the forefront, and comprehensively summarizes five specific research topics: visual understanding and visual generation. The multi-modal large-model multi-modal agent supported by the unified visual model LLM focuses on a phenomenon: the multi-modal basic model has moved from specialized to universal. Ps. This is why the author directly drew an image of Doraemon at the beginning of the paper. Who should read this review (report)? In the original words of Microsoft: As long as you are interested in learning the basic knowledge and latest progress of multi-modal basic models, whether you are a professional researcher or a student, this content is very suitable for you to come together.

New research reveals the potential of quantum Monte Carlo to surpass neural networks in breaking through limitations, and a Nature sub-issue details the latest progress

Apr 24, 2023 pm 09:16 PM

New research reveals the potential of quantum Monte Carlo to surpass neural networks in breaking through limitations, and a Nature sub-issue details the latest progress

Apr 24, 2023 pm 09:16 PM

After four months, another collaborative work between ByteDance Research and Chen Ji's research group at the School of Physics at Peking University has been published in the top international journal Nature Communications: the paper "Towards the ground state of molecules via diffusion Monte Carlo neural networks" combines neural networks with diffusion Monte Carlo methods, greatly improving the application of neural network methods in quantum chemistry. The calculation accuracy, efficiency and system scale on related tasks have become the latest SOTA. Paper link: https://www.nature.com

VPR 2024 perfect score paper! Meta proposes EfficientSAM: quickly split everything!

Mar 02, 2024 am 10:10 AM

VPR 2024 perfect score paper! Meta proposes EfficientSAM: quickly split everything!

Mar 02, 2024 am 10:10 AM

This work of EfficientSAM was included in CVPR2024 with a perfect score of 5/5/5! The author shared the result on a social media, as shown in the picture below: The LeCun Turing Award winner also strongly recommended this work! In recent research, Meta researchers have proposed a new improved method, namely mask image pre-training (SAMI) using SAM. This method combines MAE pre-training technology and SAM models to achieve high-quality pre-trained ViT encoders. Through SAMI, researchers try to improve the performance and efficiency of the model and provide better solutions for vision tasks. The proposal of this method brings new ideas and opportunities to further explore and develop the fields of computer vision and deep learning. by combining different

I2V-Adapter from the SD community: no configuration required, plug and play, perfectly compatible with Tusheng video plug-in

Jan 15, 2024 pm 07:48 PM

I2V-Adapter from the SD community: no configuration required, plug and play, perfectly compatible with Tusheng video plug-in

Jan 15, 2024 pm 07:48 PM

The image-to-video generation (I2V) task is a challenge in the field of computer vision that aims to convert static images into dynamic videos. The difficulty of this task is to extract and generate dynamic information in the temporal dimension from a single image while maintaining the authenticity and visual coherence of the image content. Existing I2V methods often require complex model architectures and large amounts of training data to achieve this goal. Recently, a new research result "I2V-Adapter: AGeneralImage-to-VideoAdapter for VideoDiffusionModels" led by Kuaishou was released. This research introduces an innovative image-to-video conversion method and proposes a lightweight adapter module, i.e.

2022 Boltzmann Prize announced: Founder of Hopfield Network wins award

Aug 13, 2023 pm 08:49 PM

2022 Boltzmann Prize announced: Founder of Hopfield Network wins award

Aug 13, 2023 pm 08:49 PM

The two scientists who have won the 2022 Boltzmann Prize have been announced. This award was established by the IUPAP Committee on Statistical Physics (C3) to recognize researchers who have made outstanding achievements in the field of statistical physics. The winner must be a scientist who has not previously won a Boltzmann Prize or a Nobel Prize. This award began in 1975 and is awarded every three years in memory of Ludwig Boltzmann, the founder of statistical physics. Deepak Dharistheoriginalstatement. Reason for award: In recognition of Deepak Dharistheoriginalstatement's pioneering contributions to the field of statistical physics, including Exact solution of self-organized critical model, interface growth, disorder