Yann LeCun says giant models cannot achieve the goal of approaching human intelligence

"Language only carries a small part of all human knowledge; most human knowledge and all animal knowledge are non-linguistic; therefore, large language models cannot approach human-level intelligence." This is Turing Award winner Yann LeCun's latest thinking on the prospects of artificial intelligence.

Yesterday, his new article, co-authored with New York University postdoc Jacob Browning, was published in "NOEMA" and sparked widespread discussion.

In the article, the authors discuss today's popular large language models and argue that they have clear limits. Future work in AI may need to prioritize giving machines an understanding of other kinds of knowledge about the real world.

Let’s see what they say.

Some time ago, former Google AI ethics researcher Blake Lemoine claimed that the AI chatbot LaMDA is as conscious as a human, which caused an uproar in the field.

LaMDA is a large language model (LLM) designed to predict the next likely word for any given text. Since many conversations are predictable to some degree, these systems can infer how to keep a conversation going productively. LaMDA does this so well that Blake Lemoine began to wonder whether the AI has “consciousness.”

Researchers in the field have different views on this matter: some scoff at the idea of machines being conscious; some think that LaMDA may not be conscious but the next model might be. Others point out that it is not difficult for machines to fool humans.

The diversity of responses highlights a deeper problem: as LLMs become more common and powerful, it seems increasingly difficult to agree on how to view them. Over the years, these systems have beaten many "common sense" language reasoning benchmarks, yet they seem to show little of that common sense when put to the test, and they remain prone to nonsense and to illogical, dangerous suggestions. This raises a troubling question: how can these systems be so intelligent yet so limited?

In fact, the most fundamental problem is not artificial intelligence but the limited nature of language. Once we give up the assumption about the connection between consciousness and language, it becomes clear that these systems are destined to have only a superficial understanding of the world and will never come close to the "comprehensive thinking" of humans. In short, while these models are already some of the most impressive AI systems on the planet, they will never be as intelligent as we humans are.

For much of the 19th and 20th centuries, a dominant theme in philosophy and science was that knowledge is purely linguistic. On this view, understanding something requires only understanding the content of a sentence and relating that sentence to other sentences. By this logic, the ideal language would be a logical-mathematical one composed of arbitrary symbols connected by strict inference rules.

The philosopher Wittgenstein said: "The totality of true propositions is the whole of natural science." This position became established in the 20th century and later caused a lot of controversy.

Some highly educated intellectuals still hold the view that everything we can know can be contained in an encyclopedia, so simply reading the whole encyclopedia would give us a comprehensive understanding of everything. This view also inspired much of the early work on Symbolic AI, which made symbol processing the default paradigm. For these researchers, AI knowledge consists of large databases of true sentences connected to each other by hand-coded logic. The goal of the AI system is to output the right sentence at the right time, that is, to process symbols in an appropriate way.

This concept is the basis of the Turing test: if a machine "says" everything it is supposed to say, then it knows what it is talking about, because on this view knowing the correct sentences and when to use them is all there is to knowledge.

But this view has been severely criticized. The counterargument is that a machine's ability to talk about things does not mean that it understands what is being said. This is because language is only a highly specific and very limited representation of knowledge. All languages, whether programming languages, symbolic logic, or everyday spoken language, rely on a specific type of representational scheme, one that is good at expressing discrete objects and properties and the relationships between them at a very high level of abstraction.

All modes of representation involve compressing information about things, but they differ in what is kept and what is left out. The linguistic mode of representation may miss more concrete information, such as irregular shapes, the movement of objects, the workings of complex mechanisms, or the fine brushstrokes of a painting. Some non-linguistic representation schemes can express this information in an easy-to-understand way, including iconic knowledge and distributed knowledge.

The Limitations of Language

To understand the shortcomings of the linguistic mode of representation, we must first realize how little information language conveys on its own. Language is a very low-bandwidth method of transmitting information: isolated words or sentences convey little without context. Furthermore, the meaning of many sentences is highly ambiguous because of the large number of homonyms and pronouns. As researchers such as Chomsky have pointed out, language is not a clear and unambiguous communication tool.

But humans don't need a perfect communication tool, because we share a non-linguistic understanding. Our understanding of a sentence often depends on a deep understanding of the context in which it appears, which lets us infer what the linguistic expression means. We often talk directly about the matter at hand, such as a football match, or address someone in a particular social role, such as when ordering food from a waiter.

The same goes for reading passages of text, a task used to test AI's common sense and also a popular way to teach context-free reading comprehension skills to children. This approach focuses on using general reading comprehension strategies to understand text, but research shows that the amount of background knowledge a child has about the topic is actually a key factor in comprehension. Understanding a sentence or paragraph depends on a basic grasp of the subject matter.

"It is clear that these systems are mired in superficial understanding and will never come close to the full range of human thought."

The inherently contextual nature of words and sentences is at the core of how LLMs work. Neural networks typically represent knowledge as know-how, that is, the skilled ability to grasp patterns that are highly context-sensitive and to pick up regularities (concrete and abstract) that are needed to process inputs in sophisticated ways but that suit only limited tasks.

In an LLM, this means the system identifies patterns at multiple levels of existing text, seeing both how words are connected within a passage and how sentences fit together in the larger passages around them. As a result, a model's grasp of language is inevitably context-sensitive. Each word is understood not according to its dictionary meaning but according to its role in various sentences. Since many words, such as "carburetor," "menu," "tuning," or "electronics," are used almost exclusively in specific fields, even an isolated sentence containing one of these words predictably carries its context with it.
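The idea that a word is characterized by the sentences it appears in, rather than by a dictionary entry, can be illustrated with a very small sketch. The example below is not how an LLM represents words (real models learn dense vectors with neural networks); it simply counts neighboring words and compares the counts, and the corpus, function names, and window size are all assumptions made for illustration.

```python
import math
from collections import Counter, defaultdict

def context_vectors(sentences, window=2):
    """Describe each word by counts of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Hypothetical toy corpus: two "garage" sentences and two "restaurant" sentences.
sentences = [
    "the mechanic cleaned the carburetor",
    "the mechanic cleaned the engine",
    "the waiter brought the menu",
    "the waiter brought the bill",
]
vecs = context_vectors(sentences)

same_field = cosine(vecs["carburetor"], vecs["engine"])
other_field = cosine(vecs["carburetor"], vecs["menu"])
print(same_field > other_field)
```

In this toy corpus, "carburetor" ends up closer to "engine" than to "menu" purely because of the company each word keeps, which is the sense in which such a word carries its context with it.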

In short, LLMs are trained to pick up the background knowledge behind each sentence, looking at surrounding words and sentences to piece together what is going on. This lets them take an endless variety of sentences or phrases as input and come up with reasonable (although hardly flawless) ways to continue a conversation or fill in the rest of an article. A system trained on passages written by humans for everyday communication should acquire the general understanding needed to hold compelling conversations.

Shallow understanding

Some people are reluctant to use the word "understanding" in this context or to call LLMs "intelligent," though it is not clear that arguing over semantics convinces anyone. Critics accuse these systems of engaging in a form of imitation, and rightly so. This is because LLMs' understanding of language, while impressive, is superficial. This kind of superficial understanding feels familiar: classrooms are full of jargon-speaking students who have no idea what they are talking about and are in effect imitating their professors or the texts they read. It's just part of life. We are often unclear about how little we know, especially when it comes to knowledge gained from language.

LLMs have acquired this kind of superficial understanding of everything. Systems like GPT-3 are trained by masking out part of a sentence, or the next word in a paragraph, forcing the machine to guess the word most likely to fill the gap and then correcting its wrong guesses. The system eventually becomes adept at guessing the most likely words, making itself an effective predictive system.
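The guess-the-next-word objective described above can be sketched with a deliberately tiny stand-in. The example below is an assumption-laden toy, not the way GPT-3 is trained: it replaces the neural network and its error-driven weight updates with simple counts of which word follows which, and the corpus and function names are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Hypothetical training text; a real system would see vastly more data.
corpus = [
    "the waiter brought the menu",
    "the waiter brought the bill",
    "the waiter brought the menu again",
]
model = train_bigram(corpus)

print(predict_next(model, "waiter"))
print(predict_next(model, "carburetor"))
```

Even this crude predictor continues familiar phrases plausibly while having nothing to say about words it never saw, which hints at why prediction quality depends so heavily on the text the system was trained on.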

This does bring some real understanding: for any question or puzzle, there are usually only a few right answers but an infinite number of wrong ones. This forces the system to learn language-specific skills, such as interpreting jokes, solving word problems, or working out logic puzzles, in order to regularly predict the correct answers to these types of questions.

These skills and the related knowledge allow machines to explain how complex things work, simplify difficult concepts, rewrite and retell stories, and acquire many other language-related abilities. Contrary to what Symbolic AI posited, a vast database of sentences linked by logical rules, machines represent knowledge as contextual know-how used to come up with a reasonable next sentence given the previous line.

"Abandoning the idea that all knowledge is verbal makes us realize how much of our knowledge is non-verbal."

But the ability to explain a concept in language is different from the ability to actually use it. The system can explain how to perform long division without being able to do it, or explain what should not be said and then happily go on to say it. The contextual knowledge is embedded in one form, the ability to verbalize linguistic knowledge, but not in another, the practical skill of doing things such as being empathetic or handling difficult issues sensitively.

The latter kind of expertise is essential for language users, but that does not make it a linguistic skill; the linguistic component is not the main thing. This applies to many concepts, even those learned from lectures and books: while science classes do have a lecture component, students are graded primarily on their work in the lab. Especially outside the humanities, being able to talk about something is often not as useful or important as the basic skills needed to make things work.

Once we dig a little deeper, it’s easy to see how shallow these systems actually are: Their attention spans and memories are roughly equivalent to a paragraph. It’s easy to miss this if we’re having a conversation, as we tend to focus on the last one or two comments and grapple with the next reply.

But the skills needed for more complex conversations, such as active listening, recalling and revisiting earlier comments, and sticking to a topic to make a specific point while avoiding distractions, all require more attention and memory than these machines possess.

This further narrows the kind of understanding available to them: it's easy to trick them by changing the topic, switching languages, or behaving erratically every few minutes. Go back too far in the conversation and the system will start over from scratch, lump your new views in with old comments, switch chat languages with you, or believe anything you say. The understanding needed to develop a coherent worldview is far beyond the capabilities of these machines.

Beyond Language

Abandoning the idea that all knowledge is linguistic makes us realize that a considerable part of our knowledge is non-linguistic. While books contain a lot of information we can unpack and use, the same goes for many other objects: IKEA's instructions don't even bother to put captions next to the diagrams, AI researchers often look at the diagrams in a paper to grasp the network architecture before browsing the text, and travelers can follow the red or green lines on a map to get where they want to go.

This knowledge goes beyond simple icons, charts, and maps. Humans learn much directly from exploring the world, which shows us how objects and people can and cannot behave. The structure of artifacts and the human environment conveys a lot of information intuitively: doorknobs are at hand height, hammers have soft grips, and so on. Nonverbal mental simulation is common in animals and humans; it is useful for planning out scenarios and can be used to create or reverse-engineer artifacts.

Likewise, by imitating social customs and rituals, we can teach the next generation a variety of skills, from preparing food and medicine to calming down during stressful times. Much of our cultural knowledge is iconic, or in the form of precise movements passed down from skilled practitioners to apprentices. These subtle patterns of information are difficult to express and convey in words, but are still understandable to others. This is also the precise type of contextual information that neural networks are good at picking up and refining.

"A system trained solely on language will never come close to human intelligence, even if it is trained from now on until the heat death of the universe."

Language is important because it can convey large amounts of information in a small format, especially with the advent of printing and the Internet, which allows content to be reproduced and widely distributed. But compressing information with language doesn't come without a cost: decoding a dense passage requires a lot of effort. Humanities classes may require extensive outside reading, with much of class time spent reading difficult passages. Building a deep understanding is time-consuming and laborious, but informative.

This explains why a language-trained machine can know so much and yet understand nothing—it is accessing a small portion of human knowledge through a tiny bottleneck. But that small slice of human knowledge can be about anything, whether it's love or astrophysics. So it's a bit like a mirror: it gives the illusion of depth and can reflect almost anything, but it's only a centimeter thick. If we try to explore its depths, we'll hit a wall.

Do the right thing

This doesn't make these machines stupid, but it does show that there are inherent limits to how smart they can be. A system trained solely on language will never come close to human intelligence, even if it is trained from now until the heat death of the universe. This is simply the wrong way to build a knowledge system. But if we stay on the surface, machines certainly seem to be getting closer to humans. And in many cases, the surface is enough. Few of us actually apply the Turing test to other people, actively probing their depth of understanding and forcing them to do multi-digit multiplication problems. Most conversations are small talk.

However, we should not confuse the superficial understanding that LLMs possess with the deep understanding that humans gain by observing the wonders of the world, exploring it, practicing in it, and interacting with cultures and other people. Language may be a useful component in expanding our understanding of the world, but language does not exhaust intelligence, as the behavior of many species, such as corvids, octopuses, and primates, makes clear.

On the contrary, deep non-verbal understanding is a necessary condition for language to be meaningful. It is precisely because humans have a deep understanding of the world that we can quickly understand what others are saying. This broader, context-sensitive kind of learning and knowledge is the more fundamental and ancient kind, the one that underlies the emergence of sentience in embodied creatures and makes it possible to survive and thrive.

This is also the more important task that artificial intelligence researchers focus on when looking for common sense in AI. LLMs have no stable body or world to perceive, so their knowledge begins and ends with words, and their common sense is always superficial. The goal is to have AI systems focus on the world being talked about rather than on the words themselves, but LLMs don't grasp the difference. This deep understanding cannot be approximated through words alone; that is the wrong direction to take.

Our extensive experience with various large language models clearly shows how little can be obtained from language alone.

The above is the detailed content of Yann LeCun says giant models cannot achieve the goal of approaching human intelligence. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Bytedance Cutting launches SVIP super membership: 499 yuan for continuous annual subscription, providing a variety of AI functions

Jun 28, 2024 am 03:51 AM

Bytedance Cutting launches SVIP super membership: 499 yuan for continuous annual subscription, providing a variety of AI functions

Jun 28, 2024 am 03:51 AM

This site reported on June 27 that Jianying is a video editing software developed by FaceMeng Technology, a subsidiary of ByteDance. It relies on the Douyin platform and basically produces short video content for users of the platform. It is compatible with iOS, Android, and Windows. , MacOS and other operating systems. Jianying officially announced the upgrade of its membership system and launched a new SVIP, which includes a variety of AI black technologies, such as intelligent translation, intelligent highlighting, intelligent packaging, digital human synthesis, etc. In terms of price, the monthly fee for clipping SVIP is 79 yuan, the annual fee is 599 yuan (note on this site: equivalent to 49.9 yuan per month), the continuous monthly subscription is 59 yuan per month, and the continuous annual subscription is 499 yuan per year (equivalent to 41.6 yuan per month) . In addition, the cut official also stated that in order to improve the user experience, those who have subscribed to the original VIP

Context-augmented AI coding assistant using Rag and Sem-Rag

Jun 10, 2024 am 11:08 AM

Context-augmented AI coding assistant using Rag and Sem-Rag

Jun 10, 2024 am 11:08 AM

Improve developer productivity, efficiency, and accuracy by incorporating retrieval-enhanced generation and semantic memory into AI coding assistants. Translated from EnhancingAICodingAssistantswithContextUsingRAGandSEM-RAG, author JanakiramMSV. While basic AI programming assistants are naturally helpful, they often fail to provide the most relevant and correct code suggestions because they rely on a general understanding of the software language and the most common patterns of writing software. The code generated by these coding assistants is suitable for solving the problems they are responsible for solving, but often does not conform to the coding standards, conventions and styles of the individual teams. This often results in suggestions that need to be modified or refined in order for the code to be accepted into the application

Can fine-tuning really allow LLM to learn new things: introducing new knowledge may make the model produce more hallucinations

Jun 11, 2024 pm 03:57 PM

Can fine-tuning really allow LLM to learn new things: introducing new knowledge may make the model produce more hallucinations

Jun 11, 2024 pm 03:57 PM

Large Language Models (LLMs) are trained on huge text databases, where they acquire large amounts of real-world knowledge. This knowledge is embedded into their parameters and can then be used when needed. The knowledge of these models is "reified" at the end of training. At the end of pre-training, the model actually stops learning. Align or fine-tune the model to learn how to leverage this knowledge and respond more naturally to user questions. But sometimes model knowledge is not enough, and although the model can access external content through RAG, it is considered beneficial to adapt the model to new domains through fine-tuning. This fine-tuning is performed using input from human annotators or other LLM creations, where the model encounters additional real-world knowledge and integrates it

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

Editor |ScienceAI Question Answering (QA) data set plays a vital role in promoting natural language processing (NLP) research. High-quality QA data sets can not only be used to fine-tune models, but also effectively evaluate the capabilities of large language models (LLM), especially the ability to understand and reason about scientific knowledge. Although there are currently many scientific QA data sets covering medicine, chemistry, biology and other fields, these data sets still have some shortcomings. First, the data form is relatively simple, most of which are multiple-choice questions. They are easy to evaluate, but limit the model's answer selection range and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A

No OpenAI data required, join the list of large code models! UIUC releases StarCoder-15B-Instruct

Jun 13, 2024 pm 01:59 PM

No OpenAI data required, join the list of large code models! UIUC releases StarCoder-15B-Instruct

Jun 13, 2024 pm 01:59 PM

At the forefront of software technology, UIUC Zhang Lingming's group, together with researchers from the BigCode organization, recently announced the StarCoder2-15B-Instruct large code model. This innovative achievement achieved a significant breakthrough in code generation tasks, successfully surpassing CodeLlama-70B-Instruct and reaching the top of the code generation performance list. The unique feature of StarCoder2-15B-Instruct is its pure self-alignment strategy. The entire training process is open, transparent, and completely autonomous and controllable. The model generates thousands of instructions via StarCoder2-15B in response to fine-tuning the StarCoder-15B base model without relying on expensive manual annotation.

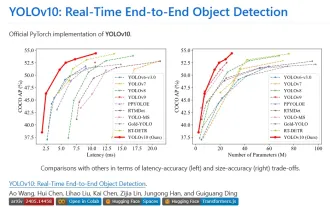

Yolov10: Detailed explanation, deployment and application all in one place!

Jun 07, 2024 pm 12:05 PM

Yolov10: Detailed explanation, deployment and application all in one place!

Jun 07, 2024 pm 12:05 PM

1. Introduction Over the past few years, YOLOs have become the dominant paradigm in the field of real-time object detection due to its effective balance between computational cost and detection performance. Researchers have explored YOLO's architectural design, optimization goals, data expansion strategies, etc., and have made significant progress. At the same time, relying on non-maximum suppression (NMS) for post-processing hinders end-to-end deployment of YOLO and adversely affects inference latency. In YOLOs, the design of various components lacks comprehensive and thorough inspection, resulting in significant computational redundancy and limiting the capabilities of the model. It offers suboptimal efficiency, and relatively large potential for performance improvement. In this work, the goal is to further improve the performance efficiency boundary of YOLO from both post-processing and model architecture. to this end

SOTA performance, Xiamen multi-modal protein-ligand affinity prediction AI method, combines molecular surface information for the first time

Jul 17, 2024 pm 06:37 PM

SOTA performance, Xiamen multi-modal protein-ligand affinity prediction AI method, combines molecular surface information for the first time

Jul 17, 2024 pm 06:37 PM

Editor | KX In the field of drug research and development, accurately and effectively predicting the binding affinity of proteins and ligands is crucial for drug screening and optimization. However, current studies do not take into account the important role of molecular surface information in protein-ligand interactions. Based on this, researchers from Xiamen University proposed a novel multi-modal feature extraction (MFE) framework, which for the first time combines information on protein surface, 3D structure and sequence, and uses a cross-attention mechanism to compare different modalities. feature alignment. Experimental results demonstrate that this method achieves state-of-the-art performance in predicting protein-ligand binding affinities. Furthermore, ablation studies demonstrate the effectiveness and necessity of protein surface information and multimodal feature alignment within this framework. Related research begins with "S

SK Hynix will display new AI-related products on August 6: 12-layer HBM3E, 321-high NAND, etc.

Aug 01, 2024 pm 09:40 PM

SK Hynix will display new AI-related products on August 6: 12-layer HBM3E, 321-high NAND, etc.

Aug 01, 2024 pm 09:40 PM

According to news from this site on August 1, SK Hynix released a blog post today (August 1), announcing that it will attend the Global Semiconductor Memory Summit FMS2024 to be held in Santa Clara, California, USA from August 6 to 8, showcasing many new technologies. generation product. Introduction to the Future Memory and Storage Summit (FutureMemoryandStorage), formerly the Flash Memory Summit (FlashMemorySummit) mainly for NAND suppliers, in the context of increasing attention to artificial intelligence technology, this year was renamed the Future Memory and Storage Summit (FutureMemoryandStorage) to invite DRAM and storage vendors and many more players. New product SK hynix launched last year