Byte AI Lab's core technology won the Habitat Challenge 2022 Active Navigation Championship, which combines traditional methods with imitation learning.

Object navigation is one of the basic tasks for intelligent robots: the robot actively explores an unknown, previously unseen environment to find an object of a category specified by a human. The task targets the needs of future home service robots. For example, when a person asks the robot to fetch a glass of water, the robot must first find the water cup and move to it before it can bring the cup to the person.

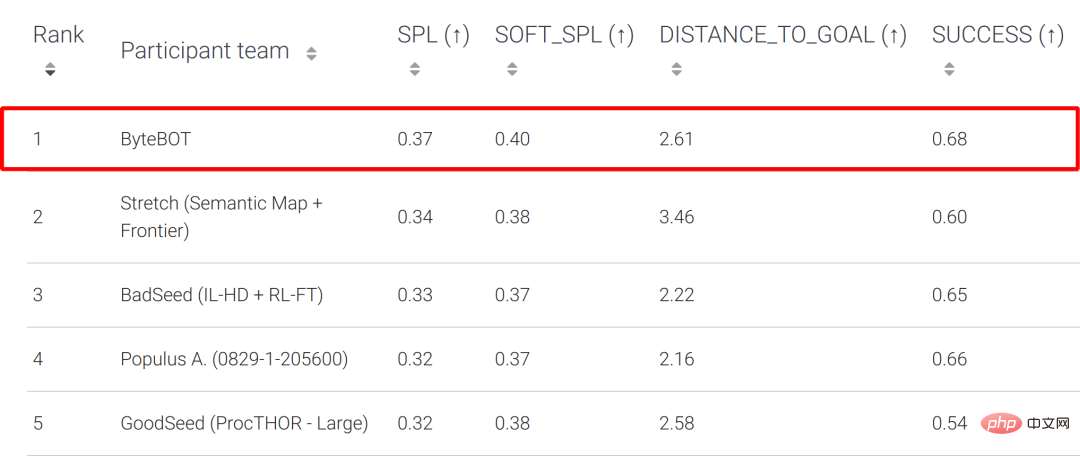

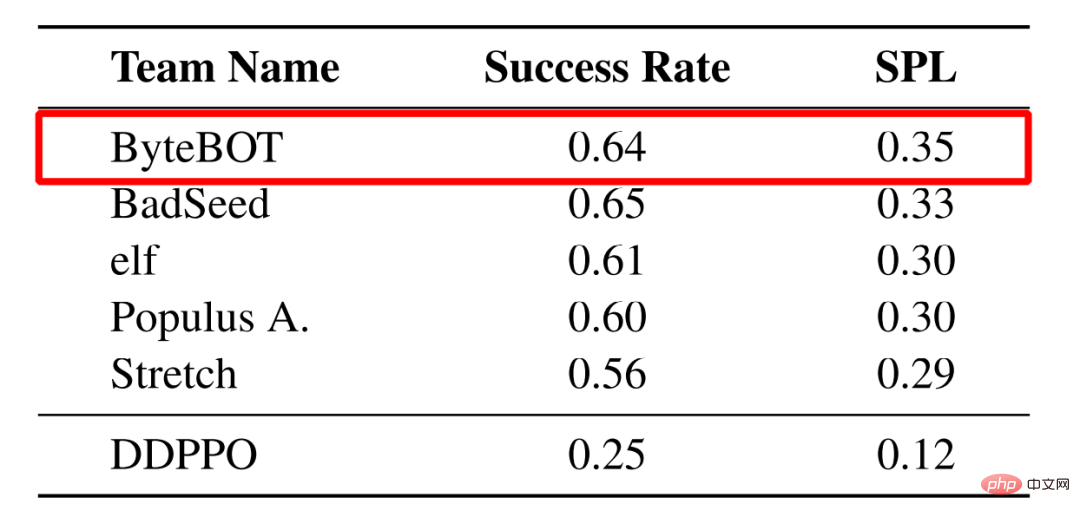

The Habitat Challenge, jointly organized by Meta AI and other institutions, is one of the best-known competitions in object navigation; as of 2022 it had been held for four consecutive years. A total of 54 teams took part in this year's competition. Researchers from the ByteDance AI Lab-Research team proposed a new object navigation framework that addresses the shortcomings of existing methods by combining imitation learning with traditional methods. The framework won the championship, and its result on the key metric, SPL, was significantly higher than those of the second-place team and the other participants. Previous champions of this event have generally been well-known research institutions such as CMU, UC Berkeley, and Facebook.
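SPL (Success weighted by Path Length) is the standard embodied-navigation metric defined by Anderson et al. (2018): SPL = (1/N) · Σᵢ Sᵢ · ℓᵢ / max(pᵢ, ℓᵢ), where Sᵢ is 1 if episode i succeeds, ℓᵢ is the shortest-path distance to the goal (for object navigation, to the nearest instance of the target category), and pᵢ is the length of the path the agent actually took. The minimal sketch below computes it; the episode numbers are made up for illustration and are not competition data.

```python
from typing import Sequence


def spl(successes: Sequence[bool],
        shortest_lengths: Sequence[float],
        path_lengths: Sequence[float]) -> float:
    """Success weighted by Path Length: (1/N) * sum_i S_i * l_i / max(p_i, l_i).

    successes:        S_i, whether episode i ended successfully
    shortest_lengths: l_i, shortest-path (geodesic) distance from start to goal, > 0
    path_lengths:     p_i, length of the path the agent actually travelled
    """
    n = len(successes)
    if n == 0 or not (n == len(shortest_lengths) == len(path_lengths)):
        raise ValueError("need equally sized, non-empty inputs")
    total = 0.0
    for s, l, p in zip(successes, shortest_lengths, path_lengths):
        if l <= 0:
            raise ValueError("shortest path length must be positive")
        if s:
            total += l / max(p, l)  # 1.0 for an optimal path, smaller for detours
    return total / n


# Three made-up episodes: optimal success, success with a 2x detour, failure.
print(spl([True, True, False], [5.0, 4.0, 6.0], [5.0, 8.0, 3.0]))  # 0.5
```

Because failed episodes contribute zero and detours are penalized proportionally, SPL rewards agents that both find the object and take efficient routes, which is why a navigator that wanders before stopping scores lower even with the same success rate.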

Test-Standard leaderboard

Test-Challenge leaderboard

Habitat Challenge official website: https://aihabitat.org/challenge/2022/

Habitat Challenge leaderboard: https://eval.ai/web/challenges/challenge-page/1615/leaderboard

1. Research motivation

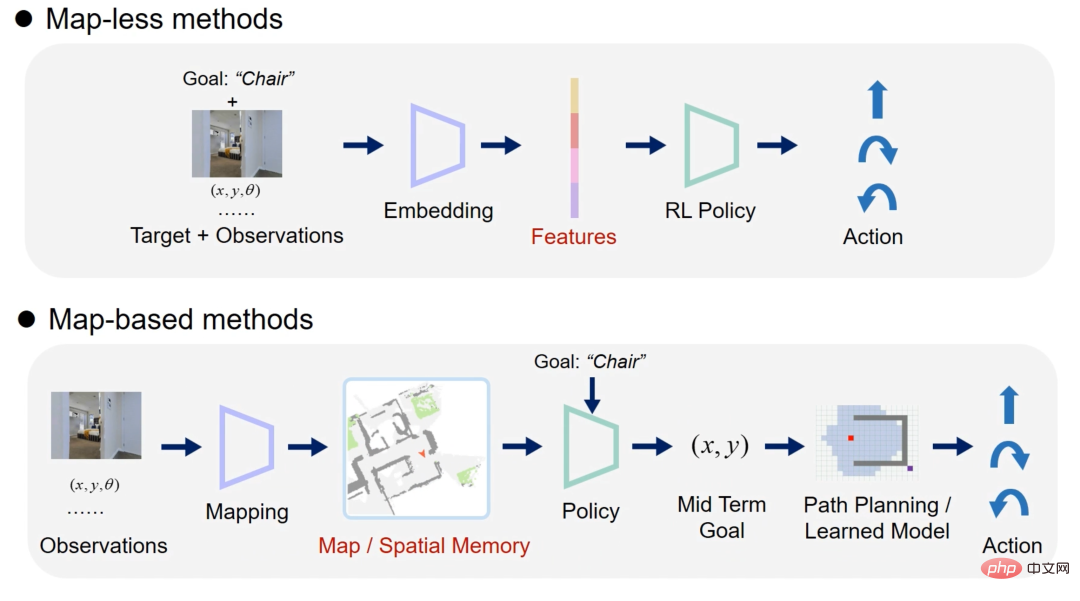

Current object navigation methods fall roughly into two categories: end-to-end methods and map-based methods. End-to-end methods extract features from the input sensor data and feed them into a deep learning model that outputs the action directly; such methods are generally trained with reinforcement learning or imitation learning (Figure 1, map-less methods). Map-based methods generally build an explicit or implicit map, select a goal point on the map using reinforcement learning or other methods, and finally plan a path to that point to obtain the action (Figure 1, map-based methods).

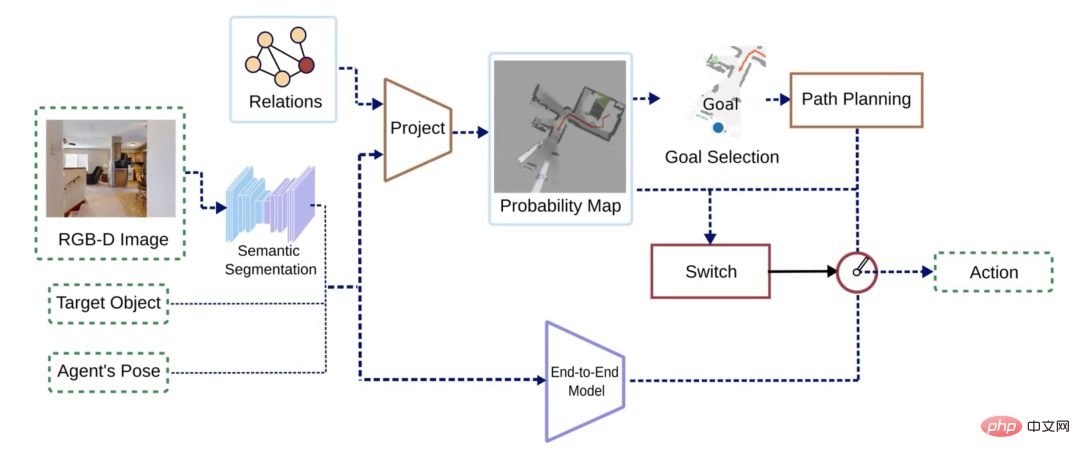

Figure 1 Flow chart of end-to-end method (top) and map-based method (bottom)

After comparing the two types of methods in extensive experiments, the researchers found that each has its own strengths and weaknesses. End-to-end methods do not need to build a map of the environment, so they are simpler and generalize better across scenes. However, because the network has to learn to encode the spatial layout of the environment itself, these methods need a large amount of training data, and some simple behaviors, such as stopping near the target object, are hard to learn. Map-based methods store features or semantics in a grid (raster) map and therefore have explicit spatial information, which makes such behaviors much easier to learn. However, they depend heavily on accurate localization, and in some environments, such as stairs, they require hand-designed perception and path-planning strategies.

Based on these findings, the ByteDance AI Lab-Research team set out to combine the strengths of both approaches. The two approaches have very different algorithmic pipelines, so they are hard to merge directly, and it is also hard to design a strategy that fuses their outputs directly. The researchers therefore designed a simple but effective strategy in which the two methods take turns at active exploration and object search depending on the robot's state, so that each is used where it is strongest.

2. Competition method

The algorithm consists of two branches: a probability-map-based branch and an end-to-end branch. Its inputs are the first-person (egocentric) RGB-D image, the robot pose, and the category of the target object; its output is the next action. The RGB image is first passed through instance segmentation, and the segmentation result is fed to both branches together with the other raw inputs. Each branch outputs its own action, and a switching strategy decides which one becomes the final output.
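The data flow of one decision step can be sketched as follows. This is a minimal illustration, not the team's code: the `Observation` type, the `segment`, `map_branch`, and `e2e_branch` callables, and the convention that the map branch returns `None` when it should not act (a simplification of the rule in the Switching strategy subsection) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional, Tuple

# Assumed discrete action set, e.g. "move_forward", "turn_left", "turn_right", "stop".
Action = str


@dataclass
class Observation:
    rgb: Any                          # first-person RGB image (placeholder type)
    depth: Any                        # aligned depth image
    pose: Tuple[float, float, float]  # assumed 2D pose (x, y, yaw)
    goal_category: str                # target object category, e.g. "chair"


def step(obs: Observation,
         segment: Callable[[Any], Any],
         map_branch: Callable[[Observation, Any], Optional[Action]],
         e2e_branch: Callable[[Observation, Any], Action]) -> Tuple[Action, str]:
    """One decision step of a two-branch navigator (all interfaces hypothetical)."""
    masks = segment(obs.rgb)             # instance segmentation, shared by both branches
    map_action = map_branch(obs, masks)  # None = map branch declines to act (assumed convention)
    e2e_action = e2e_branch(obs, masks)  # the imitation-learned policy always proposes an action
    if map_action is None:
        return e2e_action, "end_to_end"
    return map_action, "probability_map"


# Usage with stub components:
obs = Observation(rgb=None, depth=None, pose=(0.0, 0.0, 0.0), goal_category="chair")
print(step(obs, segment=lambda rgb: [],
           map_branch=lambda o, m: None,
           e2e_branch=lambda o, m: "move_forward"))  # ('move_forward', 'end_to_end')
```

Running segmentation once and sharing the result keeps the two branches consistent about what the robot currently sees, which matters because the switch is triggered by what the map branch has detected.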

Figure 2 Schematic diagram of algorithm flow

Probability map-based branch

The probability-map-based branch draws on the idea of Semantic Linking Maps [2] and is a simplified version of the method in the authors' earlier paper published at IROS [3]. On the one hand, this branch builds a 2D semantic map from the instance segmentation results, the depth map, and the robot pose; on the other hand, it updates a probability map using pre-learned association (co-occurrence) probabilities between objects.
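Building the 2D semantic map amounts to back-projecting each labeled depth pixel into the world and marking the grid cell it falls in. The sketch below assumes a pinhole camera (hypothetical intrinsics `fx`, `cx`), depth measured along the optical axis, and a planar pose `(x, y, yaw)`; a real mapper would also use the vertical intrinsics and camera height to discard floor and ceiling points, which is omitted here for brevity.

```python
import numpy as np


def update_semantic_map(sem_map, depth, labels, pose, fx, cx, resolution):
    """Project labeled depth pixels onto a top-down 2D semantic grid (hypothetical helper).

    sem_map:    (rows, cols) int array, -1 = no object; updated in place and returned.
    depth:      (H, W) depth along the camera's optical axis in metres, 0 = invalid.
    labels:     (H, W) int class id per pixel from instance segmentation, -1 = background.
    pose:       (x, y, yaw) of the camera in map coordinates (metres, radians).
    fx, cx:     assumed pinhole intrinsics (focal length and principal point, in pixels).
    resolution: metres per grid cell; cell (row, col) covers y in [row*res, (row+1)*res).
    """
    h, w = depth.shape
    _, u = np.mgrid[0:h, 0:w]                       # u = pixel column index
    valid = (depth > 0) & (labels >= 0)
    forward = depth[valid]                          # distance ahead of the camera
    left = -(u[valid] - cx) * forward / fx          # lateral offset, positive to the left
    x0, y0, yaw = pose
    # Rotate (forward, left) by the robot's yaw and translate by its position.
    wx = x0 + forward * np.cos(yaw) - left * np.sin(yaw)
    wy = y0 + forward * np.sin(yaw) + left * np.cos(yaw)
    col = np.floor(wx / resolution).astype(int)
    row = np.floor(wy / resolution).astype(int)
    inside = (row >= 0) & (row < sem_map.shape[0]) & (col >= 0) & (col < sem_map.shape[1])
    sem_map[row[inside], col[inside]] = labels[valid][inside]
    return sem_map


# Usage: one pixel labeled as class 5 at the image centre, 2 m ahead of the robot.
depth = np.full((3, 3), 2.0)
labels = np.full((3, 3), -1)
labels[1, 1] = 5
grid = update_semantic_map(np.full((10, 10), -1), depth, labels, (0.0, 0.0, 0.0),
                           fx=1.0, cx=1.0, resolution=0.5)
print(np.argwhere(grid == 5))  # [[0 4]]
```

Because the projection depends directly on the pose, any localization error shifts objects to the wrong cells, which is the sensitivity to positioning accuracy that the article attributes to map-based methods.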

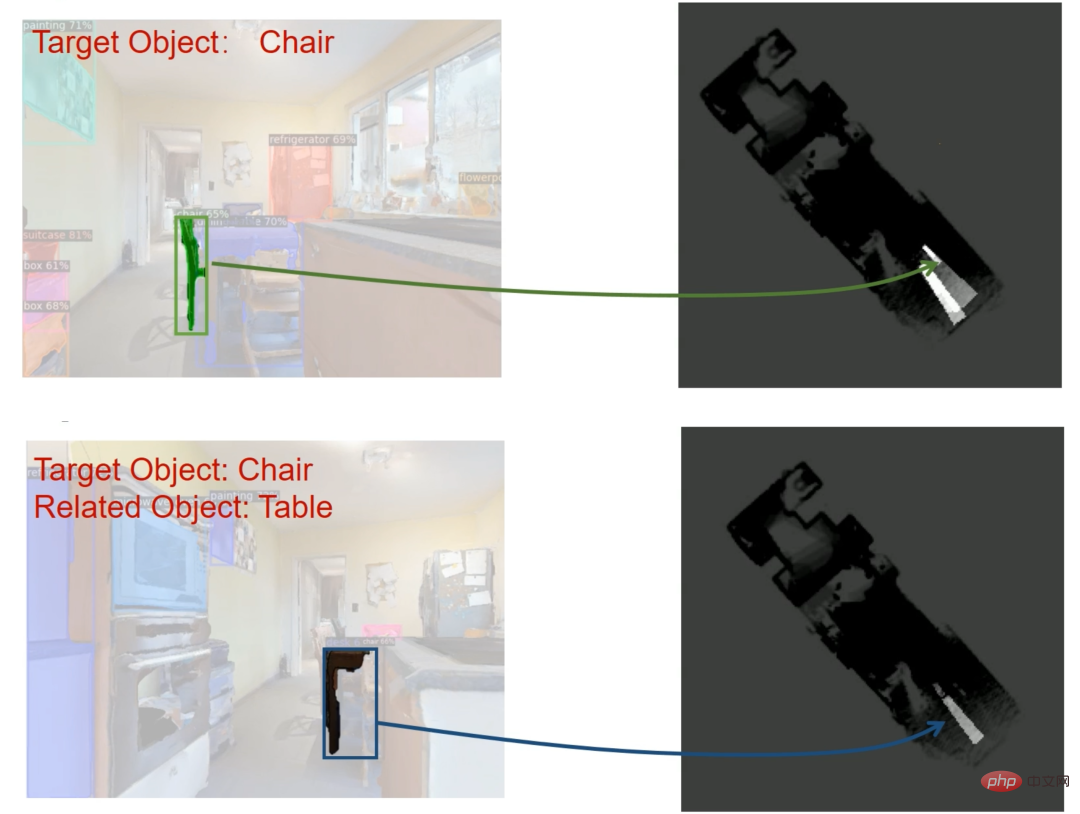

The probability map is updated in the following ways. When the target object is detected but the detection is not confident enough (its confidence score is below a threshold), the robot should move closer and look again, so the probability of the corresponding area on the probability map is increased (Figure 3, top). Similarly, when an object related to the target is detected (for example, tables and chairs are very likely to be placed together), the probability of the corresponding area is also increased (Figure 3, bottom). By choosing the area with the highest probability as the goal point, the algorithm encourages the robot to approach potential target objects and related objects for a closer look, until it finds a target object whose confidence is above the threshold.
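The update and goal-selection steps can be sketched with NumPy as below. The concrete rule is an assumption for illustration, not the team's formula: a low-confidence target detection raises nearby cells to its detector score, a related-object detection raises them to `score × P(target near object)` taken from a hypothetical pre-learned co-occurrence table, and the goal is the highest-probability cell if it exceeds a threshold.

```python
import numpy as np


def update_probability_map(prob_map, detections, target, cooccurrence,
                           conf_threshold=0.8, radius=2):
    """Raise probabilities around promising detections (assumed update rule).

    detections:   list of (category, score, row, col), positions in map cells.
    cooccurrence: dict (target, other) -> assumed pre-learned P(target near other).
    Returns True if the target was detected with score >= conf_threshold.
    """
    found = False
    for category, score, r, c in detections:
        if category == target:
            if score >= conf_threshold:
                found = True          # confident detection: no need to boost, go and stop
                continue
            boost = score             # unsure detection: worth a closer look
        else:
            boost = score * cooccurrence.get((target, category), 0.0)
        if boost <= 0:
            continue
        r0, r1 = max(r - radius, 0), min(r + radius + 1, prob_map.shape[0])
        c0, c1 = max(c - radius, 0), min(c + radius + 1, prob_map.shape[1])
        region = prob_map[r0:r1, c0:c1]          # a view into prob_map
        np.maximum(region, boost, out=region)    # increase, never decrease, in place
    return found


def select_goal(prob_map, threshold=0.5):
    """Most promising cell as (row, col), or None if no cell exceeds threshold."""
    idx = np.unravel_index(np.argmax(prob_map), prob_map.shape)
    if prob_map[idx] <= threshold:
        return None
    return int(idx[0]), int(idx[1])


# Usage: a confidently detected table makes nearby cells promising for "chair".
pm = np.zeros((10, 10))
cooc = {("chair", "table"): 0.6}   # hypothetical co-occurrence probability
found = update_probability_map(pm, [("table", 0.9, 5, 5)], "chair", cooc)
print(found, select_goal(pm))  # False (3, 3)
```

Taking the element-wise maximum keeps every value in [0, 1], so a single threshold can later decide whether anything on the map is still worth visiting.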

Figure 3 Schematic diagram of probability map update method

End-to-end branch

The end-to-end branch takes RGB-D images, instance segmentation results, the robot pose, and the target object category as input and directly outputs actions. Its main role is to make the robot search for objects the way a human would, so it adopts the model and training procedure of Habitat-Web [4]. Habitat-Web is based on imitation learning: the network is trained on demonstrations of humans searching for objects in the training scenes.
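Imitation learning from demonstrations is commonly done by behavior cloning: the policy is trained to maximize the likelihood of the human's action given the observation. The sketch below shows only that core idea, with a linear softmax policy and synthetic 2D "demonstration" features standing in for Habitat-Web's actual network and human data; the cluster centres, learning rate, and epoch count are arbitrary assumptions.

```python
import numpy as np


def train_bc_policy(features, actions, num_actions, lr=0.5, epochs=200):
    """Behavior cloning: fit a linear softmax policy to demonstrated actions.

    features: (N, D) observation features; actions: (N,) int demonstrated actions.
    Minimizes the mean cross-entropy -log pi(a_demo | obs) by gradient descent.
    """
    n, d = features.shape
    weights = np.zeros((d, num_actions))
    onehot = np.eye(num_actions)[actions]
    for _ in range(epochs):
        logits = features @ weights
        logits -= logits.max(axis=1, keepdims=True)   # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = features.T @ (probs - onehot) / n      # gradient of mean cross-entropy
        weights -= lr * grad
    return weights


def act(weights, feature):
    """Greedy action from the cloned policy."""
    return int(np.argmax(feature @ weights))


# Synthetic "demonstrations": each of 3 actions is taken around its own feature cluster.
rng = np.random.default_rng(0)
centers = np.array([[2.0, 0.0], [-1.0, 1.7], [-1.0, -1.7]])
demo_actions = rng.integers(0, 3, size=300)
demo_features = centers[demo_actions] + 0.3 * rng.standard_normal((300, 2))
W = train_bc_policy(demo_features, demo_actions, num_actions=3)
accuracy = np.mean([act(W, f) == a for f, a in zip(demo_features, demo_actions)])
print(f"agreement with demonstrations: {accuracy:.2f}")
```

Behavior cloning only imitates what the demonstrations cover, which is consistent with the article's point that end-to-end policies need large amounts of training data to learn spatial behaviors on their own.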

Switching strategy

The switching strategy selects one of the two actions, from the probability map branch or from the end-to-end branch, as the final output, based mainly on the probability map and the path-planning result. If no grid cell in the probability map has a probability above the threshold, the robot needs to explore the environment; if no feasible path can be planned on the map, the robot may be in a special environment (such as stairs). In both cases the end-to-end branch is used, which gives the robot sufficient adaptability to the environment. In all other cases the probability map branch is selected, to exploit its strength in locating target objects.
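The switching rule as described can be written as a small function. The threshold value and the argument names are assumptions for illustration; the two conditions that hand control to the end-to-end branch follow the paragraph above.

```python
from typing import Optional, Tuple


def choose_action(max_prob: float, path_found: bool,
                  map_action: Optional[str], e2e_action: str,
                  prob_threshold: float = 0.5) -> Tuple[str, str]:
    """Pick the final action and report which branch produced it.

    Falls back to the end-to-end branch when
      (a) no probability-map cell exceeds prob_threshold (nothing promising: explore), or
      (b) the planner found no feasible path (e.g. the robot is on stairs).
    Otherwise the probability map branch's action is used.
    """
    if max_prob <= prob_threshold or not path_found or map_action is None:
        return e2e_action, "end_to_end"
    return map_action, "probability_map"


print(choose_action(0.3, True, "turn_left", "move_forward"))  # ('move_forward', 'end_to_end')
print(choose_action(0.8, True, "turn_left", "move_forward"))  # ('turn_left', 'probability_map')
```

Keeping the rule this simple avoids having to fuse the two branches' outputs, which the article notes is hard because the pipelines differ so much.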

The effect of this switching strategy is shown in the video. The robot generally uses the end-to-end branch to explore the environment efficiently. Once a possible target object or a related object is found, it switches to the probability map branch to take a closer look. If the target object's confidence exceeds the threshold, the robot stops at the target object; otherwise the probability of that area keeps decreasing until no grid cell has a probability above the threshold, at which point the robot switches back to the end-to-end branch and continues exploring.
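The close-inspection loop can be traced with a small simulation. The multiplicative `decay` factor and both thresholds are assumptions, since the article only says the probability "keeps decreasing"; the sketch shows how repeated unconfirmed observations eventually hand control back to exploration.

```python
from typing import Sequence, Tuple


def inspect_candidate(prob: float, confidences: Sequence[float],
                      conf_threshold: float = 0.8, prob_threshold: float = 0.5,
                      decay: float = 0.7) -> Tuple[str, int]:
    """Trace the map branch's close inspection of one candidate region.

    Each new observation either confirms the target (confidence above
    conf_threshold -> stop) or lowers the region's probability by an assumed
    multiplicative decay. Once the probability drops to prob_threshold or
    below, control returns to the end-to-end branch.
    Returns (outcome, number_of_observations_used).
    """
    for step, conf in enumerate(confidences, start=1):
        if conf > conf_threshold:
            return "stop", step
        prob *= decay
        if prob <= prob_threshold:
            return "switch_to_end_to_end", step
    return "keep_inspecting", len(confidences)


print(inspect_candidate(0.9, [0.4, 0.5, 0.3]))  # ('switch_to_end_to_end', 2)
print(inspect_candidate(0.9, [0.4, 0.9]))       # ('stop', 2)
```

With these assumed numbers, 0.9 decays to 0.63 and then 0.441, so the second unconfirmed look is enough to abandon the region; a smaller decay factor would make the robot give up on false positives faster.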

As the video shows, the method combines the strengths of the end-to-end and map-based approaches, with each branch playing its own role: the end-to-end branch is mainly responsible for exploring the environment, and the probability map branch for approaching and observing areas of interest. As a result, the method can explore complex scenes (such as stairs) while also reducing the training requirements of the end-to-end branch.

3. Summary

For the active object navigation task, the ByteDance AI Lab-Research team proposed a framework that combines a classic probability map with modern imitation learning, a successful attempt at combining traditional methods with the end-to-end approach. In the Habitat Challenge, the team's method significantly outperformed the second-place team and the other participants, demonstrating the strength of the algorithm. Introducing traditional methods into today's mainstream end-to-end approach to embodied AI can help make up for some of its shortcomings, taking intelligent robots further toward helping and serving people.

Recently, the ByteDance AI Lab-Research team's robotics research has also been accepted at top robotics conferences such as CoRL, IROS, and ICRA, covering core robotics tasks including object pose estimation, object grasping, object navigation, automatic assembly, and human-robot interaction.

[CoRL 2022] Generative Category-Level Shape and Pose Estimation with Semantic Primitives

- Paper address: https://arxiv.org/abs/2210.01112

[IROS 2022] 3D Part Assembly Generation with Instance Encoded Transformer

- Paper address: https://arxiv.org/abs/2207.01779

[IROS 2022] Navigating to Objects in Unseen Environments by Distance Prediction

- Paper address: https://arxiv.org/abs/2202.03735

[EMNLP 2022] Towards Unifying Reference Expression Generation and Comprehension

- Paper address: https://arxiv.org/pdf/2210.13076

[ICRA 2022] Learning Design and Construction with Varying-Sized Materials via Prioritized Memory Resets

- Paper address: https://arxiv.org/abs/2204.05509

[IROS 2021] Simultaneous Semantic and Collision Learning for 6-DoF Grasp Pose Estimation

- Paper address: https://arxiv.org/abs/2108.02425

[IROS 2021] Learning to Design and Construct Bridge without Blueprint

- Paper address: https://arxiv.org/abs/2108.02439

4. References

[1] Yadav, Karmesh, et al. "Habitat-Matterport 3D Semantics Dataset." arXiv preprint arXiv:2210.05633 (2022).

[2] Zeng, Zhen, Adrian Röfer, and Odest Chadwicke Jenkins. "Semantic linking maps for active visual object search." 2020 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2020.

[3] Minzhao Zhu, Binglei Zhao, and Tao Kong. "Navigating to Objects in Unseen Environments by Distance Prediction." arXiv preprint arXiv:2202.03735 (2022).

[4] Ramrakhya, Ram, et al. "Habitat-Web: Learning Embodied Object-Search Strategies from Human Demonstrations at Scale." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022.

5. About us

ByteDance AI Lab NLP&Research focuses on cutting-edge research in artificial intelligence, covering multiple technical fields such as natural language processing and robotics. The team is also committed to putting its research results into practice, providing core technical support and services for the company's existing products and businesses. The team's technical capabilities are being made available externally through Volcano Engine, empowering AI innovation.

ByteDance AI Lab NLP&Research contact information

- Recruitment consultation: fankaijing@bytedance.com

- Academic cooperation: luomanping@bytedance.com
