CMU Zhu Junyan's team developed an automatic matching ranking system to evaluate the pros and cons of various AI generation models.


This article is reproduced from Lei Feng.com. If you need to reprint, please go to the official website of Lei Feng.com to apply for authorization.

Generative AI has surged in popularity recently, and the number of new pre-trained image generation models is dizzying. Portraits, landscapes, cartoons, the style of a specific artist, and more: each model has something it is good at generating.

With so many models, how do you quickly find the best model that can satisfy your creative desires?

Recently, Zhu Junyan, an assistant professor at Carnegie Mellon University, and colleagues proposed the first content-based model search algorithm, which lets you find the best-matching deep image generative model with one click.

Paper address: https://arxiv.org/pdf/2210.03116.pdf

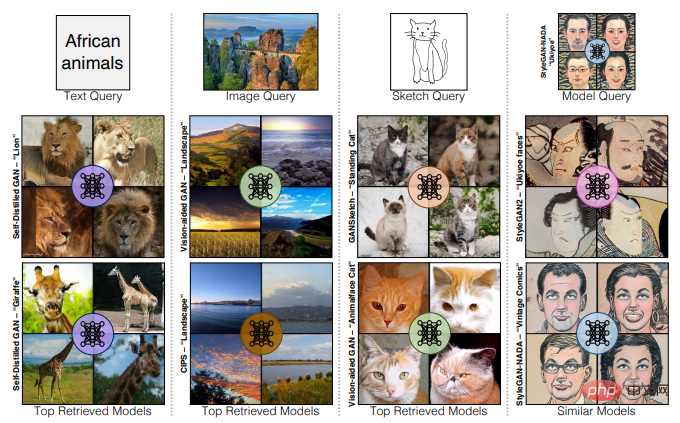

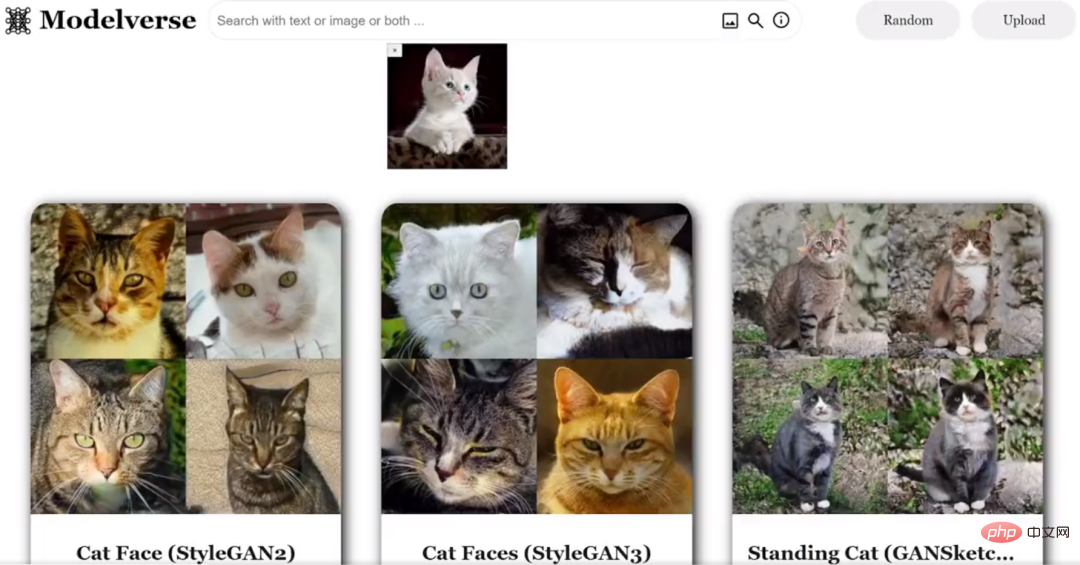

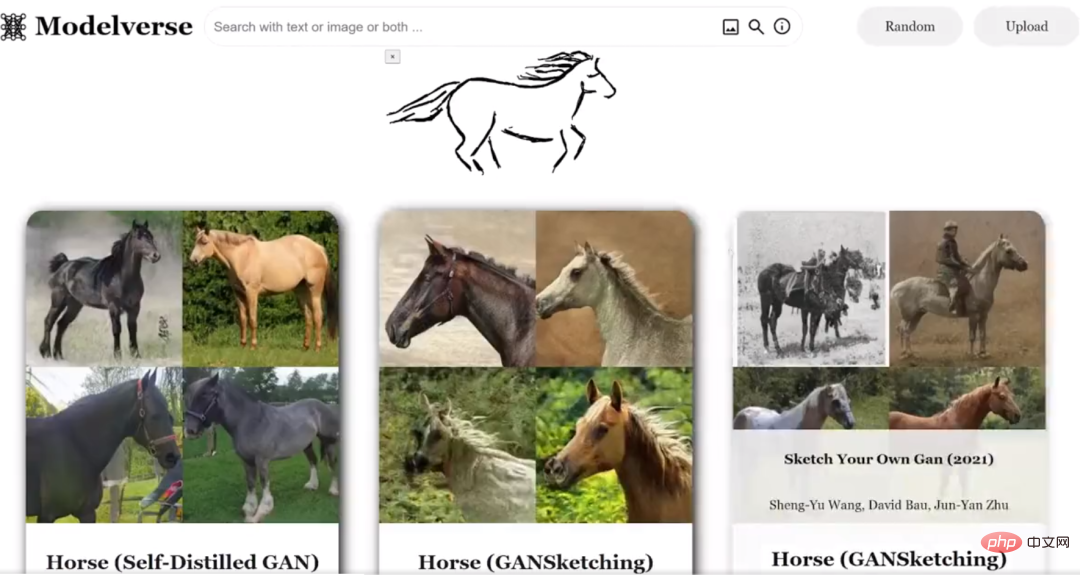

On Modelverse, an online model sharing and search platform that the team built on this search algorithm, you can enter text, an image, a sketch, or a given model to find the best-matching or most similar models.

Modelverse platform address: https://modelverse.cs.cmu.edu/

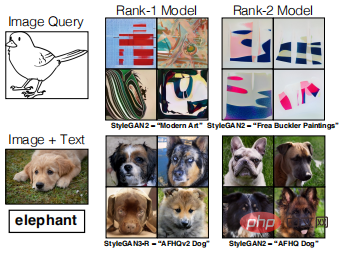

Legend: given an input text (such as "African animals"), image (such as a landscape), sketch (such as a standing cat), or a given model, the system outputs the top-ranked related models (second and third rows).

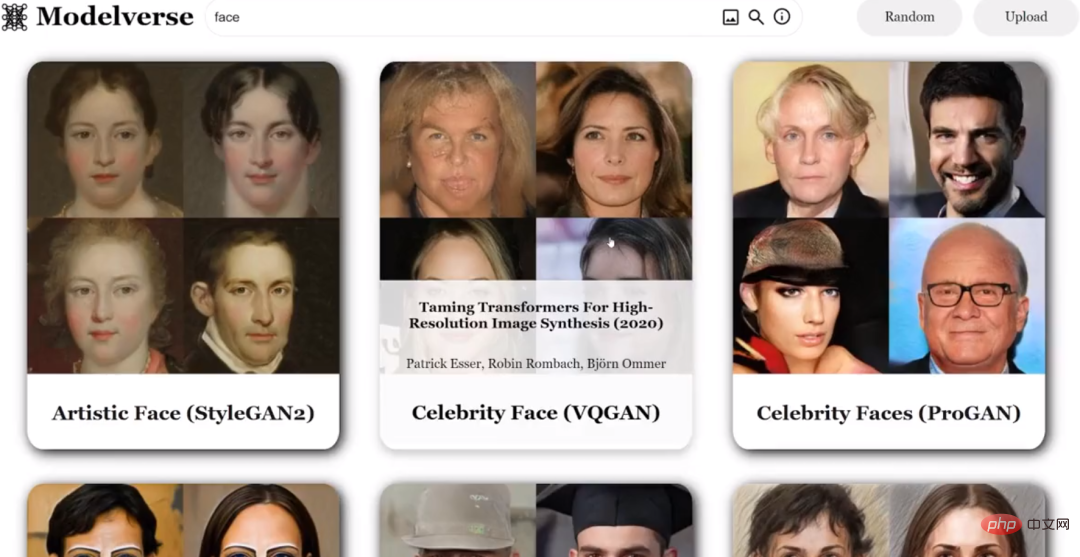

For example, if you enter the text "face", you will get the following results:

Enter a sketch of a horse:

1

Like traditional multimedia search, model search helps users find the model that best suits their specific needs. However, content-based model search has its own difficulties:

Determining whether a model can generate a specific image is computationally hard. Many deep generative models provide no efficient way to estimate density, and density estimates alone do not support cross-modal similarity assessment. Sampling-based Monte Carlo methods, in turn, make the search process very slow.

To this end, Zhu Junyan’s team proposed a new model search system.

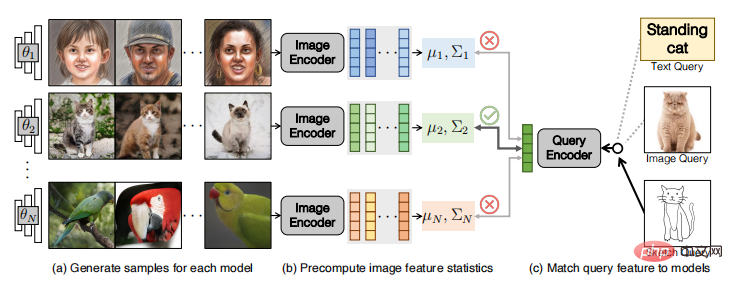

Each generative model produces an image distribution, so the authors frame search as an optimization problem: find the model that maximizes the probability of generating a match to the query. As shown in the figure below, the system consists of a pre-caching stage (a, b) and an inference stage (c).

Given a set of models, (a) the system first generates 50K samples for each model; (b) it then encodes the images into image features and computes the first- and second-order feature statistics for each model. These statistics are cached in the system to improve efficiency; (c) during the inference stage, queries of different modalities are supported, including images, sketches, text descriptions, another generative model, or a combination of these query types. The authors introduce an approximation here: the query is encoded as a feature vector, its similarity to each model's cached statistics is evaluated, and the model with the best similarity score is retrieved.
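The pre-caching and inference stages described above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the names `ModelStats`, `compute_stats`, `gaussian_log_density`, and `search` are hypothetical, the toy 2-D vectors stand in for real encoder features, and scoring a query by a diagonal-Gaussian log-density over the cached mean and variance is an assumed simplification of "evaluating the similarity between the query features and each model's statistics".

```python
import math
from dataclasses import dataclass


@dataclass
class ModelStats:
    """Cached per-model feature statistics (hypothetical structure)."""
    name: str
    mean: list[float]  # first-order statistic
    var: list[float]   # diagonal of the second-order statistic (assumed simplification)


def compute_stats(name, features, eps=1e-6):
    """Pre-caching: summarize a model's sample features by mean and variance."""
    n, dim = len(features), len(features[0])
    mean = [sum(f[d] for f in features) / n for d in range(dim)]
    var = [sum((f[d] - mean[d]) ** 2 for f in features) / n + eps for d in range(dim)]
    return ModelStats(name, mean, var)


def gaussian_log_density(query, stats):
    """Score a query feature under a diagonal Gaussian fitted to the model."""
    return -0.5 * sum(
        math.log(2 * math.pi * v) + (q - m) ** 2 / v
        for q, m, v in zip(query, stats.mean, stats.var)
    )


def search(query, cache, top_k=3):
    """Inference: rank cached models by how well they explain the query."""
    ranked = sorted(cache, key=lambda s: gaussian_log_density(query, s), reverse=True)
    return [s.name for s in ranked[:top_k]]


# Toy example: three "sample features" per model instead of 50K.
cache = [
    compute_stats("faces", [[1.0, 0.0], [0.9, 0.1], [1.1, -0.1]]),
    compute_stats("horses", [[0.0, 1.0], [0.1, 0.9], [-0.1, 1.1]]),
]
print(search([0.95, 0.05], cache, top_k=1))  # prints ['faces']
```

Because only the cached statistics are touched at query time, the cost of a search does not depend on the number of samples generated per model, which is the point of the pre-caching stage.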

2 Model search effect

The authors evaluate the algorithm and run ablation experiments on 133 deep generative models (including GANs, diffusion models, and autoregressive models). Compared with the Monte Carlo baseline, the method searches more efficiently, returning results within 0.08 milliseconds (a 5-times speedup) while maintaining high accuracy.

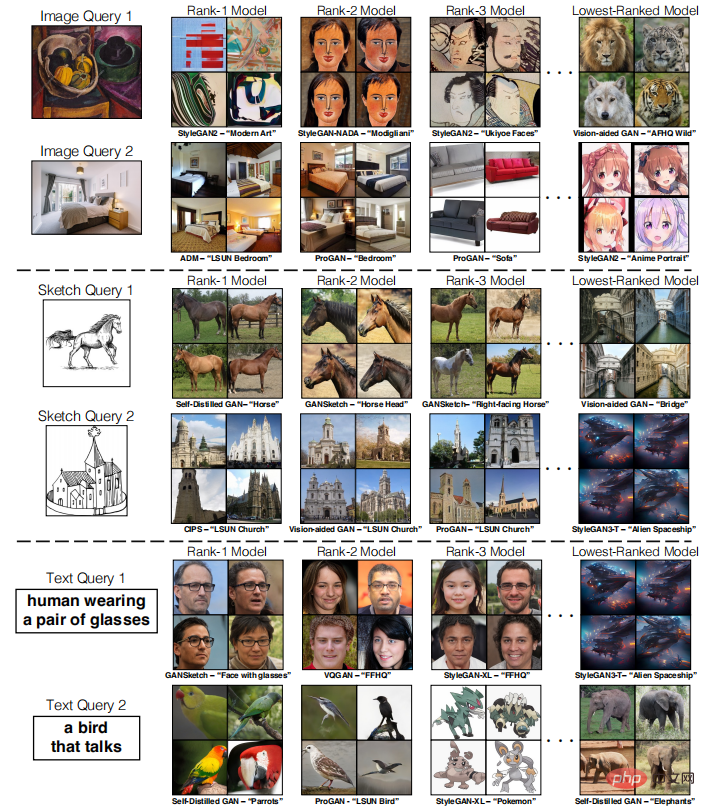

By comparing model retrieval results, we can also get a rough idea of which models can generate higher quality images for different query inputs. For example, the figure below shows the comparison of model retrieval results.

Legend: Example of model retrieval results

The top row shows image queries: given still life paintings as input, the system retrieves models of related artistic styles, with a StyleGAN2 model ranked first and a Vision-aided GAN model ranked last. The middle row shows sketch queries: given sketches of a horse and a church, it retrieves models such as ADM and ProGAN. The bottom row shows text queries: entering "person wearing glasses" and "talking bird" retrieves the top-ranked GANSketch model and Self-Distilled GAN model, respectively.

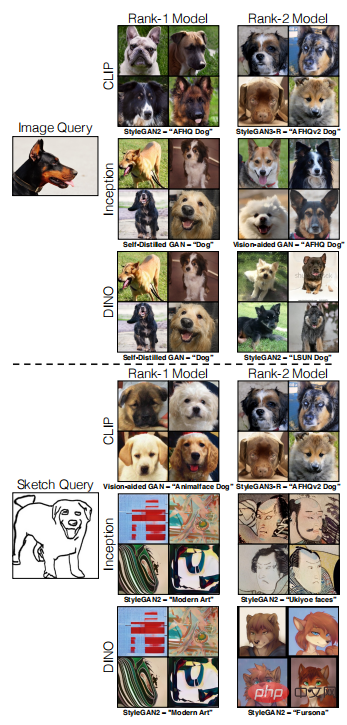

The authors also found that retrieval performance differs across network feature spaces. As shown in the figure below, for image queries the three networks CLIP, DINO, and Inception perform similarly; for sketch queries CLIP performs significantly better, while DINO and Inception are poorly suited to such queries and perform better on artistic style models.

Note: Comparison of image- and sketch-based model retrieval in different network feature spaces

In addition, the model search algorithm proposed in this work can also support a variety of applications, including multi-modal user query, similar model query, real image reconstruction and editing, etc.

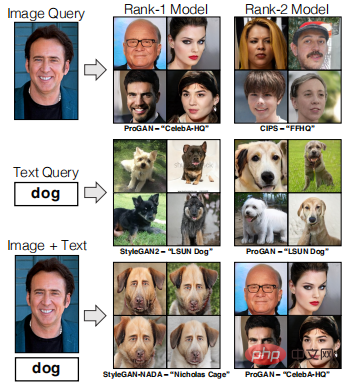

For example, a multi-modal query can help refine the model search. When only images of "Nicolas Cage" are given, only face models are retrieved; but when "Nicolas Cage" and "dog" are used together as input, the search retrieves the StyleGAN-NADA model that can generate "Nicolas Cage dog" images. (As shown below)

Note: Multi-modal user query

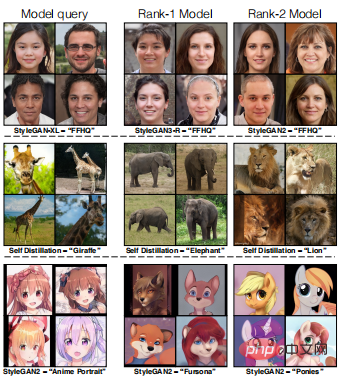
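One simple way to picture how a multi-modal query narrows the results is to multiply per-query similarity scores, so that a model must match every part of the query to rank highly. The sketch below is an assumption for illustration only: the article does not say how the system combines modalities, and `cosine`, `combined_score`, `rank_models`, and the toy 2-D "model mean" vectors are all hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def combined_score(queries, model_mean):
    """Product of per-query similarities, each rescaled from [-1, 1] to [0, 1]."""
    score = 1.0
    for q in queries:
        score *= (1.0 + cosine(q, model_mean)) / 2.0
    return score


def rank_models(queries, model_means):
    """Return model names sorted from best to worst combined score."""
    return sorted(
        model_means,
        key=lambda name: combined_score(queries, model_means[name]),
        reverse=True,
    )


# Toy feature space: axis 0 ~ "Nicolas Cage face", axis 1 ~ "dog".
model_means = {"faces": [1.0, 0.0], "dogs": [0.0, 1.0], "cage_dog": [0.7, 0.7]}
image_query = [1.0, 0.0]  # stands in for an encoded face image
text_query = [0.0, 1.0]   # stands in for the encoded text "dog"

print(rank_models([image_query], model_means)[0])              # prints faces
print(rank_models([image_query, text_query], model_means)[0])  # prints cage_dog
```

With the image alone, the face model wins; adding the text query shifts the top result to the model that matches both, which mirrors the behaviour described above.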

When the input is a face model, more face generation models can be retrieved and the categories remain similar. (As shown below)

Note: Similar model query

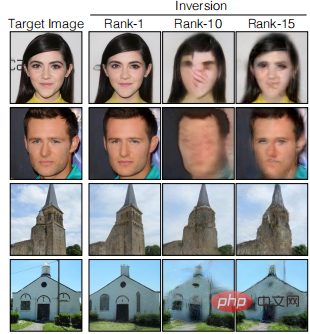
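When the query is itself a model, the comparison can be made directly between cached statistics. A common choice for comparing two feature distributions is the Fréchet distance between Gaussian fits (the quantity behind FID); whether Modelverse uses exactly this measure is an assumption here, and the sketch further assumes diagonal covariances so it stays dependency-free. The function names and the toy statistics are hypothetical.

```python
import math


def frechet_distance_diag(mean_a, var_a, mean_b, var_b):
    """Fréchet distance between two Gaussians with diagonal covariances.

    For diagonal covariances the trace term reduces to the squared
    difference of per-dimension standard deviations.
    """
    mean_term = sum((a - b) ** 2 for a, b in zip(mean_a, mean_b))
    cov_term = sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(var_a, var_b))
    return mean_term + cov_term


def similar_models(query_name, stats, top_k=2):
    """Rank all other models by distance to the query model's statistics."""
    q_mean, q_var = stats[query_name]
    others = [name for name in stats if name != query_name]
    others.sort(key=lambda name: frechet_distance_diag(q_mean, q_var, *stats[name]))
    return others[:top_k]


# Toy cached statistics: name -> (mean, variance).
stats = {
    "face_gan_a": ([1.0, 0.0], [0.01, 0.01]),
    "face_gan_b": ([0.9, 0.1], [0.02, 0.01]),
    "church_gan": ([0.0, 1.0], [0.01, 0.03]),
}
print(similar_models("face_gan_a", stats, top_k=1))  # prints ['face_gan_b']
```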

Given a query image of a real face, using a higher-ranked model yields a more accurate image reconstruction. The figure below shows examples of image inversion on CelebA-HQ and LSUN Church images using models of different ranks.

Caption: Project the real image to the retrieved StyleGAN2 model.

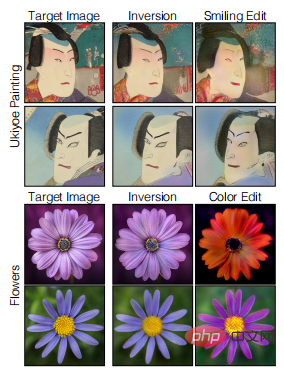

In the task of editing real images, the performance of different models also varies. In the figure below, the top-ranked model from image-based retrieval is used to invert the real image, which is then edited with GANSpace to turn the frowning face in the Ukiyo-e image into a smiling face.

Note: Editing real images

This research demonstrates the feasibility of model search, and there is still plenty of room for research on model search for text, audio, and other generated content.

At present, however, the proposed method still has limitations. For example, a query with a specific sketch sometimes matches a model of abstract shapes. A multi-modal query sometimes retrieves only a single model, and the system may have difficulty handling multi-modal queries such as a dog image combined with "elephant". (As shown below)

Note: Failure case

In addition, on the model search platform, the retrieved model list is not automatically sorted by model quality. Evaluating and ranking models on, for example, the resolution, fidelity, and matching degree of the generated images would make retrieval more convenient and help users better understand the pros and cons of current generative models. We look forward to follow-up work in this area.
