Is OpenAI worth giving up college offers to join?

For a doctoral student who wants to find a job in the field of computer science, how to choose between academia and industry?

During the job search process, Rowan Zellers, a doctoral student at the University of Washington, originally aimed to find a teaching position. Entering academia was the route he had set during his Ph.D. To this end, he drafted a target list, wrote many application materials, and used his social network in academia to look for more opportunities.

At the same time, he also began to be exposed to opportunities in the industry. Communication with industry companies gradually shook Rowan Zellers's ideas. He found that for his research field - multi-modal artificial intelligence - it is difficult and increasingly difficult to do large-scale basic research in academia, and Opportunities in industry are becoming more and more abundant.

Although technology companies around 2022 have slowed down or frozen hiring, Rowan Zellers still found a more attractive opportunity-OpenAI threw an olive branch to him.

In the final stage of job hunting, he did something he had never thought of—rejecting all academic positions and decided to sign OpenAI’s offer. In June 2022, Rowan Zellers officially bid farewell to many years of school time and joined OpenAI.

What enabled him to change his mindset within a year? In a recent blog post, Rowan Zellers shared some of his job hunting tips.

The following is the text of the blog:

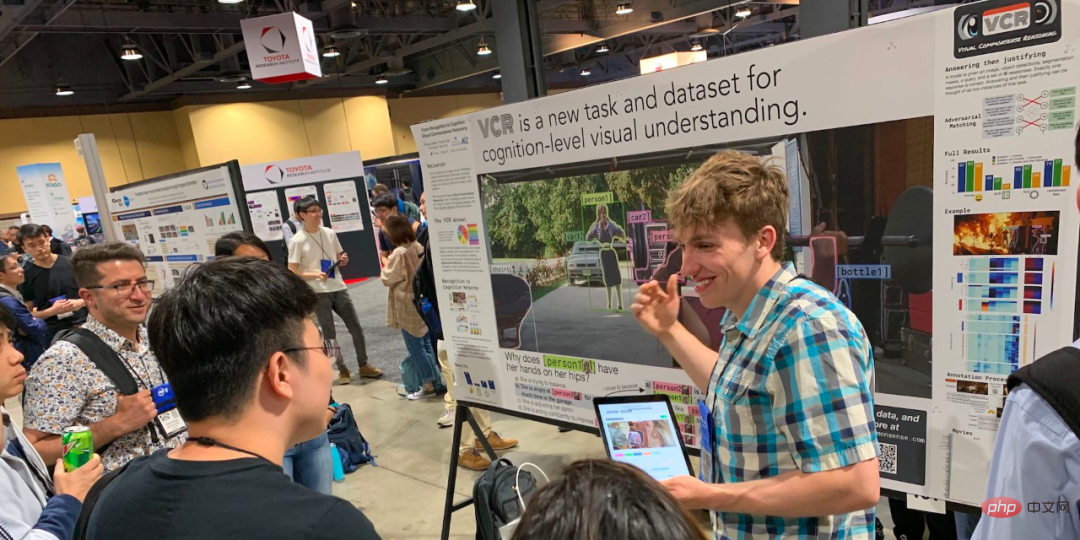

Rowan Zellers at CVPR 2019 Showcase your work on visual common sense reasoning.

#I was very nervous and stressed during the decision-making process — it felt like a twist at the time — but in the end I was very happy with how things turned out. For me, there are two key factors at play:

1) I feel like I can do what I’m passionate about at OpenAI;

2) San Francisco, home of OpenAI, is a great city to live and work in.

In this article, I will discuss the decision-making process further.

Why write this experience post?

#During the process of job hunting, I got a lot of information from professors in social networks about how to apply for jobs, how to interview, and how to Great advice for creating a great application. (In the first part of this series, I tried to distill this advice into an empirical article about applying for jobs.)

However, when it came time to actually decide, I still felt a little alone. . I admit that I have been super lucky to have such a strong network of professors and industry researchers that I can contact about these things. But the decision between career paths is more of a customized, personal decision, with "no right answer" to a certain extent.

Another factor that influenced the decision was that most people I knew seemed to have chosen a side between academia and industry. Most of the professors I know are firmly in the academic system (although there are some dabbles in industry), and most of the people I know in industry have never seriously considered academia as a career.

This feels particularly weird to me. Because in the middle of my PhD, my motivation for deciding to go the “academic route” was that doing so would allow me to postpone the final decision between academia or industry—given that the common view is that moving from academia to industry It's easier than turning the other way. However, almost a few years later, I realized that taking the academic route is actually part of my professional identity. Many of my peers were doing the same thing, so I felt that there was a force pushing me towards the academic route.

Anyway, I wrote this post to provide an N=1, opinionated perspective on how I made my own decisions among some pretty different choices.

In the process of searching for an academic job

My perspective on my work and goals changed

My office during the epidemic.

#As background, I was a PhD student at the University of Washington from 2016 to 2022 and absolutely loved the process. My research area is about multimodal artificial intelligence—building machine learning systems that can understand language, vision, and the rest of the world.

As written in the first part of this series, research interests have shaped my preset career path. I'm most excited about doing basic research and mentoring junior researchers. At least at the traditional level in computing, this is the focus of academia, while industry specializes in applied research and strives to translate scientific advances into successful products.

Looking for a job in academia gave me an idea of what it was like to be a professor at many different institutions and subfields of CS. I spoke with over 160 professors in all my interviews. In the end, I wasn't quite sure if academia was entirely for me.

It is difficult to do large-scale basic research in academia

Academia (more specifically, my mentor’s research group at the University of Washington) has been a very good environment for me over the past six years. I was driven to pursue a research direction that excited me and received generous support in terms of mentorship and resources. With these conditions, I am able to lead research on building multimodal AI systems that improve as they scale and then, (for me) generate more questions than answers.

In contrast, most of the big industry research labs during that time didn't feel like a good fit for my interests. I tried to apply for internships during my PhD but was never successful in finding one that seemed aligned with my research agenda. Most industry teams I know of are primarily language-focused or visual-focused, and I couldn't choose one or the other. I spent a lot of time at the Allen Institute for Artificial Intelligence, a non-profit research lab that feels very academic by comparison.

However, things are changing. In the areas I focus on, I worry that doing groundbreaking system-building research in academia is hard, and getting harder.

The reality is that building a system is really difficult. It requires a lot of resources and a lot of engineering. I think the incentive structure in academia is not suitable for this kind of high-cost, high-risk system-building research.

Building an artificial system and demonstrating that it scales well can take a graduate student years of time and more than $100,000 in unsubsidized computing expenses. And as the field grows, these numbers appear to be increasing exponentially. So writing a lot of papers is not a viable strategy, at least it shouldn't be the goal right now, but unfortunately I know a lot of academics who tend to use the number of papers as an objective measure. In addition, papers are the "bargaining chips" used by the academic community to apply for funding. We need to write a lot of papers, have something to talk about at conferences, find internship opportunities for students, etc. In the sense that a successful academic career is about helping students carve out their own research agendas (they may become professors elsewhere, and the cycle can continue), this is intrinsic to the collaboration required to do great research. of tension.

However, I think the broader trend is towards applied research in academia.

As model technology becomes more and more powerful and construction costs become higher and higher, more and more scholars are trying to build applications on top of the model. This is also a trend I see in the two major fields of NLP and CV. This, in turn, has affected the issues that academic circles pay attention to and discuss, and researchers have begun to care about how to solve some practical and specific problems.

In the academic world, I think that completing a successful research requires going through multiple stages, including raising funds and setting up a laboratory, and then I can officially start the scientific research project. When I finally get a good research result, several years may have passed. Perhaps someone has already made breakthrough results during this period, and it is difficult for me to stand out on this track. Having said that, progress in the field has been very rapid in the past few years.

More realistically, if I fail in a track, I may need to change the direction of my research. However, that was not my original intention and was probably the main reason why I ended up going the industry route.

Other differences between academia and industry

In my field of study, all responsibilities of academic professors include teaching (and preparing teaching materials), and contributing to the academy and field , build and manage computing infrastructure, apply for grants and manage funds, and more. Although I find these things interesting, I don't want to deal with so many work scenarios at the same time, which requires strong work ability to do it with ease. I want my job to be focused on an important task, such as teaching.

Similarly, during my PhD, I like to focus on only one important research question at a time. I think this kind of focused work scene exists more in the industry. As a professor, it is really not easy to do experiments and write code at the same time, and the industry has a clearer division of work.

I think a lot of people are subconsciously attracted to academia because of the prestige it gives, but I don't like that. I think focusing on rankings and reputation leads me to chase the wrong goals and leaves me feeling lost. On the other hand, many people are also attracted to the industry because it offers higher salaries, which is important. Fortunately I found an environment that gave me more inner satisfaction.

##Job and Career Security

I think a lot of people misunderstand tenure. It is true that tenure-track positions such as professorship are stable and have job security. But for those facing employment, the academic job market is also very complex. Of course, unlike industry researchers, academic researchers can easily change jobs even in a recession.

In academia, I would theoretically be free to research any topic, but in practice I might be held back by not having enough resources or a supportive enough environment. I joined OpenAI because I received great support here to solve the problems that interest me most. I think for any industry lab, solving the problems I care about needs to be aligned with the company's products, and OpenAI has just that arrangement.

Working on the team at OpenAI gives me the opportunity to mentor junior researchers and gain sufficient research resources. More importantly, I am driven to solve challenging problems that are important to me.

These are the reasons why I chose to sign a full-time position at OpenAI. Half a year into my job, it turns out that I really enjoy working at OpenAI.

The above is the detailed content of Is OpenAI worth giving up college offers to join?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1376

1376

52

52

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

Jan 14, 2024 pm 07:48 PM

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

Jan 14, 2024 pm 07:48 PM

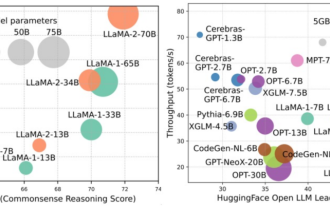

Large-scale language models (LLMs) have demonstrated compelling capabilities in many important tasks, including natural language understanding, language generation, and complex reasoning, and have had a profound impact on society. However, these outstanding capabilities require significant training resources (shown in the left image) and long inference times (shown in the right image). Therefore, researchers need to develop effective technical means to solve their efficiency problems. In addition, as can be seen from the right side of the figure, some efficient LLMs (LanguageModels) such as Mistral-7B have been successfully used in the design and deployment of LLMs. These efficient LLMs can significantly reduce inference memory while maintaining similar accuracy to LLaMA1-33B

Crushing H100, Nvidia's next-generation GPU is revealed! The first 3nm multi-chip module design, unveiled in 2024

Sep 30, 2023 pm 12:49 PM

Crushing H100, Nvidia's next-generation GPU is revealed! The first 3nm multi-chip module design, unveiled in 2024

Sep 30, 2023 pm 12:49 PM

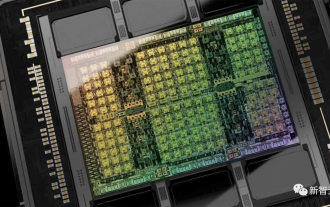

3nm process, performance surpasses H100! Recently, foreign media DigiTimes broke the news that Nvidia is developing the next-generation GPU, the B100, code-named "Blackwell". It is said that as a product for artificial intelligence (AI) and high-performance computing (HPC) applications, the B100 will use TSMC's 3nm process process, as well as more complex multi-chip module (MCM) design, and will appear in the fourth quarter of 2024. For Nvidia, which monopolizes more than 80% of the artificial intelligence GPU market, it can use the B100 to strike while the iron is hot and further attack challengers such as AMD and Intel in this wave of AI deployment. According to NVIDIA estimates, by 2027, the output value of this field is expected to reach approximately

The powerful combination of diffusion + super-resolution models, the technology behind Google's image generator Imagen

Apr 10, 2023 am 10:21 AM

The powerful combination of diffusion + super-resolution models, the technology behind Google's image generator Imagen

Apr 10, 2023 am 10:21 AM

In recent years, multimodal learning has received much attention, especially in the two directions of text-image synthesis and image-text contrastive learning. Some AI models have attracted widespread public attention due to their application in creative image generation and editing, such as the text image models DALL・E and DALL-E 2 launched by OpenAI, and NVIDIA's GauGAN and GauGAN2. Not to be outdone, Google released its own text-to-image model Imagen at the end of May, which seems to further expand the boundaries of caption-conditional image generation. Given just a description of a scene, Imagen can generate high-quality, high-resolution

The most comprehensive review of multimodal large models is here! 7 Microsoft researchers cooperated vigorously, 5 major themes, 119 pages of document

Sep 25, 2023 pm 04:49 PM

The most comprehensive review of multimodal large models is here! 7 Microsoft researchers cooperated vigorously, 5 major themes, 119 pages of document

Sep 25, 2023 pm 04:49 PM

The most comprehensive review of multimodal large models is here! Written by 7 Chinese researchers at Microsoft, it has 119 pages. It starts from two types of multi-modal large model research directions that have been completed and are still at the forefront, and comprehensively summarizes five specific research topics: visual understanding and visual generation. The multi-modal large-model multi-modal agent supported by the unified visual model LLM focuses on a phenomenon: the multi-modal basic model has moved from specialized to universal. Ps. This is why the author directly drew an image of Doraemon at the beginning of the paper. Who should read this review (report)? In the original words of Microsoft: As long as you are interested in learning the basic knowledge and latest progress of multi-modal basic models, whether you are a professional researcher or a student, this content is very suitable for you to come together.

I2V-Adapter from the SD community: no configuration required, plug and play, perfectly compatible with Tusheng video plug-in

Jan 15, 2024 pm 07:48 PM

I2V-Adapter from the SD community: no configuration required, plug and play, perfectly compatible with Tusheng video plug-in

Jan 15, 2024 pm 07:48 PM

The image-to-video generation (I2V) task is a challenge in the field of computer vision that aims to convert static images into dynamic videos. The difficulty of this task is to extract and generate dynamic information in the temporal dimension from a single image while maintaining the authenticity and visual coherence of the image content. Existing I2V methods often require complex model architectures and large amounts of training data to achieve this goal. Recently, a new research result "I2V-Adapter: AGeneralImage-to-VideoAdapter for VideoDiffusionModels" led by Kuaishou was released. This research introduces an innovative image-to-video conversion method and proposes a lightweight adapter module, i.e.

VPR 2024 perfect score paper! Meta proposes EfficientSAM: quickly split everything!

Mar 02, 2024 am 10:10 AM

VPR 2024 perfect score paper! Meta proposes EfficientSAM: quickly split everything!

Mar 02, 2024 am 10:10 AM

This work of EfficientSAM was included in CVPR2024 with a perfect score of 5/5/5! The author shared the result on a social media, as shown in the picture below: The LeCun Turing Award winner also strongly recommended this work! In recent research, Meta researchers have proposed a new improved method, namely mask image pre-training (SAMI) using SAM. This method combines MAE pre-training technology and SAM models to achieve high-quality pre-trained ViT encoders. Through SAMI, researchers try to improve the performance and efficiency of the model and provide better solutions for vision tasks. The proposal of this method brings new ideas and opportunities to further explore and develop the fields of computer vision and deep learning. by combining different

2022 Boltzmann Prize announced: Founder of Hopfield Network wins award

Aug 13, 2023 pm 08:49 PM

2022 Boltzmann Prize announced: Founder of Hopfield Network wins award

Aug 13, 2023 pm 08:49 PM

The two scientists who have won the 2022 Boltzmann Prize have been announced. This award was established by the IUPAP Committee on Statistical Physics (C3) to recognize researchers who have made outstanding achievements in the field of statistical physics. The winner must be a scientist who has not previously won a Boltzmann Prize or a Nobel Prize. This award began in 1975 and is awarded every three years in memory of Ludwig Boltzmann, the founder of statistical physics. Deepak Dharistheoriginalstatement. Reason for award: In recognition of Deepak Dharistheoriginalstatement's pioneering contributions to the field of statistical physics, including Exact solution of self-organized critical model, interface growth, disorder

MiniGPT-5, which unifies image and text generation, is here: Token becomes Voken, and the model can not only continue writing, but also automatically add pictures.

Oct 11, 2023 pm 12:45 PM

MiniGPT-5, which unifies image and text generation, is here: Token becomes Voken, and the model can not only continue writing, but also automatically add pictures.

Oct 11, 2023 pm 12:45 PM

Large-scale models are making the leap between language and vision, promising to seamlessly understand and generate text and image content. In a series of recent studies, multimodal feature integration is not only a growing trend but has already led to key advances ranging from multimodal conversations to content creation tools. Large language models have demonstrated unparalleled capabilities in text understanding and generation. However, simultaneously generating images with coherent textual narratives is still an area to be developed. Recently, a research team at the University of California, Santa Cruz proposed MiniGPT-5, an innovative interleaving algorithm based on the concept of "generative vouchers". Visual language generation technology. Paper address: https://browse.arxiv.org/p