The blueprint for the 'golden decade' of autonomous driving unfolds, with regions across China accelerating deployment to seize the window of opportunity

At present, the autonomous driving industry generally expects China to achieve large-scale commercialization of autonomous driving by 2030, and regards 2020-2030 as the golden development period for the technology. As the blueprint for this "golden decade" gradually unfolds, China's laws and regulations on intelligent connected vehicles continue to improve, and high-level autonomous driving is booming.

At the same time, local governments are accelerating the formulation of policies to promote the commercialization of autonomous driving. Not long ago, Chongqing and Wuhan took the lead in launching fully driverless commercial mobility services, a milestone in the commercialization of autonomous driving in China. Recently, cities such as Beijing, Shanghai, Guangzhou, and Wuxi have made frequent moves, launching a new round of autonomous driving deployment. Intelligent connected vehicles have entered a critical period of rapid technological evolution and accelerated industrial deployment.

Beijing: Phase 3.0 construction tasks will be fully launched

Two years ago, Beijing officially launched construction of China's first connected, cloud-controlled high-level autonomous driving demonstration zone, accumulating experience in standardized construction for innovative vehicle-road-cloud collaboration applications. To date, the demonstration zone has completed its Phase 1.0 and Phase 2.0 construction tasks. According to reports, the Beijing Economic and Technological Development Zone now has integrated vehicle-road-cloud functions covering 329 standardized intelligent connected intersections, 750 kilometers of two-way urban roads, and 10 kilometers of highway, laying a solid foundation for launching Phase 3.0, the scaled-deployment and scenario-expansion stage.

On September 16, at the 2022 World Intelligent Connected Vehicle Conference, the official in charge of Beijing's high-level autonomous driving demonstration zone revealed that Beijing will next fully launch Phase 3.0 construction. This phase will build a unified city-wide smart-city private network, promote the adaptive deployment of no fewer than 1,000 high-level autonomous vehicle terminals, gradually complete the expansion of the demonstration zone to 500 square kilometers, support Internet of Vehicles services such as vehicle-road collaboration, remote driving, and online supervision, and broadly expand smart-city application scenarios.

Shanghai: The city’s first self-driving car without a safety driver was launched

On September 27, Shanghai's first self-driving car without a safety driver was put into operation and the "No Man's Land" demonstration experience area officially opened, another milestone in Shanghai's promotion of innovative development of intelligent connected vehicles. The area aims to further enrich the test scenarios for intelligent connected vehicles and, by building a comprehensive supervision and dispatch display platform, to provide a real-world environment and information and safety safeguards for the normal, stable autonomous operation of intelligent connected vehicles.

According to reports, the "No Man's Land" demonstration experience area will carry out driverless demonstration applications on a 3.8-kilometer semi-open road in the Shanghai Auto Expo Park, opening in two phases, with the goal of creating China's first demonstration-operation model for unmanned high-level autonomous driving focused on removing safety personnel. The first phase, covering 1.2 kilometers of road, has been completed and put into use; the second phase, covering 2.6 kilometers, is still being planned and built and is expected to be completed by the end of this year.

Currently, the Apollo Moon ARCFOX edition self-driving car provided by the autonomous ride-hailing platform Luobo Kuaipao (Apollo Go) has begun fully driverless testing in the Shanghai Auto Expo Park. As testing advances in an orderly manner, Luobo Kuaipao will offer fully driverless ride services to the public on more road sections in Jiading, Shanghai.

Guangzhou: 260 self-driving vehicles will be put into use this year and next year

On September 14, the General Office of the Ministry of Transport announced the first batch of 18 smart transportation pilot application projects (in the autonomous driving and smart shipping directions), and the Guangzhou Urban Travel Service Autonomous Driving Pilot Application project was among those selected. Under the project, 260 autonomous vehicles will be put into use this year and next. According to the announcement, from August 2022 to December 2023 the pilot will deploy 50 self-driving buses on routes such as the Canton Tower Loop Line and the Biological Island Loop Line, serving no fewer than 1 million passengers in total. It will also put 210 self-driving passenger cars into use in the Guangzhou Artificial Intelligence and Digital Economy Pilot Zone, serving no fewer than 300,000 passengers in total and accumulating no less than 4 million kilometers of mileage and 200,000 hours of operation. The expected outcomes of the pilot are a summary report on the pilot work and no fewer than two technical guidelines or standard specifications for autonomous driving urban travel service scenarios.

Wuxi: Will take the lead in carrying out citywide testing of intelligent connected vehicles

On September 22, the new version of the "Wuxi Intelligent Connected Vehicle Road Testing and Demonstration Application Management Implementation Rules" (hereinafter the "Implementation Rules") was officially released. Wuxi took the lead in expanding the scope of road testing, demonstration applications, and demonstration operations of intelligent connected vehicles with onboard drivers to the entire city, becoming China's first city to open citywide testing of intelligent connected vehicles. As China's first national-level Internet of Vehicles pilot zone and one of the first batch of "dual intelligence" (smart city and intelligent connected vehicle) pilot cities, Wuxi has been at the national forefront in developing the intelligent connected vehicle industry. In 2021, Wuxi issued a trial version of the "Implementation Rules". Over the past year, road testing and demonstration applications of intelligent connected vehicles have proceeded in an orderly manner: a total of 177 km of public test roads have been opened, and Internet of Vehicles infrastructure now covers 450 km² and 856 points. The new version of the "Implementation Rules" not only specifies the entities, drivers, and vehicles required for intelligent connected vehicle testing and demonstration, but also defines two modes, with and without an onboard driver, and adds provisions for demonstration operations, giving intelligent connected vehicles more room for innovation in both technology and operating models.

In addition, Wuxi's first local regulation on the Internet of Vehicles, the "Wuxi City Internet of Vehicles Development Promotion Regulations (Draft)", was recently passed. It makes comprehensive arrangements for Internet of Vehicles infrastructure construction, the depth and breadth of applications, technological innovation, and industrial development. The regulation uses legislation to safeguard the development of new technologies, new models, and new business formats for the Internet of Vehicles and intelligent connected vehicles, providing fertile ground for related technology enterprises to establish themselves and grow in Wuxi.

Wuhan: Self-driving vehicles are about to achieve cross-district travel

On September 15, the risk-level assessment of Wuhan's fourth batch of intelligent connected vehicle road-test roads passed expert review, and the roads are planned to open officially in the near future. Once they open, Wuhan's intelligent connected test roads of all types will exceed 400 kilometers in total, ranking among the longest in the country.

According to reports, Wuhan has opened three batches totaling 340 kilometers of test roads for intelligent connected vehicles and intelligent transportation. Of these, 321 kilometers lie in the Wuhan Economic and Technological Development Zone and are fully covered by and open to the 5G commercial network, including a 106-kilometer vehicle-road collaboration section with full 5G coverage; this makes it China's largest open test road network, with the richest scenarios and the first full 5G access. The fourth batch of open test roads, about 70 kilometers long, will connect the core areas of the Wuhan Economic Development Zone and Hanyang District. This means that autonomous vehicles will drive out of "China Car Valley" and achieve cross-district travel for the first time.

Conclusion: Since 2022, alongside frequent moves by major cities, related companies have been racing to increase investment and stake out territory in the new blue ocean of autonomous driving, accelerating its rollout. Fully driverless commercial operations are also gradually expanding from pilots in individual cities and regions to the whole country.

The above is the detailed content of The blueprint for the 'golden decade' of autonomous driving unfolds, with various regions accelerating their layout to seize the opportunity period. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1387

1387

52

52

How to solve the long tail problem in autonomous driving scenarios?

Jun 02, 2024 pm 02:44 PM

How to solve the long tail problem in autonomous driving scenarios?

Jun 02, 2024 pm 02:44 PM

Yesterday during the interview, I was asked whether I had done any long-tail related questions, so I thought I would give a brief summary. The long-tail problem of autonomous driving refers to edge cases in autonomous vehicles, that is, possible scenarios with a low probability of occurrence. The perceived long-tail problem is one of the main reasons currently limiting the operational design domain of single-vehicle intelligent autonomous vehicles. The underlying architecture and most technical issues of autonomous driving have been solved, and the remaining 5% of long-tail problems have gradually become the key to restricting the development of autonomous driving. These problems include a variety of fragmented scenarios, extreme situations, and unpredictable human behavior. The "long tail" of edge scenarios in autonomous driving refers to edge cases in autonomous vehicles (AVs). Edge cases are possible scenarios with a low probability of occurrence. these rare events

FisheyeDetNet: the first target detection algorithm based on fisheye camera

Apr 26, 2024 am 11:37 AM

FisheyeDetNet: the first target detection algorithm based on fisheye camera

Apr 26, 2024 am 11:37 AM

Target detection is a relatively mature problem in autonomous driving systems, among which pedestrian detection is one of the earliest algorithms to be deployed. Very comprehensive research has been carried out in most papers. However, distance perception using fisheye cameras for surround view is relatively less studied. Due to large radial distortion, standard bounding box representation is difficult to implement in fisheye cameras. To alleviate the above description, we explore extended bounding box, ellipse, and general polygon designs into polar/angular representations and define an instance segmentation mIOU metric to analyze these representations. The proposed model fisheyeDetNet with polygonal shape outperforms other models and simultaneously achieves 49.5% mAP on the Valeo fisheye camera dataset for autonomous driving

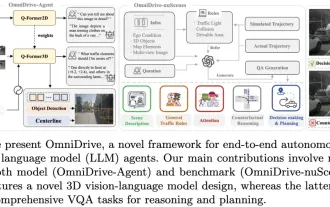

LLM is all done! OmniDrive: Integrating 3D perception and reasoning planning (NVIDIA's latest)

May 09, 2024 pm 04:55 PM

LLM is all done! OmniDrive: Integrating 3D perception and reasoning planning (NVIDIA's latest)

May 09, 2024 pm 04:55 PM

Written above & the author’s personal understanding: This paper is dedicated to solving the key challenges of current multi-modal large language models (MLLMs) in autonomous driving applications, that is, the problem of extending MLLMs from 2D understanding to 3D space. This expansion is particularly important as autonomous vehicles (AVs) need to make accurate decisions about 3D environments. 3D spatial understanding is critical for AVs because it directly impacts the vehicle’s ability to make informed decisions, predict future states, and interact safely with the environment. Current multi-modal large language models (such as LLaVA-1.5) can often only handle lower resolution image inputs (e.g.) due to resolution limitations of the visual encoder, limitations of LLM sequence length. However, autonomous driving applications require

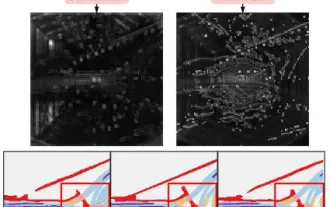

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

A purely visual annotation solution mainly uses vision plus some data from GPS, IMU and wheel speed sensors for dynamic annotation. Of course, for mass production scenarios, it doesn’t have to be pure vision. Some mass-produced vehicles will have sensors like solid-state radar (AT128). If we create a data closed loop from the perspective of mass production and use all these sensors, we can effectively solve the problem of labeling dynamic objects. But there is no solid-state radar in our plan. Therefore, we will introduce this most common mass production labeling solution. The core of a purely visual annotation solution lies in high-precision pose reconstruction. We use the pose reconstruction scheme of Structure from Motion (SFM) to ensure reconstruction accuracy. But pass

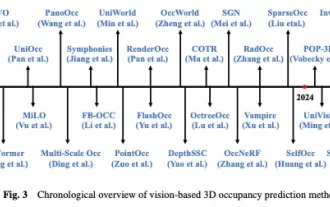

Take a look at the past and present of Occ and autonomous driving! The first review comprehensively summarizes the three major themes of feature enhancement/mass production deployment/efficient annotation.

May 08, 2024 am 11:40 AM

Take a look at the past and present of Occ and autonomous driving! The first review comprehensively summarizes the three major themes of feature enhancement/mass production deployment/efficient annotation.

May 08, 2024 am 11:40 AM

Written above & The author’s personal understanding In recent years, autonomous driving has received increasing attention due to its potential in reducing driver burden and improving driving safety. Vision-based three-dimensional occupancy prediction is an emerging perception task suitable for cost-effective and comprehensive investigation of autonomous driving safety. Although many studies have demonstrated the superiority of 3D occupancy prediction tools compared to object-centered perception tasks, there are still reviews dedicated to this rapidly developing field. This paper first introduces the background of vision-based 3D occupancy prediction and discusses the challenges encountered in this task. Next, we comprehensively discuss the current status and development trends of current 3D occupancy prediction methods from three aspects: feature enhancement, deployment friendliness, and labeling efficiency. at last

Beyond BEVFusion! DifFUSER: Diffusion model enters autonomous driving multi-task (BEV segmentation + detection dual SOTA)

Apr 22, 2024 pm 05:49 PM

Beyond BEVFusion! DifFUSER: Diffusion model enters autonomous driving multi-task (BEV segmentation + detection dual SOTA)

Apr 22, 2024 pm 05:49 PM

Written above & the author’s personal understanding At present, as autonomous driving technology becomes more mature and the demand for autonomous driving perception tasks increases, the industry and academia very much hope for an ideal perception algorithm model that can simultaneously complete three-dimensional target detection and based on Semantic segmentation task in BEV space. For a vehicle capable of autonomous driving, it is usually equipped with surround-view camera sensors, lidar sensors, and millimeter-wave radar sensors to collect data in different modalities. This makes full use of the complementary advantages between different modal data, making the data complementary advantages between different modalities. For example, 3D point cloud data can provide information for 3D target detection tasks, while color image data can provide more information for semantic segmentation tasks. accurate information. Needle

Towards 'Closed Loop' | PlanAgent: New SOTA for closed-loop planning of autonomous driving based on MLLM!

Jun 08, 2024 pm 09:30 PM

Towards 'Closed Loop' | PlanAgent: New SOTA for closed-loop planning of autonomous driving based on MLLM!

Jun 08, 2024 pm 09:30 PM

The deep reinforcement learning team of the Institute of Automation, Chinese Academy of Sciences, together with Li Auto and others, proposed a new closed-loop planning framework for autonomous driving based on the multi-modal large language model MLLM - PlanAgent. This method takes a bird's-eye view of the scene and graph-based text prompts as input, and uses the multi-modal understanding and common sense reasoning capabilities of the multi-modal large language model to perform hierarchical reasoning from scene understanding to the generation of horizontal and vertical movement instructions, and Further generate the instructions required by the planner. The method is tested on the large-scale and challenging nuPlan benchmark, and experiments show that PlanAgent achieves state-of-the-art (SOTA) performance on both regular and long-tail scenarios. Compared with conventional large language model (LLM) methods, PlanAgent

Overview of path planning: based on sampling, search, and optimization, all done!

Jun 01, 2024 pm 08:12 PM

Overview of path planning: based on sampling, search, and optimization, all done!

Jun 01, 2024 pm 08:12 PM

1 Overview of decision control and motion planning Current decision control methods can be divided into three categories: sequential planning, behavior-aware planning, and end-to-end planning. Sequential planning: The most traditional method, the three parts of perception, decision-making and control are relatively clear; behavior-aware planning: Compared with the first method, the highlight is the introduction of human-machine co-driving, vehicle-road collaboration and vehicle risk estimation of the external dynamic environment; End-to-end planning: DL and DRL technologies use a large amount of data training to obtain sensory information such as images, steering wheel angles, etc.