How to use caching in Go?

Caching is a widely used technique for improving system performance and response time: frequently accessed data is kept in fast storage so that it does not have to be recomputed or refetched from a slower source. In Go there are several ways to cache data, including the built-in map, sync.Map, LRU caches, and external stores such as Redis. Different scenarios and requirements call for different solutions. In this article, we discuss the relevant knowledge and techniques for using caching in Go.

Cache implementations in Go

In Go, we can use a map to implement a basic cache. For example, we can define a map from a URL to a byte slice holding its response content. When handling a request, we first check whether the response for that URL is already in the cache. If it is, we return the cached content directly; otherwise, we fetch the response from the original data source and add it to the cache. Because a plain map is not safe for concurrent use, the example protects it with a sync.RWMutex. The following is an implementation example:

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"sync"
)

// cache maps a URL to its response body. The embedded RWMutex lets many
// readers proceed in parallel while writers get exclusive access.
var cache = struct {
	sync.RWMutex
	data map[string][]byte
}{data: make(map[string][]byte)}

func main() {
	url := "https://www.example.com"

	if res, ok := get(url); ok {
		fmt.Println("cache hit")
		fmt.Println(string(res))
		return
	}

	fmt.Println("cache miss")
	// Fetch the response from the original data source, then cache it.
	res, err := fetchContent(url)
	if err != nil {
		fmt.Println("fetch failed:", err)
		return
	}
	set(url, res)
	fmt.Println(string(res))
}

// get looks up key while holding the read lock.
func get(key string) ([]byte, bool) {
	cache.RLock()
	defer cache.RUnlock()
	res, ok := cache.data[key]
	return res, ok
}

// set stores value under key while holding the write lock.
func set(key string, value []byte) {
	cache.Lock()
	defer cache.Unlock()
	cache.data[key] = value
}

// fetchContent downloads the response body of url.
func fetchContent(url string) ([]byte, error) {
	resp, err := http.Get(url)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	return io.ReadAll(resp.Body)
}
```

In the above code example, we first define a global variable named cache, which embeds a read-write lock and a map that stores the mapping from each URL to its response content. When a request comes in, the get function looks up the response in the cache and returns it directly on a hit. On a miss, the fetchContent function retrieves the response from the original data source, and set adds it to the cache.

Besides a plain map, Go offers other options: the standard library provides sync.Map, and third-party packages provide LRU caches.

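sync.Map is a map that is safe for concurrent use by multiple goroutines without additional locking by the caller. According to its documentation, it is optimized for two cases: when each key is written once but read many times (as in a cache that only grows), and when goroutines read and write disjoint sets of keys. In those cases it can reduce lock contention compared with a map guarded by a Mutex or RWMutex; for other workloads, a plain map with a lock is usually the better choice. The following is an implementation example: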

```go
package main

import (
	"fmt"
	"sync"
)

func main() {
	m := sync.Map{}

	// Store saves a key/value pair.
	m.Store("key1", "value1")
	m.Store("key2", "value2")

	// Load returns the value and whether the key was present.
	if res, ok := m.Load("key1"); ok {
		fmt.Println(res)
	}

	// Range visits every entry; returning false would stop the iteration.
	m.Range(func(k, v interface{}) bool {
		fmt.Printf("%v : %v\n", k, v)
		return true
	})
}
```

In the above code example, we store data in the map by calling the Store method of sync.Map and retrieve it with the Load method. The Range method traverses all entries; its iteration order is not specified.

An LRU (Least Recently Used) cache is a common caching strategy with a fixed capacity: when the cache is full, the entry that has gone unused for the longest time is evicted to make room for new data. In Go, you can use the hashicorp/golang-lru package to implement an LRU cache. The following is an implementation example:

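```go
package main

import (
	"fmt"

	lru "github.com/hashicorp/golang-lru"
)

func main() {
	// New creates a cache that holds at most 128 entries.
	cache, err := lru.New(128)
	if err != nil {
		panic(err)
	}

	cache.Add("key1", "value1")
	cache.Add("key2", "value2")

	if res, ok := cache.Get("key1"); ok {
		fmt.Println(res)
	}

	cache.Remove("key2")
	fmt.Println(cache.Len()) // 1
}
```

In the above code example, we first create an LRU cache that holds at most 128 entries, add data with the Add method, retrieve it with the Get method, and delete it with the Remove method. Once the cache holds 128 entries, each further Add evicts the least recently used entry. This example uses the package's original import path; its /v2 module offers the same cache with a generic, type-safe API.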
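To make the eviction rule concrete, here is a minimal LRU sketch built only on the standard library (container/list plus a map). It illustrates the algorithm and does not reproduce golang-lru's internals; the LRU type and its methods are hypothetical names, and the sketch is not safe for concurrent use.

```go
package main

import (
	"container/list"
	"fmt"
)

// entry is stored in each list element: the key is kept so the map entry
// can be deleted on eviction.
type entry struct {
	key   string
	value string
}

// LRU is a fixed-capacity cache that evicts the least recently used entry.
type LRU struct {
	capacity int
	order    *list.List               // front = most recently used
	items    map[string]*list.Element // key -> element in order
}

func NewLRU(capacity int) *LRU {
	return &LRU{capacity: capacity, order: list.New(), items: make(map[string]*list.Element)}
}

// Get returns the value for key and marks it as most recently used.
func (c *LRU) Get(key string) (string, bool) {
	el, ok := c.items[key]
	if !ok {
		return "", false
	}
	c.order.MoveToFront(el)
	return el.Value.(*entry).value, true
}

// Add inserts or updates key, evicting the least recently used entry
// when the cache grows past its capacity.
func (c *LRU) Add(key, value string) {
	if el, ok := c.items[key]; ok {
		el.Value.(*entry).value = value
		c.order.MoveToFront(el)
		return
	}
	c.items[key] = c.order.PushFront(&entry{key: key, value: value})
	if c.order.Len() > c.capacity {
		oldest := c.order.Back()
		c.order.Remove(oldest)
		delete(c.items, oldest.Value.(*entry).key)
	}
}

// Len reports the number of cached entries.
func (c *LRU) Len() int { return c.order.Len() }

func main() {
	c := NewLRU(2)
	c.Add("a", "1")
	c.Add("b", "2")
	c.Get("a")      // "a" becomes the most recently used entry
	c.Add("c", "3") // over capacity: evicts "b"
	_, ok := c.Get("b")
	fmt.Println("b cached:", ok, "len:", c.Len())
}
```

The list keeps entries ordered by recency and the map gives O(1) access to each list element, so both Get and Add run in constant time.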

How to design an efficient caching system

For different scenarios and needs, we often need to choose different caching strategies. However, no matter what caching strategy is adopted, we need to consider how to design an efficient caching system.

The following are some tips for designing an efficient cache system:

- Set an appropriate cache size

The cache size should be chosen based on the system's available memory and its data access patterns. If the cache is too large, it competes with the rest of the system for memory and overall performance drops. If it is too small, the hit rate falls, most requests still reach the original data source, and the cache provides little benefit.

- Set an appropriate cache expiration time

An appropriate expiration time keeps cached data from becoming too stale and ensures that clients see reasonably fresh data. It should be set based on how often the underlying data changes and how it is accessed.

- Use a multi-level cache

For frequently accessed data, use a small in-memory cache; for less frequently accessed data, use a larger cache on disk or in network storage. Tiering the cache this way improves both the performance and the scalability of the system.
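The lookup order of a multi-level cache can be sketched as follows: try the small, fast tier first, fall back to the larger, slower tier, and only then query the data source, filling the faster tiers on the way back. Store, mapStore, and TieredCache are hypothetical names; both tiers are simulated with in-memory maps here, whereas in a real system the second tier might be a disk cache or Redis.

```go
package main

import "fmt"

// Store is a hypothetical interface shared by every cache tier.
type Store interface {
	Get(key string) (string, bool)
	Set(key, value string)
}

// mapStore is an in-memory Store that counts its hits. Here it simulates
// both the fast local tier and the slower shared tier.
type mapStore struct {
	data map[string]string
	hits int
}

func newMapStore() *mapStore { return &mapStore{data: make(map[string]string)} }

func (s *mapStore) Get(key string) (string, bool) {
	v, ok := s.data[key]
	if ok {
		s.hits++
	}
	return v, ok
}

func (s *mapStore) Set(key, value string) { s.data[key] = value }

// TieredCache checks l1 (small, fast) before l2 (larger, slower) and
// calls load only when both tiers miss.
type TieredCache struct {
	l1, l2 Store
	load   func(key string) string // hypothetical data-source loader
}

func (c *TieredCache) Get(key string) string {
	if v, ok := c.l1.Get(key); ok {
		return v
	}
	if v, ok := c.l2.Get(key); ok {
		c.l1.Set(key, v) // promote to the fast tier
		return v
	}
	v := c.load(key)
	c.l2.Set(key, v)
	c.l1.Set(key, v)
	return v
}

func main() {
	loads := 0
	c := &TieredCache{l1: newMapStore(), l2: newMapStore(), load: func(k string) string {
		loads++
		return "value-for-" + k
	}}
	c.Get("user:1") // misses both tiers and loads from the source
	c.Get("user:1") // served by l1
	fmt.Println("data source loads:", loads)
}
```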

- Cache penetration

Cache penetration occurs when requests ask for data that exists neither in the cache nor in the data source, so every such request passes through the cache and hits the data source. To avoid it, you can cache the negative result as well: when a query finds no data, return empty data and store an entry whose Boolean flag marks the key as nonexistent. Subsequent queries for that key check the flag and return immediately instead of querying the data source again.
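The flag-based approach above can be sketched as negative caching: the cache stores an entry for every key it has queried, with a found flag that is false when the data source had nothing. Cache, entry, and the query function are hypothetical names; for simplicity the lock is held during the query, which serializes lookups.

```go
package main

import (
	"fmt"
	"sync"
)

// entry is either a real value or a marker that the key does not exist.
type entry struct {
	value string
	found bool // false: the data source had no such key
}

// Cache stores negative results as well as positive ones, so repeated
// lookups for a missing key do not reach the data source every time.
type Cache struct {
	mu      sync.Mutex
	entries map[string]entry
	query   func(key string) (string, bool) // hypothetical data-source query
}

func NewCache(query func(string) (string, bool)) *Cache {
	return &Cache{entries: make(map[string]entry), query: query}
}

// Get returns the value and whether it exists in the data source.
func (c *Cache) Get(key string) (string, bool) {
	c.mu.Lock()
	defer c.mu.Unlock()
	if e, ok := c.entries[key]; ok {
		return e.value, e.found // hit, possibly a cached "not found"
	}
	v, found := c.query(key)
	c.entries[key] = entry{value: v, found: found} // cache misses too
	return v, found
}

func main() {
	db := map[string]string{"alice": "admin"}
	queries := 0
	c := NewCache(func(k string) (string, bool) {
		queries++
		v, ok := db[k]
		return v, ok
	})
	c.Get("mallory") // not in the data source: queried once
	c.Get("mallory") // answered by the cached "not found" entry
	fmt.Println("data source queries:", queries)
}
```

In practice, negative entries are usually given a short expiration time so that a key created later in the data source becomes visible.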

- Cache avalanche

Cache avalanche occurs when a large amount of cached data expires at the same time, so a flood of requests falls on the back-end system at once and may crash it. To avoid it, randomize expiration times, or divide expirations into several time windows with random expiration times within each window, so that entries do not all expire at the same moment and overload the system.

Summary

In Go, caching can effectively improve system performance and response time. We can choose among several implementations, such as map, sync.Map, LRU caches, and Redis. When designing an efficient cache system, select a caching strategy suited to the specific requirements and scenario, and take into account cache size, expiration time, multi-level caching, cache penetration, and cache avalanche.

The above is the detailed content of "How to use caching in Go?". For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

How to use reflection to access private fields and methods in golang

May 03, 2024 pm 12:15 PM

How to use reflection to access private fields and methods in golang

May 03, 2024 pm 12:15 PM

You can use reflection to access private fields and methods in Go language: To access private fields: obtain the reflection value of the value through reflect.ValueOf(), then use FieldByName() to obtain the reflection value of the field, and call the String() method to print the value of the field . Call a private method: also obtain the reflection value of the value through reflect.ValueOf(), then use MethodByName() to obtain the reflection value of the method, and finally call the Call() method to execute the method. Practical case: Modify private field values and call private methods through reflection to achieve object control and unit test coverage.

The difference between performance testing and unit testing in Go language

May 08, 2024 pm 03:09 PM

The difference between performance testing and unit testing in Go language

May 08, 2024 pm 03:09 PM

Performance tests evaluate an application's performance under different loads, while unit tests verify the correctness of a single unit of code. Performance testing focuses on measuring response time and throughput, while unit testing focuses on function output and code coverage. Performance tests simulate real-world environments with high load and concurrency, while unit tests run under low load and serial conditions. The goal of performance testing is to identify performance bottlenecks and optimize the application, while the goal of unit testing is to ensure code correctness and robustness.

Caching mechanism and application practice in PHP development

May 09, 2024 pm 01:30 PM

Caching mechanism and application practice in PHP development

May 09, 2024 pm 01:30 PM

In PHP development, the caching mechanism improves performance by temporarily storing frequently accessed data in memory or disk, thereby reducing the number of database accesses. Cache types mainly include memory, file and database cache. Caching can be implemented in PHP using built-in functions or third-party libraries, such as cache_get() and Memcache. Common practical applications include caching database query results to optimize query performance and caching page output to speed up rendering. The caching mechanism effectively improves website response speed, enhances user experience and reduces server load.

What pitfalls should we pay attention to when designing distributed systems with Golang technology?

May 07, 2024 pm 12:39 PM

What pitfalls should we pay attention to when designing distributed systems with Golang technology?

May 07, 2024 pm 12:39 PM

Pitfalls in Go Language When Designing Distributed Systems Go is a popular language used for developing distributed systems. However, there are some pitfalls to be aware of when using Go, which can undermine the robustness, performance, and correctness of your system. This article will explore some common pitfalls and provide practical examples on how to avoid them. 1. Overuse of concurrency Go is a concurrency language that encourages developers to use goroutines to increase parallelism. However, excessive use of concurrency can lead to system instability because too many goroutines compete for resources and cause context switching overhead. Practical case: Excessive use of concurrency leads to service response delays and resource competition, which manifests as high CPU utilization and high garbage collection overhead.

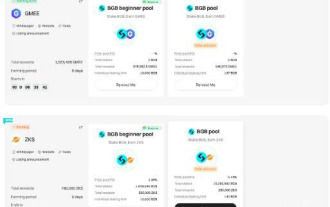

What is Bitget Launchpool? How to use Bitget Launchpool?

Jun 07, 2024 pm 12:06 PM

What is Bitget Launchpool? How to use Bitget Launchpool?

Jun 07, 2024 pm 12:06 PM

BitgetLaunchpool is a dynamic platform designed for all cryptocurrency enthusiasts. BitgetLaunchpool stands out with its unique offering. Here, you can stake your tokens to unlock more rewards, including airdrops, high returns, and a generous prize pool exclusive to early participants. What is BitgetLaunchpool? BitgetLaunchpool is a cryptocurrency platform where tokens can be staked and earned with user-friendly terms and conditions. By investing BGB or other tokens in Launchpool, users have the opportunity to receive free airdrops, earnings and participate in generous bonus pools. The income from pledged assets is calculated within T+1 hours, and the rewards are based on

Golang technology libraries and tools used in machine learning

May 08, 2024 pm 09:42 PM

Golang technology libraries and tools used in machine learning

May 08, 2024 pm 09:42 PM

Libraries and tools for machine learning in the Go language include: TensorFlow: a popular machine learning library that provides tools for building, training, and deploying models. GoLearn: A series of classification, regression and clustering algorithms. Gonum: A scientific computing library that provides matrix operations and linear algebra functions.

How to use caching in Golang distributed system?

Jun 01, 2024 pm 09:27 PM

How to use caching in Golang distributed system?

Jun 01, 2024 pm 09:27 PM

In the Go distributed system, caching can be implemented using the groupcache package. This package provides a general caching interface and supports multiple caching strategies, such as LRU, LFU, ARC and FIFO. Leveraging groupcache can significantly improve application performance, reduce backend load, and enhance system reliability. The specific implementation method is as follows: Import the necessary packages, set the cache pool size, define the cache pool, set the cache expiration time, set the number of concurrent value requests, and process the value request results.

The role of Golang technology in mobile IoT development

May 09, 2024 pm 03:51 PM

The role of Golang technology in mobile IoT development

May 09, 2024 pm 03:51 PM

With its high concurrency, efficiency and cross-platform nature, Go language has become an ideal choice for mobile Internet of Things (IoT) application development. Go's concurrency model achieves a high degree of concurrency through goroutines (lightweight coroutines), which is suitable for handling a large number of IoT devices connected at the same time. Go's low resource consumption helps run applications efficiently on mobile devices with limited computing and storage. Additionally, Go’s cross-platform support enables IoT applications to be easily deployed on a variety of mobile devices. The practical case demonstrates using Go to build a BLE temperature sensor application, communicating with the sensor through BLE and processing incoming data to read and display temperature readings.