nvidia-smi - NVIDIA System Management Interface program

nvidia-smi (also known as NVSMI) provides monitoring and management capabilities for NVIDIA Tesla, Quadro, GRID and GeForce devices. GeForce Titan series devices support most features, with very limited information provided for the rest of the GeForce brand. NVSMI is a cross-platform tool that supports all Linux distributions supported by the standard NVIDIA driver, as well as 64-bit versions of Windows starting with Windows Server 2008 R2.

The NVIDIA System Management Interface (nvidia-smi) is a command-line tool built on the NVIDIA Management Library (NVML), designed to assist in the management and monitoring of NVIDIA GPU devices.

This utility allows administrators to query GPU device state and, with the appropriate privileges, to modify it. It targets Tesla, GRID, Quadro and Titan X products, though other NVIDIA GPUs have limited support as well.

nvidia-smi ships with the NVIDIA GPU display driver on Linux, as well as on 64-bit Windows Server 2008 R2 and Windows 7. It can report query information as XML or as human-readable plain text, written either to standard output or to a file.
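Because the XML form is intended for programs, a script can consume it directly. The following is a minimal Python sketch, assuming that `nvidia-smi -q -x` emits one `gpu` element per device with `product_name`, `fb_memory_usage/used`, `fb_memory_usage/total` and `utilization/gpu_util` children. Element names can differ between driver versions, and the helper names and the trimmed sample document are illustrative, not part of the tool.

```python
import subprocess
import xml.etree.ElementTree as ET


def summarize_gpus(xml_text):
    """Return one dict per <gpu> element found in nvidia-smi XML output."""
    root = ET.fromstring(xml_text)
    summaries = []
    for gpu in root.iter("gpu"):
        summaries.append({
            "id": gpu.get("id"),  # assumed to hold the PCI bus id
            "name": gpu.findtext("product_name"),
            "memory_used": gpu.findtext("fb_memory_usage/used"),
            "memory_total": gpu.findtext("fb_memory_usage/total"),
            "gpu_util": gpu.findtext("utilization/gpu_util"),
        })
    return summaries


def query_gpus():
    """Run nvidia-smi and summarize its XML report (needs the NVIDIA driver)."""
    result = subprocess.run(["nvidia-smi", "-q", "-x"],
                            capture_output=True, text=True, check=True)
    return summarize_gpus(result.stdout)


# Hand-written, heavily trimmed sample so the parser can be tried without a GPU.
SAMPLE_XML = """<nvidia_smi_log>
  <gpu id="00000000:17:00.0">
    <product_name>NVIDIA GeForce RTX 3060</product_name>
    <fb_memory_usage><total>12051 MiB</total><used>10123 MiB</used></fb_memory_usage>
    <utilization><gpu_util>100 %</gpu_util></utilization>
  </gpu>
</nvidia_smi_log>"""

if __name__ == "__main__":
    for gpu in summarize_gpus(SAMPLE_XML):
        print(gpu)
```

On a machine with the driver installed, `query_gpus()` would replace the sample document with the live report. Missing elements simply come back as `None`, so the sketch degrades quietly if a driver version names a field differently.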

nvidia-smi

Refresh GPU information every second

nvidia-smi -l 1

List all GPU devices currently in the system

nvidia-smi -L

View current GPU clock speed, default clock speed and maximum possible clock speed

nvidia-smi -q -d CLOCK

nvidia-smi command

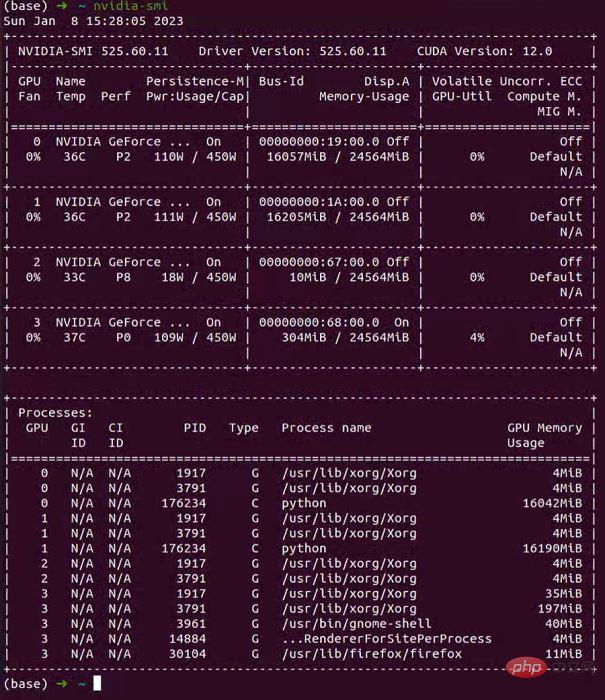

Type nvidia-smi directly on the command line. This should be a command that every "alchemist" (model trainer) is familiar with.

Note: It is recommended to use watch -n 0.5 nvidia-smi to dynamically observe the status of the GPU.

Running nvidia-smi produces an informative page like this:

Tue Nov  9 13:47:51 2021
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 495.44       Driver Version: 495.44       CUDA Version: 11.5     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA GeForce ...  Off  | 00000000:17:00.0 Off |                  N/A |
| 62%   78C    P2   155W / 170W |  10123MiB / 12051MiB |    100%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   1  NVIDIA GeForce ...  Off  | 00000000:65:00.0 Off |                  N/A |
|100%   92C    P2   136W / 170W |  10121MiB / 12053MiB |     99%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   2  NVIDIA GeForce ...  Off  | 00000000:B5:00.0 Off |                  N/A |
| 32%   34C    P8    12W / 170W |      5MiB / 12053MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   3  NVIDIA GeForce ...  Off  | 00000000:B6:00.0 Off |                  N/A |
| 30%   37C    P8    13W / 170W |      5MiB / 12053MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0   N/A  N/A      1258      G   /usr/lib/xorg/Xorg                  6MiB |
|    0   N/A  N/A     10426      C   ...a3/envs/JJ_env/bin/python    10111MiB |
|    1   N/A  N/A      1258      G   /usr/lib/xorg/Xorg                  4MiB |
|    1   N/A  N/A     10427      C   ...a3/envs/JJ_env/bin/python    10111MiB |
|    2   N/A  N/A      1258      G   /usr/lib/xorg/Xorg                  4MiB |
|    3   N/A  N/A      1258      G   /usr/lib/xorg/Xorg                  4MiB |
+-----------------------------------------------------------------------------+
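For scripted monitoring, the same figures can be requested as CSV rather than scraped from the table. The following is a minimal Python sketch, assuming the `--query-gpu` and `--format=csv,noheader,nounits` options of nvidia-smi. The helper names are illustrative, and the sample lines simply restate the readings shown above.

```python
import csv
import subprocess

# Fields requested from nvidia-smi, in the order they appear in each CSV row.
FIELDS = ["index", "temperature.gpu", "utilization.gpu",
          "memory.used", "memory.total"]


def parse_gpu_csv(text):
    """Turn 'csv,noheader,nounits' output into a list of dicts of integers."""
    rows = []
    lines = text.strip().splitlines()
    for record in csv.reader(lines, skipinitialspace=True):
        if not record:
            continue
        # Assumes every field is numeric; a value such as "[N/A]" would raise.
        row = dict(zip(FIELDS, (int(value) for value in record)))
        row["memory.percent"] = round(
            100 * row["memory.used"] / row["memory.total"], 1)
        rows.append(row)
    return rows


def query_gpu_usage():
    """Run nvidia-smi and parse the result (needs the NVIDIA driver)."""
    cmd = ["nvidia-smi",
           "--query-gpu=" + ",".join(FIELDS),
           "--format=csv,noheader,nounits"]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_gpu_csv(result.stdout)


# Sample rows restating the table above: index, temp (C), util (%), MiB used, MiB total.
SAMPLE_CSV = """0, 78, 100, 10123, 12051
1, 92, 99, 10121, 12053
2, 34, 0, 5, 12053
3, 37, 0, 5, 12053"""

if __name__ == "__main__":
    for row in parse_gpu_csv(SAMPLE_CSV):
        print(row)
```

Keeping the parser separate from the subprocess call means it can be exercised on saved output, which is convenient on machines without a GPU.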

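Memory usage and GPU utilization are of course the values we check most often, but in some situations (for example, when GPU cooling needs close monitoring) the other fields are useful too. The figure below summarizes the meaning of each field in the command's output: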

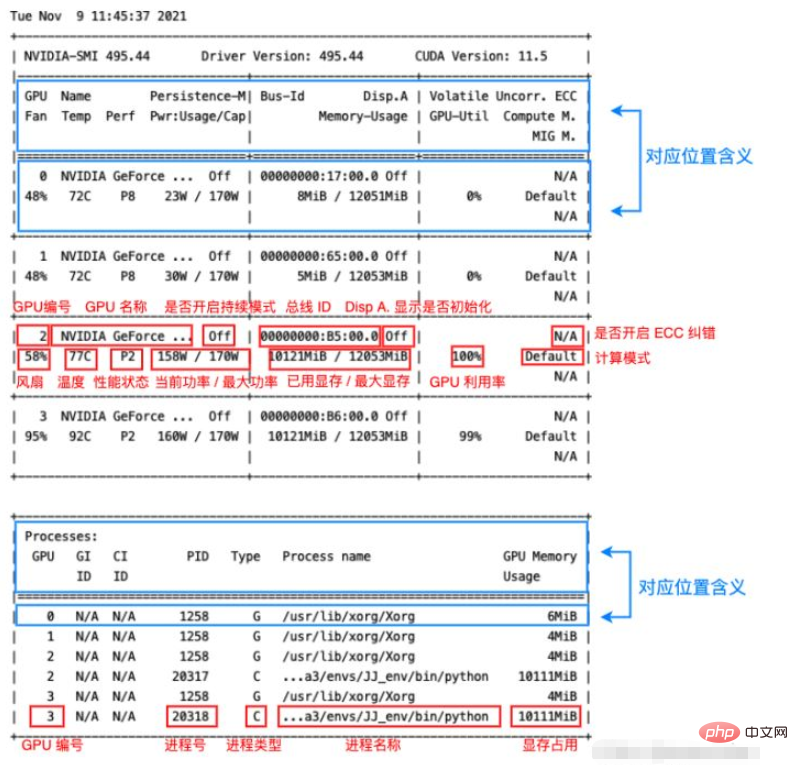

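As you can see, the meaning of each position is already indicated in the output itself (blue boxes), while the red boxes mark what each part of the output represents. Most fields are self-explanatory, so only the less intuitive ones are summarized here: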

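Fan: ranges from 0 to 100%. This is the fan speed the system is requesting; if the fan is physically blocked, the actual speed may not reach the displayed value.

Perf: the performance state, from P0 to P12. P0 is maximum performance and P12 is minimum performance.

Persistence-M: the status of persistence mode. Persistence mode uses more power, but new GPU applications take less time to start. It is shown as Off here.

Disp.A: Display Active, indicating whether a display has been initialized on the GPU.

Compute M.: the compute mode.

Volatile Uncorr. ECC: the count of uncorrectable ECC errors since the driver was last loaded. N/A means ECC is not available on this GPU.

Type: the process type. C is a compute process, G is a graphics process, and C+G is a process using both.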
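The fields described above can also be read programmatically. The following is a minimal Python sketch, assuming the `--query-gpu` field names `fan.speed`, `pstate`, `persistence_mode`, `display_active` and `compute_mode`, and assuming that unavailable values are printed as `[N/A]` or `[Not Supported]`. The helper names and the sample line are illustrative.

```python
import subprocess

FIELDS = ["index", "fan.speed", "pstate",
          "persistence_mode", "display_active", "compute_mode"]

# Spellings assumed to mean "value not available on this GPU".
MISSING = {"[N/A]", "N/A", "[Not Supported]"}


def normalize(field, raw):
    """Convert one raw CSV value into a Python value suited to its field."""
    value = raw.strip()
    if value in MISSING:
        return None
    if field in ("index", "fan.speed"):
        return int(value)                 # fan.speed is a percentage
    if field == "pstate":
        return int(value.lstrip("P"))     # "P8" -> 8, lower means faster
    if field in ("persistence_mode", "display_active"):
        return value == "Enabled"
    return value                          # e.g. compute_mode "Default"


def parse_status_line(line):
    """Parse one 'csv,noheader,nounits' row into a dict keyed by field name."""
    parts = line.split(",")
    return {field: normalize(field, raw) for field, raw in zip(FIELDS, parts)}


def query_status():
    """Run nvidia-smi and parse every row (needs the NVIDIA driver)."""
    cmd = ["nvidia-smi",
           "--query-gpu=" + ",".join(FIELDS),
           "--format=csv,noheader,nounits"]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return [parse_status_line(line)
            for line in result.stdout.splitlines() if line.strip()]


# Illustrative row matching GPU 0 in the table: 62% fan, P2, both modes off.
SAMPLE_LINE = "0, 62, P2, Disabled, Disabled, Default"

if __name__ == "__main__":
    print(parse_status_line(SAMPLE_LINE))
```

Mapping unavailable values to `None` keeps them distinct from a genuine reading of zero, which matters for fields such as fan speed.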

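Besides running nvidia-smi on its own, you can add options to inspect other aspects of the NVIDIA GPUs on the local machine. A few commonly used options are introduced below; for the rest, consult the manual: man nvidia-smi.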

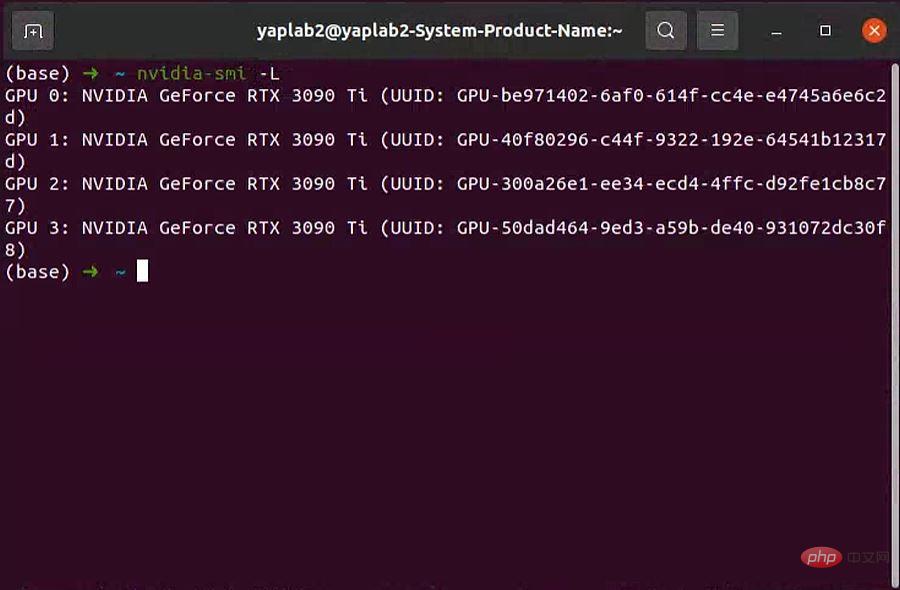

The -L option lists the GPUs attached to the system.

nvidia-smi -L

# Output:
GPU 0: NVIDIA GeForce RTX 3060 (UUID: GPU-55275dff-****-****-****-6408855fced9)
GPU 1: NVIDIA GeForce RTX 3060 (UUID: GPU-0a1e7f37-****-****-****-df9a8bce6d6b)
GPU 2: NVIDIA GeForce RTX 3060 (UUID: GPU-38e2771e-****-****-****-d5cbb85c58d8)
GPU 3: NVIDIA GeForce RTX 3060 (UUID: GPU-8b45b004-****-****-****-46c05975a9f0)

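GPU UUID: this value is the globally unique, immutable alphanumeric identifier of the GPU. It does not correspond to any physical label on the board.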
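Since each line of the -L listing follows the same pattern, it is easy to turn into structured data. The following is a minimal Python sketch, assuming every line has the form `GPU <index>: <name> (UUID: <uuid>)` as in the listing above. The helper names are illustrative, and the sample keeps the masked UUIDs exactly as printed.

```python
import re
import subprocess

# One line of `nvidia-smi -L`: "GPU 0: <name> (UUID: <uuid>)".
GPU_LINE = re.compile(r"^GPU (\d+): (.+) \(UUID: ([^)]+)\)$")


def parse_gpu_list(text):
    """Extract index, name and UUID from each line of `nvidia-smi -L` output."""
    gpus = []
    for line in text.splitlines():
        match = GPU_LINE.match(line.strip())
        if match:
            index, name, uuid = match.groups()
            gpus.append({"index": int(index), "name": name, "uuid": uuid})
    return gpus


def list_gpus():
    """Run `nvidia-smi -L` and parse it (needs the NVIDIA driver)."""
    result = subprocess.run(["nvidia-smi", "-L"],
                            capture_output=True, text=True, check=True)
    return parse_gpu_list(result.stdout)


# The listing from the article, with UUIDs masked as in the original.
SAMPLE_LIST = """GPU 0: NVIDIA GeForce RTX 3060 (UUID: GPU-55275dff-****-****-****-6408855fced9)
GPU 1: NVIDIA GeForce RTX 3060 (UUID: GPU-0a1e7f37-****-****-****-df9a8bce6d6b)
GPU 2: NVIDIA GeForce RTX 3060 (UUID: GPU-38e2771e-****-****-****-d5cbb85c58d8)
GPU 3: NVIDIA GeForce RTX 3060 (UUID: GPU-8b45b004-****-****-****-46c05975a9f0)"""

if __name__ == "__main__":
    for gpu in parse_gpu_list(SAMPLE_LIST):
        print(gpu["index"], gpu["name"], gpu["uuid"])
```

Lines that do not match the pattern are skipped rather than treated as errors, so extra output from a different driver version would not break the sketch.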

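The -i option selects a specific GPU. It is mostly used to restrict a query to one GPU.

The -q option shows all available information about the GPUs. Combine it with -i to show the details of a single GPU.

For example: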

nvidia-smi -i 0 -q

The output is too long to list here. Try it yourself; the information is very complete.

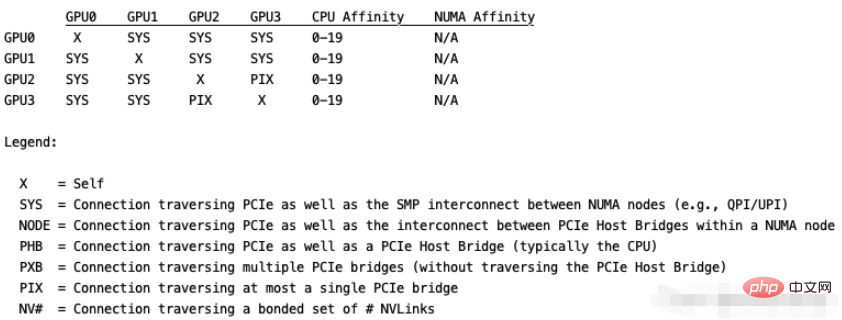

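topo displays the topology of the connections in a multi-GPU system. It is usually used with the -m option, i.e. nvidia-smi topo -m; see the manual for the other options.

The output is as follows. It could not be aligned in a code block, so a screenshot is used instead: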
