LLaVA, the "Lava Alpaca", is here: like GPT-4, it can view pictures and chat, requires no invitation code, and can be tried online


When will GPT-4's image recognition capabilities go live? There is still no answer to this question.

But the research community could not wait any longer and has started building its own. The most popular effort is a project called MiniGPT-4, which demonstrates many capabilities similar to GPT-4, such as generating detailed image descriptions and creating websites from handwritten drafts. The authors also observed other emerging capabilities, including writing stories and poems based on given images, proposing solutions to problems shown in images, and teaching users how to cook from food photos. The project received nearly 10,000 stars within 3 days of its launch.

The project introduced today, LLaVA (Large Language and Vision Assistant), is similar: it is a large multimodal model jointly released by researchers from the University of Wisconsin-Madison, Microsoft Research, and Columbia University.

- Paper link: https://arxiv.org/pdf/2304.08485.pdf

- Project link: https://llava-vl.github.io/

The model shows image and text understanding abilities close to those of multimodal GPT-4: it achieved a relative score of 85.1% compared to GPT-4. When fine-tuned on Science QA, the synergy of LLaVA and GPT-4 achieves a new SoTA with 92.53% accuracy.

The following are trial results from Heart of the Machine (see the end of the article for more results):

Humans interact with the world through multiple channels such as vision and language, because each channel has its own advantages in representing and conveying certain concepts, and using several channels together helps us understand the world better. One of the core aspirations of artificial intelligence is to develop a general-purpose assistant that can effectively follow multimodal instructions, such as visual or verbal instructions, satisfy human intentions, and complete various tasks in real environments.

To this end, there has been a trend in the community to develop language-augmented vision models. Such models have powerful capabilities in open-world visual understanding, such as classification, detection, segmentation, and captioning, as well as visual generation and visual editing. Each task is solved independently by a single large vision model, with the needs of the task considered only implicitly in the model design. Furthermore, language is used only to describe image content. While this gives language an important role in mapping visual signals to linguistic semantics (a common channel for human communication), it results in models that usually have fixed interfaces, with limited interactivity and adaptability to user instructions.

Large language models (LLMs), on the other hand, have shown that language can play a broader role: as a universal interactive interface for general-purpose intelligent assistants. With a common interface, various task instructions can be expressed explicitly in language and guide an end-to-end trained neural network assistant to switch modes and complete the task. For example, the recent success of ChatGPT and GPT-4 demonstrated the power of LLMs in following human instructions to complete tasks, and sparked a wave of open-source LLM development. Among them, LLaMA is an open-source LLM with performance similar to GPT-3. Alpaca, Vicuna, and GPT-4-LLM use various machine-generated, high-quality instruction-following samples to improve the alignment of LLMs, showing impressive performance compared to proprietary LLMs. Unfortunately, the input to these models is text only.

In this paper, the researchers propose visual instruction-tuning, the first attempt to extend instruction-tuning to the multimodal space, paving the way for building a general-purpose visual assistant.

Specifically, this paper makes the following contributions:

- Multimodal instruction data. One of the key challenges today is the lack of vision-language instruction-following data. The paper proposes a data reformation approach that uses ChatGPT/GPT-4 to convert image-text pairs into an appropriate instruction-following format;

- Large multimodal models. The researchers developed a large multimodal model (LMM), LLaVA, by connecting CLIP's open-source visual encoder with the language decoder LLaMA, and fine-tuned it end-to-end on the generated vision-language instruction data. Empirical studies verify the effectiveness of using generated data for LMM instruction-tuning and offer practical tips for building a general-purpose instruction-following visual agent. Combined with GPT-4, LLaVA achieves state-of-the-art performance on Science QA, a multimodal reasoning dataset.

- Open source. The researchers released the following assets to the public: the generated multi-modal instruction data, code libraries for data generation and model training, model checkpoints, and visual chat demonstrations.
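To make the first contribution concrete, here is a minimal sketch of what turning an image-text pair into an instruction-style sample could look like. It does not call ChatGPT/GPT-4; it only shows the simplest template-based expansion of a caption into a single-turn conversation. The field names, the instruction templates, and the function `caption_to_instruction` are all hypothetical and are not taken from the released data format.

```python
import json

# Hypothetical instruction templates; the prompts used by the authors may differ.
DESCRIBE_INSTRUCTIONS = [
    "Describe the image concisely.",
    "Provide a brief description of the given image.",
]


def caption_to_instruction(image_path, caption, template_index=0):
    """Wrap one image-caption pair as a single-turn instruction-following sample."""
    instruction = DESCRIBE_INSTRUCTIONS[template_index % len(DESCRIBE_INSTRUCTIONS)]
    return {
        "image": image_path,  # assumed field name
        "conversations": [    # assumed field name
            {"role": "human", "content": instruction},
            {"role": "assistant", "content": caption},
        ],
    }


sample = caption_to_instruction(
    "images/0001.jpg", "A dog catching a frisbee in a park."
)
print(json.dumps(sample, indent=2))
```

A template like this only produces simple description tasks; the approach described above relies on ChatGPT/GPT-4 to produce the instruction data from image-text pairs.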

LLaVA Architecture

The main goal of the paper is to make effective use of the capabilities of pre-trained LLMs and vision models. The network architecture is shown in Figure 1. The paper chooses the LLaMA model as the LLM fφ(・) because its effectiveness has been demonstrated in several open-source, language-only instruction-tuning works.

For an input image X_v, the paper uses the pre-trained CLIP visual encoder ViT-L/14, which produces the visual feature Z_v = g(X_v). The grid features before and after the last Transformer layer were used in the experiments. A simple linear layer connects the image features to the word embedding space. Specifically, a trainable projection matrix W transforms Z_v into language embedding tokens H_v, which have the same dimensionality as the word embedding space of the language model:

H_v = W · Z_v, with Z_v = g(X_v)

This yields a sequence of visual tokens H_v. The simple projection scheme is lightweight and low-cost, and allows fast iteration on data-centric experiments. One can also consider more complex (but more expensive) schemes for connecting image and language features, such as the gated cross-attention mechanism in Flamingo and the Q-former in BLIP-2, or other visual encoders that provide object-level features, such as SAM.
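The linear projection described in this section can be sketched in a few lines of plain Python. This is an illustration only, not the authors' implementation: the helper names `make_projection` and `project`, the random initialisation, and the toy sizes are assumptions. In a real setup the grid features would come from the CLIP encoder and W would be learned during training.

```python
import random


def make_projection(d_vision, d_embed, seed=0):
    """Create a randomly initialised projection matrix W of shape (d_embed, d_vision)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(d_vision)] for _ in range(d_embed)]


def project(W, Z_v):
    """Apply H_v = W · Z_v to each grid feature (each row of Z_v is one patch feature)."""
    return [[sum(w * z for w, z in zip(row, feat)) for row in W] for feat in Z_v]


# Toy sizes so the example runs instantly. Real encoder and LLM widths are
# far larger; the numbers here are placeholders, not values from the paper.
num_patches, d_vision, d_embed = 4, 8, 16

rng = random.Random(1)
Z_v = [[rng.random() for _ in range(d_vision)] for _ in range(num_patches)]  # stand-in for g(X_v)
W = make_projection(d_vision, d_embed)
H_v = project(W, Z_v)

# One visual token per grid feature, each with the word-embedding width.
print(len(H_v), len(H_v[0]))
```

Because each visual token ends up with the same width as a word embedding, the language model can consume visual tokens alongside text tokens without any change to its own architecture, which is what keeps this scheme cheap.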

Experimental results

Multimodal chatbot

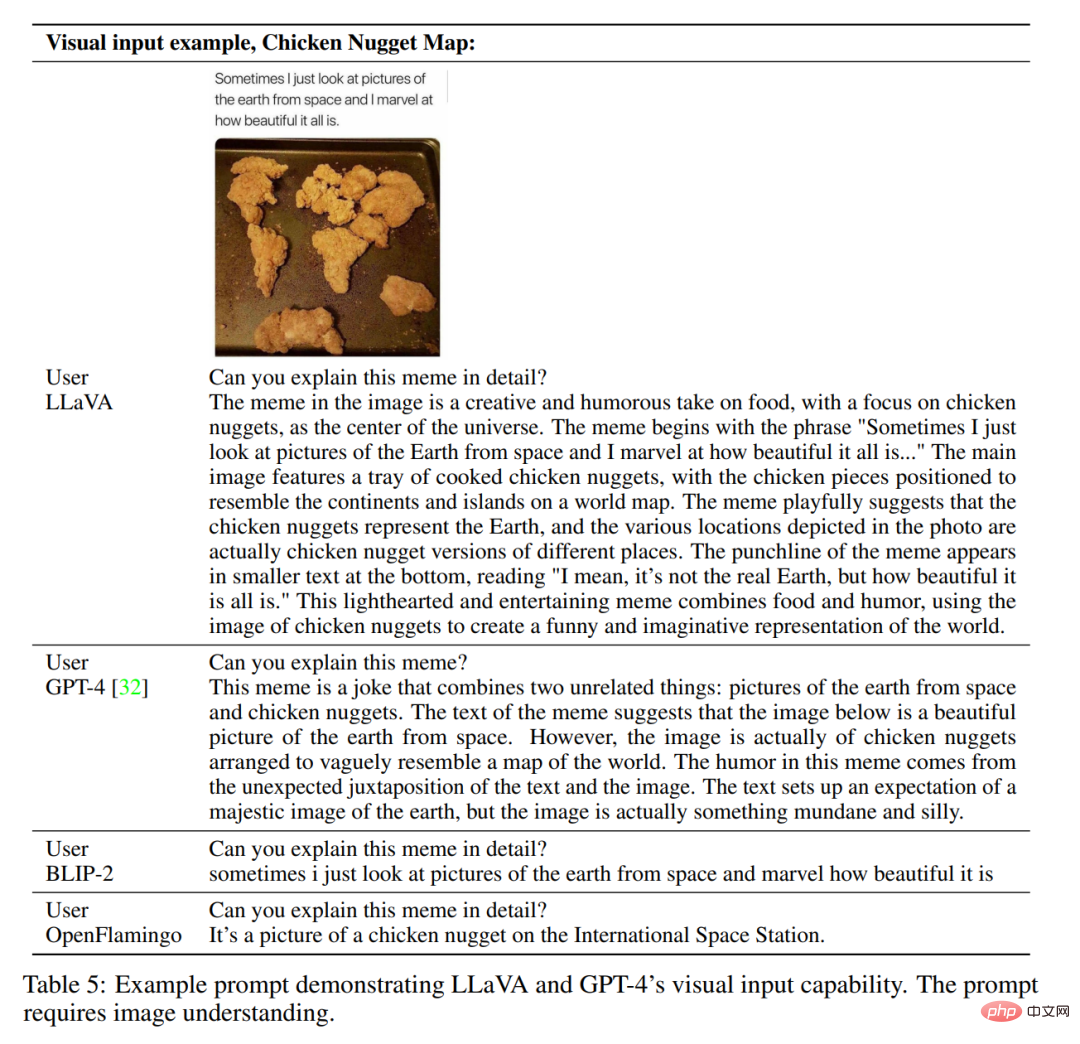

The researchers developed a chatbot demo to showcase LLaVA's image understanding and dialogue capabilities. To further study how LLaVA processes visual input and to demonstrate its ability to follow instructions, the researchers first used examples from the original GPT-4 paper, as shown in Tables 4 and 5. The prompts used need to fit the image content. For comparison, the paper quotes the prompts and results of the multimodal GPT-4 model from the GPT-4 paper.

Surprisingly, although LLaVA was trained on a small multimodal instruction dataset (about 80K unique images), it shows reasoning results very similar to those of multimodal GPT-4 on the two examples above. Note that both images lie outside LLaVA's training data, yet LLaVA is able to understand the scenes and follow the question instructions. In contrast, BLIP-2 and OpenFlamingo focus on describing the images rather than answering the user's instructions appropriately. More examples are shown in Figure 3, Figure 4, and Figure 5.

Quantitative evaluation results are shown in Table 3.

ScienceQA

ScienceQA contains 21k multimodal multiple-choice questions covering 3 subjects, 26 topics, 127 categories, and 379 skills, with rich domain diversity. The benchmark is divided into training, validation, and test splits with 12726, 4241, and 4241 samples respectively. The paper compares against representative methods, including the GPT-3.5 model (text-davinci-002) with and without Chain of Thought (CoT), LLaMA-Adapter, and Multimodal Chain of Thought (MM-CoT) [57], the current SoTA method on this dataset. The results are shown in Table 6.
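As a quick sanity check on the split sizes quoted above, the short snippet below adds them up and prints each split's share. It uses only the three numbers given in the text; the dictionary keys are illustrative labels.

```python
# Split sizes as quoted in the text.
splits = {"train": 12726, "val": 4241, "test": 4241}

total = sum(splits.values())
for name, n in splits.items():
    print(f"{name}: {n} ({n / total:.1%})")
print("total:", total)
```

The three splits sum to 21,208 questions, consistent with the "21k" figure, in roughly a 60/20/20 ratio.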

Trial feedback

On the demo page linked from the paper, Heart of the Machine also tried entering some pictures and instructions. The first was a common question-answering task: counting the people in a picture. The tests showed that smaller targets are missed when counting people, overlapping people are misrecognized, and gender is sometimes misidentified.

Next, we tried some generation tasks, such as giving the picture a title or telling a story based on it. The model's output still leans toward describing the image content, and its generation capabilities need to be strengthened.

In this photo, the number of people is identified accurately even though the bodies overlap. In terms of image description and understanding, the work still has highlights, and there is room for derivative creative work.
