How to use @KafkaListener to concurrently receive messages in batches in Spring Boot

Step 1: Concurrent consumption

Start with the code. The key point is that we use ConcurrentKafkaListenerContainerFactory and call factory.setConcurrency(4). My topic has 4 partitions, so to speed up consumption I set the concurrency to 4, which creates 4 KafkaMessageListenerContainer instances.

    @Bean
    KafkaListenerContainerFactory<ConcurrentMessageListenerContainer<String, String>> kafkaListenerContainerFactory() {
        ConcurrentKafkaListenerContainerFactory<String, String> factory = new ConcurrentKafkaListenerContainerFactory<>();
        factory.setConsumerFactory(consumerFactory());
        factory.setConcurrency(4);
        factory.setBatchListener(true);
        factory.getContainerProperties().setPollTimeout(3000);
        return factory;
    }

Note that you can also add spring.kafka.listener.concurrency=3 directly to application.properties and then use @KafkaListener for concurrent consumption. Spring Boot applies this property to the listener container factory it auto-configures, so it is an alternative to calling setConcurrency on a factory bean you define yourself.
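To see why the concurrency value is usually matched to the partition count, here is a minimal plain-Java sketch. It assumes partitions are spread over consumers in a simple round-robin fashion, which is a simplification of what Kafka's group assignor actually does; the class and method names are hypothetical and not part of Spring Kafka.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model: how a fixed number of partitions spreads over N concurrent consumers. */
final class ConcurrencySketch {

    /** Spreads partition numbers 0..partitions-1 over `concurrency` consumers, round-robin. */
    static List<List<Integer>> distribute(int partitions, int concurrency) {
        List<List<Integer>> consumers = new ArrayList<>();
        for (int c = 0; c < concurrency; c++) {
            consumers.add(new ArrayList<>());
        }
        for (int p = 0; p < partitions; p++) {
            consumers.get(p % concurrency).add(p);
        }
        return consumers;
    }

    public static void main(String[] args) {
        System.out.println(distribute(4, 4)); // [[0], [1], [2], [3]]
        System.out.println(distribute(4, 3)); // [[0, 3], [1], [2]]
        System.out.println(distribute(4, 5)); // [[0], [1], [2], [3], []]
    }
}
```

With 4 partitions, a concurrency of 4 gives every consumer exactly one partition, a concurrency of 3 makes one consumer handle two, and a concurrency of 5 leaves one consumer with nothing to do.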

Step 2: Batch consumption

Next comes batch consumption. The key points are factory.setBatchListener(true) and propsMap.put(ConsumerConfig.MAX_POLL_RECORDS_CONFIG, 50). The first enables batch consumption; the second sets the maximum number of records a single batch can contain.

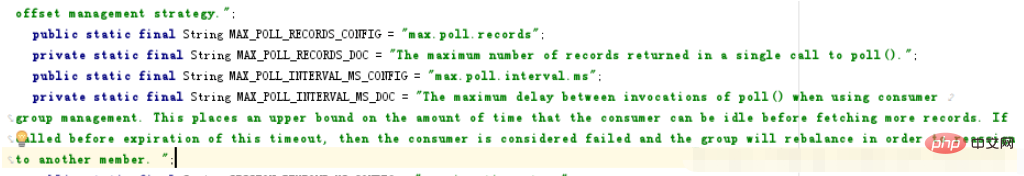

Note that setting ConsumerConfig.MAX_POLL_RECORDS_CONFIG to 50 does not mean the consumer waits until 50 messages have accumulated. The official explanation is "The maximum number of records returned in a single call to poll()." In other words, 50 is an upper bound on the number of records returned by one poll.
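The cap-not-threshold behavior can be illustrated with a small plain-Java model. This is only a sketch: the poll method below is a hypothetical stand-in that drains an in-memory queue, not the real KafkaConsumer API, and it ignores the poll timeout entirely.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

/** Toy model of poll(): hands back what is available, capped at maxPollRecords. */
final class PollCapSketch {

    // Hypothetical stand-in for a consumer poll; it never waits for a full batch.
    static List<String> poll(Queue<String> available, int maxPollRecords) {
        List<String> batch = new ArrayList<>();
        while (batch.size() < maxPollRecords && !available.isEmpty()) {
            batch.add(available.remove());
        }
        return batch;
    }

    public static void main(String[] args) {
        Queue<String> partition = new ArrayDeque<>();
        for (int i = 0; i < 120; i++) {
            partition.add("msg-" + i);
        }
        // 120 buffered records with a cap of 50: batches of 50, 50, then 20.
        System.out.println(poll(partition, 50).size());
        System.out.println(poll(partition, 50).size());
        System.out.println(poll(partition, 50).size());
    }
}
```

The third batch holds only 20 records: the listener receives whatever is available, never more than the cap.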

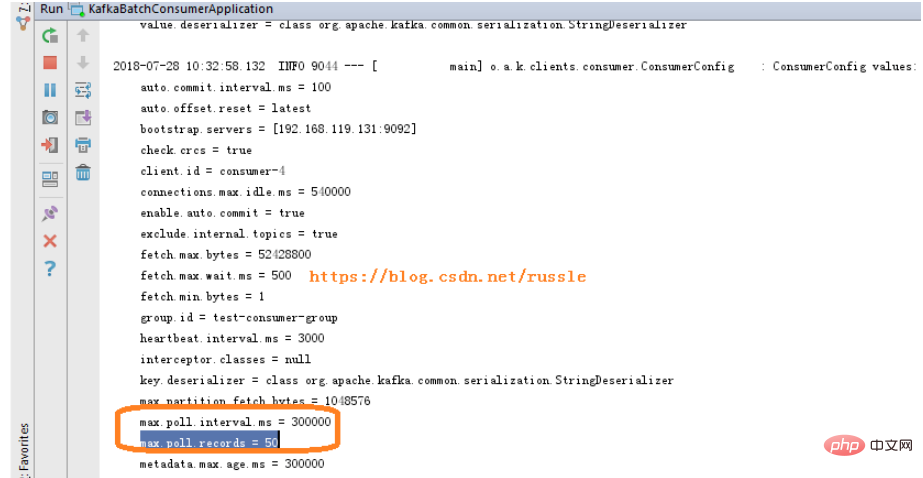

The startup log also shows max.poll.interval.ms = 300000. This does not mean that poll() is called once every 300 seconds. It is the maximum time allowed between two consecutive poll() calls: the listener container polls again as soon as the previous batch has been processed, and each poll returns up to 50 records. If processing a batch takes longer than max.poll.interval.ms, the consumer is considered failed and the group rebalances.

The official explanation of max.poll.interval.ms is "The maximum delay between invocations of poll() when using consumer group management. This places an upper bound on the amount of time that the consumer can be idle before fetching more records. If poll() is not called before expiration of this timeout, then the consumer is considered failed and the group will rebalance in order to reassign the partitions to another member."
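A rough way to reason about the two settings together is to check whether a full batch can be processed before the poll interval expires. The sketch below is plain arithmetic under stated assumptions: the per-record processing times are hypothetical, and the helper name is made up for illustration.

```java
/** Back-of-the-envelope check: does a full batch fit inside max.poll.interval.ms? */
final class PollIntervalBudget {

    /** True when processing one full batch takes less than max.poll.interval.ms. */
    static boolean fitsWithinPollInterval(int maxPollRecords, long perRecordMillis, long maxPollIntervalMs) {
        long worstCaseBatchMillis = Math.multiplyExact((long) maxPollRecords, perRecordMillis);
        return worstCaseBatchMillis < maxPollIntervalMs;
    }

    public static void main(String[] args) {
        long maxPollIntervalMs = 300_000L; // value shown in the startup log
        int maxPollRecords = 50;           // value set in consumerConfigs()

        // Hypothetical processing costs per record.
        System.out.println(fitsWithinPollInterval(maxPollRecords, 200L, maxPollIntervalMs));   // true: 10 s per batch
        System.out.println(fitsWithinPollInterval(maxPollRecords, 7_000L, maxPollIntervalMs)); // false: 350 s per batch
    }
}
```

If the check fails for your workload, lowering max.poll.records or raising max.poll.interval.ms are the two knobs the configuration above exposes.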

    @Bean
    KafkaListenerContainerFactory<ConcurrentMessageListenerContainer<String, String>> kafkaListenerContainerFactory() {
        ConcurrentKafkaListenerContainerFactory<String, String> factory = new ConcurrentKafkaListenerContainerFactory<>();
        factory.setConsumerFactory(consumerFactory());
        factory.setConcurrency(4);                              // one container per partition
        factory.setBatchListener(true);                         // deliver a List of records per call
        factory.getContainerProperties().setPollTimeout(3000);  // poll timeout in milliseconds
        return factory;
    }

    // Not shown in the original; a typical definition, assumed here so the factory above compiles.
    @Bean
    public ConsumerFactory<String, String> consumerFactory() {
        return new DefaultKafkaConsumerFactory<>(consumerConfigs());
    }

    // propsConfig is the author's own configuration holder (its definition is not shown).
    @Bean
    public Map<String, Object> consumerConfigs() {
        Map<String, Object> propsMap = new HashMap<>();
        propsMap.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, propsConfig.getBroker());
        propsMap.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, propsConfig.getEnableAutoCommit());
        propsMap.put(ConsumerConfig.AUTO_COMMIT_INTERVAL_MS_CONFIG, "100");
        propsMap.put(ConsumerConfig.SESSION_TIMEOUT_MS_CONFIG, "15000");
        propsMap.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
        propsMap.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
        propsMap.put(ConsumerConfig.GROUP_ID_CONFIG, propsConfig.getGroupId());
        propsMap.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, propsConfig.getAutoOffsetReset());
        propsMap.put(ConsumerConfig.MAX_POLL_RECORDS_CONFIG, 50); // upper bound per poll, not a threshold
        return propsMap;
    }

    import java.util.List;
    import java.util.Optional;

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.kafka.annotation.TopicPartition;
    import org.springframework.stereotype.Component;

    @Component // assumption: the class must be a Spring bean for @KafkaListener to be detected
    public class MyListener {

        // Assumption: an SLF4J logger; the original does not show how log is declared.
        private static final Logger log = LoggerFactory.getLogger(MyListener.class);

        private static final String TOPIC = "topic02";

        // Receives batches from partition 0 only.
        @KafkaListener(id = "id0", topicPartitions = { @TopicPartition(topic = TOPIC, partitions = { "0" }) })
        public void listenPartition0(List<ConsumerRecord<?, ?>> records) {
            log.info("Id0 Listener, Thread ID: {}", Thread.currentThread().getId());
            log.info("Id0 records size {}", records.size());
            for (ConsumerRecord<?, ?> record : records) {
                Optional<?> kafkaMessage = Optional.ofNullable(record.value());
                log.info("Received: {}", record);
                if (kafkaMessage.isPresent()) { // skip records whose value is null
                    Object message = record.value();
                    String topic = record.topic();
                    log.info("p0 Received message={} from topic={}", message, topic);
                }
            }
        }

        // Receives batches from partition 1 only.
        @KafkaListener(id = "id1", topicPartitions = { @TopicPartition(topic = TOPIC, partitions = { "1" }) })
        public void listenPartition1(List<ConsumerRecord<?, ?>> records) {
            log.info("Id1 Listener, Thread ID: {}", Thread.currentThread().getId());
            log.info("Id1 records size {}", records.size());
            for (ConsumerRecord<?, ?> record : records) {
                Optional<?> kafkaMessage = Optional.ofNullable(record.value());
                log.info("Received: {}", record);
                if (kafkaMessage.isPresent()) { // skip records whose value is null
                    Object message = record.value();
                    String topic = record.topic();
                    log.info("p1 Received message={} from topic={}", message, topic);
                }
            }
        }
    }

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

How Springboot integrates Jasypt to implement configuration file encryption

Jun 01, 2023 am 08:55 AM

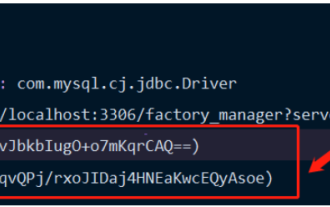

How Springboot integrates Jasypt to implement configuration file encryption

Jun 01, 2023 am 08:55 AM

Introduction to Jasypt Jasypt is a java library that allows a developer to add basic encryption functionality to his/her project with minimal effort and does not require a deep understanding of how encryption works. High security for one-way and two-way encryption. , standards-based encryption technology. Encrypt passwords, text, numbers, binaries... Suitable for integration into Spring-based applications, open API, for use with any JCE provider... Add the following dependency: com.github.ulisesbocchiojasypt-spring-boot-starter2. 1.1Jasypt benefits protect our system security. Even if the code is leaked, the data source can be guaranteed.

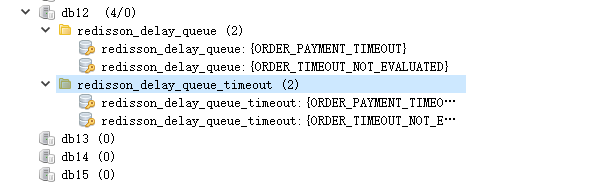

How SpringBoot integrates Redisson to implement delay queue

May 30, 2023 pm 02:40 PM

How SpringBoot integrates Redisson to implement delay queue

May 30, 2023 pm 02:40 PM

Usage scenario 1. The order was placed successfully but the payment was not made within 30 minutes. The payment timed out and the order was automatically canceled. 2. The order was signed and no evaluation was conducted for 7 days after signing. If the order times out and is not evaluated, the system defaults to a positive rating. 3. The order is placed successfully. If the merchant does not receive the order for 5 minutes, the order is cancelled. 4. The delivery times out, and push SMS reminder... For scenarios with long delays and low real-time performance, we can Use task scheduling to perform regular polling processing. For example: xxl-job Today we will pick

How to use Redis to implement distributed locks in SpringBoot

Jun 03, 2023 am 08:16 AM

How to use Redis to implement distributed locks in SpringBoot

Jun 03, 2023 am 08:16 AM

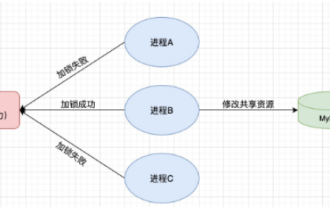

1. Redis implements distributed lock principle and why distributed locks are needed. Before talking about distributed locks, it is necessary to explain why distributed locks are needed. The opposite of distributed locks is stand-alone locks. When we write multi-threaded programs, we avoid data problems caused by operating a shared variable at the same time. We usually use a lock to mutually exclude the shared variables to ensure the correctness of the shared variables. Its scope of use is in the same process. If there are multiple processes that need to operate a shared resource at the same time, how can they be mutually exclusive? Today's business applications are usually microservice architecture, which also means that one application will deploy multiple processes. If multiple processes need to modify the same row of records in MySQL, in order to avoid dirty data caused by out-of-order operations, distribution needs to be introduced at this time. The style is locked. Want to achieve points

How to solve the problem that springboot cannot access the file after reading it into a jar package

Jun 03, 2023 pm 04:38 PM

How to solve the problem that springboot cannot access the file after reading it into a jar package

Jun 03, 2023 pm 04:38 PM

Springboot reads the file, but cannot access the latest development after packaging it into a jar package. There is a situation where springboot cannot read the file after packaging it into a jar package. The reason is that after packaging, the virtual path of the file is invalid and can only be accessed through the stream. Read. The file is under resources publicvoidtest(){Listnames=newArrayList();InputStreamReaderread=null;try{ClassPathResourceresource=newClassPathResource("name.txt");Input

Comparison and difference analysis between SpringBoot and SpringMVC

Dec 29, 2023 am 11:02 AM

Comparison and difference analysis between SpringBoot and SpringMVC

Dec 29, 2023 am 11:02 AM

SpringBoot and SpringMVC are both commonly used frameworks in Java development, but there are some obvious differences between them. This article will explore the features and uses of these two frameworks and compare their differences. First, let's learn about SpringBoot. SpringBoot was developed by the Pivotal team to simplify the creation and deployment of applications based on the Spring framework. It provides a fast, lightweight way to build stand-alone, executable

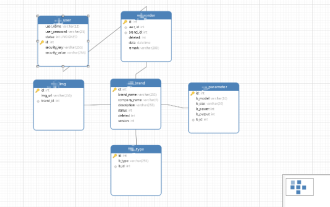

How to implement Springboot+Mybatis-plus without using SQL statements to add multiple tables

Jun 02, 2023 am 11:07 AM

How to implement Springboot+Mybatis-plus without using SQL statements to add multiple tables

Jun 02, 2023 am 11:07 AM

When Springboot+Mybatis-plus does not use SQL statements to perform multi-table adding operations, the problems I encountered are decomposed by simulating thinking in the test environment: Create a BrandDTO object with parameters to simulate passing parameters to the background. We all know that it is extremely difficult to perform multi-table operations in Mybatis-plus. If you do not use tools such as Mybatis-plus-join, you can only configure the corresponding Mapper.xml file and configure The smelly and long ResultMap, and then write the corresponding sql statement. Although this method seems cumbersome, it is highly flexible and allows us to

How SpringBoot customizes Redis to implement cache serialization

Jun 03, 2023 am 11:32 AM

How SpringBoot customizes Redis to implement cache serialization

Jun 03, 2023 am 11:32 AM

1. Customize RedisTemplate1.1, RedisAPI default serialization mechanism. The API-based Redis cache implementation uses the RedisTemplate template for data caching operations. Here, open the RedisTemplate class and view the source code information of the class. publicclassRedisTemplateextendsRedisAccessorimplementsRedisOperations, BeanClassLoaderAware{//Declare key, Various serialization methods of value, the initial value is empty @NullableprivateRedisSe

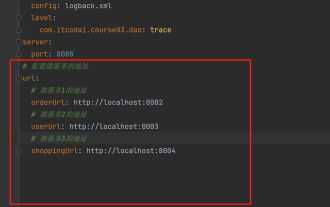

How to get the value in application.yml in springboot

Jun 03, 2023 pm 06:43 PM

How to get the value in application.yml in springboot

Jun 03, 2023 pm 06:43 PM

In projects, some configuration information is often needed. This information may have different configurations in the test environment and the production environment, and may need to be modified later based on actual business conditions. We cannot hard-code these configurations in the code. It is best to write them in the configuration file. For example, you can write this information in the application.yml file. So, how to get or use this address in the code? There are 2 methods. Method 1: We can get the value corresponding to the key in the configuration file (application.yml) through the ${key} annotated with @Value. This method is suitable for situations where there are relatively few microservices. Method 2: In actual projects, When business is complicated, logic