You can play Genshin Impact just by talking! AI switches characters and attacks enemies. Netizens: 'Ayaka, use Kamisato Art: Soumetsu'

Among Chinese-developed games that have become popular worldwide over the past two years, Genshin Impact is the clear standout.

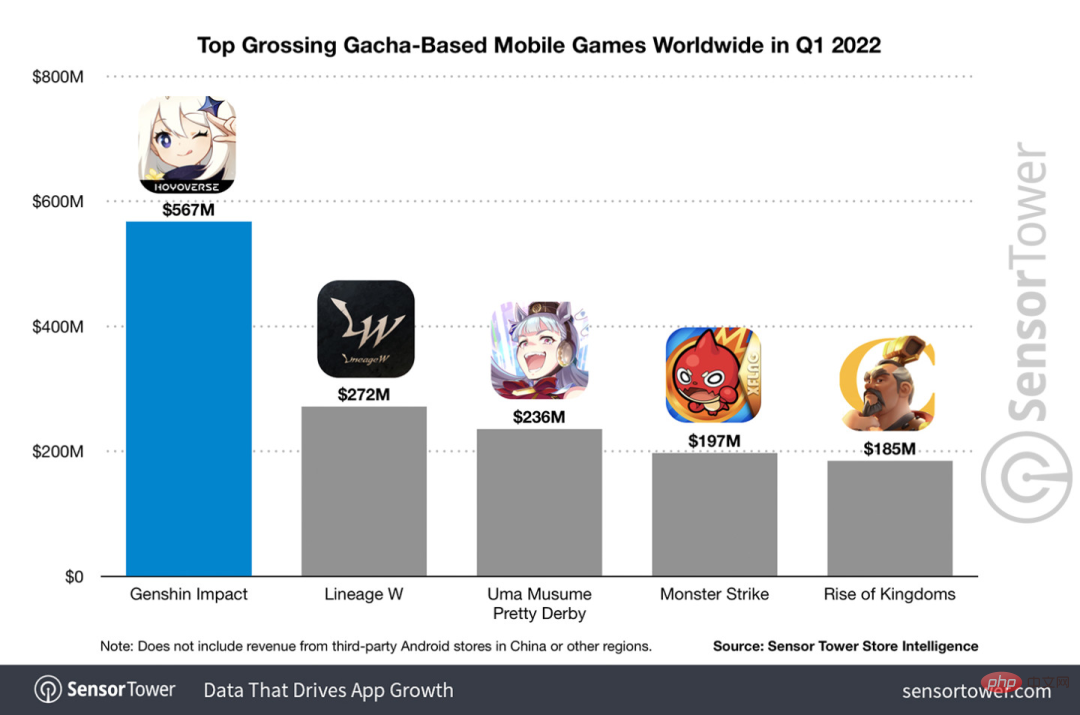

According to a Q1 mobile game revenue report released in May this year, Genshin Impact ranked first among gacha mobile games by a wide margin, with US$567 million. The report also showed that, just 18 months after launch, Genshin Impact's total revenue on mobile alone had exceeded US$3 billion (approximately RM13 billion).

Version 2.8, the last island version before Sumeru opens, has now been out for a while. After a long "grass-growing" period (a content drought), players finally have new story content and areas to explore.

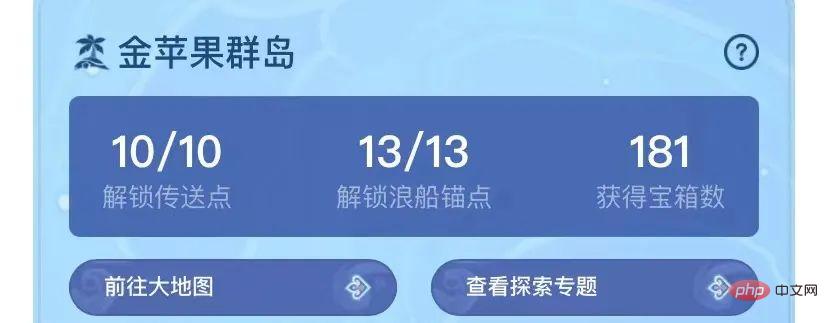

But plenty of dedicated grinders (so-called "liver emperors") have already fully explored the island, and the grass has started growing again.

182 treasure chests in total, plus 1 Mora box (not counted)

A content drought is nothing to fear, though: the Genshin Impact community never runs short of creative projects.

Sure enough, during this drought one player used X-VLM, WeNet, and STARK to build a voice-control project for playing Genshin Impact.

For example, when he said "Use Tactic 3 to attack the Pyro Slime in the middle", Zhongli first put up his shield, then Ayaka dashed forward, said "Sorry", and the team wiped out all four Pyro Slimes.

Similarly, after he said "Attack the large Hilichurls in the middle", Diona used her E to set up a shield, and Ayaka followed up with her own E and a three-hit normal attack combo, cleanly taking down two large Hilichurls.
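The commands above combine a preset tactic with a spoken target. As a rough sketch of how such a transcript might be turned into an action, the code below parses the command text and picks one enemy from a list of detections. Everything here is hypothetical: the English command grammar, the `Command` type, and the label-matching shortcut, which stands in for the text grounding that the real project delegates to X-VLM. This is not the project's code.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass
class Command:
    tactic: Optional[int]  # preset tactic number, if one was spoken
    target: str            # free-text description of the enemy to attack


# Hypothetical English command grammar; the real project's phrasing may differ.
COMMAND_RE = re.compile(r"(?:use tactic (\d+) to )?attack (?:the )?(.+)", re.IGNORECASE)


def parse_command(text: str) -> Optional[Command]:
    """Turn an ASR transcript into a structured command, or None if it doesn't match."""
    m = COMMAND_RE.search(text.strip())
    if m is None:
        return None
    tactic = int(m.group(1)) if m.group(1) else None
    return Command(tactic=tactic, target=m.group(2).strip())


def pick_target(cmd: Command, detections: List[Tuple[str, Box]],
                frame_width: float) -> Optional[Tuple[str, Box]]:
    """Choose one detection for the command.

    Stand-in for text grounding: keep detections whose label appears in the
    target phrase, and resolve "middle" by horizontal distance to the frame centre.
    """
    phrase = cmd.target.lower()
    candidates = [d for d in detections if d[0].lower() in phrase]
    if not candidates:
        return None
    if "middle" in phrase:
        centre = frame_width / 2
        return min(candidates, key=lambda d: abs((d[1][0] + d[1][2]) / 2 - centre))
    return candidates[0]
```

For instance, `parse_command("Use Tactic 3 to attack the Pyro Slime in the middle")` yields tactic 3 and the target "Pyro Slime in the middle"; the chosen box could then be handed to a tracker while the preset key sequence for tactic 3 plays out.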

As the lower-left corner of the video shows, the whole fight was completed without using his hands at all.

Digest Fungus was impressed: a true expert! In the future we can spare our hands when writing articles, and mom no longer has to worry about us getting tenosynovitis from playing Genshin Impact!

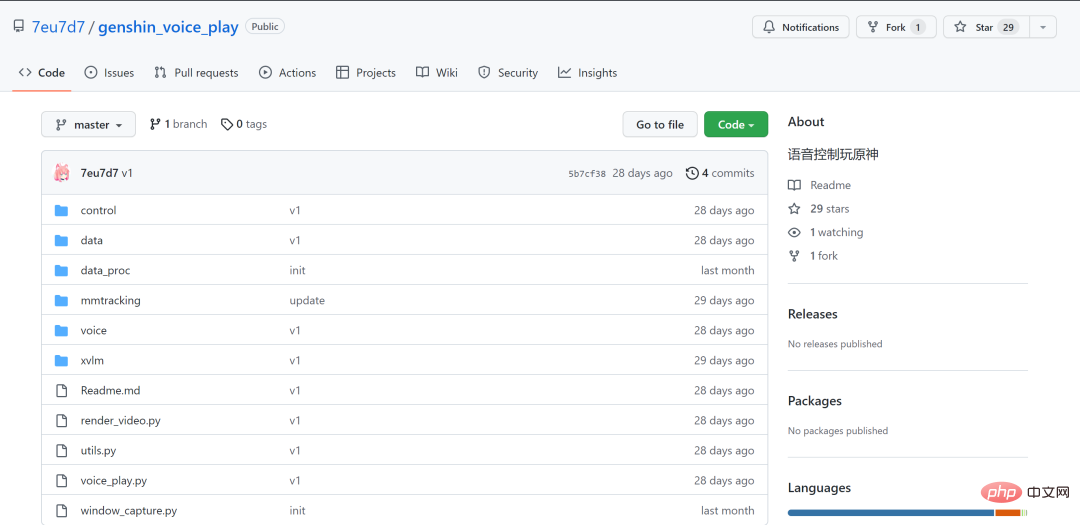

The project is open source on GitHub:

https://github.com/7eu7d7/genshin_voice_play

Well, well: Genshin Impact has been turned into Pokémon

Such a creative project naturally drew the attention of many Genshin Impact players.

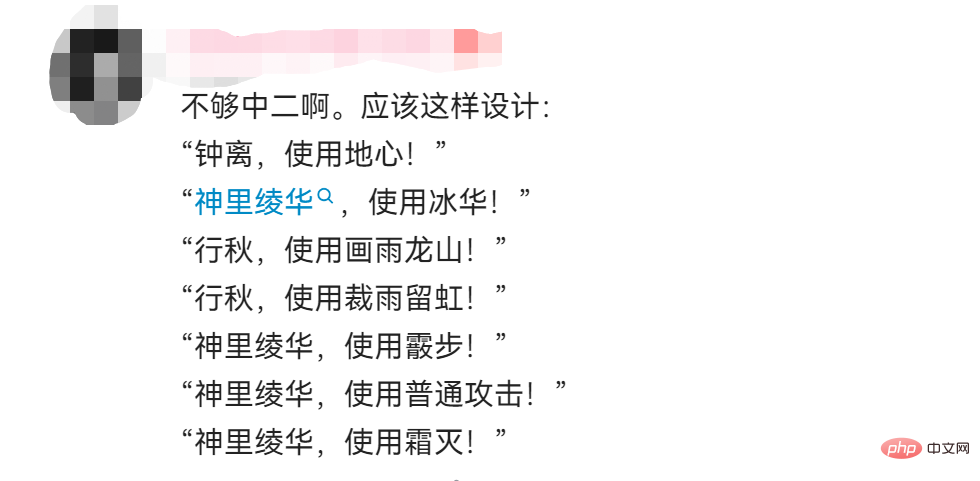

For example, some players suggested making the commands more theatrical by using the character name plus the skill name directly. After all, viewers cannot tell at first what an instruction like "Tactic 3" means, whereas "Zhongli, use Dominus Lapidis" makes it easy to feel immersed in the game.

Some netizens asked whether, since you can already tell the AI which monster to attack, you could also give the characters themselves voice commands, such as "Turtle, use Soumetsu!"


But why do these commands sound so familiar?

In response, the uploader "Schrödinger's Rainbow Cat" explained that calling out each skill by voice might not keep pace with combat and would slow down attacks, which is why he preset a set of tactics.

Indeed, the damage rotations of some classic teams, such as "Wanda International" and "Lei Jiuwan Ban", are fairly fixed, so preset attack sequences seem workable.

Beyond the jokes, netizens also brainstormed and put forward many suggestions for improvement.

For example, "1Q" could make the character in slot 1 unleash their Elemental Burst, "heavy" could mean a charged attack, and "dodge" could trigger a dodge. This would make commands easier and faster to issue, perhaps even fast enough for the Spiral Abyss.
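The appeal of this suggestion is that each short command expands deterministically into a couple of key presses. A minimal sketch of that expansion, assuming default PC-style bindings (number keys to switch characters, Q/E for Burst/Skill, held left mouse for a charged attack, right mouse to dash); the bindings and the `expand` helper are hypothetical, not taken from the project.

```python
from typing import List, Tuple

Step = Tuple[str, str]  # (action, key)

# Assumed default PC bindings; treat these as placeholders, not the project's config.
SLOT_KEYS = {"1": "1", "2": "2", "3": "3", "4": "4"}
ABILITY_KEYS = {"q": "q", "e": "e"}  # Elemental Burst / Elemental Skill


def expand(command: str) -> List[Step]:
    """Expand a short spoken command into low-level input steps."""
    cmd = command.strip().lower()
    # "1Q", "3E": switch to the character in that slot, then use the ability.
    if len(cmd) == 2 and cmd[0] in SLOT_KEYS and cmd[1] in ABILITY_KEYS:
        return [("press", SLOT_KEYS[cmd[0]]), ("press", ABILITY_KEYS[cmd[1]])]
    if cmd == "heavy":  # charged attack: hold the attack button
        return [("hold", "mouse_left")]
    if cmd == "dodge":  # dash
        return [("press", "mouse_right")]
    raise ValueError(f"unknown command: {command!r}")
```

Keeping the vocabulary to a few short, acoustically distinct words also helps the speech recognizer, since there are fewer near-homophones to confuse.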

Some expert players also noted that the AI "doesn't seem to understand its environment very well" and suggested "adding SLAM as a next step" to "achieve 360-degree target detection".

The uploader said his next step is a one-stop package that fully automates "farming domains, teleporting, defeating monsters, and collecting rewards". Perhaps an automatic artifact-enhancement feature could be added too, and if the artifacts roll badly, just format the AI.

This hardcore Genshin Impact uploader has also published a "Teyvat Fishing Guide"

As Digest Fungus said, the Genshin Impact community never lacks creative projects, and the uploader "Schrödinger's Rainbow Cat" is probably the most "hardcore" of them all.

From "AI automatically laying out mazes" to "AI automatically playing the game", virtually every Genshin Impact mini-game he has produced is built on AI.

Among them, Digest Fungus also came across the "AI auto-fishing" project (well, well, it's you again!). Just start the program, and every fish in Teyvat is yours for the taking.

The Genshin Impact auto-fishing AI consists of two models, YOLOX and DQN:

YOLOX locates fish, identifies their species, and locates where the fishing rod's cast will land;

DQN adaptively controls clicking during the reeling process so that the tension stays within the optimal zone.
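To make the control idea concrete, the sketch below swaps the project's deep Q-network for plain tabular Q-learning on a made-up one-dimensional "tension bar", so the Bellman update is visible without a neural network. The dynamics (a click moves the indicator up one step, releasing lets it drop one step), the zone at positions 4 to 6, the rewards, and all hyperparameters are assumptions for illustration only.

```python
import random
from typing import List, Tuple

N_POS = 10          # indicator positions 0..9 (toy discretisation)
ZONE = range(4, 7)  # hypothetical optimal zone: positions 4, 5, 6
ACTIONS = (0, 1)    # 0 = release, 1 = click


def step(pos: int, action: int) -> Tuple[int, float]:
    """Toy dynamics: a click nudges the indicator up, releasing lets it drop."""
    nxt = min(pos + 1, N_POS - 1) if action == 1 else max(pos - 1, 0)
    reward = 1.0 if nxt in ZONE else -1.0
    return nxt, reward


def greedy(q: List[List[float]], pos: int) -> int:
    """Best-known action for a position; ties go to release."""
    return 0 if q[pos][0] >= q[pos][1] else 1


def train(episodes: int = 500, steps: int = 50, alpha: float = 0.5,
          gamma: float = 0.9, epsilon: float = 0.2, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_POS)]
    for _ in range(episodes):
        pos = rng.randrange(N_POS)  # random start so every position gets visited
        for _ in range(steps):
            # epsilon-greedy exploration
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy(q, pos)
            nxt, reward = step(pos, action)
            # Q-learning target: r + gamma * max_a' Q(s', a')
            target = reward + gamma * max(q[nxt])
            q[pos][action] += alpha * (target - q[pos][action])
            pos = nxt
    return q
```

After `q = train()`, the greedy policy clicks whenever the indicator sits below the zone and releases when it is above it. A DQN replaces the table `q` with a network that reads the state from screen features, which is what lets it cope with the continuous, image-based state of the real game.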

In addition, the project uses transfer learning and semi-supervised learning for training. The model also includes some non-learnable components implemented with traditional digital image processing methods, such as OpenCV.
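As an illustration of what a non-learnable image processing step might look like, the sketch below checks whether a cursor sits inside a colored zone along one row of pixels, using simple per-channel color thresholds. It is written in plain Python rather than OpenCV so it runs anywhere; the colors, tolerance, and the idea of reading a single pixel row are all assumptions, not details of the project.

```python
from typing import Optional, Sequence, Tuple

RGB = Tuple[int, int, int]


def close(a: RGB, b: RGB, tol: int) -> bool:
    """True if every channel of a is within tol of b."""
    return all(abs(x - y) <= tol for x, y in zip(a, b))


def extent(row: Sequence[RGB], colour: RGB, tol: int = 30) -> Optional[Tuple[int, int]]:
    """[start, end) span from the first to the last pixel matching colour."""
    hits = [i for i, px in enumerate(row) if close(px, colour, tol)]
    return (hits[0], hits[-1] + 1) if hits else None


def cursor_in_zone(row: Sequence[RGB], zone_colour: RGB, cursor_colour: RGB,
                   tol: int = 30) -> Optional[bool]:
    """Is the cursor's centre inside the zone? None if either is not found."""
    zone = extent(row, zone_colour, tol)
    cursor = extent(row, cursor_colour, tol)
    if zone is None or cursor is None:
        return None
    centre = (cursor[0] + cursor[1]) / 2
    return zone[0] <= centre < zone[1]
```

Using the first-to-last extent rather than the longest run matters here: when the cursor overlaps the zone it hides some zone pixels, and the extent still spans the whole zone.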

Project address:

https://github.com/7eu7d7/genshin_auto_fish

If you still need to fish for the "Salted Fish Bow" after the 3.0 update, leave it to this AI!

The "secret weapons" that turn Genshin Impact into Pokémon

Being a serious outlet, Digest Fungus feels obliged to introduce the key tools behind this Genshin Impact voice project.

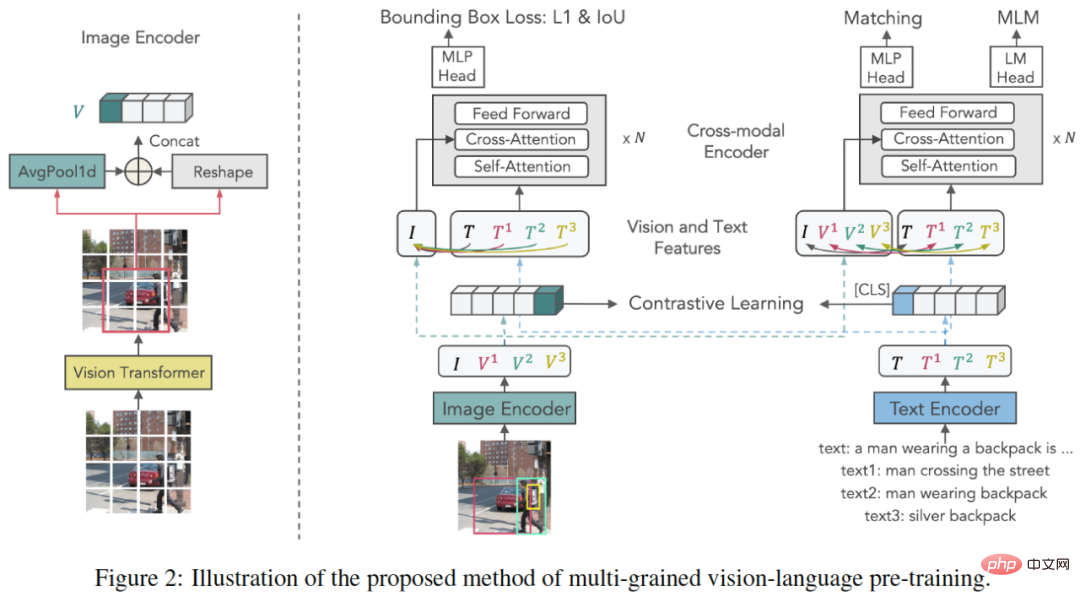

X-VLM is a multi-grained vision-language model (VLM). It consists of an image encoder, a text encoder, and a cross-modal encoder; the cross-modal encoder applies cross-modal attention between visual and language features to learn vision-language alignment.
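Cross-modal attention lets each text token look over all image regions and pull in the visual features most relevant to it. A minimal single-head sketch of that idea in plain Python, assuming identity projections (keys and values are the image features themselves); a real encoder such as X-VLM's uses learned query/key/value projections, multiple heads, and stacked Transformer layers.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def cross_attention(text: Matrix, image: Matrix) -> Tuple[Matrix, Matrix]:
    """Text tokens (queries) attend over image patches (keys and values).

    Identity projections are assumed: a real model applies learned W_q, W_k, W_v
    and uses multiple heads.
    """
    d = len(text[0])
    scale = 1.0 / math.sqrt(d)
    weights = [softmax([scale * sum(q * k for q, k in zip(tok, patch)) for patch in image])
               for tok in text]
    # Each output row is an attention-weighted mix of image features.
    out = [[sum(w * patch[j] for w, patch in zip(row, image)) for j in range(d)]
           for row in weights]
    return out, weights
```

Each row of `weights` is a probability distribution over image patches, so inspecting it shows which region a given word is aligned with.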

X-VLM learns multi-grained alignment by optimizing two objectives: 1) locating visual concepts in an image given the associated text, by combining a bounding box regression loss with an IoU loss; and 2) aligning text with visual concepts at multiple granularities, using a contrastive loss, a matching loss, and a masked language modeling loss.
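The first objective pairs a coordinate regression loss with an overlap-based loss. A minimal sketch of that combination, assuming boxes as `(x1, y1, x2, y2)`, a mean L1 term for regression, a plain `1 - IoU` term, and equal weights; the exact IoU variant and weighting X-VLM uses are not stated here, so treat these choices as assumptions.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), x2 > x1, y2 > y1


def area(b: Box) -> float:
    # Negative widths/heights (no overlap) are clamped to zero.
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter = area((max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3])))
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def box_loss(pred: Box, gt: Box, w_l1: float = 1.0, w_iou: float = 1.0) -> float:
    """Weighted sum of mean L1 coordinate error and (1 - IoU)."""
    l1 = sum(abs(p - g) for p, g in zip(pred, gt)) / 4
    return w_l1 * l1 + w_iou * (1.0 - iou(pred, gt))
```

The two terms complement each other: L1 penalizes each coordinate independently, while the IoU term is scale-invariant and rewards overall overlap with the target region.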

During fine-tuning and inference, X-VLM can leverage the learned multi-grained alignment to perform downstream vision-language (V+L) tasks without requiring bounding box annotations in the input images.

Paper link:

https://arxiv.org/abs/2111.08276

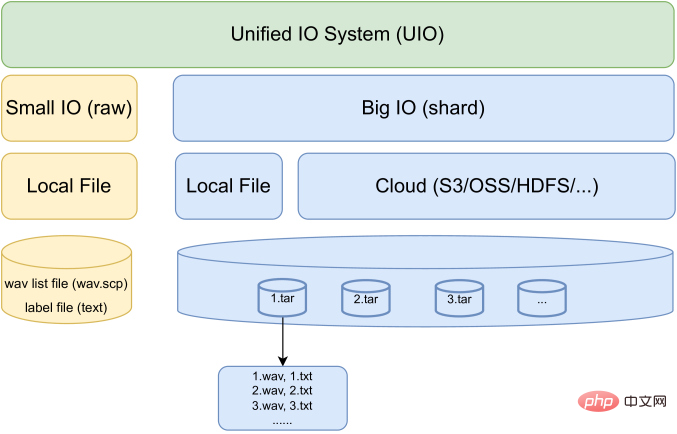

WeNet is a production-oriented, end-to-end speech recognition toolkit. It introduces a unified two-pass (U2) framework and a built-in runtime to handle both streaming and non-streaming decoding in a single model.
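In a two-pass setup like U2, the first pass uses a CTC decoder, which can emit text as audio streams in. The simplest first-pass search is CTC greedy (best-path) decoding, sketched below in plain Python; this is a generic illustration of CTC decoding, not WeNet's implementation, and the blank index of 0 is an assumption. WeNet also offers CTC prefix beam search, which produces the n-best list used for rescoring.

```python
from typing import List, Sequence


def ctc_greedy_decode(frame_probs: Sequence[Sequence[float]], blank: int = 0) -> List[int]:
    """Best-path decoding: per-frame argmax, merge repeats, then drop blanks."""
    best_path = [max(range(len(p)), key=p.__getitem__) for p in frame_probs]
    tokens: List[int] = []
    prev = None
    for tok in best_path:
        # A token is emitted only when it differs from the previous frame's token
        # and is not the blank symbol; blanks separate genuine repeats.
        if tok != prev and tok != blank:
            tokens.append(tok)
        prev = tok
    return tokens
```

For a frame-level best path of `[0, 3, 3, 0, 3, 5, 5, 0]`, the repeated 3s are merged, the blank between them keeps the second 3 as a separate token, and the result is `[3, 3, 5]`.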

At the beginning of July this year, WeNet released version 2.0, with updates in four areas:

U2++: a unified two-pass framework with a bidirectional attention decoder, which adds future context from a right-to-left attention decoder to improve both the representational power of the shared encoder and the performance of the rescoring stage;

An n-gram language model and a WFST-based decoder, making it easier to exploit rich text data in production scenarios;

A unified contextual biasing framework that uses user-specific context to adapt quickly in production and improve ASR accuracy in both "with LM" and "without LM" scenarios;

A unified IO design that supports large-scale data for efficient model training.
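The bidirectional attention decoder comes into play in the second pass, which rescores the first pass's n-best hypotheses. A minimal sketch of such rescoring, assuming each hypothesis already carries log-probabilities from the CTC pass and from left-to-right and right-to-left decoders, combined as a weighted sum; the `Hypothesis` type, the formula, and the weight values are illustrative assumptions rather than WeNet's exact scoring.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class Hypothesis:
    tokens: Tuple[int, ...]
    ctc_score: float  # log-probability from the first (CTC) pass
    l2r_score: float  # log-probability under a left-to-right attention decoder
    r2l_score: float  # log-probability under a right-to-left attention decoder


def rescore(nbest: Sequence[Hypothesis], ctc_weight: float = 0.5,
            reverse_weight: float = 0.3) -> Hypothesis:
    """Pick the hypothesis with the best combined second-pass score."""
    def total(h: Hypothesis) -> float:
        # Blend the two decoding directions, then add the weighted CTC score.
        attention = (1 - reverse_weight) * h.l2r_score + reverse_weight * h.r2l_score
        return attention + ctc_weight * h.ctc_score
    return max(nbest, key=total)
```

Because the right-to-left decoder sees each token's future context, it can overturn a first-pass choice that looked good from the left alone; `reverse_weight` controls how much that direction counts.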

In terms of results, WeNet 2.0 achieves up to a 10% relative improvement in recognition performance over the original WeNet across various corpora.

Paper link: https://arxiv.org/pdf/2203.15455.pdf

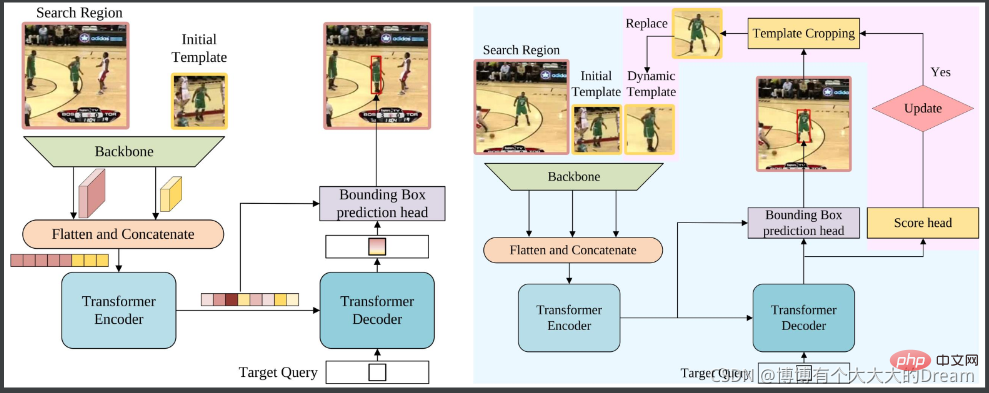

STARK is a spatio-temporal Transformer network for visual tracking. Starting from a baseline consisting of a convolutional backbone, an encoder-decoder Transformer, and a bounding box prediction head, STARK makes three improvements:

Dynamically updated template: intermediate frames are added to the input as dynamic templates, which capture appearance changes and provide additional temporal information;

Score head: determines whether the dynamic template should be updated at the current frame;

Improved training strategy: training is split into two stages. 1) All components except the score head are trained with the baseline loss function, using search images that all contain the target, so the model learns to localize; 2) only the score head is optimized with cross-entropy while all other parameters are frozen, so the model gains classification ability while keeping its localization ability.
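The score head's role in the tracking loop can be sketched as a gate on template updates. The description above only says the score head decides whether to update the dynamic template; the fixed update interval and the 0.5 confidence threshold below are assumptions, and the function only simulates the decision rather than running a tracker.

```python
from typing import List, Optional, Sequence, Tuple


def run_template_updates(scores: Sequence[float], interval: int = 200,
                         threshold: float = 0.5) -> Tuple[List[int], Optional[int]]:
    """Simulate when a STARK-style tracker would refresh its dynamic template.

    scores[i] is the score head's confidence for frame i + 1. The dynamic template
    is replaced only when the update interval is reached AND the confidence is
    above the threshold, so occluded or blurry frames don't overwrite it.
    Returns (frames where an update happened, frame currently used as the template).
    """
    updates: List[int] = []
    template_frame: Optional[int] = None  # None: only the initial template so far
    for frame, score in enumerate(scores, start=1):
        if frame % interval == 0 and score > threshold:
            template_frame = frame  # a real tracker would crop around its predicted box here
            updates.append(frame)
    return updates, template_frame
```

With `interval=3`, a low confidence at frame 6 skips that update, so the template taken at frame 3 stays in use until the next confident checkpoint at frame 9.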

Paper link:

https://openaccess.thecvf.com/content/ICCV2021/papers/Yan_Learning_Spatio-Temporal_Transformer_for_Visual_Tracking_ICCV_2021_paper.pdf
