NVIDIA releases ChatGPT dedicated GPU, increasing inference speed by 10 times

Artificial intelligence once stalled for decades for lack of computing power, until GPUs ignited deep learning. In the ChatGPT era, large models have again left AI short of computing power. Does NVIDIA have an answer this time?

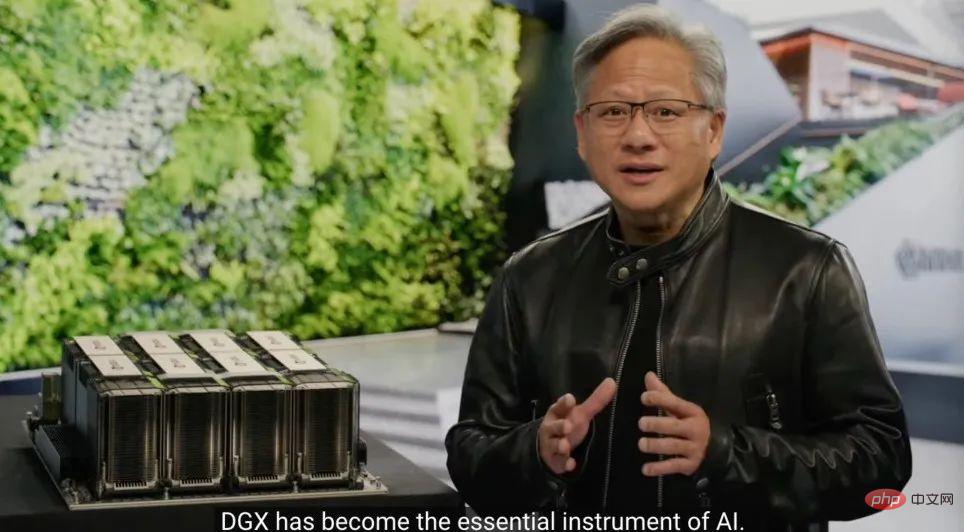

On March 22, the GTC conference officially opened. In the keynote that had just concluded, NVIDIA CEO Jen-Hsun Huang unveiled chips built for ChatGPT.

"Accelerating computing is not easy. In 2012, the computer vision model AlexNet used GeForce GTX 580 and could process 262 PetaFLOPS per second. This model triggered an explosion in AI technology," Huang Renxun said. "Ten years later, Transformer appeared. GPT-3 used 323 ZettaFLOPS of computing power, 1 million times that of AlexNet, to create ChatGPT, an AI that shocked the world. A new computing platform appeared, and the iPhone era of AI has arrived. ."

The AI boom has pushed NVIDIA's stock price up 77% this year. NVIDIA's market value currently stands at US$640 billion, nearly five times Intel's. Yet today's release shows that NVIDIA is not stopping there.

Designing dedicated computing power for AIGC

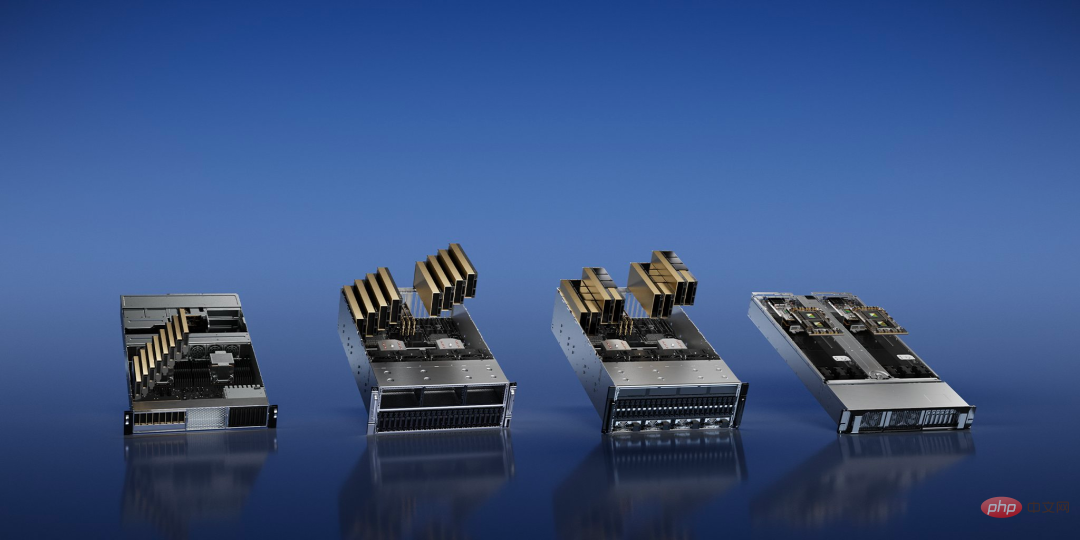

The rise of generative AI (AIGC) is changing what technology companies need from computing power. NVIDIA showed four inference platforms, each aimed at a different type of AI task, and all built on a unified architecture.

Among them, NVIDIA L4 provides "120 times higher AI-driven video performance than CPUs and 99% better energy efficiency" and can be used for video streaming, encoding and decoding, and generating AI video; the more powerful NVIDIA L40 is dedicated to 2D/3D image generation.

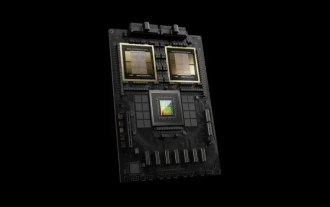

For ChatGPT, which demands enormous computing power, NVIDIA released the NVIDIA H100 NVL, a solution dedicated to large language models (LLMs). It pairs two PCIe H100 GPUs over NVLink and comes with 94GB of memory and an accelerated Transformer Engine.

"Currently the only GPU that can actually handle ChatGPT is the NVIDIA HGX A100. Compared with the former, one is now equipped with four pairs of H100 and dual NVLINK The standard server speed can be 10 times faster, which can reduce the processing cost of large language models by an order of magnitude," Huang said.

Finally, there is NVIDIA Grace Hopper for Recommendation Models, which, in addition to being optimized for recommendation tasks, can power graph neural networks and vector databases.

Helping chips break through physical limits

Semiconductor manufacturing processes are now approaching the limits of what physics allows. Beyond the 2nm process, where will the next breakthrough come from? NVIDIA decided to start with the most fundamental stage of chip manufacturing: photolithography.

Fundamentally, this is an imaging problem at the limits of physics. At advanced process nodes, many features on the chip are smaller than the wavelength of the light used to print them, so the mask design must be repeatedly adjusted, a step called optical proximity correction. Computational lithography simulates how light behaves as it passes through the mask and interacts with the photoresist, behavior described by Maxwell's equations. It is the most computationally demanding task in chip design and manufacturing.

At GTC, Jen-Hsun Huang announced a new technology called CuLitho to speed up semiconductor design and manufacturing. The software uses NVIDIA chips to accelerate the steps between software-based chip design and the physical fabrication of the photolithography masks used to print that design onto a chip.

CuLitho runs on GPUs and delivers a 40x performance improvement over current lithography technologies, accelerating large-scale computing workloads that currently consume tens of billions of CPU hours annually. "Building H100 requires 89 masks. Running on CPUs, one mask takes two weeks, but running CuLitho on H100 takes only 8 hours," Huang said.

This means that 500 NVIDIA DGX H100 systems can do the work of 40,000 CPU systems and run every part of the computational lithography process, helping to reduce power requirements and potential environmental impact.
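
The quoted figures are easy to cross-check. The sketch below is plain arithmetic on the numbers above; treating the 89 masks as processed one after another is a simplifying assumption made only for illustration, since real flows presumably run masks in parallel.

```python
HOURS_PER_WEEK = 7 * 24

# Figures quoted by Huang
cpu_hours_per_mask = 2 * HOURS_PER_WEEK  # "two weeks" on CPUs
gpu_hours_per_mask = 8                   # "8 hours" on H100
masks_for_h100 = 89

# Per-mask speedup implied by the quote
speedup = cpu_hours_per_mask / gpu_hours_per_mask

# Consolidation ratio: 40,000 CPU systems replaced by 500 DGX H100 systems
systems_ratio = 40_000 / 500

# Total time for all masks if processed sequentially (illustrative assumption)
cpu_total_days = masks_for_h100 * cpu_hours_per_mask / 24
gpu_total_days = masks_for_h100 * gpu_hours_per_mask / 24

print(f"Per-mask speedup: {speedup:.0f}x")
print(f"Systems replaced per DGX H100: {systems_ratio:.0f}")
print(f"All masks, sequential: {cpu_total_days:.0f} days on CPU vs {gpu_total_days:.1f} days on GPU")
```

The per-mask speedup works out to 42x, which matches the rounded "40x" claim.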

This advance will allow chips to have smaller transistors and circuits than today's, while shortening time to market and improving the energy efficiency of the massive data centers that run around the clock to drive the manufacturing process.

NVIDIA said it is working with ASML, Synopsys and TSMC to bring the technology to market. According to reports, TSMC will begin preparing for trial production with the technology in June.

"The chip industry is the foundation for almost every other industry in the world," Huang said. "With lithography technology at the limits of physics, through CuLitho and working with our partners TSMC, ASML and Synopsys, fabs can increase production, reduce their carbon footprint, and lay the foundation for 2nm and beyond."

The first GPU-accelerated quantum computing system

At today's event, NVIDIA also announced a new system built with Quantum Machines, giving researchers working on high-performance, low-latency quantum-classical computing a revolutionary new architecture.

As the world's first GPU-accelerated quantum computing system, NVIDIA DGX Quantum combines the world's most powerful accelerated computing platform (enabled by the NVIDIA Grace Hopper superchip and the open-source CUDA Quantum programming model) with the world's most advanced quantum control platform, OPX (provided by Quantum Machines). This combination lets researchers build unprecedentedly powerful applications that pair quantum computing with state-of-the-art classical computing, enabling calibration, control, quantum error correction and hybrid algorithms.

At the heart of DGX Quantum is an NVIDIA Grace Hopper system connected by PCIe to Quantum Machines OPX, enabling sub-microsecond latency between the GPU and the Quantum Processing Unit (QPU).

Tim Costa, director of HPC and quantum at NVIDIA, said: "Quantum-accelerated supercomputing has the potential to reshape science and industry, and NVIDIA DGX Quantum will enable researchers to push the boundaries of quantum-classical computing."

To that end, NVIDIA integrated the high-performance Hopper architecture GPU with the company's new Grace CPU into "Grace Hopper" to power demanding AI and HPC applications. It delivers up to 10x performance for applications running terabytes of data, giving quantum-classical researchers more power to solve the world's most complex problems.

DGX Quantum also equips developers with NVIDIA CUDA Quantum, a powerful unified software stack that is now open source. CUDA Quantum is a hybrid quantum-classical computing platform that integrates and programs QPUs, GPUs, and CPUs in one system.

$37,000 per month: train your own ChatGPT in a web browser

Microsoft spent hundreds of millions of dollars on tens of thousands of A100s to build a GPT-specific supercomputer. Now you can rent the same GPUs that OpenAI and Microsoft used to train ChatGPT and Bing Search, and train your own large models on them.

DGX Cloud, introduced by NVIDIA, provides dedicated NVIDIA DGX AI supercomputing clusters paired with NVIDIA AI software. The service lets every enterprise access AI supercomputing from a simple web browser, eliminating the complexity of acquiring, deploying, and managing on-premises infrastructure.

According to reports, each DGX Cloud instance has eight H100 or A100 80GB Tensor Core GPUs, with a total of 640GB GPU memory per node. A high-performance, low-latency fabric built with NVIDIA Networking ensures workloads can scale across clusters of interconnected systems, allowing multiple instances to act as one giant GPU to meet the performance requirements of advanced AI training.

Now, enterprises can rent a DGX Cloud cluster on a monthly basis to quickly and easily scale development of large multi-node training workloads without waiting for accelerated computing resources that are often in high demand.

According to Huang, the rental price starts at $36,999 per instance per month.
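
To put the instance specification and the starting price in perspective, the sketch below derives the per-node memory and a rough per-GPU cost. The 30-day month and round-the-clock utilization are assumptions made for illustration, not NVIDIA pricing terms.

```python
# Figures from the article
GPUS_PER_INSTANCE = 8
MEMORY_PER_GPU_GB = 80
MONTHLY_PRICE_USD = 36_999

# Assumption: a 30-day month with the instance in use 24 hours a day
HOURS_PER_MONTH = 30 * 24

# Total GPU memory per node
total_memory_gb = GPUS_PER_INSTANCE * MEMORY_PER_GPU_GB

# Cost broken down per GPU
price_per_gpu_month = MONTHLY_PRICE_USD / GPUS_PER_INSTANCE
price_per_gpu_hour = MONTHLY_PRICE_USD / (GPUS_PER_INSTANCE * HOURS_PER_MONTH)

print(f"GPU memory per node: {total_memory_gb} GB")
print(f"Per GPU per month: ${price_per_gpu_month:,.2f}")
print(f"Per GPU per hour (full utilization assumed): ${price_per_gpu_hour:.2f}")
```

The memory total reproduces the 640GB quoted for each node; the hourly figure is only a lower bound on effective cost, since any idle time raises it.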

“We are in the iPhone moment of artificial intelligence,” Huang said. “Startups are racing to create disruptive products and business models, and incumbents are looking to respond. DGX Cloud gives customers instant access to NVIDIA AI supercomputing in the cloud at global scale."

To help enterprises ride the wave of generative AI, NVIDIA also announced a set of cloud services that let enterprises build and refine customized large language models and generative AI models.

Now people can use NVIDIA NeMo language services and NVIDIA Picasso image, video and 3D services to build proprietary, domain-specific generative AI applications for intelligent conversation and customer support, professional content creation, digital simulations and more. Separately, NVIDIA announced new models for the NVIDIA BioNeMo biology cloud service.

"Generative AI is a new type of computer that can be programmed in human natural language. This ability has far-reaching implications - everyone can command the computer to solve problems, which was not the case before Soon, this was just for programmers," Huang said.

Judging from today's release, NVIDIA is not only continuing to improve its hardware designs for technology companies' AI workloads but is also proposing new business models. In the eyes of some, NVIDIA wants to be the "TSMC of AI": offering advanced foundry-style capacity, as a wafer fab does, on which other companies train AI algorithms for their own scenarios.

Will training directly on NVIDIA's supercomputers, cutting out the middlemen who profit from the price difference, be the direction of AI development in the future?

The above is the detailed content of NVIDIA releases ChatGPT dedicated GPU, increasing inference speed by 10 times. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1379

1379

52

52

NVIDIA launches RTX HDR function: unsupported games use AI filters to achieve HDR gorgeous visual effects

Feb 24, 2024 pm 06:37 PM

NVIDIA launches RTX HDR function: unsupported games use AI filters to achieve HDR gorgeous visual effects

Feb 24, 2024 pm 06:37 PM

According to news from this website on February 23, NVIDIA updated and launched the NVIDIA application last night, providing players with a new unified GPU control center, allowing players to capture wonderful moments through the powerful recording tool provided by the in-game floating window. In this update, NVIDIA also introduced the RTXHDR function. The official introduction is attached to this site: RTXHDR is a new AI-empowered Freestyle filter that can seamlessly introduce the gorgeous visual effects of high dynamic range (HDR) into In games that do not originally support HDR. All you need is an HDR-compatible monitor to use this feature with a wide range of DirectX and Vulkan-based games. After the player enables the RTXHDR function, the game will run even if it does not support HD

It is reported that NVIDIA RTX 50 series graphics cards are natively equipped with a 16-Pin PCIe Gen 6 power supply interface

Feb 20, 2024 pm 12:00 PM

It is reported that NVIDIA RTX 50 series graphics cards are natively equipped with a 16-Pin PCIe Gen 6 power supply interface

Feb 20, 2024 pm 12:00 PM

According to news from this website on February 19, in the latest video of Moore's LawisDead channel, anchor Tom revealed that Nvidia GeForce RTX50 series graphics cards will be natively equipped with PCIeGen6 16-Pin power supply interface. Tom said that in addition to the high-end GeForceRTX5080 and GeForceRTX5090 series, the mid-range GeForceRTX5060 will also enable new power supply interfaces. It is reported that Nvidia has set clear requirements that in the future, each GeForce RTX50 series will be equipped with a PCIeGen6 16-Pin power supply interface to simplify the supply chain. The screenshots attached to this site are as follows: Tom also said that GeForceRTX5090

NVIDIA RTX 4070 and 4060 Ti FE graphics cards have dropped below the recommended retail price, 4599/2999 yuan respectively

Feb 22, 2024 pm 09:43 PM

NVIDIA RTX 4070 and 4060 Ti FE graphics cards have dropped below the recommended retail price, 4599/2999 yuan respectively

Feb 22, 2024 pm 09:43 PM

According to news from this site on February 22, generally speaking, NVIDIA and AMD have restrictions on channel pricing, and some dealers who privately reduce prices significantly will also be punished. For example, AMD recently punished dealers who sold 6750GRE graphics cards at prices below the minimum price. The merchant was punished. This site has noticed that NVIDIA GeForce RTX 4070 and 4060 Ti have dropped to record lows. Their founder's version, that is, the public version of the graphics card, can currently receive a 200 yuan coupon at JD.com's self-operated store, with prices of 4,599 yuan and 2,999 yuan. Of course, if you consider third-party stores, there will be lower prices. In terms of parameters, the RTX4070 graphics card has a 5888CUDA core, uses 12GBGDDR6X memory, and a bit width of 192bi

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

The open LLM community is an era when a hundred flowers bloom and compete. You can see Llama-3-70B-Instruct, QWen2-72B-Instruct, Nemotron-4-340B-Instruct, Mixtral-8x22BInstruct-v0.1 and many other excellent performers. Model. However, compared with proprietary large models represented by GPT-4-Turbo, open models still have significant gaps in many fields. In addition to general models, some open models that specialize in key areas have been developed, such as DeepSeek-Coder-V2 for programming and mathematics, and InternVL for visual-language tasks.

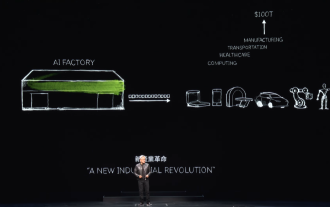

'AI Factory” will promote the reshaping of the entire software stack, and NVIDIA provides Llama3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

'AI Factory” will promote the reshaping of the entire software stack, and NVIDIA provides Llama3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

According to news from this site on June 2, at the ongoing Huang Renxun 2024 Taipei Computex keynote speech, Huang Renxun introduced that generative artificial intelligence will promote the reshaping of the full stack of software and demonstrated its NIM (Nvidia Inference Microservices) cloud-native microservices. Nvidia believes that the "AI factory" will set off a new industrial revolution: taking the software industry pioneered by Microsoft as an example, Huang Renxun believes that generative artificial intelligence will promote its full-stack reshaping. To facilitate the deployment of AI services by enterprises of all sizes, NVIDIA launched NIM (Nvidia Inference Microservices) cloud-native microservices in March this year. NIM+ is a suite of cloud-native microservices optimized to reduce time to market

After multiple transformations and cooperation with AI giant Nvidia, why did Vanar Chain surge 4.6 times in 30 days?

Mar 14, 2024 pm 05:31 PM

After multiple transformations and cooperation with AI giant Nvidia, why did Vanar Chain surge 4.6 times in 30 days?

Mar 14, 2024 pm 05:31 PM

Recently, Layer1 blockchain VanarChain has attracted market attention due to its high growth rate and cooperation with AI giant NVIDIA. Behind VanarChain's popularity, in addition to undergoing multiple brand transformations, popular concepts such as main games, metaverse and AI have also earned the project plenty of popularity and topics. Prior to its transformation, Vanar, formerly TerraVirtua, was founded in 2018 as a platform that supported paid subscriptions, provided virtual reality (VR) and augmented reality (AR) content, and accepted cryptocurrency payments. The platform was created by co-founders Gary Bracey and Jawad Ashraf, with Gary Bracey having extensive experience involved in video game production and development.

RTX 4080 is 15% faster than RTX 4070 Ti Super, and the non-Super version is 8% slower.

Jan 24, 2024 pm 01:27 PM

RTX 4080 is 15% faster than RTX 4070 Ti Super, and the non-Super version is 8% slower.

Jan 24, 2024 pm 01:27 PM

According to the news from this site on January 23, according to the foreign technology media Videocardz, based on the 3DMark test results, the NVIDIA GeForce RTX4070TiSuper graphics card is 15% slower than the RTX4080 and 8% faster than the RTX4070Ti graphics card. According to the media report, multiple testers are testing the GeForce RTX4070TiSuper graphics card and will announce detailed test results in the next few days. One of the reviewers anonymously broke the news to the VideoCardz portal and shared the performance information of the graphics card in the 3DMark synthetic test. This site quotes the media’s opinion that the following running scores cannot fully reflect the performance of the RTX4070TiSuper. R

TrendForce: Nvidia's Blackwell platform products drive TSMC's CoWoS production capacity to increase by 150% this year

Apr 17, 2024 pm 08:00 PM

TrendForce: Nvidia's Blackwell platform products drive TSMC's CoWoS production capacity to increase by 150% this year

Apr 17, 2024 pm 08:00 PM

According to news from this site on April 17, TrendForce recently released a report, believing that demand for Nvidia's new Blackwell platform products is bullish, and is expected to drive TSMC's total CoWoS packaging production capacity to increase by more than 150% in 2024. NVIDIA Blackwell's new platform products include B-series GPUs and GB200 accelerator cards integrating NVIDIA's own GraceArm CPU. TrendForce confirms that the supply chain is currently very optimistic about GB200. It is estimated that shipments in 2025 are expected to exceed one million units, accounting for 40-50% of Nvidia's high-end GPUs. Nvidia plans to deliver products such as GB200 and B100 in the second half of the year, but upstream wafer packaging must further adopt more complex products.