How to install nginx server and configure load balancing under Linux

1. Set up a test environment

The test environment consists of two Lubuntu 19.04 virtual machines installed with VirtualBox. Installing the Linux system itself is not described here.

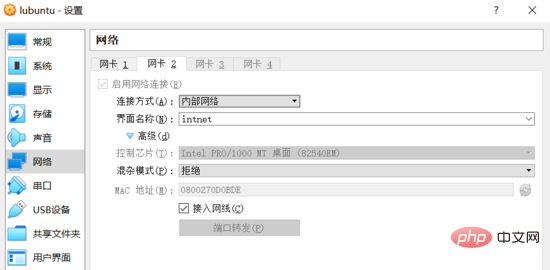

To let the two Linux virtual machines reach each other, each machine uses, in addition to the default NAT adapter, an adapter attached to the internal network provided by VirtualBox.

In addition, the network cards attached to the internal network in the two virtual machines must be bound to static IP addresses in the same network segment. The two hosts then form a local area network and can communicate with each other directly.

Network configuration

Open VirtualBox, go to the settings of each of the two virtual machines, and add a network adapter attached to the internal network. The screenshot is as follows (make the same configuration on both virtual machines):

Internal network

Log in to the virtual machine and use the ip addr command to view the current network connection information:

    $ ip addr
    ...
    2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
        link/ether 08:00:27:38:65:a8 brd ff:ff:ff:ff:ff:ff
        inet 10.0.2.15/24 brd 10.0.2.255 scope global dynamic noprefixroute enp0s3
           valid_lft 86390sec preferred_lft 86390sec
        inet6 fe80::9a49:54d3:2ea6:1b50/64 scope link noprefixroute
           valid_lft forever preferred_lft forever
    3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
        link/ether 08:00:27:0d:0b:de brd ff:ff:ff:ff:ff:ff
        inet6 fe80::2329:85bd:937e:c484/64 scope link noprefixroute
           valid_lft forever preferred_lft forever

You can see that the enp0s8 network card has no IPv4 address yet, so a static IP must be assigned to it manually.

Note that Ubuntu 17.10 introduced a new tool called netplan, and the original network configuration file /etc/network/interfaces no longer takes effect.

So to set a static IP for the network card, modify the /etc/netplan/01-network-manager-all.yaml configuration file. An example is as follows:

    network:
      version: 2
      renderer: NetworkManager
      ethernets:
        enp0s8:
          dhcp4: no
          dhcp6: no
          addresses: [192.168.1.101/24]
          # gateway4: 192.168.1.101
          # nameservers:
          #   addresses: [192.168.1.101, 8.8.8.8]

Because the two hosts are in the same subnet, they can reach each other even if no gateway or DNS server is configured. The corresponding configuration items are commented out for now (you can try to build your own DNS server later).
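The same-subnet claim can be checked with a short Python sketch. It uses only the standard ipaddress module and the two addresses from this article; the variable names are chosen here for illustration.

```python
import ipaddress

# Addresses taken from the netplan examples in this article.
server1 = ipaddress.ip_interface("192.168.1.101/24")
server2 = ipaddress.ip_interface("192.168.1.102/24")

# Two interfaces can talk to each other without a gateway
# when they belong to the same network.
same_subnet = server1.network == server2.network

print(server1.network)  # 192.168.1.0/24
print(same_subnet)      # True
```

If the prefix length or the third octet differed between the two hosts, the comparison would be False and a gateway would be needed.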

After editing is completed, run the sudo netplan apply command, and the static IP configured previously will take effect.

    $ ip addr
    ...
    3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
        link/ether 08:00:27:0d:0b:de brd ff:ff:ff:ff:ff:ff
        inet 192.168.1.101/24 brd 192.168.1.255 scope global noprefixroute enp0s8
           valid_lft forever preferred_lft forever
        inet6 fe80::a00:27ff:fe0d:bde/64 scope link
           valid_lft forever preferred_lft forever

Log in to the other virtual machine and perform the same operation (changing the addresses item in the configuration file to [192.168.1.102/24]). The network configuration of the two virtual machines is now complete.

There is now a Linux virtual machine server1 with the IP address 192.168.1.101 and a Linux virtual machine server2 with the IP address 192.168.1.102. The two hosts can reach each other, as the following test shows:

    starky@server1:~$ ping 192.168.1.102 -c 2
    PING 192.168.1.102 (192.168.1.102) 56(84) bytes of data.
    64 bytes from 192.168.1.102: icmp_seq=1 ttl=64 time=0.951 ms
    64 bytes from 192.168.1.102: icmp_seq=2 ttl=64 time=0.330 ms
    --- 192.168.1.102 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 2ms
    rtt min/avg/max/mdev = 0.330/0.640/0.951/0.311 ms

    skitar@server2:~$ ping 192.168.1.101 -c 2
    PING 192.168.1.101 (192.168.1.101) 56(84) bytes of data.
    64 bytes from 192.168.1.101: icmp_seq=1 ttl=64 time=0.223 ms
    64 bytes from 192.168.1.101: icmp_seq=2 ttl=64 time=0.249 ms
    --- 192.168.1.101 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 29ms
    rtt min/avg/max/mdev = 0.223/0.236/0.249/0.013 ms

2. Install nginx server

There are two main ways to install nginx:

Precompiled binary packages. This is the simplest and fastest method. On all mainstream operating systems, nginx can be installed through the package manager (such as apt-get on Ubuntu). This method installs almost all of the official modules and plug-ins.

Compiling and installing from source. This method is more flexible than the former, and lets you choose which modules or third-party plug-ins to install.

This example has no special requirements, so the first method is used. The commands are as follows:

    $ sudo apt-get update
    $ sudo apt-get install nginx

After successful installation, check the running status of the nginx service through the systemctl status nginx command:

    $ systemctl status nginx
    ● nginx.service - A high performance web server and a reverse proxy server
       Loaded: loaded (/lib/systemd/system/nginx.service; enabled; vendor preset: en
       Active: active (running) since Tue 2019-07-02 01:22:07 CST; 26s ago
         Docs: man:nginx(8)
     Main PID: 3748 (nginx)
        Tasks: 2 (limit: 1092)
       Memory: 4.9M
       CGroup: /system.slice/nginx.service
               ├─3748 nginx: master process /usr/sbin/nginx -g daemon on; master_pro
               └─3749 nginx: worker process

Use the curl -i 127.0.0.1 command to verify that the web server can be accessed normally:

    $ curl -i 127.0.0.1
    HTTP/1.1 200 OK
    Server: nginx/1.15.9 (Ubuntu)
    ...

3. Load balancing configuration

Load balancing means distributing load across multiple operating units according to certain rules, in order to improve service availability and response speed.

A simple example diagram is as follows:

load-balancing

For example, a website application is deployed on a server cluster composed of multiple hosts. The load balancing server sits between the end users and the server cluster. It receives the end users' traffic and distributes the requests to the back-end hosts according to certain rules, which improves response speed under high concurrency.

Load balancing server

nginx configures load balancing through the upstream directive. Here, the virtual machine server1 is used as the load balancing server.

Modify the configuration file of the default site on server1 (sudo vim /etc/nginx/sites-available/default) to the following content:

    upstream backend {
        server 192.168.1.102:8000;
        server 192.168.1.102;
    }

    server {
        listen 80;

        location / {
            proxy_pass http://backend;
        }
    }

For testing purposes, there are currently only two virtual machines. server1 (192.168.1.101) is already used as the load balancing server, so server2 (192.168.1.102) is used as the application server.

Here we use the virtual host function of nginx to "simulate" 192.168.1.102 and 192.168.1.102:8000 as two different application servers.

Application Server

Modify the configuration file of the default site on server2 (sudo vim /etc/nginx/sites-available/default) to the following content:

    server {
        listen 80;

        root /var/www/html;

        index index.html index.htm index.nginx-debian.html;

        server_name 192.168.1.102;

        location / {
            try_files $uri $uri/ =404;
        }
    }

Create the index.html file in the /var/www/html directory as the index page of the default site (the page calls this site "server1", although it is hosted on server2). The content is as follows:

    <html>
    <head>
    <title>index page from server1</title>
    </head>
    <body>
    <h1>this is server1, address 192.168.1.102.</h1>
    </body>
    </html>

Run the sudo systemctl restart nginx command to restart the nginx service. The newly created index.html page can now be accessed:

    $ curl 192.168.1.102
    <html>
    <head>
    <title>index page from server1</title>
    </head>
    <body>
    <h1>this is server1, address 192.168.1.102.</h1>
    </body>
    </html>

Next, configure the site on the "other host". On server2, create the /etc/nginx/sites-available/server2 configuration file with the following content:

    server {
        listen 8000;

        root /var/www/html;

        index index2.html index.htm index.nginx-debian.html;

        server_name 192.168.1.102;

        location / {
            try_files $uri $uri/ =404;
        }
    }

Note the changes to the listening port and the index page. Create the index2.html file in the /var/www/html directory as the index page of the server2 site. The content is as follows:

    <html>
    <head>
    <title>index page from server2</title>
    </head>
    <body>
    <h1>this is server2, address 192.168.1.102:8000.</h1>
    </body>
    </html>

PS: For testing purposes, the default site and the server2 site are configured on the same host, server2, and their pages differ slightly. In a real environment, the two sites are usually configured on different hosts and serve identical content.

Run the sudo ln -s /etc/nginx/sites-available/server2 /etc/nginx/sites-enabled/ command to enable the server2 site just created.

Restart the nginx service. The newly created index2.html page is then available on port 8000:

    $ curl 192.168.1.102:8000
    <html>
    <head>
    <title>index page from server2</title>
    </head>
    <body>
    <h1>this is server2, address 192.168.1.102:8000.</h1>
    </body>
    </html>

Load balancing test

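Back on the load balancing server, the virtual machine server1, the reverse proxy URL set in the configuration file is http://backend.

Inside nginx, the name backend in proxy_pass refers to the upstream group defined in the same file, so nginx itself needs no DNS entry for it. Commands run on server1, such as curl, cannot resolve http://backend, however, because no name resolution service has been configured.

To fix this, edit the /etc/hosts file on server1 and add the following record:

    127.0.0.1 backend

This resolves the name to the local IP address, so that requests reach the load balancing server.

Restart the nginx service and access the load balancing server from server1. The result is as follows: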

    $ curl http://backend
    <html>
    <head>
    <title>index page from server1</title>
    </head>
    <body>
    <h1>this is server1, address 192.168.1.102.</h1>
    </body>
    </html>

    $ curl http://backend
    <html>
    <head>
    <title>index page from server2</title>
    </head>
    <body>
    <h1>this is server2, address 192.168.1.102:8000.</h1>
    </body>
    </html>

    $ curl http://backend
    <html>
    <head>
    <title>index page from server1</title>
    </head>
    <body>
    <h1>this is server1, address 192.168.1.102.</h1>
    </body>
    </html>

    $ curl http://backend
    <html>
    <head>
    <title>index page from server2</title>
    </head>
    <body>
    <h1>this is server2, address 192.168.1.102:8000.</h1>
    </body>
    </html>

As the output shows, the requests from server1 to the load balancing server are served in turn (round robin) by the two web sites on the application server server2, which achieves load balancing.
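The alternation seen in the four responses can be mimicked with a minimal Python sketch. It is only a model of plain round robin over the two upstream entries, not nginx code; the helper name round_robin is hypothetical, and which backend answers first in a real run may differ.

```python
from itertools import cycle

# The two upstream entries from the server1 configuration in this article.
backends = ["192.168.1.102:8000", "192.168.1.102"]

def round_robin(servers):
    """Return an iterator that yields the servers in order, forever."""
    return cycle(servers)

picker = round_robin(backends)

# Four consecutive requests, like the four curl calls in the test.
first_four = [next(picker) for _ in range(4)]
print(first_four)
```

Each backend appears every second request, which matches the alternating index pages in the test output.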

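4. Load balancing methods

This section briefly introduces four load balancing methods available in the open source version of nginx.

1. round robin

User requests are distributed evenly across the back-end server cluster (the weight option sets the round-robin weight of a server). This is the default load balancing method in nginx: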

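    upstream backend {
        server backend1.example.com weight=5;
        server backend2.example.com;
    }

2. least connections

User requests are forwarded preferentially to the server in the cluster that currently has the fewest active connections. The weight option is also supported.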
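As a rough illustration of this rule, the Python sketch below picks the server with the fewest active connections. The function name, the connection counts and the tie-breaking rule are assumptions made for this example, and weights are ignored for brevity.

```python
def pick_least_conn(active):
    """Return the server with the fewest active connections.

    `active` maps a server name to its current connection count.
    Ties go to the server listed first.
    """
    return min(active, key=active.get)

# Hypothetical connection counts, for illustration only.
active = {"backend1.example.com": 3, "backend2.example.com": 1}

chosen = pick_least_conn(active)
active[chosen] += 1  # the new request now occupies one connection
print(chosen)
```

The nginx configuration for this method follows.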

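    upstream backend {
        least_conn;
        server backend1.example.com;
        server backend2.example.com;
    }

3. ip hash

User requests are forwarded according to the client IP address. This method is intended to ensure that a given client always reaches the same server host.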
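The idea can be sketched in Python as follows. The key is built from the first three octets of an IPv4 address, which is how the nginx documentation describes the ip_hash key; the crc32 hash, the function name and the sample client addresses are stand-ins chosen for this sketch and are not nginx's own implementation.

```python
import zlib

def pick_by_ip(client_ip, servers):
    """Map an IPv4 client address to one server, deterministically."""
    # Key on the first three octets, so clients in the same /24 share a server.
    key = ".".join(client_ip.split(".")[:3])
    # crc32 is a stand-in hash for this sketch, not nginx's own function.
    return servers[zlib.crc32(key.encode()) % len(servers)]

servers = ["backend1.example.com", "backend2.example.com"]

a = pick_by_ip("203.0.113.7", servers)
b = pick_by_ip("203.0.113.7", servers)    # same client again
c = pick_by_ip("203.0.113.200", servers)  # another client in the same /24
print(a == b == c)  # True
```

The nginx configuration for this method follows.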

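    upstream backend {
        ip_hash;
        server backend1.example.com;
        server backend2.example.com;
    }

4. generic hash

The destination of a user request is determined by a user-defined key, which can be a string, a variable, or a combination (such as the source IP address and port number).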
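To give a feel for key-based selection with consistent hashing, here is a toy hash ring in Python. It is a sketch under stated assumptions: the HashRing class, the SHA-256 hash and the 100 virtual points per server are all choices made for this example and do not reproduce nginx's internal algorithm.

```python
import bisect
import hashlib

def _hash(value):
    """Stable integer hash (SHA-256 is a stand-in chosen for this sketch)."""
    return int(hashlib.sha256(value.encode()).hexdigest(), 16)

class HashRing:
    """Toy consistent-hash ring with several virtual points per server."""

    def __init__(self, servers, points=100):
        self._ring = sorted(
            (_hash(f"{server}#{i}"), server)
            for server in servers
            for i in range(points)
        )
        self._keys = [h for h, _ in self._ring]

    def pick(self, key):
        # First point clockwise from the key's hash, wrapping at the end.
        idx = bisect.bisect(self._keys, _hash(key)) % len(self._ring)
        return self._ring[idx][1]

ring = HashRing(["backend1.example.com", "backend2.example.com"])
uri = "/index.html"  # plays the role of $request_uri
print(ring.pick(uri) == ring.pick(uri))  # True: same key, same server
```

With a ring like this, adding a server only moves the keys that now fall on the new server's points; all other keys keep their previous destination. The nginx configuration for this method follows.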

    upstream backend {
        hash $request_uri consistent;
        server backend1.example.com;
        server backend2.example.com;
    }

Weights

Consider the following example configuration:

    upstream backend {
        server backend1.example.com weight=5;
        server backend2.example.com;
        server 192.0.0.1 backup;
    }

The default weight is 1. A backup server receives requests only when all other servers are down.

In the example above, 5 of every 6 requests are forwarded to backend1.example.com and 1 to backend2.example.com. 192.0.0.1 receives and processes requests only when both backend1 and backend2 are down.
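The 5-to-1 split and the backup rule can be reproduced with a small Python sketch. It is modeled on the smooth weighted round-robin idea and is not taken from the nginx source; the function names smooth_wrr and pick_pool and the alive set are hypothetical.

```python
def smooth_wrr(weights, n):
    """Return n picks using smooth weighted round robin.

    `weights` maps a server name to its weight.
    """
    current = {server: 0 for server in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        # Every server gains its weight, the leader is picked,
        # and the leader then pays back the total weight.
        for server, weight in weights.items():
            current[server] += weight
        best = max(current, key=current.get)
        current[best] -= total
        picks.append(best)
    return picks

def pick_pool(primary, backup, alive):
    """Use backup servers only when every primary server is down."""
    up = {s: w for s, w in primary.items() if s in alive}
    return up if up else {s: w for s, w in backup.items() if s in alive}

primary = {"backend1.example.com": 5, "backend2.example.com": 1}
backup = {"192.0.0.1": 1}

picks = smooth_wrr(primary, 6)
print(picks.count("backend1.example.com"), picks.count("backend2.example.com"))  # 5 1
```

Calling pick_pool(primary, backup, alive={"192.0.0.1"}) returns only the backup server, which mirrors the case where backend1 and backend2 are both down.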
