How to simulate a canary release with nginx

Canary Release/Grayscale Release

The essence of a canary release is trial and error. The name comes from a sad chapter in industrial history: the canary risked its life to warn miners of danger, a small cost paid for the safety of the whole crew. In continuous deployment, the canary is a slice of traffic. A very small share, such as one percent or ten percent, is routed to the new version to verify that it behaves normally. If it does not, the canary has served its purpose at minimal cost and the risk stays contained. If it does, the weight is increased step by step until it reaches 100% and all traffic has moved smoothly to the new version.

Grayscale release is broadly the same idea. Gray is the transition between black and white, in contrast to blue-green deployment, where traffic is served by either blue or green. During a grayscale or canary release, both versions run side by side for a period, each receiving a different share of traffic. If there is a difference between the two terms, it lies in the purpose: canary release is about trial and error, while grayscale release is about a stable rollout, the smooth transition that follows once the canary has shown no problems.

Simulating canary release

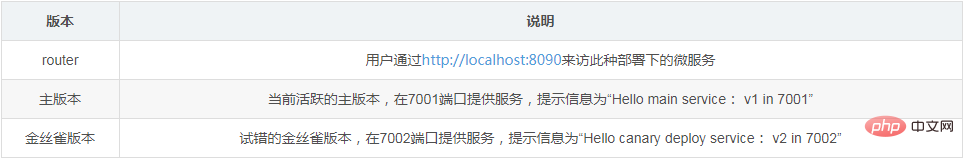

Next, we use an nginx upstream block to simulate a simple canary release. The scenario is as follows: the main version is currently serving all traffic. By editing the nginx configuration and raising the weight of the canary version step by step, we eventually complete a smooth release.

Preparation in advance

Start two services on ports 7001 and 7002 in advance, each returning a different message. For convenience, I built a Docker image with tornado; the argument passed when starting each container sets the message it returns, which is how the two services are told apart.

docker run -d -p 7001:8080 liumiaocn/tornado:latest python /usr/local/bin/daemon.py "hello main service: v1 in 7001"
docker run -d -p 7002:8080 liumiaocn/tornado:latest python /usr/local/bin/daemon.py "hello canary deploy service: v2 in 7002"

Execution log

[root@kong ~]# docker run -d -p 7001:8080 liumiaocn/tornado:latest python /usr/local/bin/daemon.py "hello main service: v1 in 7001"
28f42bbd21146c520b05ff2226514e62445b4cdd5d82f372b3791fdd47cd602a
[root@kong ~]# docker run -d -p 7002:8080 liumiaocn/tornado:latest python /usr/local/bin/daemon.py "hello canary deploy service: v2 in 7002"
b86c4b83048d782fadc3edbacc19b73af20dc87f5f4cf37cf348d17c45f0215d
[root@kong ~]# curl http://192.168.163.117:7001
hello, service :hello main service: v1 in 7001
[root@kong ~]# curl http://192.168.163.117:7002
hello, service :hello canary deploy service: v2 in 7002
[root@kong ~]#

Start nginx

[root@kong ~]# docker run -p 9080:80 --name nginx-canary -d nginx
659f15c4d006df6fcd1fab1efe39e25a85c31f3cab1cda67838ddd282669195c
[root@kong ~]# docker ps |grep nginx-canary
659f15c4d006 nginx "nginx -g 'daemon ..." 7 seconds ago Up 7 seconds 0.0.0.0:9080->80/tcp nginx-canary
[root@kong ~]#

nginx configuration snippet

Prepare the following nginx configuration snippet and add it to /etc/nginx/conf.d/default.conf in the nginx container. The simulation is simple: use down to mark a server that should receive no traffic (nginx does not accept weight=0). At the start, 100% of the traffic goes to the main version.

# No http { } wrapper is needed: conf.d/default.conf is already
# included inside the http block of nginx.conf.
upstream nginx_canary {
    server 192.168.163.117:7001 weight=100;
    server 192.168.163.117:7002 down;
}

server {
    listen 80;
    server_name www.liumiao.cn 192.168.163.117;

    location / {
        proxy_pass http://nginx_canary;
    }
}

How to modify default.conf

There are several ways to do this: install vim in the container and edit the file there, edit the file locally and copy it in with docker cp, or change it directly with sed. To install vim in the container, proceed as follows:

[root@kong ~]# docker exec -it nginx-canary sh
# apt-get update
... (output omitted)
# apt-get install vim
... (output omitted)
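For the docker cp route mentioned above, a minimal sketch might look like the following. It assumes the container name nginx-canary from the docker run command earlier; pull_conf, push_conf and the DOCKER override are hypothetical helpers introduced only for illustration. Running nginx -t before the reload is an extra safety step, not something this walkthrough does elsewhere.

```shell
# Sketch of the "edit locally, then docker cp" route (helper names are hypothetical).
# DOCKER can be overridden, e.g. DOCKER=echo, to print the commands as a dry run.
DOCKER=${DOCKER:-docker}
CONTAINER=${CONTAINER:-nginx-canary}   # container name used when nginx was started
CONF_PATH=/etc/nginx/conf.d/default.conf

# pull_conf LOCAL_FILE: copy the current config out of the container.
pull_conf() {
    "$DOCKER" cp "$CONTAINER:$CONF_PATH" "$1"
}

# push_conf LOCAL_FILE: copy the edited file back, check its syntax,
# and reload nginx only if the check succeeds.
push_conf() {
    "$DOCKER" cp "$1" "$CONTAINER:$CONF_PATH" &&
    "$DOCKER" exec "$CONTAINER" nginx -t &&
    "$DOCKER" exec "$CONTAINER" nginx -s reload
}
```

Typical use would be pull_conf ./default.conf, editing the file locally, then push_conf ./default.conf.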

Before modification

# cat default.conf
server {
    listen 80;
    server_name localhost;

    #charset koi8-r;
    #access_log /var/log/nginx/host.access.log main;

    location / {
        root /usr/share/nginx/html;
        index index.html index.htm;
    }

    #error_page 404 /404.html;

    # redirect server error pages to the static page /50x.html
    #
    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
        root /usr/share/nginx/html;
    }

    # proxy the PHP scripts to Apache listening on 127.0.0.1:80
    #
    #location ~ \.php$ {
    #    proxy_pass http://127.0.0.1;
    #}

    # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
    #
    #location ~ \.php$ {
    #    root html;
    #    fastcgi_pass 127.0.0.1:9000;
    #    fastcgi_index index.php;
    #    fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;
    #    include fastcgi_params;
    #}

    # deny access to .htaccess files, if Apache's document root
    # concurs with nginx's one
    #
    #location ~ /\.ht {
    #    deny all;
    #}
}

After modification

# cat default.conf
upstream nginx_canary {
    server 192.168.163.117:7001 weight=100;
    server 192.168.163.117:7002 down;
}

server {
    listen 80;
    server_name www.liumiao.cn 192.168.163.117;

    #charset koi8-r;
    #access_log /var/log/nginx/host.access.log main;

    location / {
        #root /usr/share/nginx/html;
        #index index.html index.htm;
        proxy_pass http://nginx_canary;
    }

    #error_page 404 /404.html;

    # redirect server error pages to the static page /50x.html
    #
    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
        root /usr/share/nginx/html;
    }

    # proxy the PHP scripts to Apache listening on 127.0.0.1:80
    #
    #location ~ \.php$ {
    #    proxy_pass http://127.0.0.1;
    #}

    # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
    #
    #location ~ \.php$ {
    #    root html;
    #    fastcgi_pass 127.0.0.1:9000;
    #    fastcgi_index index.php;
    #    fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;
    #    include fastcgi_params;
    #}

    # deny access to .htaccess files, if Apache's document root
    # concurs with nginx's one
    #
    #location ~ /\.ht {
    #    deny all;
    #}
}

Reload nginx settings

# nginx -s reload
2018/05/28 05:16:20 [notice] 319#319: signal process started
#

Confirm the result

All 10 requests return v1 in 7001.

[root@kong ~]# cnt=0; while [ $cnt -lt 10 ]; do curl ; let cnt++; done
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
[root@kong ~]#
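Counting the responses by eye gets tedious once both versions receive traffic. As a small sketch, the helper below tallies responses per version; tally_versions is a hypothetical name, and it relies only on the "v1 in 7001" and "v2 in 7002" text returned by the two sample services.

```shell
# tally_versions: read response lines on stdin and count them per version.
# Hypothetical helper; it keys on the "v1 in 7001" / "v2 in 7002" text
# returned by the two sample services in this article.
# The body runs in a subshell, so the counters do not leak into the caller.
tally_versions() (
    v1=0
    v2=0
    # The extra test keeps a final line that lacks a trailing newline.
    while IFS= read -r line || [ -n "$line" ]; do
        case $line in
            *"v1 in 7001"*) v1=$((v1 + 1)) ;;
            *"v2 in 7002"*) v2=$((v2 + 1)) ;;
        esac
    done
    echo "main(v1)=$v1 canary(v2)=$v2"
)
```

Piping the output of the request loop into tally_versions gives the split at a glance; with all traffic on the main version, ten requests should report main(v1)=10 canary(v2)=0.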

Canary release: canary version traffic weight 10%

Edit the weights in default.conf and then run nginx -s reload to set the canary version's weight to 10%, so that 10% of the traffic is served by the new version.
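With nginx's default weighted round-robin balancing, requests are distributed in proportion to the weights, so only the ratio matters: 90 and 10 behave like 9 and 1. A small sketch of the arithmetic, where traffic_share is a hypothetical helper name:

```shell
# traffic_share WEIGHT OTHER_WEIGHT
# Print the percentage of requests (rounded down) that a server with WEIGHT
# receives when the only other server in the upstream has OTHER_WEIGHT.
traffic_share() {
    echo $(( 100 * $1 / ($1 + $2) ))
}

traffic_share 10 90   # canary share when main=90, canary=10: prints 10
traffic_share 1 9     # same ratio, same share: prints 10
```

Using weights that sum to 100, as this article does, simply makes each weight readable as a percentage.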

How to modify default.conf

You only need to adjust the weight of the server in upstream as follows:

upstream nginx_canary {
    server 192.168.163.117:7001 weight=90;
    server 192.168.163.117:7002 weight=10;
}

Reload nginx settings

# nginx -s reload
2018/05/28 05:20:14 [notice] 330#330: signal process started
#

Confirm the result

[root@kong ~]# cnt=0; while [ $cnt -lt 10 ]; do curl ; let cnt++; done
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
hello, service :hello main service: v1 in 7001
[root@kong ~]#

Canary release: canary version traffic weight 50%

Edit the weights in default.conf and then run nginx -s reload to set the canary version's weight to 50%, so that 50% of the traffic is served by the new version.

How to modify default.conf

You only need to adjust the weight of the server in upstream as follows:

upstream nginx_canary {
    server 192.168.163.117:7001 weight=50;
    server 192.168.163.117:7002 weight=50;
}

Reload nginx settings

# nginx -s reload
2018/05/28 05:22:26 [notice] 339#339: signal process started
#

Confirm the result

[root@kong ~]# cnt=0; while [ $cnt -lt 10 ]; do curl ; let cnt++; done
hello, service :hello main service: v1 in 7001
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello main service: v1 in 7001
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello main service: v1 in 7001
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello main service: v1 in 7001
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello main service: v1 in 7001
hello, service :hello canary deploy service: v2 in 7002
[root@kong ~]#

Canary release: canary version traffic weight 90%

Edit the weights in default.conf and then run nginx -s reload to set the canary version's weight to 90%, so that 90% of the traffic is served by the new version.

How to modify default.conf

You only need to adjust the weight of the server in upstream as follows:

upstream nginx_canary {
    server 192.168.163.117:7001 weight=10;
    server 192.168.163.117:7002 weight=90;
}

Reload nginx settings

# nginx -s reload
2018/05/28 05:24:29 [notice] 346#346: signal process started
#

Confirm the result

[root@kong ~]# cnt=0; while [ $cnt -lt 10 ]; do curl ; let cnt++; done
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello main service: v1 in 7001
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
[root@kong ~]#
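The position of the single v1 response is probably not random. nginx's round-robin balancer is generally described as smooth weighted round-robin; the sketch below is a simplified two-server version of that scheme, offered as one plausible explanation of the pattern rather than a description of nginx internals. It assumes a single worker process and ignores failures and max_fails; swrr_sequence is a hypothetical name.

```shell
# swrr_sequence MAIN_WEIGHT CANARY_WEIGHT COUNT
# Simplified smooth weighted round-robin over two servers: every request adds
# each server's weight to its running score, the highest score is picked
# (the first server wins ties), and the winner's score drops by the total weight.
# The body runs in a subshell, so its variables stay local.
swrr_sequence() (
    w_main=$1
    w_canary=$2
    count=$3
    s_main=0
    s_canary=0
    total=$((w_main + w_canary))
    i=0
    while [ "$i" -lt "$count" ]; do
        s_main=$((s_main + w_main))
        s_canary=$((s_canary + w_canary))
        if [ "$s_canary" -gt "$s_main" ]; then
            echo canary
            s_canary=$((s_canary - total))
        else
            echo main
            s_main=$((s_main - total))
        fi
        i=$((i + 1))
    done
)

swrr_sequence 10 90 10
```

With weights 10 and 90 this prints main exactly once, as the fifth of ten lines, which matches the log above.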

Canary release: canary version traffic weight 100%

Edit the weights in default.conf and then run nginx -s reload to set the canary version's weight to 100%, so that all of the traffic is served by the new version.

How to modify default.conf

You only need to adjust the weight of the server in upstream as follows:

upstream nginx_canary {
    server 192.168.163.117:7001 down;
    server 192.168.163.117:7002 weight=100;
}

Reload nginx settings

# nginx -s reload
2018/05/28 05:26:37 [notice] 353#353: signal process started

Confirm the result

[root@kong ~]# cnt=0; while [ $cnt -lt 10 ]; do curl ; let cnt++; done
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
hello, service :hello canary deploy service: v2 in 7002
[root@kong ~]#
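Every step of this walkthrough changes only the two server lines in the upstream block. As a sketch, the block can be generated from the desired canary percentage instead of being edited by hand; render_upstream is a hypothetical helper, and the two addresses are the sample services used in this article.

```shell
# render_upstream CANARY_PERCENT
# Print the upstream block for a canary share between 0 and 100.
# Hypothetical helper; the addresses are the two sample services from this article.
MAIN=192.168.163.117:7001
CANARY=192.168.163.117:7002

# The body runs in a subshell, so its variables stay local.
render_upstream() (
    canary=$1
    main=$((100 - canary))
    echo "upstream nginx_canary {"
    # nginx rejects weight=0, so a server that gets no traffic is marked down.
    if [ "$main" -eq 0 ]; then
        echo "    server $MAIN down;"
    else
        echo "    server $MAIN weight=$main;"
    fi
    if [ "$canary" -eq 0 ]; then
        echo "    server $CANARY down;"
    else
        echo "    server $CANARY weight=$canary;"
    fi
    echo "}"
)

render_upstream 10
```

The printed block would replace the upstream section of default.conf before running nginx -s reload; passing 0 or 100 reproduces the first and last steps, where one server is marked down.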

The above is the detailed content of How to use nginx simulation for canary publishing. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

How to allow external network access to tomcat server

Apr 21, 2024 am 07:22 AM

How to allow external network access to tomcat server

Apr 21, 2024 am 07:22 AM

To allow the Tomcat server to access the external network, you need to: modify the Tomcat configuration file to allow external connections. Add a firewall rule to allow access to the Tomcat server port. Create a DNS record pointing the domain name to the Tomcat server public IP. Optional: Use a reverse proxy to improve security and performance. Optional: Set up HTTPS for increased security.

How to generate URL from html file

Apr 21, 2024 pm 12:57 PM

How to generate URL from html file

Apr 21, 2024 pm 12:57 PM

Converting an HTML file to a URL requires a web server, which involves the following steps: Obtain a web server. Set up a web server. Upload HTML file. Create a domain name. Route the request.

How to deploy nodejs project to server

Apr 21, 2024 am 04:40 AM

How to deploy nodejs project to server

Apr 21, 2024 am 04:40 AM

Server deployment steps for a Node.js project: Prepare the deployment environment: obtain server access, install Node.js, set up a Git repository. Build the application: Use npm run build to generate deployable code and dependencies. Upload code to the server: via Git or File Transfer Protocol. Install dependencies: SSH into the server and use npm install to install application dependencies. Start the application: Use a command such as node index.js to start the application, or use a process manager such as pm2. Configure a reverse proxy (optional): Use a reverse proxy such as Nginx or Apache to route traffic to your application

Can nodejs be accessed from the outside?

Apr 21, 2024 am 04:43 AM

Can nodejs be accessed from the outside?

Apr 21, 2024 am 04:43 AM

Yes, Node.js can be accessed from the outside. You can use the following methods: Use Cloud Functions to deploy the function and make it publicly accessible. Use the Express framework to create routes and define endpoints. Use Nginx to reverse proxy requests to Node.js applications. Use Docker containers to run Node.js applications and expose them through port mapping.

How to deploy and maintain a website using PHP

May 03, 2024 am 08:54 AM

How to deploy and maintain a website using PHP

May 03, 2024 am 08:54 AM

To successfully deploy and maintain a PHP website, you need to perform the following steps: Select a web server (such as Apache or Nginx) Install PHP Create a database and connect PHP Upload code to the server Set up domain name and DNS Monitoring website maintenance steps include updating PHP and web servers, and backing up the website , monitor error logs and update content.

How to use Fail2Ban to protect your server from brute force attacks

Apr 27, 2024 am 08:34 AM

How to use Fail2Ban to protect your server from brute force attacks

Apr 27, 2024 am 08:34 AM

An important task for Linux administrators is to protect the server from illegal attacks or access. By default, Linux systems come with well-configured firewalls, such as iptables, Uncomplicated Firewall (UFW), ConfigServerSecurityFirewall (CSF), etc., which can prevent a variety of attacks. Any machine connected to the Internet is a potential target for malicious attacks. There is a tool called Fail2Ban that can be used to mitigate illegal access on the server. What is Fail2Ban? Fail2Ban[1] is an intrusion prevention software that protects servers from brute force attacks. It is written in Python programming language

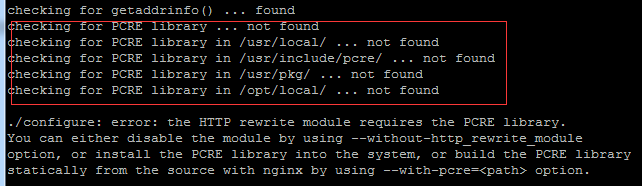

Come with me to learn Linux and install Nginx

Apr 28, 2024 pm 03:10 PM

Come with me to learn Linux and install Nginx

Apr 28, 2024 pm 03:10 PM

Today, I will lead you to install Nginx in a Linux environment. The Linux system used here is CentOS7.2. Prepare the installation tools 1. Download Nginx from the Nginx official website. The version used here is: 1.13.6.2. Upload the downloaded Nginx to Linux. Here, the /opt/nginx directory is used as an example. Run "tar-zxvfnginx-1.13.6.tar.gz" to decompress. 3. Switch to the /opt/nginx/nginx-1.13.6 directory and run ./configure for initial configuration. If the following prompt appears, it means that PCRE is not installed on the machine, and Nginx needs to

Several points to note when building high availability with keepalived+nginx

Apr 23, 2024 pm 05:50 PM

Several points to note when building high availability with keepalived+nginx

Apr 23, 2024 pm 05:50 PM

After yum installs keepalived, configure the keepalived configuration file. Note that in the keepalived configuration files of master and backup, the network card name is the network card name of the current machine. VIP is selected as an available IP. It is usually used in high availability and LAN environments. There are many, so this VIP is an intranet IP in the same network segment as the two machines. If used in an external network environment, it does not matter whether it is on the same network segment, as long as the client can access it. Stop the nginx service and start the keepalived service. You will see that keepalived pulls the nginx service to start. If it cannot start and fails, it is basically a problem with the configuration files and scripts, or a prevention problem.