Prompts are no longer needed: operate a multimodal dialogue system with just your hands. iChat is here!


Xi Xiaoyao Technology Talk Original

Author | IQ has dropped all over the place

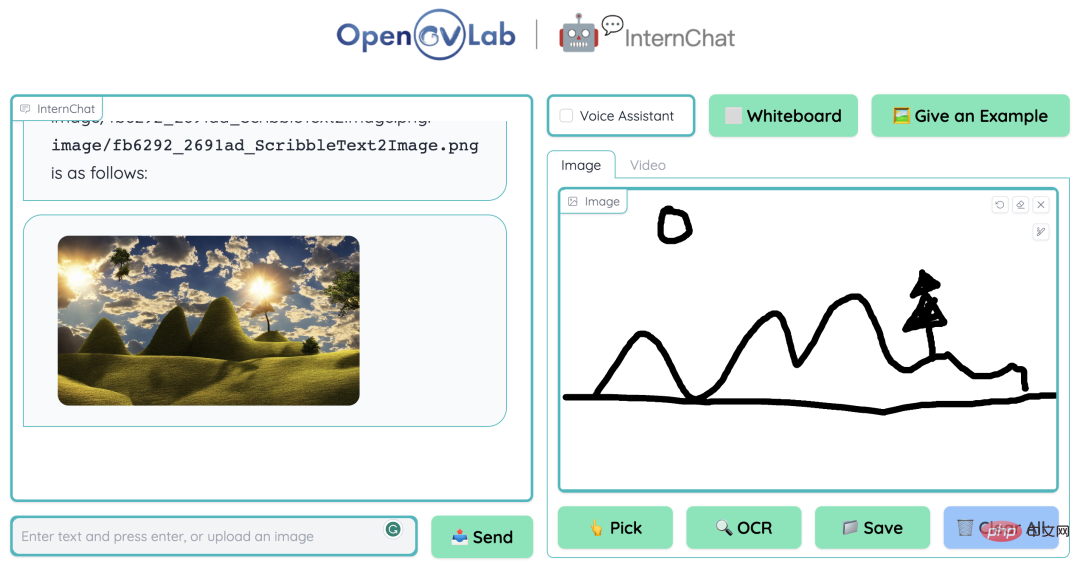

Recently, many teams have built new systems on top of the user-friendly ChatGPT, and several have produced eye-catching results. InternChat emphasizes user-friendliness by letting users interact with the chatbot in ways that go beyond language (cursors and gestures) for multimodal tasks. The name is also interesting: it stands for interaction, nonverbal, and chatbots, and can be shortened to iChat. Unlike existing interactive systems that rely on pure language, iChat significantly improves the efficiency of communication between users and chatbots by adding pointing instructions. In addition, the authors provide a large vision-language model called Husky that can perform image captioning and visual question answering and, with only 7 billion parameters, is reported to impress GPT-3.5-turbo.

However, because the demo website proved so popular, the team has temporarily closed the trial page. Let's first get a sense of the work through the following video.

Paper title:

InternChat: Solving Vision-Centric Tasks by Interacting with Chatbots Beyond Language

Paper link:

https://www.php.cn/link/7c9966afcc510cf5a40621d1d92bdaf1

Demo address:

https://www.php.cn/link/e355ad06c5a89f911fbb0aff2de52435

Project address:

https://www.php.cn/link/2d13d901966a8eaa7f9c943eba6a540b

Main features of the system

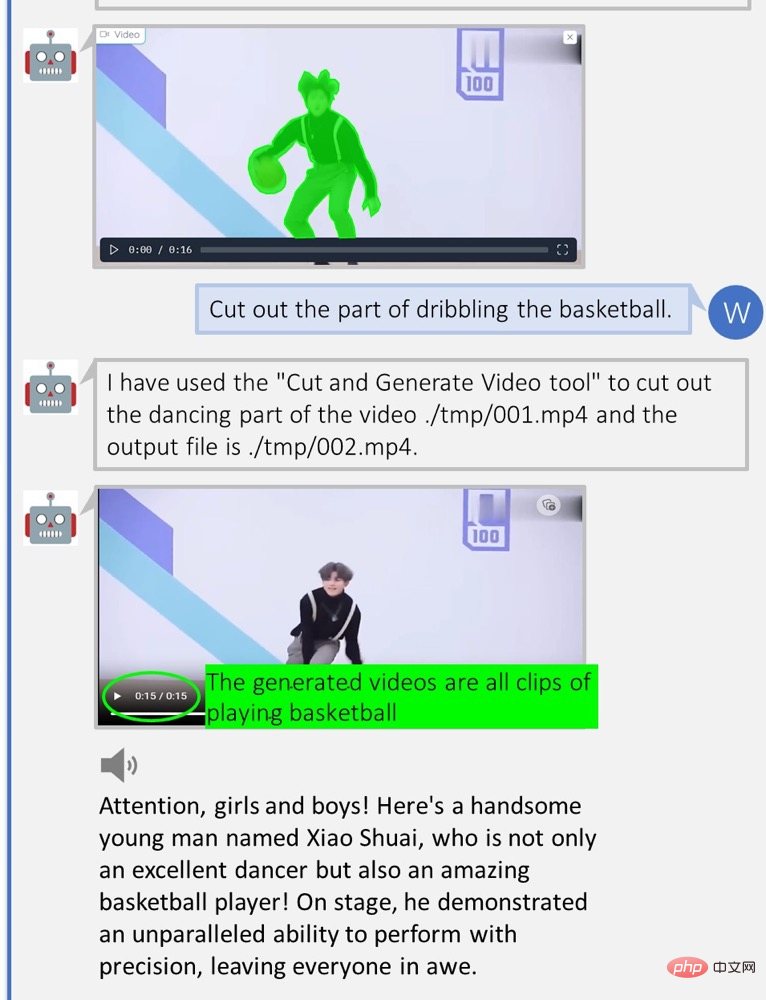

The authors provide task screenshots on the project homepage that give an intuitive view of the system's functions and results:

(a) Remove obscured objects

(b) Interactive image editing

(c) Image generation

(d) Interactive visual question answering

(e) Interactive image generation

(f) Video highlight explanation

Paper quick overview

We first introduce two concepts used in the paper:

- Vision-centric tasks: tasks that enable computers to understand what they see in the world and react accordingly.

- Non-verbal instructions: communication through pointing actions such as cursor movements and hand gestures.

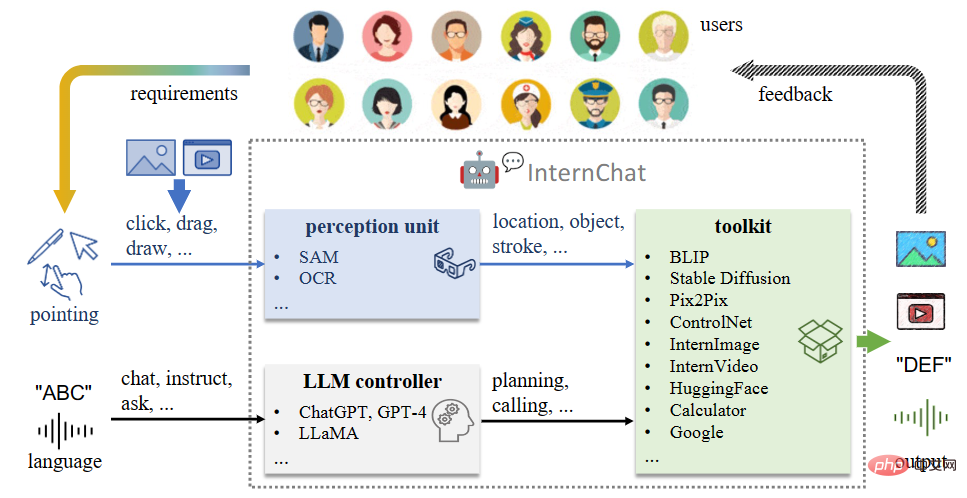

▲Figure 1 The overall architecture of iChat

iChat combines the advantages of pointing and language instructions to perform vision-centric tasks. As shown in Figure 1, this system consists of 3 main components:

- A perception unit that processes pointing instructions on images or videos;

- An LLM controller with an auxiliary control mechanism that can accurately parse language instructions;

- An open-world toolkit that integrates various online models from HuggingFace, user-trained private models, and other applications (such as calculators and search engines).
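To make the division of labor between the three components concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the authors' implementation: the names `Request`, `perceive`, `choose_tool`, `TOOLKIT`, and `handle` are hypothetical, the perception unit is replaced by a fixed-size box around the click, and the LLM controller is replaced by simple keyword matching.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

Point = Tuple[int, int]
Box = Tuple[int, int, int, int]  # (left, top, right, bottom)


@dataclass
class Request:
    text: str                      # language instruction
    click: Optional[Point] = None  # pointing instruction (cursor position), if any


def perceive(click: Point, half_size: int = 32) -> Box:
    """Perception unit stand-in: turn a click into a square region around it.

    Assumption: a real system would run a segmentation or detection model here.
    """
    x, y = click
    return (max(0, x - half_size), max(0, y - half_size), x + half_size, y + half_size)


def remove_object(region: Optional[Box]) -> str:
    # Hypothetical tool: only reports what it would do.
    return f"removed object in region {region}"


def describe_image(region: Optional[Box]) -> str:
    # Hypothetical tool: only reports what it would do.
    return "described the whole image" if region is None else f"described region {region}"


# Toolkit stand-in: a registry that maps tool names to callables.
TOOLKIT: Dict[str, Callable[[Optional[Box]], str]] = {
    "remove": remove_object,
    "describe": describe_image,
}


def choose_tool(text: str) -> str:
    """Controller stand-in: keyword matching instead of an LLM (assumption)."""
    lowered = text.lower()
    for name in TOOLKIT:
        if name in lowered:
            return name
    return "describe"  # fall back to a harmless default


def handle(request: Request) -> str:
    # 1) perception: resolve the pointing instruction, if one was given
    region = perceive(request.click) if request.click is not None else None
    # 2) controller: pick a tool from the language instruction
    tool = TOOLKIT[choose_tool(request.text)]
    # 3) toolkit: run the selected tool on the resolved region
    return tool(region)


if __name__ == "__main__":
    print(handle(Request("Remove this", click=(100, 80))))
    print(handle(Request("Describe the picture")))
```

The point of the sketch is that "Remove this" is ambiguous as pure text, while the click supplies the missing referent, so the language instruction only has to name the action.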

The system can operate effectively at three levels:

- Basic interaction;

- Language-guided interaction;

- Pointing-language-enhanced interaction.

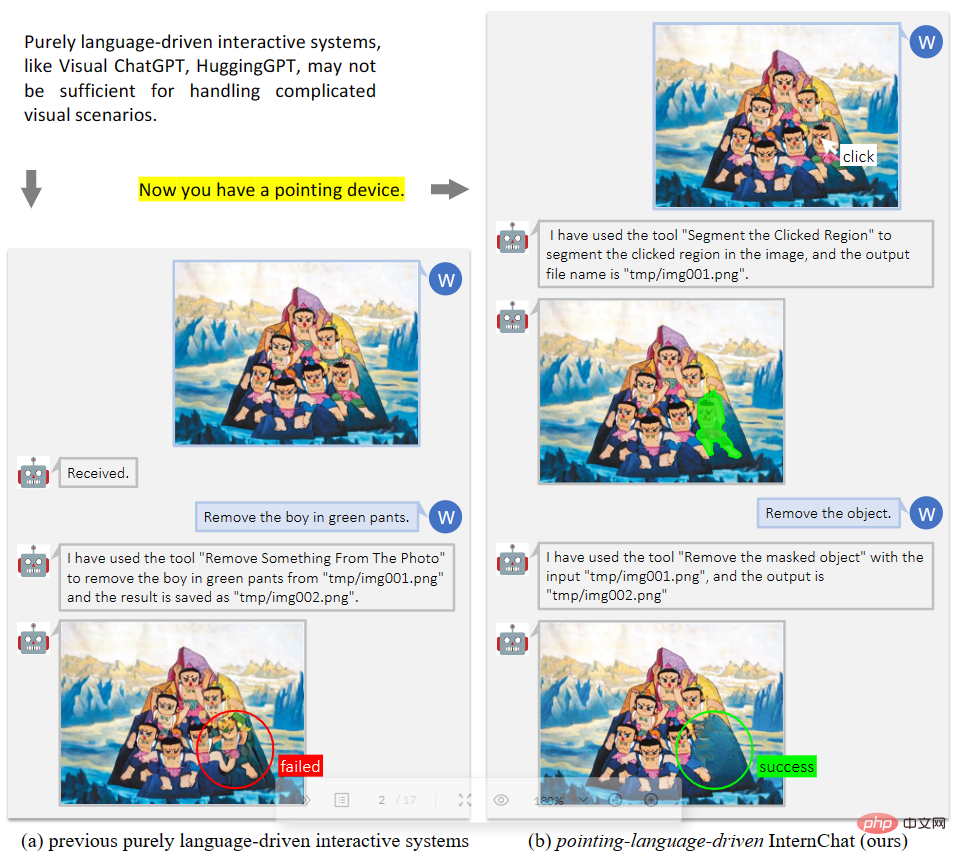

Thus, as shown in Figure 2, the system can still complete complex interactive tasks in cases where a pure-language system fails.

▲Figure 2 Advantages of the pointing-language-driven interactive system

Experiment

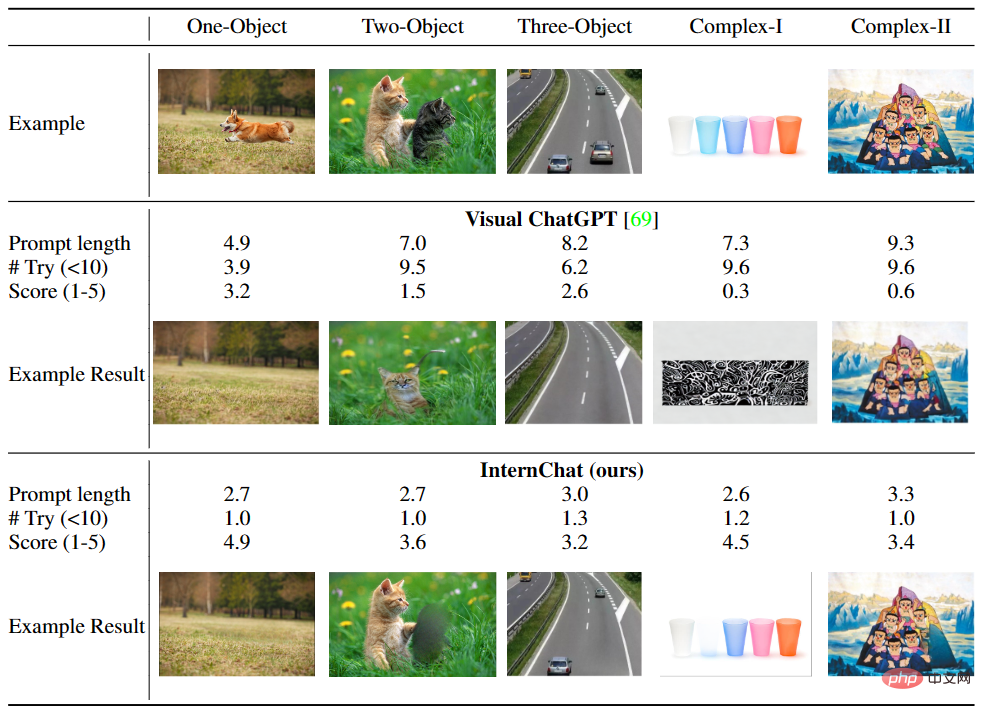

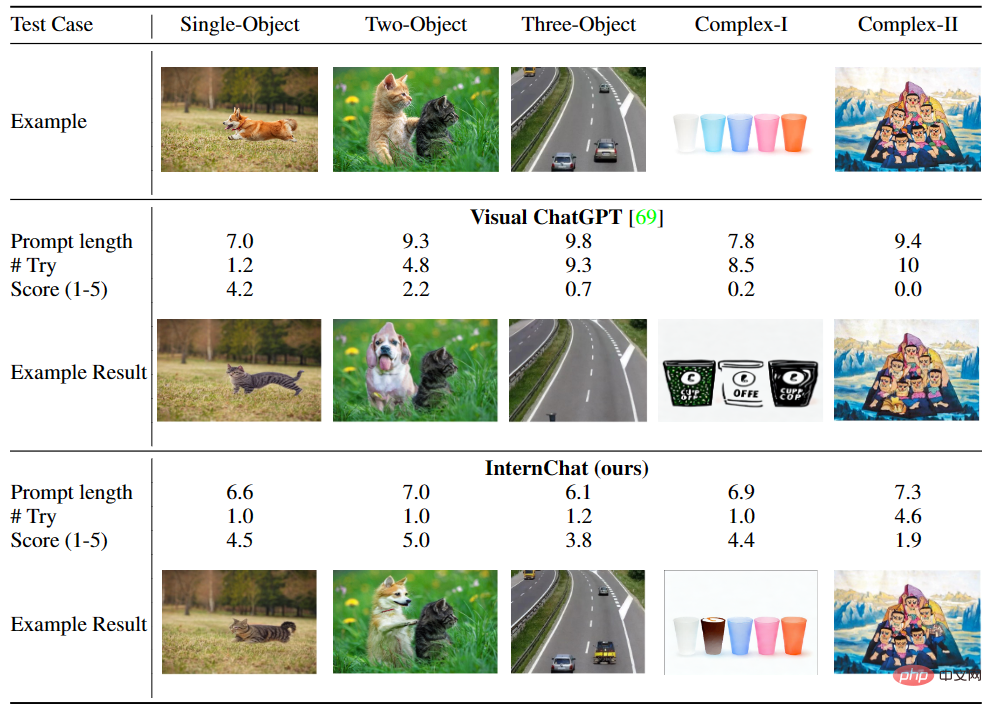

First, let's look at how combining language and non-language commands improves communication with interactive systems. To demonstrate the advantages of this hybrid mode over pure language instructions, the research team conducted a user survey. Participants chatted with both Visual ChatGPT and iChat and gave feedback on their experience. The results in Tables 1 and 2 show that iChat is more efficient and user-friendly than Visual ChatGPT.

▲Table 1 User survey of “Remove something”

▲Table 2 User survey of “Replace something with something”

Summary

The system still has some limitations, including:

- The efficiency of iChat depends largely on the quality and accuracy of its underlying open-source models. These models may have limitations or biases that adversely affect iChat's performance.

- As user interactions become more complex or the number of instances increases, the system needs to maintain accuracy and response time, which can be challenging for iChat.

- There is currently no learnable collaboration between the vision and language foundation models, for example functions that can be tuned with instruction data.

- iChat may have difficulty responding to novel or unusual situations outside of the training data, causing performance to suffer.

- Achieving seamless integration across different devices and platforms can be challenging because of varying hardware capabilities, software limitations, and accessibility requirements.

Several goals on the roadmap listed on the project homepage have not yet been achieved. Among them is Chinese-language interaction, which the editor makes a point of trying on every new dialogue system. For now the system does not appear to support Chinese, and there seems to be no quick fix: most multimodal datasets are in English, and translating between English and Chinese costs online resources and processing time. Chinese support will probably take some time yet.
