A quantum problem that required 100,000 equations to be solved was compressed by AI into just four without sacrificing accuracy.

Interacting electrons exhibit a variety of unique phenomena at different energies and temperatures. When their surrounding environment changes, they display new collective behaviors, such as spin and pairing fluctuations. However, these phenomena remain difficult to treat theoretically, and many researchers use the renormalization group (RG) to tackle them.

In the context of high-dimensional data, the emergence of machine learning (ML) techniques and data-driven methods has aroused great interest among researchers in quantum physics. So far, ML ideas have been applied to interacting electronic systems.

In this work, physicists from the University of Bologna and other institutions used artificial intelligence to compress a quantum problem that until now required 100,000 equations into a small task of only four equations, all without sacrificing accuracy. The research was published in Physical Review Letters.

Paper address: https://journals.aps.org/prl/abstract/10.1103/PhysRevLett.129.136402

Domenico Di Sante, the first author of the study and assistant professor at the University of Bologna, said: "We start with this huge object of coupled equations and then use machine learning to condense it into something small enough to be counted on the fingers of one hand."

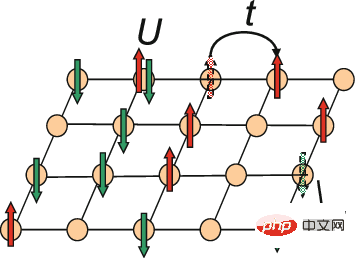

This study deals with the question of how electrons behave as they move across a grid-like lattice. When two electrons occupy the same lattice site, they interact. This setup, known as the Hubbard model, is an idealization of several materials that allows scientists to understand how electron behavior gives rise to phases of matter such as superconductivity, in which electrons flow through the material without resistance. The model also serves as a testing ground for new methods before they are applied to more complex quantum systems.

Schematic diagram of the two-dimensional Hubbard model

The Hubbard model seems simple. But even with cutting-edge computational methods, handling a small number of electrons requires a lot of computing power. This is because interacting electrons become quantum mechanically entangled: even if two electrons sit far apart in the lattice, they cannot be treated independently, so physicists must deal with all the electrons simultaneously rather than one at a time. The more electrons there are, the more entanglement there is, and the computational difficulty grows exponentially.
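To make the exponential growth concrete, here is a minimal sketch that counts basis states for a Hubbard lattice. It relies only on the fact that each site can be empty, singly occupied (spin up or spin down), or doubly occupied. The function names and the 16-byte amplitude size (a double-precision complex number) are illustrative assumptions, not anything taken from the paper.

```python
def hubbard_hilbert_dim(n_sites: int) -> int:
    """Number of basis states for a Hubbard lattice with n_sites sites.

    Each site has 4 local states: empty, spin up, spin down, or both.
    """
    return 4 ** n_sites


def state_vector_bytes(n_sites: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed for one dense state vector.

    Assumes one complex128 amplitude (16 bytes) per basis state.
    """
    return hubbard_hilbert_dim(n_sites) * bytes_per_amplitude


if __name__ == "__main__":
    # Print site count, number of basis states, and bytes for a dense vector.
    for n in (2, 4, 8, 16):
        print(n, hubbard_hilbert_dim(n), state_vector_bytes(n))
```

Every additional site multiplies the number of basis states by four, which is why brute-force treatment quickly becomes impractical.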

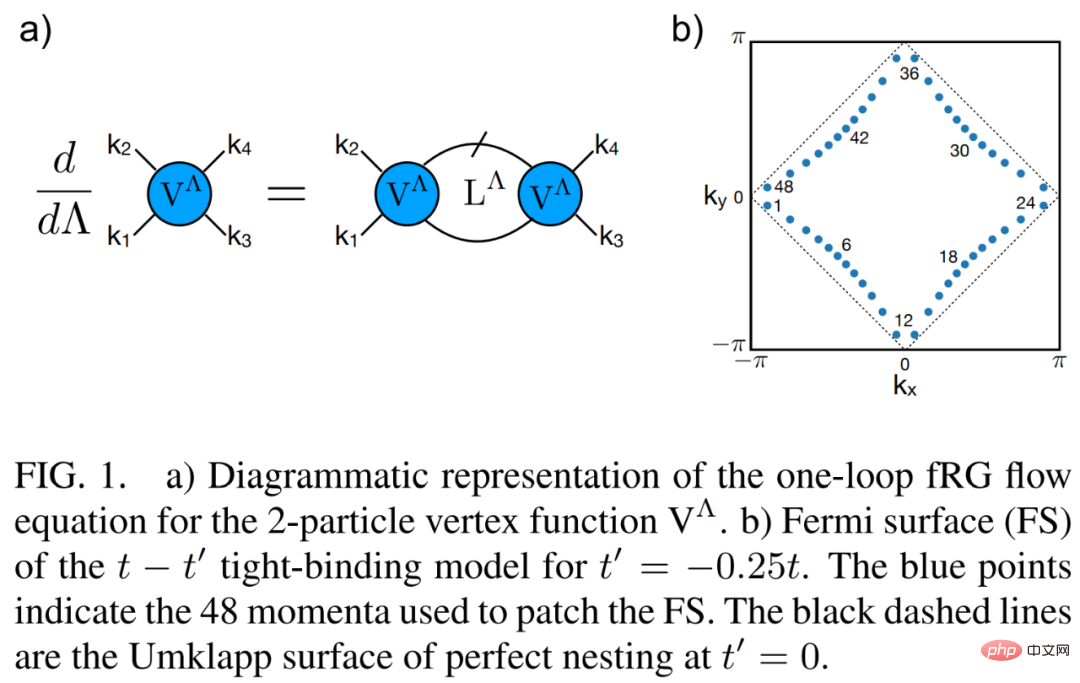

A common method for studying quantum systems is the renormalization group. It is a mathematical apparatus that physicists use to examine the behavior of a system such as the Hubbard model. Unfortunately, the renormalization group keeps track of all possible couplings between electrons, which may involve thousands, hundreds of thousands, or even millions of individual equations that need to be solved. On top of that, the equations are complex: each equation represents a pair of interacting electrons.

The Di Sante team wondered whether they could use a machine learning tool called a neural network to make the renormalization group more manageable.

With the neural network, the machine learning program first creates connections within the full-size renormalization group; the neural network then adjusts the strengths of those connections until it finds a small set of equations that yields the same solution as the original, very large renormalization group. The result is four equations, and even though there are only four, the program's output captures the physics of the Hubbard model.

Di Sante said: "A neural network is essentially a machine that can discover hidden patterns, and this result exceeded our expectations."

Training the machine learning program required a lot of computing power, and it ran for weeks. The good news is that now that the program is trained, it can be adapted with a few tweaks to other problems without starting from scratch.

On future research directions, Di Sante said it is necessary to verify how well the new method works on more complex quantum systems. He also sees great potential for using the technique in other fields that rely on the renormalization group, such as cosmology and neuroscience.

Paper Overview

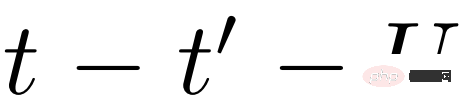

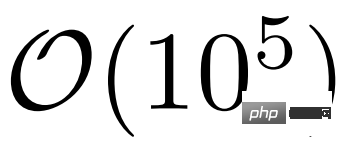

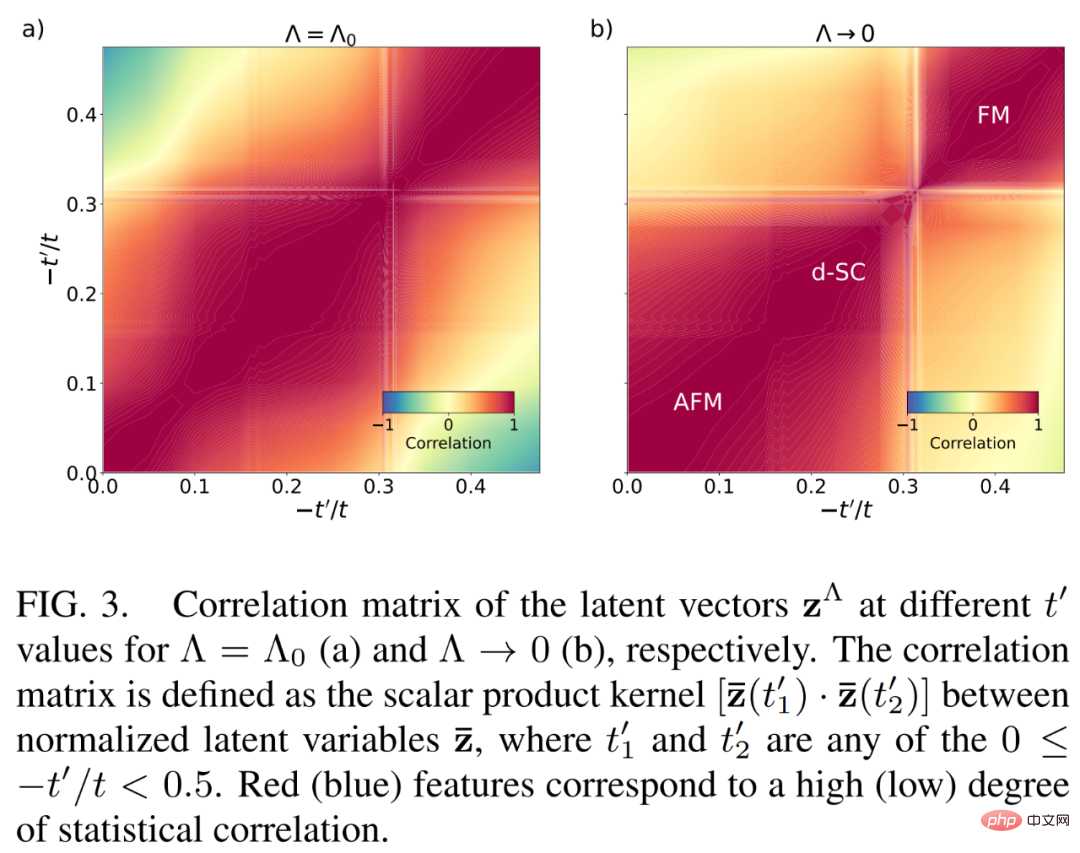

The researchers performed a data-driven dimensionality reduction of the scale-dependent four-point vertex function that characterizes the functional renormalization group (fRG) flow of the widely studied two-dimensional t-t' Hubbard model on the square lattice. They demonstrate that a deep learning architecture based on a neural ordinary differential equation (NODE) solver in a low-dimensional latent space can efficiently learn the fRG dynamics describing the various magnetic and d-wave superconducting regimes of the Hubbard model.
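The general idea of a NODE in a latent space can be sketched as encode, integrate a small learned ODE, then decode. The sketch below is not the authors' architecture: the linear encoder and decoder, the layer sizes, the random untrained weights, and the names `latent_rhs`, `rk4_step`, and `predict_flow` are all assumptions chosen for illustration. It shows only the forward pass and how a flow over many couplings is represented by a handful of latent variables.

```python
import numpy as np

rng = np.random.default_rng(0)

N_COUPLINGS = 512   # hypothetical size of the flattened vertex function
N_LATENT = 4        # small latent space, echoing the "four equations"
N_HIDDEN = 16       # hypothetical hidden width of the dynamics network

# Randomly initialised (untrained) weights, for shape illustration only.
W_enc = rng.normal(scale=0.05, size=(N_LATENT, N_COUPLINGS))
W_dec = rng.normal(scale=0.05, size=(N_COUPLINGS, N_LATENT))
W1 = rng.normal(scale=0.3, size=(N_HIDDEN, N_LATENT))
W2 = rng.normal(scale=0.3, size=(N_LATENT, N_HIDDEN))


def latent_rhs(z):
    """Right-hand side dz/ds = f(z): a small MLP acting in latent space."""
    return W2 @ np.tanh(W1 @ z)


def rk4_step(z, ds):
    """One classical fourth-order Runge-Kutta step of size ds."""
    k1 = latent_rhs(z)
    k2 = latent_rhs(z + 0.5 * ds * k1)
    k3 = latent_rhs(z + 0.5 * ds * k2)
    k4 = latent_rhs(z + ds * k3)
    return z + ds / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)


def predict_flow(v0, n_steps=50, ds=0.1):
    """Encode the initial vertex, integrate in latent space, decode each step."""
    z = W_enc @ v0
    latents = [z]
    for _ in range(n_steps):
        z = rk4_step(z, ds)
        latents.append(z)
    latents = np.stack(latents)       # shape (n_steps + 1, N_LATENT)
    vertices = latents @ W_dec.T      # shape (n_steps + 1, N_COUPLINGS)
    return latents, vertices


v0 = rng.normal(size=N_COUPLINGS)     # stand-in for the initial couplings
latents, vertices = predict_flow(v0)
print(latents.shape, vertices.shape)
```

In a real setting the weights would be trained so that the decoded trajectory matches fRG data; here only the differential equation for the few latent variables is integrated, which is the source of the compression.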

The researchers further present a dynamic mode decomposition analysis, which confirms that a small number of modes are indeed sufficient to capture the fRG dynamics. The work demonstrates the possibility of using artificial intelligence to extract compact representations of the four-point vertex functions of correlated electrons, a goal of utmost importance for the success of cutting-edge quantum field theory methods in tackling the many-electron problem.
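Dynamic mode decomposition (DMD) itself is a standard technique and can be illustrated on synthetic data. The sketch below does not use fRG data: it builds snapshots from three hidden linear modes embedded in 200 dimensions (all sizes and eigenvalues are made-up assumptions) and then checks that DMD finds only three significant singular values and recovers the three growth factors.

```python
import numpy as np

rng = np.random.default_rng(1)

n_features, n_snapshots, n_modes = 200, 40, 3
true_eigs = np.array([0.95, 0.80, 0.50])   # assumed per-step growth factors

# Synthetic snapshots: 3 hidden linear modes embedded in 200 dimensions.
mixing = rng.normal(size=(n_features, n_modes))
steps = np.arange(n_snapshots)
latent = true_eigs[:, None] ** steps[None, :]   # shape (3, 40)
X = mixing @ latent                             # shape (200, 40)


def dmd(X, rank):
    """Exact DMD: fit a rank-limited linear map taking X[:, k] to X[:, k+1]."""
    X1, X2 = X[:, :-1], X[:, 1:]
    U, s, Vh = np.linalg.svd(X1, full_matrices=False)
    U_r = U[:, :rank]
    V_r = Vh[:rank].conj().T
    S_inv = np.diag(1.0 / s[:rank])
    # Reduced operator in the basis of the leading singular vectors.
    A_tilde = U_r.conj().T @ X2 @ V_r @ S_inv
    eigvals, W = np.linalg.eig(A_tilde)
    modes = X2 @ V_r @ S_inv @ W                # DMD modes in full space
    return eigvals, modes, s


eigvals, modes, s = dmd(X, rank=n_modes)
print("leading singular values:", s[:5])
print("recovered eigenvalues:", np.sort(eigvals.real))
```

The sharp drop in singular values after the third one is the kind of signature that indicates a few modes suffice to describe the dynamics.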

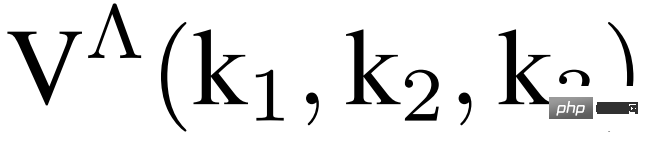

The basic object in fRG is the vertex function V(k_1, k_2, k_3), which in principle requires computing and storing a function of three continuous momentum variables. Studying a specific theoretical model, the two-dimensional Hubbard model, which is thought to be relevant to cuprates and a wide range of organic conductors, the researchers show that a lower-dimensional representation can capture the fRG flow of the high-dimensional vertex function.
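A quick count shows why storing V(k_1, k_2, k_3) is costly once the momenta are discretized. The sketch below assumes each momentum is sampled on `n_k` points and each value is stored as an 8-byte real number; both the function name and the grid sizes are hypothetical and are not the discretization used in the paper.

```python
def vertex_storage(n_k: int, bytes_per_value: int = 8) -> tuple[int, int]:
    """Entries and bytes for V(k1, k2, k3) sampled on n_k momentum points.

    The fourth momentum is fixed by momentum conservation, so three
    independent momenta remain and the table has n_k ** 3 entries.
    """
    n_couplings = n_k ** 3
    return n_couplings, n_couplings * bytes_per_value


if __name__ == "__main__":
    for n_k in (16, 100, 400):
        couplings, n_bytes = vertex_storage(n_k)
        print(n_k, couplings, n_bytes)
```

The cubic growth in the number of couplings is what motivates looking for a much smaller representation.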

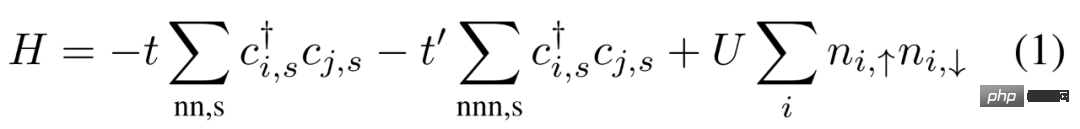

The fRG ground states of the Hubbard model. The microscopic Hamiltonian considered by the researchers is shown in the following formula (1).

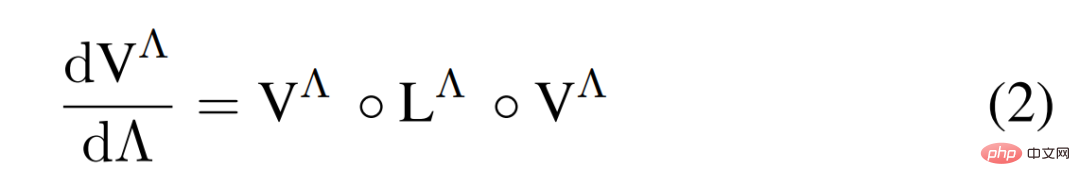
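For reference, the t-t' Hubbard Hamiltonian is commonly written in the form below. This is the standard textbook convention, with nearest-neighbor hopping t, next-nearest-neighbor hopping t', and on-site repulsion U; the notation and sign conventions may differ from those used in the paper's formula (1).

```latex
H = -t \sum_{\langle i,j \rangle, \sigma}
      \left( c^{\dagger}_{i\sigma} c_{j\sigma} + \mathrm{h.c.} \right)
    - t' \sum_{\langle\langle i,j \rangle\rangle, \sigma}
      \left( c^{\dagger}_{i\sigma} c_{j\sigma} + \mathrm{h.c.} \right)
    + U \sum_{i} n_{i\uparrow} n_{i\downarrow},
\qquad n_{i\sigma} = c^{\dagger}_{i\sigma} c_{i\sigma}
```

Here single angle brackets denote nearest-neighbor pairs of sites and double angle brackets denote next-nearest-neighbor pairs.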

The 2-particle properties of the Hubbard model are studied through a temperature-flow one-loop fRG scheme, where the RG flow of the vertex function V^Λ is shown in the following formula (2).

The following Figure 1 a) is a graphical representation of the one-loop fRG flow equation of the 2-particle vertex function V^Λ.

Next, let's look at deep learning the fRG. As shown in Figure 2 b) below, by examining the couplings of the 2-particle vertex function before the fRG flow tends to strong coupling and the one-loop approximation breaks down, the researchers realized that many of them either remain marginal or become irrelevant under the RG flow.

The researchers implement a flexible dimensionality reduction scheme based on a parameterized NODE architecture suited to the high-dimensional problem at hand. This method is shown in Figure 2 a) below, with a focus on the deep neural networks.

Figure 3 below shows three statistically highly correlated latent space representations z as features learned by the NODE neural network during the fRG dynamics in the latent space.

Please refer to the original paper for more details.
