Control more than 100,000 AI models with one click: HuggingFace creates an "App Store" for ChatGPT-like models

From chatting to programming to supporting all kinds of plug-ins, the powerful ChatGPT has long since stopped being a simple conversational assistant and has been moving toward becoming the "management layer" of the AI world.

On March 23, OpenAI announced that ChatGPT would begin supporting third-party plug-ins, such as Wolfram Alpha, the well-known tool for science and engineering. With its help, ChatGPT, which previously could not reliably solve even the classic "chickens and rabbits in the same cage" arithmetic puzzle, became a top student in science and engineering. Many people on Twitter commented that the launch of ChatGPT plug-ins looks a bit like the launch of the iPhone App Store in 2008. It also means that AI chatbots are entering a new stage of evolution: the "meta app" stage.

Then, in early April, researchers from Zhejiang University and Microsoft Research Asia proposed a method called "HuggingGPT", which can be regarded as a large-scale demonstration of this route. HuggingGPT lets ChatGPT act as a controller (in effect, a management layer) that directs a large number of other AI models to solve complex AI tasks. Specifically, when HuggingGPT receives a user request, it uses ChatGPT to plan tasks, selects models based on the function descriptions available on HuggingFace, executes each subtask with the selected model, and aggregates a response from the execution results.
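The four stages described above (task planning, model selection, task execution, response aggregation) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not HuggingGPT's actual code: the `Model` class, the `CATALOGUE` entries, and the keyword-based `plan_tasks` stub are all hypothetical stand-ins, and a real controller would ask the language model to do the planning.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Model:
    """Hypothetical catalogue entry: a task tag, a description, a callable."""
    task: str
    description: str
    run: Callable[[str], str]


# Hypothetical stand-ins for models hosted on HuggingFace.
CATALOGUE: List[Model] = [
    Model("image-captioning", "Describes an image in one sentence",
          lambda x: f"caption({x})"),
    Model("text-to-speech", "Reads text out loud",
          lambda x: f"audio({x})"),
]


def plan_tasks(request: str) -> List[str]:
    """Stage 1, task planning. A real controller would ask the LLM;
    this stub uses keyword matching purely for illustration."""
    lowered = request.lower()
    tasks = []
    if "image" in lowered or "picture" in lowered:
        tasks.append("image-captioning")
    if "read" in lowered or "out loud" in lowered:
        tasks.append("text-to-speech")
    return tasks


def select_model(task: str) -> Model:
    """Stage 2, model selection: match the task against the catalogue."""
    for model in CATALOGUE:
        if model.task == task:
            return model
    raise LookupError(f"no model for task {task!r}")


def handle(request: str, payload: str) -> Dict[str, object]:
    """Stages 3 and 4: run each subtask in order, feeding each output
    into the next step, then aggregate the results into one response."""
    steps = []
    current = payload
    for task in plan_tasks(request):
        current = select_model(task).run(current)
        steps.append((task, current))
    return {"steps": steps, "answer": current}


result = handle("Read out loud the content of the image", "beaver.png")
print(result["answer"])
```

The point of the sketch is the division of labour: the controller never processes images or audio itself, it only decides which specialist model handles which step.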

This approach can make up for many shortcomings of current large models, such as the limited range of modalities they can process and their weaker performance compared with specialist models in some areas.

Although HuggingGPT schedules HuggingFace models, it is not an official HuggingFace product. Now HuggingFace has finally made its own move.

In the same spirit as HuggingGPT, HuggingFace has launched a new API: HuggingFace Transformers Agents. With Transformers Agents, you can control more than 100,000 Hugging Face models to complete a variety of multimodal tasks.

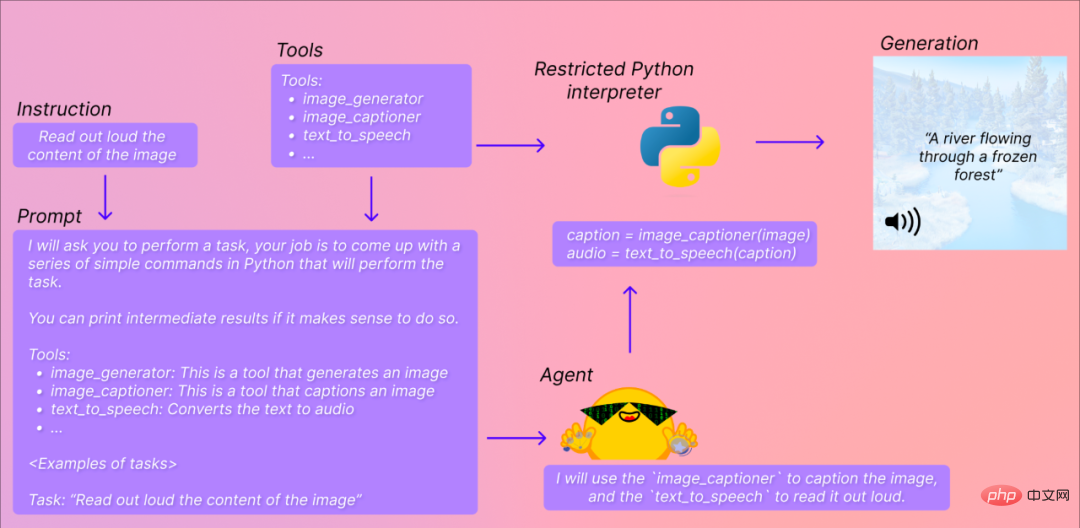

For example, suppose you want Transformers Agents to explain out loud what is depicted in a picture. It tries to understand your instruction ("Read out loud the content of the image"), converts it into a prompt, and selects the appropriate models and tools to complete the task you specified.

NVIDIA AI scientist Jim Fan commented that this day has finally come and that it is an important step towards the "Everything App".

However, some people say that this is not the same as AutoGPT's automatic iteration. It is more like removing the need to write prompts and manually specify the steps for each tool, so it is too early to call it an "Everything App".

Transformers Agents address: https://huggingface.co/docs/transformers/transformers_agents

## How to use Transformers Agents

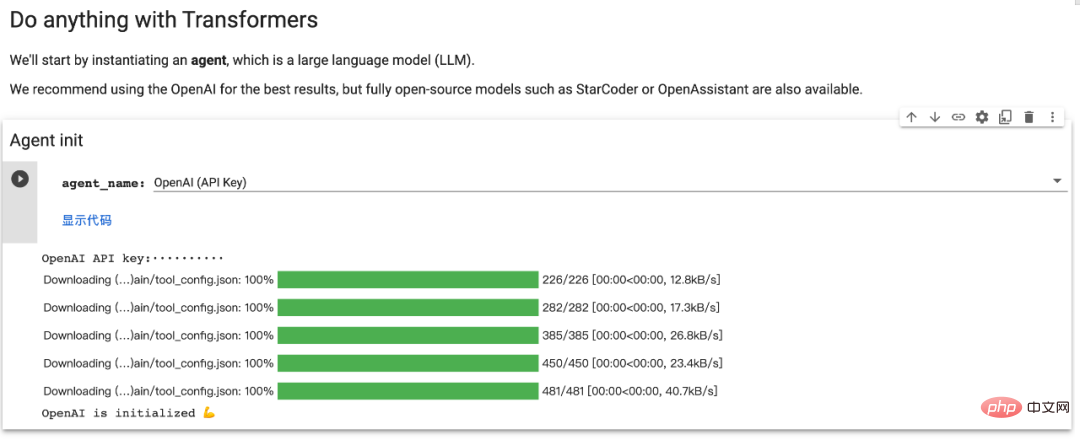

Alongside the release, HuggingFace published a Colab address so that anyone can try it out:

https://huggingface.co/docs/transformers/en/transformers_agents

In short, it provides a natural language API on top of transformers: the team first defined a set of curated tools and then designed an agent that interprets natural language and uses those tools. Furthermore, Transformers Agents is extensible by design.
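The combination of a curated tool set and an agent that reads natural language can be pictured with a small registry. The sketch below is an assumption-laden illustration, not the transformers implementation: `ToolSpec`, `Toolbox`, and the two registered tools are hypothetical names invented here, and the lambdas stand in for real models.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class ToolSpec:
    """Hypothetical description of one tool: a name, a sentence the
    language model can read, and the Python callable that does the work."""
    name: str
    description: str
    func: Callable[..., object]


class Toolbox:
    """Hypothetical registry of curated tools."""

    def __init__(self) -> None:
        self._tools: Dict[str, ToolSpec] = {}

    def register(self, name: str, description: str,
                 func: Callable[..., object]) -> None:
        # Adding a tool is a single call, which keeps the set extensible.
        self._tools[name] = ToolSpec(name, description, func)

    def build_prompt(self, instruction: str) -> str:
        # The language model sees tool descriptions, not tool code.
        lines = [f"- {t.name}: {t.description}" for t in self._tools.values()]
        return "Tools:\n" + "\n".join(lines) + f"\n\nTask: {instruction}"

    def call(self, name: str, *args: object, **kwargs: object) -> object:
        return self._tools[name].func(*args, **kwargs)


toolbox = Toolbox()
toolbox.register("image_captioner", "Returns a caption for an image.",
                 lambda image: f"a caption for {image}")
toolbox.register("text_reader", "Reads English text out loud.",
                 lambda text: f"<audio of {text!r}>")

prompt = toolbox.build_prompt("Read out loud the content of the image")
print(prompt)

# Chaining two tools by hand, as an agent might after reading the prompt.
caption = toolbox.call("image_captioner", "beaver.png")
print(toolbox.call("text_reader", caption))
```

Because the prompt is assembled from whatever is registered, adding a new tool changes what the agent can do without touching the agent itself.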

The team has identified a set of tools that can be empowered to the agent. Here is the list of integrated tools: These tools are integrated in transformers, or can be used manually: Users can also push the tool's code to Hugging Face Space or the model repository to utilize the tool directly through the agent, such as: ##For specific gameplay, let’s first look at a few examples of HuggingFace: Generate image description :

<code>from transformers import load_tooltool = load_tool("text-to-speech")audio = tool("This is a text to speech tool")</code>

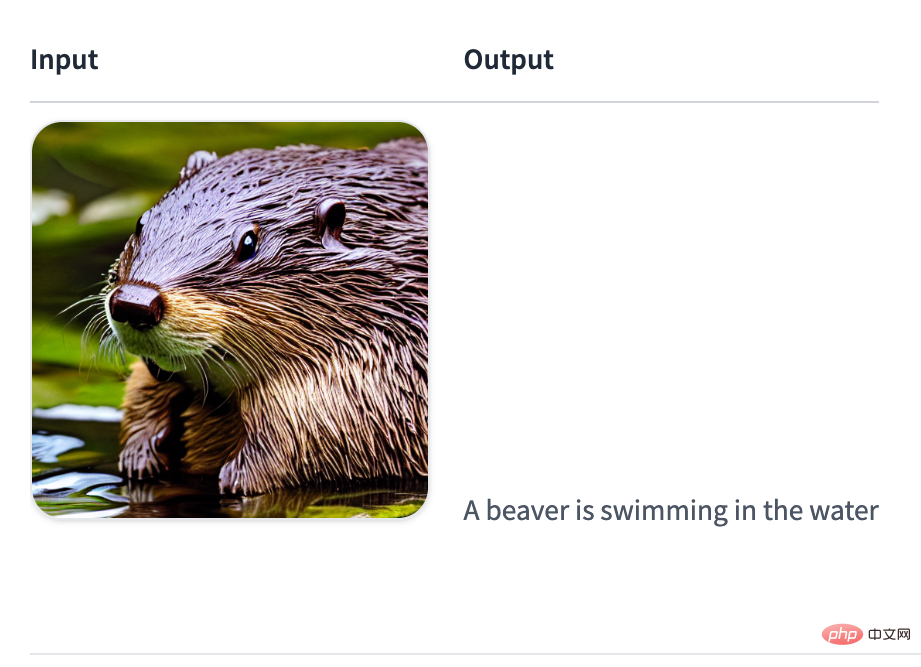

<code>agent.run("Caption the following image", image=image)</code>

Read text:

<code>agent.run("Read the following text out loud", text=text)</code>

Input: A beaver is swimming in the water

Output: audio clip (tts_example)

Read a file:

To get started, first install the agents extra, which pulls in all default dependencies:

<code>pip install transformers[agents]</code>

To use OpenAI models, install the openai dependency and instantiate an OpenAiAgent:

<code>pip install openai</code>

<code>from transformers import OpenAiAgent
agent = OpenAiAgent(model="text-davinci-003", api_key="<your_api_key>")</code>

To use models hosted on Hugging Face, log in with your token first:

<code>from huggingface_hub import login
login("<YOUR_TOKEN>")</code>

Then instantiate the agent with Starcoder, StarcoderBase, or OpenAssistant:

<code>from transformers import HfAgent
# Starcoder
agent = HfAgent("https://api-inference.huggingface.co/models/bigcode/starcoder")
# StarcoderBase
# agent = HfAgent("https://api-inference.huggingface.co/models/bigcode/starcoderbase")
# OpenAssistant
# agent = HfAgent(url_endpoint="https://api-inference.huggingface.co/models/OpenAssistant/oasst-sft-4-pythia-12b-epoch-3.5")</code>

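Next, let's look at the two APIs that Transformers Agents provides.

## Single execution

Single execution means using the agent's run() method:

<code>agent.run("Draw me a picture of rivers and lakes.")</code>

It automatically selects the tool or tools suited to the task and runs them appropriately. It can perform one or several tasks in the same instruction, although the more complex the instruction, the more likely the agent is to fail:

<code>agent.run("Draw me a picture of the sea then transform the picture to add an island")</code>

Every run() operation is independent, so you can call it several times in a row for different tasks. If you want to keep state across executions or pass non-text objects to the agent, you can do so by specifying the variables you want the agent to use. For example, you can generate a first image of rivers and lakes and then ask the model to update that picture to add an island:

<code>picture = agent.run("Generate a picture of rivers and lakes.")
updated_picture = agent.run("Transform the image in picture to add an island to it.", picture=picture)</code>

This is helpful when the model cannot understand the request and mixes up tools. One example is:

<code>agent.run("Draw me the picture of a capybara swimming in the sea")</code>

Here the model could interpret the request in two ways. To force the first interpretation, pass the full description as the prompt argument:

<code>agent.run("Draw me a picture of the prompt", prompt="a capybara swimming in the sea")</code>

## Chat-based execution

The agent also offers a chat-based approach:

<code>agent.chat("Generate a picture of rivers and lakes")</code>

<code>agent.chat("Transform the picture so that there is a rock in there")</code>

This approach keeps state across instructions. It is better suited to experimentation and tends to perform better on single instructions, whereas the run() method is better at handling complex instructions. The chat() method also accepts arguments if you want to pass non-text types or a specific prompt.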
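The difference between independent run() calls and stateful chat() calls can be made concrete with a toy class. This is a minimal sketch under loud assumptions: `ToyAgent` is a hypothetical stand-in that calls no model and only records which variables each call could see; it is not how the transformers agents are implemented.

```python
from typing import Dict


class ToyAgent:
    """Hypothetical stand-in for an agent. It calls no model; it only
    reports which variables were visible to each instruction."""

    def __init__(self) -> None:
        self._chat_state: Dict[str, object] = {}

    @staticmethod
    def _execute(instruction: str, variables: Dict[str, object]) -> str:
        visible = ", ".join(sorted(variables)) or "nothing"
        return f"<result of {instruction!r} using {visible}>"

    def run(self, instruction: str, **variables: object) -> str:
        # Each run() starts from a clean slate: only the variables
        # passed explicitly in this call are visible.
        return self._execute(instruction, dict(variables))

    def chat(self, instruction: str, **variables: object) -> str:
        # chat() remembers earlier results between calls.
        self._chat_state.update(variables)
        result = self._execute(instruction, self._chat_state)
        self._chat_state["picture"] = result
        return result


agent = ToyAgent()

first = agent.run("Generate a picture of rivers and lakes.")
forgotten = agent.run("Add an island.")                 # sees no picture
remembered = agent.run("Add an island.", picture=first)  # picture passed by hand

agent.chat("Generate a picture of rivers and lakes")
follow_up = agent.chat("Transform the picture so that there is a rock in there")

print(forgotten)
print(remembered)
print(follow_up)
```

With run(), carrying a result forward is the caller's job; with chat(), the agent carries it forward, which is convenient for experimentation but makes each call depend on what came before.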
