Technology peripherals

Technology peripherals

AI

AI

Researchers develop robot that can understand English commands and perform household chores

Researchers develop robot that can understand English commands and perform household chores

Researchers develop robot that can understand English commands and perform household chores

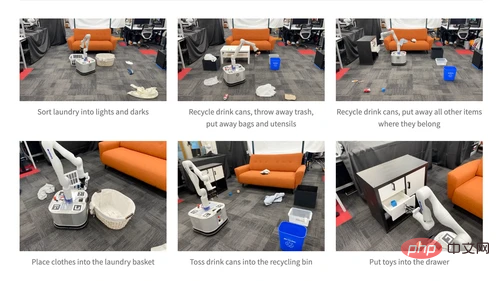

A team of researchers from Princeton University, Stanford University, and Google used OpenAI’s GPT-3 Davinci model to develop a robot named TidyBot that can understand English instructions and perform household chores. This robot can automatically complete tasks such as sorting laundry, picking up garbage on the floor, and picking up toys according to the user's preferences.

The GPT-3 Davinci model is a deep learning model, part of the GPT model family, that can understand and generate natural language. The model has powerful summarization capabilities and can learn complex object attributes and relationships from large amounts of text data. The researchers used this ability to have the robot place objects based on several example objects provided by the user, such as "yellow shirt in the drawer, dark purple shirt in the closet, white socks in the drawer" and then let the model conclude The user's general preference rules and apply them to future interactions.

The researchers wrote in the paper: "Our basic insight is that the summarization capabilities of LLM (Large Language Model) are a good match for the generalization needs of personalized robots. LLM demonstrates the ability to achieve generalization through summarization Amazing ability to exploit complex object properties and relationships learned from massive text datasets."

They also write: "Unlike traditional methods that require expensive data collection and model training, we show that LLM can Achieve generalization in the field of robotics directly out of the box, leveraging the powerful summarization capabilities they learn from massive amounts of text data."

The researchers demonstrated a robot on the paper's website that can do laundry. Separate into light and dark colors, recycle drink cans, throw away trash, pack bags and cutlery, put scattered items back in their place, and put toys in drawers.

The researchers first tested a text-based benchmark dataset in which user preferences were entered and the model was asked to create personalization rules to determine item attribution. The model summarizes the examples into general rules and uses the summary to determine where to place new items. Baseline scenes are defined in four rooms, each with 24 scenes. Each scene contains between two and five places to place items, and there are an equal number of seen and unseen items for the model to classify. The test achieved 91.2 percent accuracy on unseen items, they wrote.

When they applied this method to a real-world robot, TidyBot, they found that it was able to successfully pick up 85 percent of the objects. TidyBot was tested in eight real-life scenarios, each with a set of ten objects, and the robot was run three times in each scenario. According to IT House, in addition to LLM, TidyBot also uses an image classifier called CLIP and an object detector called OWL-ViT.

Danfei Xu, an assistant professor at Georgia Institute of Technology’s School of Interactive Computing, said when talking about Google’s PaLM-E model that LLM gives robots more problem-solving capabilities. "Most previous mission planning systems relied on some form of search or optimization algorithms, which were less flexible and difficult to build. LLM and multimodal LLM enable these systems to benefit from Internet-scale data and easily use to solve new problems," he said.

The above is the detailed content of Researchers develop robot that can understand English commands and perform household chores. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

The second generation Ameca is here! He can communicate with the audience fluently, his facial expressions are more realistic, and he can speak dozens of languages.

Mar 04, 2024 am 09:10 AM

The second generation Ameca is here! He can communicate with the audience fluently, his facial expressions are more realistic, and he can speak dozens of languages.

Mar 04, 2024 am 09:10 AM

The humanoid robot Ameca has been upgraded to the second generation! Recently, at the World Mobile Communications Conference MWC2024, the world's most advanced robot Ameca appeared again. Around the venue, Ameca attracted a large number of spectators. With the blessing of GPT-4, Ameca can respond to various problems in real time. "Let's have a dance." When asked if she had emotions, Ameca responded with a series of facial expressions that looked very lifelike. Just a few days ago, EngineeredArts, the British robotics company behind Ameca, just demonstrated the team’s latest development results. In the video, the robot Ameca has visual capabilities and can see and describe the entire room and specific objects. The most amazing thing is that she can also

How can AI make robots more autonomous and adaptable?

Jun 03, 2024 pm 07:18 PM

How can AI make robots more autonomous and adaptable?

Jun 03, 2024 pm 07:18 PM

In the field of industrial automation technology, there are two recent hot spots that are difficult to ignore: artificial intelligence (AI) and Nvidia. Don’t change the meaning of the original content, fine-tune the content, rewrite the content, don’t continue: “Not only that, the two are closely related, because Nvidia is expanding beyond just its original graphics processing units (GPUs). The technology extends to the field of digital twins and is closely connected to emerging AI technologies. "Recently, NVIDIA has reached cooperation with many industrial companies, including leading industrial automation companies such as Aveva, Rockwell Automation, Siemens and Schneider Electric, as well as Teradyne Robotics and its MiR and Universal Robots companies. Recently,Nvidiahascoll

After 2 months, the humanoid robot Walker S can fold clothes

Apr 03, 2024 am 08:01 AM

After 2 months, the humanoid robot Walker S can fold clothes

Apr 03, 2024 am 08:01 AM

Editor of Machine Power Report: Wu Xin The domestic version of the humanoid robot + large model team completed the operation task of complex flexible materials such as folding clothes for the first time. With the unveiling of Figure01, which integrates OpenAI's multi-modal large model, the related progress of domestic peers has been attracting attention. Just yesterday, UBTECH, China's "number one humanoid robot stock", released the first demo of the humanoid robot WalkerS that is deeply integrated with Baidu Wenxin's large model, showing some interesting new features. Now, WalkerS, blessed by Baidu Wenxin’s large model capabilities, looks like this. Like Figure01, WalkerS does not move around, but stands behind a desk to complete a series of tasks. It can follow human commands and fold clothes

The first robot to autonomously complete human tasks appears, with five fingers that are flexible and fast, and large models support virtual space training

Mar 11, 2024 pm 12:10 PM

The first robot to autonomously complete human tasks appears, with five fingers that are flexible and fast, and large models support virtual space training

Mar 11, 2024 pm 12:10 PM

This week, FigureAI, a robotics company invested by OpenAI, Microsoft, Bezos, and Nvidia, announced that it has received nearly $700 million in financing and plans to develop a humanoid robot that can walk independently within the next year. And Tesla’s Optimus Prime has repeatedly received good news. No one doubts that this year will be the year when humanoid robots explode. SanctuaryAI, a Canadian-based robotics company, recently released a new humanoid robot, Phoenix. Officials claim that it can complete many tasks autonomously at the same speed as humans. Pheonix, the world's first robot that can autonomously complete tasks at human speeds, can gently grab, move and elegantly place each object to its left and right sides. It can autonomously identify objects

Understand in one article: the connections and differences between AI, machine learning and deep learning

Mar 02, 2024 am 11:19 AM

Understand in one article: the connections and differences between AI, machine learning and deep learning

Mar 02, 2024 am 11:19 AM

In today's wave of rapid technological changes, Artificial Intelligence (AI), Machine Learning (ML) and Deep Learning (DL) are like bright stars, leading the new wave of information technology. These three words frequently appear in various cutting-edge discussions and practical applications, but for many explorers who are new to this field, their specific meanings and their internal connections may still be shrouded in mystery. So let's take a look at this picture first. It can be seen that there is a close correlation and progressive relationship between deep learning, machine learning and artificial intelligence. Deep learning is a specific field of machine learning, and machine learning

Super strong! Top 10 deep learning algorithms!

Mar 15, 2024 pm 03:46 PM

Super strong! Top 10 deep learning algorithms!

Mar 15, 2024 pm 03:46 PM

Almost 20 years have passed since the concept of deep learning was proposed in 2006. Deep learning, as a revolution in the field of artificial intelligence, has spawned many influential algorithms. So, what do you think are the top 10 algorithms for deep learning? The following are the top algorithms for deep learning in my opinion. They all occupy an important position in terms of innovation, application value and influence. 1. Deep neural network (DNN) background: Deep neural network (DNN), also called multi-layer perceptron, is the most common deep learning algorithm. When it was first invented, it was questioned due to the computing power bottleneck. Until recent years, computing power, The breakthrough came with the explosion of data. DNN is a neural network model that contains multiple hidden layers. In this model, each layer passes input to the next layer and

The humanoid robot can do magic, let the Spring Festival Gala program team find out more

Feb 04, 2024 am 09:03 AM

The humanoid robot can do magic, let the Spring Festival Gala program team find out more

Feb 04, 2024 am 09:03 AM

In the blink of an eye, robots have learned to do magic? It was seen that it first picked up the water spoon on the table and proved to the audience that there was nothing in it... Then it put the egg-like object in its hand, then put the water spoon back on the table and started to "cast a spell"... …Just when it picked up the water spoon again, a miracle happened. The egg that was originally put in disappeared, and the thing that jumped out turned into a basketball... Let’s look at the continuous actions again: △ This animation shows a set of actions at 2x speed, and it flows smoothly. Only by watching the video repeatedly at 0.5x speed can it be understood. Finally, I discovered the clues: if my hand speed were faster, I might be able to hide it from the enemy. Some netizens lamented that the robot’s magic skills were even higher than their own: Mag was the one who performed this magic for us.