What are the main application scenarios in Nginx?

The main application scenarios of Nginx

Static website deployment

Nginx is an HTTP web server. It can take static files stored on the server (HTML, CSS, images) and return them to the browser client over the HTTP protocol.

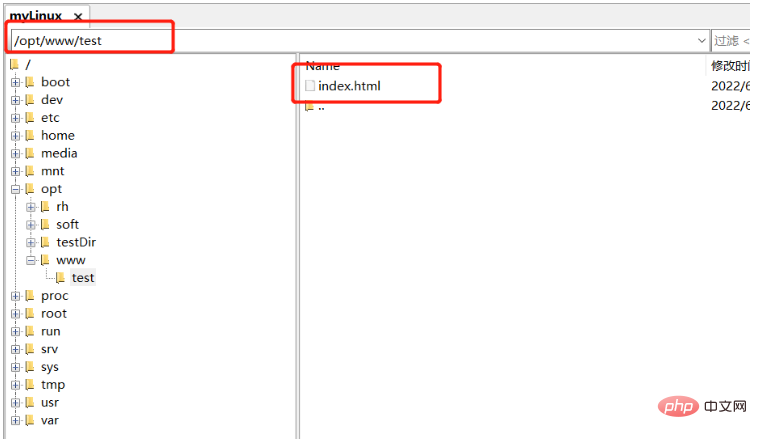

Example: deploy a static resource, index.html, on the server.

Upload index.html to the Linux directory /opt/www/test.

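Modify nginx.conf and add a location block that matches requests for /test. The root directive is set to /opt/www, which stands for the leading slash in front of /test. Nginx appends the full request URI to root, so a request for /test/index.html is served from /opt/www/test/index.html.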
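A minimal sketch of such a server block, assuming Nginx listens on port 80. The server_name is taken from the example URL used later in this section:

```nginx
server {
    listen      80;
    server_name 192.168.253.130;   # IP address from the example URL

    # /test/... is served from /opt/www/test/... because root is prepended to the URI
    location /test {
        root  /opt/www;
        index index.html;          # file returned for a directory request such as /test/
    }
}
```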


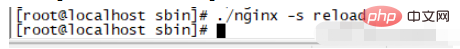

Start or reload Nginx.

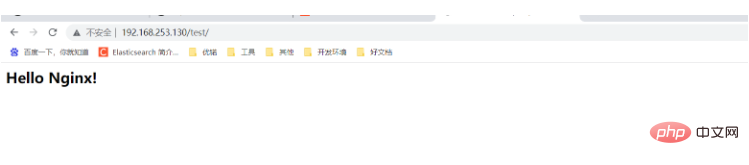

Then visit http://192.168.253.130/test/ in a browser.

Load balancing

Load balancing can be divided into two kinds: hardware load balancing and software load balancing.

Hardware load balancers, such as F5, Sangfor, and Array, have two advantages: support from the vendor's professional team and stable performance. Their disadvantage is that they are expensive.

Software load balancers, such as Nginx, LVS, and HAProxy, have the advantage of being free, open source, and low cost.

Round robin: requests are allocated to the backend servers in turn. Every backend server is treated equally, regardless of its actual number of connections or its current system load.
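A minimal sketch, assuming two hypothetical backends at 192.168.253.131:8080 and 192.168.253.132:8080. The fragment goes inside the http block. When an upstream block names no balancing method, Nginx uses round robin by default:

```nginx
# Hypothetical backend pool; the addresses are placeholders
upstream backend {
    # No balancing directive, so requests are distributed round robin
    server 192.168.253.131:8080;
    server 192.168.253.132:8080;
}

server {
    listen 80;

    location / {
        proxy_pass http://backend;   # forward requests to the upstream group
    }
}
```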


Weighted round robin: backend servers may differ in hardware configuration and current system load, so they can withstand different amounts of traffic.

Assign a higher weight to a machine with a high configuration and low load so that it handles more requests, and a lower weight to a machine with a low configuration and high load to reduce its load. Weighted round robin handles this problem well: it distributes requests to the backends in order and in proportion to their weights.
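A minimal sketch, assuming the same two hypothetical backends. Only the upstream block changes, and it is still referenced with proxy_pass http://backend;. With weights 3 and 1, Nginx sends roughly three out of every four requests to the first server:

```nginx
upstream backend {
    server 192.168.253.131:8080 weight=3;   # stronger machine: about 3 of every 4 requests
    server 192.168.253.132:8080 weight=1;   # weaker machine: about 1 of every 4 requests
}
```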


Source address hashing (IP hash): a hash function computes a value from the client's IP address. That value is taken modulo the size of the server list, and the result is the index of the server that handles the client's request.

With source address hashing, as long as the backend server list stays unchanged, a client with a given IP address is mapped to the same backend server every time.
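A minimal sketch using Nginx's ip_hash directive with the same hypothetical backends. Note that, according to the Nginx documentation, ip_hash uses the first three octets of an IPv4 client address (or the whole IPv6 address) as the hashing key. As a result, clients in the same /24 network land on the same server:

```nginx
upstream backend {
    ip_hash;                      # hash on the client address: same client, same server
    server 192.168.253.131:8080;
    server 192.168.253.132:8080;
}
```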


Least connections: because the backend servers have different configurations, some process requests faster than others. The least-connections method looks at the current connection state of each backend server and dynamically selects the server with the smallest backlog of active connections to handle the current request. This makes the best possible use of backend capacity and spreads the load reasonably across the servers.
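A minimal sketch using Nginx's least_conn directive with the same hypothetical backends. Server weights, if set, are also taken into account:

```nginx
upstream backend {
    least_conn;                   # pick the server with the fewest active connections
    server 192.168.253.131:8080;
    server 192.168.253.132:8080;
}
```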


down: marks a server as unavailable, so Nginx sends it no requests.
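A minimal sketch with the second hypothetical server taken out of rotation. The Nginx documentation recommends marking a server down, rather than deleting its line, when it is used with ip_hash, because this preserves the current mapping of client addresses to the remaining servers:

```nginx
upstream backend {
    server 192.168.253.131:8080;
    server 192.168.253.132:8080 down;   # receives no requests until "down" is removed
}
```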


backup: marks a server as a backup. Normally, as long as the other servers are reachable, the backup server receives no requests. It is used only when all the other servers are down, which is why it is often used for hot deployment. The updated code is deployed to the backup server first, and then the primary servers are stopped. After the primary servers have been redeployed, the backup server returns to standby. Throughout the deployment, users experience no downtime.
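A minimal sketch with a third hypothetical server acting as the backup. Per the Nginx documentation, the backup parameter cannot be combined with the hash, ip_hash, or random balancing methods:

```nginx
upstream backend {
    server 192.168.253.131:8080;
    server 192.168.253.132:8080;
    server 192.168.253.133:8080 backup;   # used only when both servers above are unavailable
}
```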


Static proxy

Separation of dynamic and static content
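A common way to separate dynamic and static content is to let Nginx serve static files directly from disk and proxy everything else to an application server. The following is a minimal sketch under assumptions. The directory /opt/www/static, the file extensions, and the backend at 127.0.0.1:8080 are hypothetical placeholders. Regex locations take precedence over the plain prefix location /, so matching static files never reach the backend:

```nginx
upstream app_server {
    server 127.0.0.1:8080;   # hypothetical dynamic application backend
}

server {
    listen 80;

    # Static resources: served directly from disk by Nginx
    location ~* \.(html|css|js|png|jpg|gif)$ {
        root    /opt/www/static;   # hypothetical static directory
        expires 7d;                # let browsers cache static files for 7 days
    }

    # Everything else is treated as dynamic and forwarded to the application server
    location / {
        proxy_pass http://app_server;
    }
}
```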

Virtual host
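Name-based virtual hosting lets one Nginx instance serve several sites on the same IP address and port. Nginx chooses the server block whose server_name matches the Host header of the request. A minimal sketch, assuming two hypothetical domains and document roots:

```nginx
# Two name-based virtual hosts sharing port 80
server {
    listen      80;
    server_name www.site-a.example;   # hypothetical domain
    root        /opt/www/site-a;      # hypothetical document root
    index       index.html;
}

server {
    listen      80;
    server_name www.site-b.example;   # hypothetical domain
    root        /opt/www/site-b;      # hypothetical document root
    index       index.html;
}
```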


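A minimal sketch of a weighted reverse-proxy configuration like the one described next. It assumes that backend1.example.com and backend2.example.com resolve when Nginx starts; otherwise Nginx refuses to load the upstream:

```nginx
upstream backend {
    server backend1.example.com weight=3;   # more capacity: about 3 of every 4 requests
    server backend2.example.com weight=1;
}

server {
    listen 80;

    location / {
        proxy_pass http://backend;
        proxy_set_header Host $host;              # host name the client originally requested
        proxy_set_header X-Real-IP $remote_addr;  # client's IP address, for the backend's logs
    }
}
```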

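In the above configuration, Nginx forwards requests for the root path (/) to the backend web servers (backend1.example.com and backend2.example.com). backend1.example.com has a weight of 3 and backend2.example.com has a weight of 1, which indicates that backend1.example.com has more processing capacity.

When forwarding requests, Nginx also sets the Host and X-Real-IP request headers, so the backend sees the original host name and the client's real IP address. Because clients only ever connect to Nginx, the web servers' own IP addresses stay hidden.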

3. Load balancer

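When Nginx is used as a load balancer, it distributes requests evenly across multiple web servers to provide a highly concurrent, highly available service. This scenario is typical for clustered web application deployments, distributed systems, and similar setups. Here is a sample Nginx configuration: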
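A minimal sketch, assuming the three hostnames named in the explanation below resolve at startup. No balancing method is specified, so Nginx uses its default round robin:

```nginx
upstream backend {
    server backend1.example.com;
    server backend2.example.com;
    server backend3.example.com;
}

server {
    listen 80;

    location / {
        proxy_pass http://backend;                # spread requests across the three servers
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
    }
}
```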


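In the above configuration, Nginx distributes requests evenly across three web servers (backend1.example.com, backend2.example.com, and backend3.example.com), which achieves load balancing.

As in the previous example, Nginx also sets the Host and X-Real-IP request headers when forwarding, passing the original host name and the client's IP address to the backends.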

4. Cache server

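When Nginx is used as a cache server, it caches the responses returned by the web servers, which reduces the number of requests that reach them. This scenario is typically used to improve web application performance and reduce web server load. Here is a sample Nginx configuration: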
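A minimal sketch, assuming a single hypothetical backend and a shared memory zone named my_cache. The zone name and its 10 MB size are assumptions; the directory and the 60-minute validity come from the explanation below. The proxy_cache_path directive is only allowed in the http context:

```nginx
http {
    # On-disk cache location, two-level directory layout, shared memory zone for cache keys
    proxy_cache_path /var/cache/nginx/my_cache levels=1:2 keys_zone=my_cache:10m;

    upstream backend {
        server backend1.example.com;   # hypothetical web server
    }

    server {
        listen 80;

        location / {
            proxy_pass        http://backend;
            proxy_cache       my_cache;         # use the zone defined above
            proxy_cache_valid 200 302 60m;      # keep these responses for 60 minutes
        }
    }
}
```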


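In the above configuration, Nginx caches the responses returned by the web servers in the /var/cache/nginx/my_cache directory and sets the cache validity period to 60 minutes. On a cache hit, Nginx returns the cached response directly, which reduces the number of requests to the web servers.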

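In short, Nginx is highly extensible and flexible, and it can be configured for different scenarios as needed. The above are only a few examples; real deployments have many other use cases.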

5. Reverse proxy server

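When Nginx is used as a reverse proxy server, it forwards client requests to the backend web servers and relays the backend servers' responses to the clients. This scenario is typically used to hide the backend servers' real IP addresses, improve web application availability, and so on. Here is a sample Nginx configuration: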
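A minimal sketch, assuming an upstream group named backend that contains one hypothetical server. The name "backend" in proxy_pass must refer to an upstream block or to a resolvable host name:

```nginx
upstream backend {
    server backend1.example.com;   # hypothetical backend web server
}

server {
    listen 80;

    location / {
        proxy_pass http://backend;                # forward client requests to the upstream group
        proxy_set_header Host $host;              # keep the host name the client asked for
        proxy_set_header X-Real-IP $remote_addr;  # tell the backend the client's real IP
    }
}
```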


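In the above configuration, Nginx forwards client requests to http://backend and sets the Host and X-Real-IP request headers. Clients only ever connect to Nginx, so the backend servers' real IP addresses stay hidden. Meanwhile, X-Real-IP lets the backend see each client's address.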

6. WebSocket server

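When Nginx is used as a WebSocket server, it forwards client requests to the backend WebSocket servers and manages the WebSocket protocol connections. This scenario is typically used for real-time communication, games, and similar applications.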

Here is a sample Nginx configuration:
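A minimal sketch, assuming an upstream named backend with one hypothetical address. Proxying WebSocket requires HTTP/1.1 toward the backend and explicit forwarding of the Upgrade and Connection headers. These are hop-by-hop headers, which a proxy does not pass on by default:

```nginx
upstream backend {
    server backend1.example.com:8080;   # hypothetical WebSocket backend
}

server {
    listen 80;

    location / {
        proxy_pass http://backend;
        proxy_http_version 1.1;                   # the Upgrade mechanism requires HTTP/1.1
        proxy_set_header Upgrade $http_upgrade;   # pass the client's Upgrade: websocket header
        proxy_set_header Connection "upgrade";    # hop-by-hop header, so set it explicitly
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
    }
}
```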


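In the above configuration, Nginx forwards WebSocket requests to http://backend and sets the Upgrade, Connection, Host, and X-Real-IP request headers, so the WebSocket handshake and the resulting connection can pass through the proxy.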

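In summary, Nginx has many use cases, and different server functions can be configured to meet different needs. The above are only some examples; there are many other use cases in practice.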
