Technology peripherals

Technology peripherals

AI

AI

The AI exam and the public exam are just around the corner! Microsoft Chinese team releases new benchmark AGIEval, specially designed for human examinations

The AI exam and the public exam are just around the corner! Microsoft Chinese team releases new benchmark AGIEval, specially designed for human examinations

The AI exam and the public exam are just around the corner! Microsoft Chinese team releases new benchmark AGIEval, specially designed for human examinations

As language models become more and more capable, the existing evaluation benchmarks are a bit childish, and the performance of some tasks is far behind humans.

An important feature of general artificial intelligence (AGI) is the model’s generalization ability to handle human-level tasks, while traditional benchmarks that rely on artificial datasets do not accurately represent humans. ability.

Recently, Microsoft researchers released a new benchmark AGIEval, specifically used to evaluate the performance of basic models in "human-centric" Performance on standardized tests, such as the College Entrance Examination, Civil Service Examination, Law School Admission Test, Mathematics Competition, and Bar Examination.

Paper link: https://arxiv.org/pdf/2304.06364.pdf

Data link: https://github.com/microsoft/AGIEval

The researchers evaluated using the AGIEval benchmark Three state-of-the-art basic models, including GPT-4, ChatGPT and Text-Davinci-003, experimental results found that GPT-4's performance in SAT, LSAT and mathematics competitions exceeded the average human level, and the accuracy of the SAT mathematics test reached The accuracy rate of the Chinese College Entrance Examination English test reached 92.5%, indicating the extraordinary performance of the current basic model.

But GPT-4 is less adept at tasks that require complex reasoning or domain-specific knowledge, as our comprehensive analysis of model capabilities (understanding, knowledge, reasoning, and computation) reveals. Model strengths and limitations.

AGIEval Dataset

In recent years, large-scale basic models such as GPT-4 have shown very powerful capabilities in various fields, and can assist humans in processing daily events, and even It can also provide decision-making advice in professional fields such as law, medicine and finance.

In other words, artificial intelligence systems are gradually approaching and achieving artificial general intelligence (AGI).

But as AI gradually integrates into daily life, how to evaluate the human-centered generalization ability of models, identify potential flaws, and ensure that they can effectively handle complex, human-centered tasks, and Assessing reasoning skills to ensure reliability and trustworthiness in different contexts is critical.

The researchers constructed the AGIEval data set mainly following two design principles:

1. Emphasis on human brain level Cognitive Tasks

#The main goal of "human-centered" design is to center on tasks closely related to human cognition and problem solving, and in a more Assess the generalization ability of the underlying model in a meaningful and comprehensive manner.

To achieve this goal, the researchers selected a variety of official, public, high-standard admissions and qualifying exams that meet the needs of general human test takers, including college admissions exams, law school admissions exams, math exams, bar exams, and state civil service exams that are taken every year by millions of people seeking to enter higher education or a new career path.

By adhering to these officially recognized standards for assessing human-level capabilities, AGIEval ensures that assessments of model performance are directly related to human decision-making and cognitive abilities.

2. Relevance to real-world scenarios

By selecting from high standards The tasks of entrance and qualifying examinations ensure that assessment results reflect the complexity and practicality of the challenges that individuals often encounter in different fields and contexts.

This approach not only measures the performance of the model in terms of human cognitive abilities, but also provides a better understanding of applicability and effectiveness in real life, i.e. helps in development Develop artificial intelligence systems that are more reliable, more practical, and more suitable for solving a wide range of real-world problems.

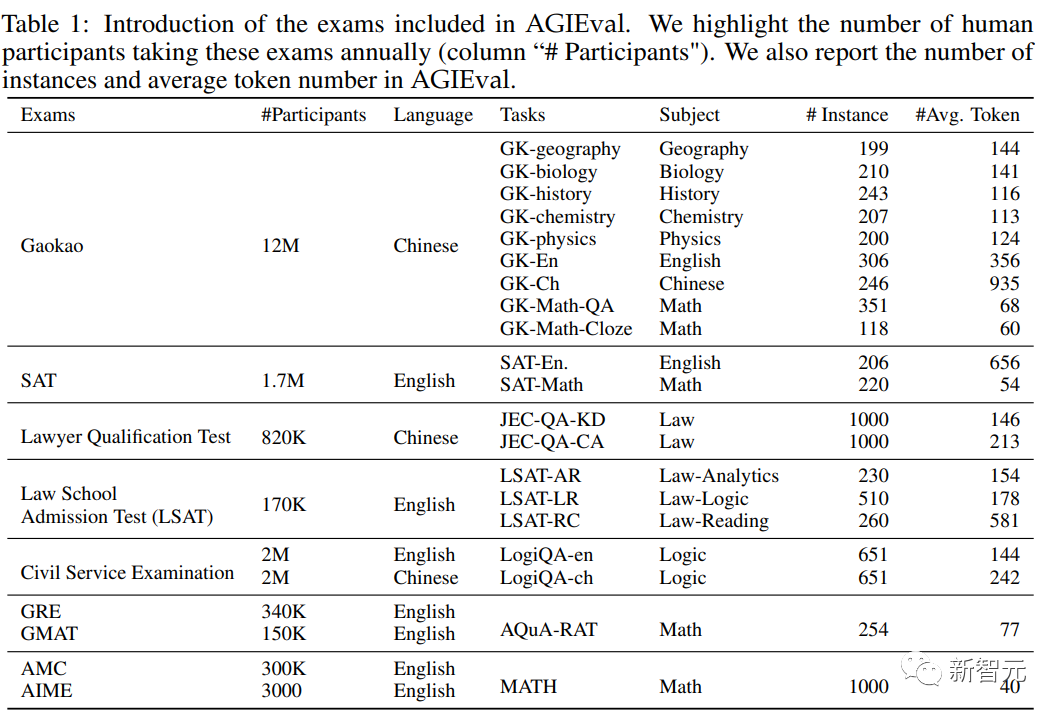

# Based on the above design principles, researchers selected a variety of standardized, high-quality exams that emphasize human-level reasoning and real-world relevance, Specifically include:

1. General College Entrance Examination

College Entrance Examination includes various A subject that requires critical thinking, problem-solving and analytical skills and is ideal for assessing the performance of large language models in relation to human cognition.

Specifically includes the Graduate Record Examination (GRE), the Academic Assessment Test (SAT) and the Chinese College Entrance Examination (Gaokao), which can assess the general abilities and subject-specific knowledge of students seeking admission to higher education institutions. .

The data set collects exams corresponding to the 8 subjects of the Chinese College Entrance Examination: history, mathematics, English, Chinese, geography, biology, chemistry and physics; select math questions from the GRE; English and mathematics subjects were selected from the SAT to construct a benchmark data set.

2. Law School Admission Test

Law School Admission Test, such as LSAT, Designed to measure the reasoning and analytical abilities of future law students, the exam includes sections such as logical reasoning, reading comprehension, and analytical reasoning. It requires test takers to analyze complex information and draw accurate conclusions. These tasks can assess the role of language models in legal reasoning. and analytical skills.

#3. The Bar Examination

can evaluate the legal proficiency of an individual pursuing a legal career Knowledge, analytical skills and ethical understanding. The exam covers a wide range of legal topics, including constitutional law, contract law, criminal law and property law, and requires candidates to demonstrate their ability to effectively apply legal principles and reasoning. This test can demonstrate professional legal knowledge and ethical judgment. Evaluate the performance of language models in the context of

4. Graduate Management Admission Test (GMAT)

GMAT is a standardized The exam can assess the analytical, quantitative, verbal and comprehensive reasoning abilities of future business school graduate students. It consists of analytical writing assessment, comprehensive reasoning, quantitative reasoning and verbal reasoning. It evaluates the test taker's critical thinking, analyzing data and effective communication. Ability.

5. High School Mathematics Competitions

These competitions cover a wide range of mathematical topics, Includes number theory, algebra, geometry, and combinatorics, and often presents non-routine problems that require creative solutions.

Specifically includes the American Mathematics Competition (AMC) and the American Invitational Mathematics Examination (AIME), which can test students’ mathematical ability, creativity and problem-solving ability, and can further evaluate language model processing Ability to solve complex and creative mathematical problems, and the ability of models to generate novel solutions.

#6. The Domestic Civil Service Examination

can assess the qualifications of individuals seeking entry into the civil service Competencies and skills, the examination includes assessment of general knowledge, reasoning ability, language skills, and expertise in specific subjects related to the roles and responsibilities of various civil service positions in China. It can measure the performance of language models in the context of public administration, and their Potential for policy development, decision-making and public service delivery processes.

Evaluation results

The selected models include:

ChatGPT, Dialogue developed by OpenAI A new artificial intelligence model that can engage in user interactions and dynamic conversations, trained using a massive instruction data set and further tuned through reinforcement learning with human feedback (RLHF), enabling it to provide contextual and coherent content consistent with human expectations. reply.

GPT-4, as the fourth generation GPT model, contains a wider range of knowledge base and exhibits human-level performance in many application scenarios. GPT-4 was repeatedly tweaked using adversarial testing and ChatGPT, resulting in significant improvements in factuality, bootability, and compliance with the rules.

Text-Davinci-003 is an intermediate version between GPT-3 and GPT-4, which is better than GPT after fine-tuning through instructions -3 performs better.

In addition, the average score and the highest score of human test takers were also reported in the experiment as the human level limit for each task, but they do not fully represent what humans may have. Range of skills and knowledge.

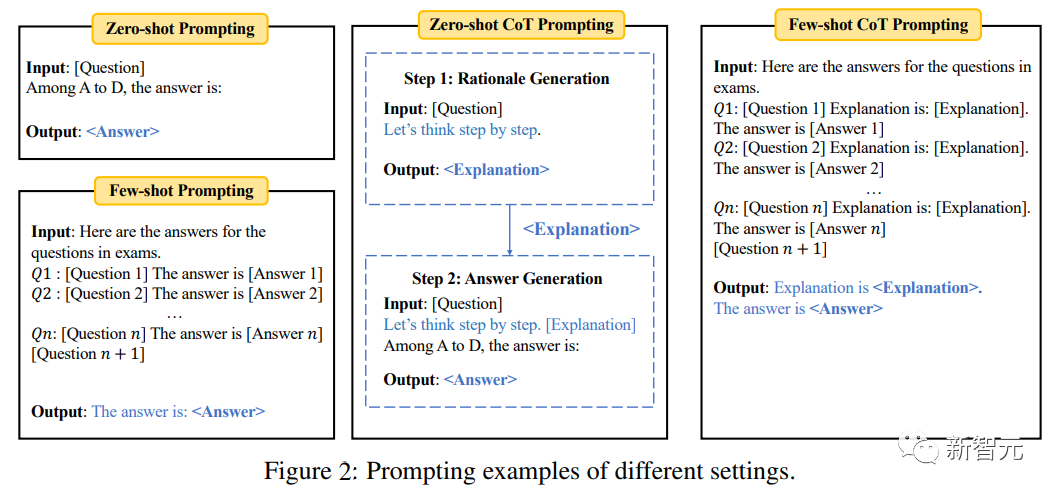

Zero-shot/Few-shot evaluation

In the setting of zero samples, the model directly evaluates the problem Evaluation; in few-shot tasks, a small number of examples (such as 5) from the same task are input before evaluation on the test samples.

In order to further test the reasoning ability of the model, the chain of thought (CoT) prompt was also introduced in the experiment, that is, first enter the prompt "Let's think step by step" to generate an explanation for a given question. Then enter the prompt "Explanation is" to generate the final answer based on the explanation.

The "multiple-choice questions" in the benchmark use standard classification accuracy; the "fill-in-the-blank questions" use exact matching (EM ) and F1 indicator.

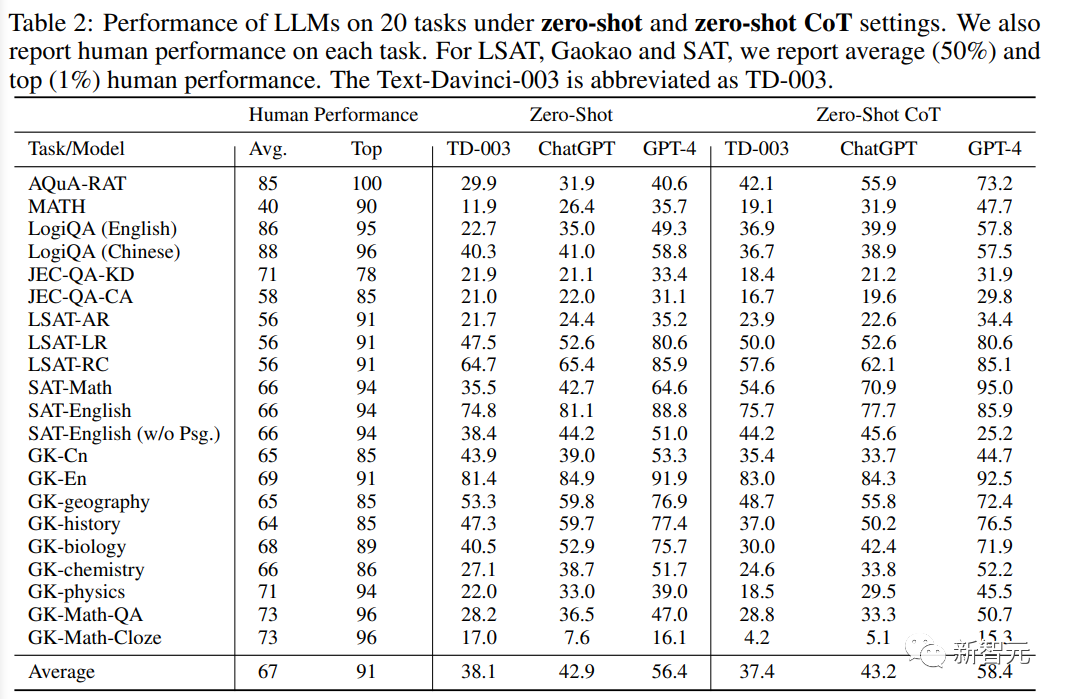

It can be found from the experimental results:

1. GPT-4 is significantly better than its similar products in all task settings, including 93.8% accuracy on Gaokao-English and 95% accuracy on SAT-MATH, indicating that GPT-4 has excellent general capabilities in handling human-centered tasks.

2. ChatGPT significantly outperforms Text-Davinci-003 in tasks that require external knowledge, such as those involving geography, biology, chemistry, physics, and mathematics , indicating that ChatGPT has a stronger knowledge base and is better able to handle tasks that require a deep understanding of a specific domain.

On the other hand, ChatGPT slightly outperforms Text-Davinci- in all assessment settings and in tasks that require pure understanding and do not rely heavily on external knowledge, such as English and LSAT tasks. 003, or equivalent results. This observation means that both models are capable of handling tasks centered on language understanding and logical reasoning without requiring specialized domain knowledge.

3. Although the overall performance of these models is good, all language models perform poorly in complex reasoning tasks, such as MATH and LSAT-AR , GK-physics, and GK-Math, highlighting the limitations of these models in handling tasks that require advanced reasoning and problem-solving skills.

The observed difficulties in handling complex inference problems provide opportunities for future research and development aimed at improving the model's general inference capabilities.

4. Compared with zero-shot learning, few-shot learning usually only brings limited performance improvements, indicating that the current zero-shot learning of large language models Shot learning capabilities are approaching few-shot learning capabilities, which also marks a big improvement over the original GPT-3 model, when few-shot performance was much better than zero-shot.

A reasonable explanation for this development is the enhancement of human adjustments and adjustments to instructions in current language models. These improvements allow the models to better understand the tasks ahead of time. meaning and context, thus allowing them to perform well even in zero-shot situations, proving the effectiveness of the instructions.

The above is the detailed content of The AI exam and the public exam are just around the corner! Microsoft Chinese team releases new benchmark AGIEval, specially designed for human examinations. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1382

1382

52

52

Centos shutdown command line

Apr 14, 2025 pm 09:12 PM

Centos shutdown command line

Apr 14, 2025 pm 09:12 PM

The CentOS shutdown command is shutdown, and the syntax is shutdown [Options] Time [Information]. Options include: -h Stop the system immediately; -P Turn off the power after shutdown; -r restart; -t Waiting time. Times can be specified as immediate (now), minutes ( minutes), or a specific time (hh:mm). Added information can be displayed in system messages.

What are the backup methods for GitLab on CentOS

Apr 14, 2025 pm 05:33 PM

What are the backup methods for GitLab on CentOS

Apr 14, 2025 pm 05:33 PM

Backup and Recovery Policy of GitLab under CentOS System In order to ensure data security and recoverability, GitLab on CentOS provides a variety of backup methods. This article will introduce several common backup methods, configuration parameters and recovery processes in detail to help you establish a complete GitLab backup and recovery strategy. 1. Manual backup Use the gitlab-rakegitlab:backup:create command to execute manual backup. This command backs up key information such as GitLab repository, database, users, user groups, keys, and permissions. The default backup file is stored in the /var/opt/gitlab/backups directory. You can modify /etc/gitlab

How to check CentOS HDFS configuration

Apr 14, 2025 pm 07:21 PM

How to check CentOS HDFS configuration

Apr 14, 2025 pm 07:21 PM

Complete Guide to Checking HDFS Configuration in CentOS Systems This article will guide you how to effectively check the configuration and running status of HDFS on CentOS systems. The following steps will help you fully understand the setup and operation of HDFS. Verify Hadoop environment variable: First, make sure the Hadoop environment variable is set correctly. In the terminal, execute the following command to verify that Hadoop is installed and configured correctly: hadoopversion Check HDFS configuration file: The core configuration file of HDFS is located in the /etc/hadoop/conf/ directory, where core-site.xml and hdfs-site.xml are crucial. use

How is the GPU support for PyTorch on CentOS

Apr 14, 2025 pm 06:48 PM

How is the GPU support for PyTorch on CentOS

Apr 14, 2025 pm 06:48 PM

Enable PyTorch GPU acceleration on CentOS system requires the installation of CUDA, cuDNN and GPU versions of PyTorch. The following steps will guide you through the process: CUDA and cuDNN installation determine CUDA version compatibility: Use the nvidia-smi command to view the CUDA version supported by your NVIDIA graphics card. For example, your MX450 graphics card may support CUDA11.1 or higher. Download and install CUDAToolkit: Visit the official website of NVIDIACUDAToolkit and download and install the corresponding version according to the highest CUDA version supported by your graphics card. Install cuDNN library:

Centos install mysql

Apr 14, 2025 pm 08:09 PM

Centos install mysql

Apr 14, 2025 pm 08:09 PM

Installing MySQL on CentOS involves the following steps: Adding the appropriate MySQL yum source. Execute the yum install mysql-server command to install the MySQL server. Use the mysql_secure_installation command to make security settings, such as setting the root user password. Customize the MySQL configuration file as needed. Tune MySQL parameters and optimize databases for performance.

Detailed explanation of docker principle

Apr 14, 2025 pm 11:57 PM

Detailed explanation of docker principle

Apr 14, 2025 pm 11:57 PM

Docker uses Linux kernel features to provide an efficient and isolated application running environment. Its working principle is as follows: 1. The mirror is used as a read-only template, which contains everything you need to run the application; 2. The Union File System (UnionFS) stacks multiple file systems, only storing the differences, saving space and speeding up; 3. The daemon manages the mirrors and containers, and the client uses them for interaction; 4. Namespaces and cgroups implement container isolation and resource limitations; 5. Multiple network modes support container interconnection. Only by understanding these core concepts can you better utilize Docker.

Centos8 restarts ssh

Apr 14, 2025 pm 09:00 PM

Centos8 restarts ssh

Apr 14, 2025 pm 09:00 PM

The command to restart the SSH service is: systemctl restart sshd. Detailed steps: 1. Access the terminal and connect to the server; 2. Enter the command: systemctl restart sshd; 3. Verify the service status: systemctl status sshd.

How to operate distributed training of PyTorch on CentOS

Apr 14, 2025 pm 06:36 PM

How to operate distributed training of PyTorch on CentOS

Apr 14, 2025 pm 06:36 PM

PyTorch distributed training on CentOS system requires the following steps: PyTorch installation: The premise is that Python and pip are installed in CentOS system. Depending on your CUDA version, get the appropriate installation command from the PyTorch official website. For CPU-only training, you can use the following command: pipinstalltorchtorchvisiontorchaudio If you need GPU support, make sure that the corresponding version of CUDA and cuDNN are installed and use the corresponding PyTorch version for installation. Distributed environment configuration: Distributed training usually requires multiple machines or single-machine multiple GPUs. Place