How a Spring Boot microservice project integrates Kafka to implement article listing and delisting

1: Quick Start for Kafka Message Sending

1. Pass String Message

(1) Send Message

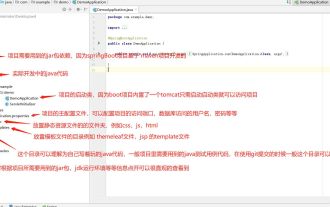

Create a controller package and write a test class that sends a message:

package com.my.kafka.controller;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class HelloController {

    @Autowired
    private KafkaTemplate<String,String> kafkaTemplate;

    @GetMapping("hello")
    public String helloProducer(){
        kafkaTemplate.send("my-topic","Hello~");
        return "ok";
    }
}

(2) Listen for messages

Write a test class to receive messages:

package com.my.kafka.listener;

import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;
import org.springframework.util.StringUtils;

@Component
public class HelloListener {

    @KafkaListener(topics = "my-topic")
    public void helloListener(String message) {
        // Spring's StringUtils is used instead of the JUnit-internal utility, which is not meant for application code
        if(StringUtils.hasText(message)) {
            System.out.println(message);
        }
    }
}

(3) Test results

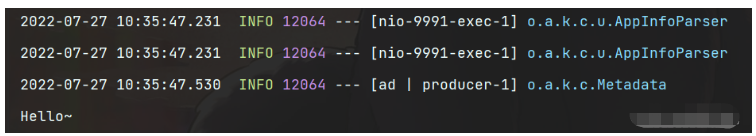

Open localhost:9991/hello in a browser, then check the console. You can see that the message has been received and consumed by the listener.

2. Passing object messages

The Spring Boot Kafka integration here is configured with StringSerializer, so an object cannot be sent directly. There are two ways to pass an object:

Method 1: Write a custom serializer. With many object types this approach is not very general, so it is not covered here.

Method 2: Convert the object to a JSON string before sending, and convert it back into an object after receiving the message. This project uses this method.

(1) Modify the producer code

@GetMapping("hello")
public String helloProducer(){
    User user = new User();
    user.setName("赵四");
    user.setAge(20);
    kafkaTemplate.send("my-topic", JSON.toJSONString(user));
    return "ok";
}

(2) Result test

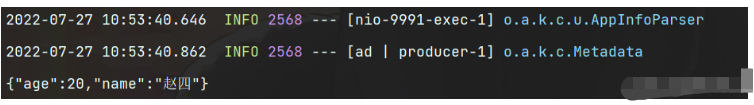

You can see that all fields of the object are received. To use the object later, you only need to convert the JSON string back into a User object.

2: Function introduction

1. Requirements analysis

A published article may contain errors or need to be withdrawn for other reasons, so the article management side provides a listing and delisting function (see the picture below). When the management side delists an article, the mobile side no longer displays it. The article becomes visible on the mobile side again only after it is re-listed.

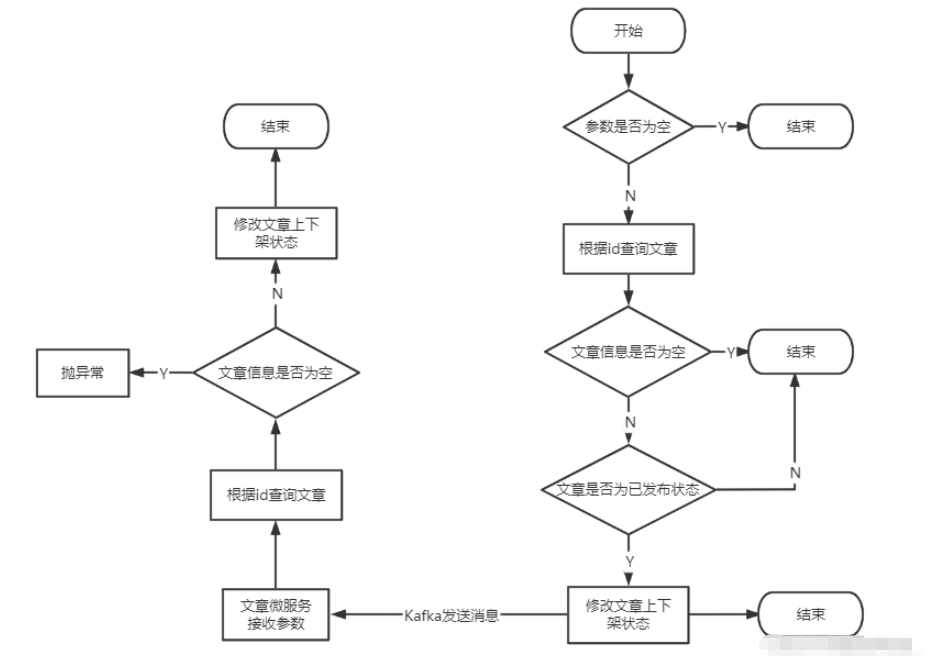

2. Logical analysis

After the back end receives the parameters from the front end, it first validates them: execution continues only if the parameters are not empty. It then queries the self-media database by the article id passed from the front end (the self-media side article id) and checks whether the article has been published, because only articles that have passed review and been published can be listed or delisted. After the self-media microservice updates the article's listing status, it sends a message to Kafka. The message is a Map holding the mobile-side article id and the enable parameter passed from the front end. The Map must be converted to a JSON string before it is sent.

The article microservice listens for the Kafka message, converts the JSON string back into a Map, and reads the parameters to update the listing status of the mobile-side article.
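The producer-side checks described above can be sketched in plain Java, independent of Spring and Kafka. This is only an illustration under stated assumptions: the `Article` class, the `PUBLISHED` code of 9, and the `buildMessage` helper are hypothetical names, and the hand-written JSON string stands in for the `JSON.toJSONString(map)` call the project uses.

```java
import java.util.Optional;

public class UpDownMessageSketch {

    // Hypothetical status code for "published"; the real value lives in WmNews.Status.
    static final short PUBLISHED = 9;

    /** Minimal stand-in for the self-media article row (hypothetical fields). */
    static final class Article {
        final long articleId; // id of the corresponding mobile-side article
        final short status;   // publication status

        Article(long articleId, short status) {
            this.articleId = articleId;
            this.status = status;
        }
    }

    /** Returns the JSON payload to send, or empty if the request must be rejected. */
    static Optional<String> buildMessage(Article article, Integer enable) {
        if (article == null || enable == null) {
            return Optional.empty(); // missing parameter or unknown article
        }
        if (article.status != PUBLISHED) {
            return Optional.empty(); // only published articles can be listed or delisted
        }
        // Hand-written JSON for two numeric fields; a JSON library would normally do this.
        return Optional.of("{\"articleId\":" + article.articleId + ",\"enable\":" + enable + "}");
    }

    public static void main(String[] args) {
        Article published = new Article(1001L, PUBLISHED);
        Article draft = new Article(1002L, (short) 1);
        System.out.println(buildMessage(published, 0).orElse("rejected"));
        System.out.println(buildMessage(draft, 0).orElse("rejected"));
    }
}
```

Keeping the validation separate from the send call makes the rejection rules easy to test without a running broker.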

3: Preparation

1. Introduce dependencies

<!-- kafka -->
<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
</dependency>
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
</dependency>

2. Define constants

package com.my.common.constans;

public class WmNewsMessageConstants {
    public static final String WM_NEWS_UP_OR_DOWN_TOPIC="wm.news.up.or.down.topic";
}

3. Kafka configuration information

Because I use Nacos as the registration center, the Kafka configuration can be placed in Nacos.

(1) Self-media terminal configuration

spring:
  kafka:
    bootstrap-servers: 4.234.52.122:9092
    producer:
      retries: 10
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer

(2) Mobile terminal configuration

spring:
  kafka:
    bootstrap-servers: 4.234.52.122:9092
    consumer:
      group-id: ${spring.application.name}-test
      key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      value-deserializer: org.apache.kafka.common.serialization.StringDeserializer

4: Code implementation

1. Self-media terminal

@Autowired
private KafkaTemplate<String,String> kafkaTemplate;

/**
 * List or delist an article
 * @param id     self-media article id
 * @param enable listing flag passed from the front end
 * @return operation result
 */
@Override
public ResponseResult downOrUp(Integer id,Integer enable) {
    log.info("Listing or delisting article...");
    if(id == null || enable == null) {
        return ResponseResult.errorResult(AppHttpCodeEnum.PARAM_INVALID);
    }
    // Get the article by id
    WmNews news = getById(id);
    if(news == null) {
        return ResponseResult.errorResult(AppHttpCodeEnum.DATA_NOT_EXIST,"Article does not exist");
    }
    // Only published articles can be listed or delisted
    Short status = news.getStatus();
    if(!status.equals(WmNews.Status.PUBLISHED.getCode())) {
        return ResponseResult.errorResult(AppHttpCodeEnum.PARAM_INVALID,"Article is not published and cannot be listed or delisted");
    }
    // Update the listing flag; keep the previous value so the log compares like with like
    Short previousEnable = news.getEnable();
    news.setEnable(enable.shortValue());
    updateById(news);
    log.info("Changed article listing flag {}-->{}",previousEnable,news.getEnable());
    // Send the message to Kafka as a JSON string
    Map<String, Object> map = new HashMap<>();
    map.put("articleId",news.getArticleId());
    map.put("enable",enable.shortValue());
    kafkaTemplate.send(WmNewsMessageConstants.WM_NEWS_UP_OR_DOWN_TOPIC,JSON.toJSONString(map));
    log.info("Message sent to Kafka...");
    return ResponseResult.okResult(AppHttpCodeEnum.SUCCESS);
}

2. Mobile terminal

(1) Set up the listener

package com.my.article.listener;

import com.baomidou.mybatisplus.core.toolkit.StringUtils;
import com.my.article.service.ApArticleService;
import com.my.common.constans.WmNewsMessageConstants;
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Component;
import org.springframework.kafka.annotation.KafkaListener;

@Slf4j
@Component
public class EnableListener {

    @Autowired
    private ApArticleService apArticleService;

    @KafkaListener(topics = WmNewsMessageConstants.WM_NEWS_UP_OR_DOWN_TOPIC)
    public void downOrUp(String message) {
        if(StringUtils.isNotBlank(message)) {
            log.info("Received message {}",message);
            apArticleService.downOrUp(message);
        }
    }
}

(2) Get the message and modify the article status

/**
 * List or delist an article
 * @param message JSON string carrying articleId and enable
 * @return operation result
 */
@Override
public ResponseResult downOrUp(String message) {
    Map map = JSON.parseObject(message, Map.class);
    // Get the article id; cast through Number because the parser may return Integer or Long
    Long articleId = ((Number) map.get("articleId")).longValue();
    // Get the target listing flag
    Integer enable = ((Number) map.get("enable")).intValue();
    // Query the article configuration
    ApArticleConfig apArticleConfig = apArticleConfigMapper.selectOne(
            Wrappers.<ApArticleConfig>lambdaQuery().eq(ApArticleConfig::getArticleId, articleId));
    if(apArticleConfig != null) {
        // List
        if(enable == 1) {
            log.info("Article re-listed");
            apArticleConfig.setIsDown(false);
            apArticleConfigMapper.updateById(apArticleConfig);
        }
        // Delist
        if(enable == 0) {
            log.info("Article delisted");
            apArticleConfig.setIsDown(true);
            apArticleConfigMapper.updateById(apArticleConfig);
        }
    }
    else {
        throw new RuntimeException("Article does not exist");
    }
    return ResponseResult.okResult(AppHttpCodeEnum.SUCCESS);
}
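One detail of the consumer side is worth illustrating: how numeric fields come back after the JSON string is parsed into a Map. JSON parsers that target an untyped Map commonly return `Integer` for small numbers and `Long` for large ones, so a direct `(Long)` cast can throw `ClassCastException` when the id happens to be small. The following is a minimal sketch in plain Java, not the project's code: the `parsed` map simulates the parser's output, and `readLong` and `toIsDown` are hypothetical helpers.

```java
import java.util.HashMap;
import java.util.Map;

public class MessageFieldsSketch {

    /** Reads a numeric field as long whether the parser produced Integer or Long. */
    static long readLong(Map<String, Object> parsed, String key) {
        Object value = parsed.get(key);
        if (!(value instanceof Number)) {
            throw new IllegalArgumentException("Missing or non-numeric field: " + key);
        }
        return ((Number) value).longValue();
    }

    /** enable == 1 means listed (isDown = false); enable == 0 means delisted (isDown = true). */
    static boolean toIsDown(long enable) {
        if (enable == 1) {
            return false;
        }
        if (enable == 0) {
            return true;
        }
        throw new IllegalArgumentException("Unexpected enable value: " + enable);
    }

    public static void main(String[] args) {
        // Simulated parser output: a small id arrives as Integer, not Long.
        Map<String, Object> parsed = new HashMap<>();
        parsed.put("articleId", 42);
        parsed.put("enable", 0);

        long articleId = readLong(parsed, "articleId");
        boolean isDown = toIsDown(readLong(parsed, "enable"));
        System.out.println(articleId + " isDown=" + isDown);
    }
}
```

Rejecting unexpected `enable` values explicitly, rather than silently doing nothing, makes malformed messages visible in the consumer's logs.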
