1. Solution

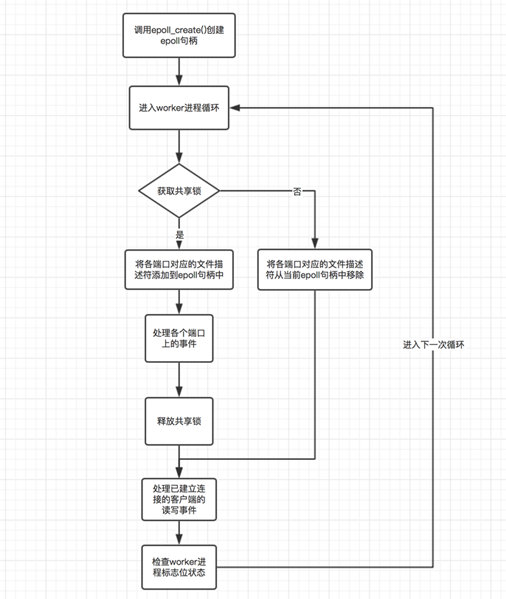

When each worker process is created, the ngx_worker_process_init() method is called to initialize it. A very important step in this initialization is that each worker process calls epoll_create() to create its own epoll handle. Each port that needs to be monitored has a corresponding file descriptor, and a worker process receives accept events for that port (triggered when a client establishes a connection) only after it has added the file descriptor to its own epoll handle through epoll_ctl(). It follows that if a worker process has not added the file descriptor of a monitored port to its epoll handle, the corresponding event cannot be triggered in that process. Based on this principle, nginx uses a shared lock to control whether the current process is allowed to add the monitored ports to its epoll handle. In other words, only the process that acquires the lock listens on the target ports. This ensures that, in the normal case, only one worker process is triggered each time an event occurs. The following figure is a schematic diagram of the work cycle of the worker process:

One point about the process in the figure needs explaining. Each time a worker process enters the loop, it first tries to acquire the shared lock. If it fails, it removes the file descriptors of the monitored ports from its own epoll handle (conceptually this happens whether or not they are currently registered; in the source code below the removal is skipped when ngx_accept_mutex_held is 0). The main purpose of this ordering is to avoid losing client connection events. It may cause a small amount of thundering herd, but the problem is not serious.

Consider the alternative that theory would suggest: removing the file descriptors of the listening ports from the epoll handle at the moment the current process releases the lock. In that case, until the next worker process acquires the lock, no epoll handle would be monitoring the file descriptors of the ports, and events arriving during that window would be lost. If, on the other hand, the monitored file descriptors are removed only when lock acquisition fails, as shown in the figure, the removal is safe: a failed acquisition means that some other process must currently be monitoring these file descriptors.

This approach does cause one problem. According to the figure, when the current process finishes one iteration of the loop, it releases the lock and then handles other events, but it does not remove the monitored file descriptors while doing so. If another process acquires the lock and starts monitoring the file descriptors at this point, two processes are monitoring them at the same time, so a client connection event will trigger two worker processes. This problem is tolerable for two main reasons:

First, this kind of thundering herd wakes up only a small number of worker processes, which is much better than waking up all worker processes every time.

Second, the problem arises because the current process releases the lock without removing the monitored file descriptors. However, what the worker process mainly does after releasing the lock is process the read and write events of client connections and check flag bits, and this takes very little time. Once that is done, it tries to acquire the lock again, and if that fails it removes the monitored file descriptors. By comparison, the worker process that acquired the lock spends much longer waiting for client connection events, so the probability of a thundering herd is still relatively small.
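The registration and removal steps described in this section can be sketched with the Linux epoll API. This is a minimal illustration, not nginx's code: the helper names worker_epoll_create(), worker_watch(), worker_unwatch() and worker_poll() are hypothetical, and tolerating EEXIST and ENOENT is an assumption made here so that adding or removing twice is harmless.

```c
#include <errno.h>
#include <stddef.h>
#include <sys/epoll.h>
#include <unistd.h>

/* Create the worker's private epoll handle; returns -1 on failure. */
int worker_epoll_create(void)
{
    return epoll_create1(0);
}

/* Start watching fd for readability. For a listening socket,
 * readability means a client connection is waiting to be accepted. */
int worker_watch(int epfd, int fd)
{
    struct epoll_event ev = {0};

    ev.events = EPOLLIN;
    ev.data.fd = fd;

    /* Assumption of this sketch: an already registered fd is not an error. */
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev) == -1 && errno != EEXIST) {
        return -1;
    }
    return 0;
}

/* Stop watching fd. Removing an fd that was never added is tolerated. */
int worker_unwatch(int epfd, int fd)
{
    if (epoll_ctl(epfd, EPOLL_CTL_DEL, fd, NULL) == -1 && errno != ENOENT) {
        return -1;
    }
    return 0;
}

/* Return the number of ready descriptors without blocking. */
int worker_poll(int epfd)
{
    struct epoll_event ready[8];

    return epoll_wait(epfd, ready, 8, 0);
}
```

A worker that wins the lock would call worker_watch() on each listening descriptor, and a worker that fails to get the lock would call worker_unwatch(). A descriptor that is not registered in a worker's epoll handle never shows up in that worker's worker_poll() result, which is the property the lock-based scheme relies on.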

2. Source code explanation

A worker process handles events mainly in the ngx_process_events_and_timers() method. Let's take a look at how this method handles the entire process. The following is the source code of this method:

    void ngx_process_events_and_timers(ngx_cycle_t *cycle) {
        ngx_uint_t flags;
        ngx_msec_t timer, delta;

        // (The code that initializes timer and flags is omitted in this skeleton.)

        if (ngx_trylock_accept_mutex(cycle) == NGX_ERROR) {
            return;
        }

        // Start processing events. For the kqueue model this points to
        // ngx_kqueue_process_events(); for the epoll model it points to
        // ngx_epoll_process_events(). Its main job is to fetch the list of
        // events from the event model and add each event to either the
        // ngx_posted_accept_events queue or the ngx_posted_events queue.
        (void) ngx_process_events(cycle, timer, flags);

        // Process accept events, handing them to ngx_event_accept()
        // in ngx_event_accept.c.
        ngx_event_process_posted(cycle, &ngx_posted_accept_events);

        // Release the lock.
        if (ngx_accept_mutex_held) {
            ngx_shmtx_unlock(&ngx_accept_mutex);
        }

        // An event that does not need to go through an event queue is handled
        // directly. An accept event is handed to ngx_event_accept() in
        // ngx_event_accept.c; a read event is handed to
        // ngx_http_wait_request_handler() in ngx_http_request.c; an event whose
        // processing is complete is finally handed to
        // ngx_http_keepalive_handler() in ngx_http_request.c.
        // Process all events other than accept events.
        ngx_event_process_posted(cycle, &ngx_posted_events);
    }

In the code above, we have omitted most of the checks and kept only the skeleton. First, the worker process calls ngx_trylock_accept_mutex() to try to obtain the lock. If it obtains the lock, it listens on the file descriptor corresponding to each port. Next, ngx_process_events() is called to process the events monitored by the epoll handle, and the posted accept events are handled. The shared lock is then released, and finally the read and write events of connected clients are processed. Let's take a look at how the ngx_trylock_accept_mutex() method obtains the shared lock:

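    ngx_int_t ngx_trylock_accept_mutex(ngx_cycle_t *cycle) {
        // Try to acquire the shared lock with a CAS operation.
        if (ngx_shmtx_trylock(&ngx_accept_mutex)) {

            // ngx_accept_mutex_held == 1 means the current process had
            // already acquired the lock.
            if (ngx_accept_mutex_held && ngx_accept_events == 0) {
                return NGX_OK;
            }

            // This mainly registers the listening file descriptors with the
            // event mechanism, for example the change_list array of the
            // kqueue model.
            // When nginx starts the worker processes, each worker by default
            // inherits the listening sockets of the master process. As a
            // result, when a client event arrives on a port, every process
            // listening on that port is woken up, but only one worker can
            // handle the event; the others find that the event is stale and
            // go back to waiting. This is the "thundering herd" phenomenon.
            // nginx addresses it with this shared lock: only the worker that
            // acquires the lock handles client connection events. In
            // practice, the worker re-adds the listen events for each port
            // while acquiring the lock, and the other workers do not listen.
            // So, apart from the brief overlap described in section 1, only
            // one worker listens on the ports at any time, which avoids the
            // thundering herd problem.
            // ngx_enable_accept_events() re-adds the listen events for each
            // port to the current process.
            if (ngx_enable_accept_events(cycle) == NGX_ERROR) {
                ngx_shmtx_unlock(&ngx_accept_mutex);
                return NGX_ERROR;
            }

            // Mark that the lock has been acquired.
            ngx_accept_events = 0;
            ngx_accept_mutex_held = 1;

            return NGX_OK;
        }

        // Acquiring the lock failed, so reset ngx_accept_mutex_held and
        // remove the listen events.
        if (ngx_accept_mutex_held) {
            // If ngx_accept_mutex_held is 1 for the current process, remove
            // its listen events on each port and reset the flag to 0.
            if (ngx_disable_accept_events(cycle, 0) == NGX_ERROR) {
                return NGX_ERROR;
            }

            ngx_accept_mutex_held = 0;
        }

        return NGX_OK;
    }

The code above essentially does three things: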

It calls ngx_shmtx_trylock() to try to obtain the shared lock with a CAS (compare-and-swap) operation;

If the lock is obtained, it calls ngx_enable_accept_events() to listen on the file descriptors of the target ports;

If the lock is not obtained, it calls ngx_disable_accept_events() to remove the monitored file descriptors.
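The three steps above can be modelled in a few lines of C11. This is a simplified sketch under stated assumptions, not nginx's implementation: worker_t, lock_try(), lock_release(), worker_trylock() and worker_unlock() are hypothetical names, the lock is an ordinary atomic_long rather than a word in shared memory, and the listening flag stands in for the real epoll registration.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical per-worker state for this sketch. */
typedef struct {
    long pid;        /* identifies the worker; must be non-zero */
    bool held;       /* plays the role of ngx_accept_mutex_held */
    bool listening;  /* true while the listening fds are registered */
} worker_t;

/* Step 1: try to take the lock with one compare-and-swap (0 -> pid). */
bool lock_try(atomic_long *lock, long pid)
{
    long expected = 0;

    return atomic_compare_exchange_strong(lock, &expected, pid);
}

/* Release the lock only if this pid owns it (pid -> 0). */
bool lock_release(atomic_long *lock, long pid)
{
    long expected = pid;

    return atomic_compare_exchange_strong(lock, &expected, 0);
}

/* One pass of the trylock logic for a worker. */
void worker_trylock(worker_t *w, atomic_long *lock)
{
    if (lock_try(lock, w->pid)) {
        w->listening = true;   /* step 2: start listening */
        w->held = true;
        return;
    }

    if (w->held) {             /* step 3: lock lost, stop listening */
        w->listening = false;
        w->held = false;
    }
}

/* Release the lock at the end of an iteration. The held and listening
 * flags are deliberately left set, which produces the brief overlap
 * discussed in section 1. */
void worker_unlock(worker_t *w, atomic_long *lock)
{
    if (w->held) {
        lock_release(lock, w->pid);
    }
}
```

Running two workers through this model reproduces the window described in section 1: after worker A unlocks and worker B takes the lock, both have listening set, and A clears its flag only on its next failed worker_trylock() call.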
