Six inertial sensors and a mobile phone realize human body motion capture, positioning and environment reconstruction

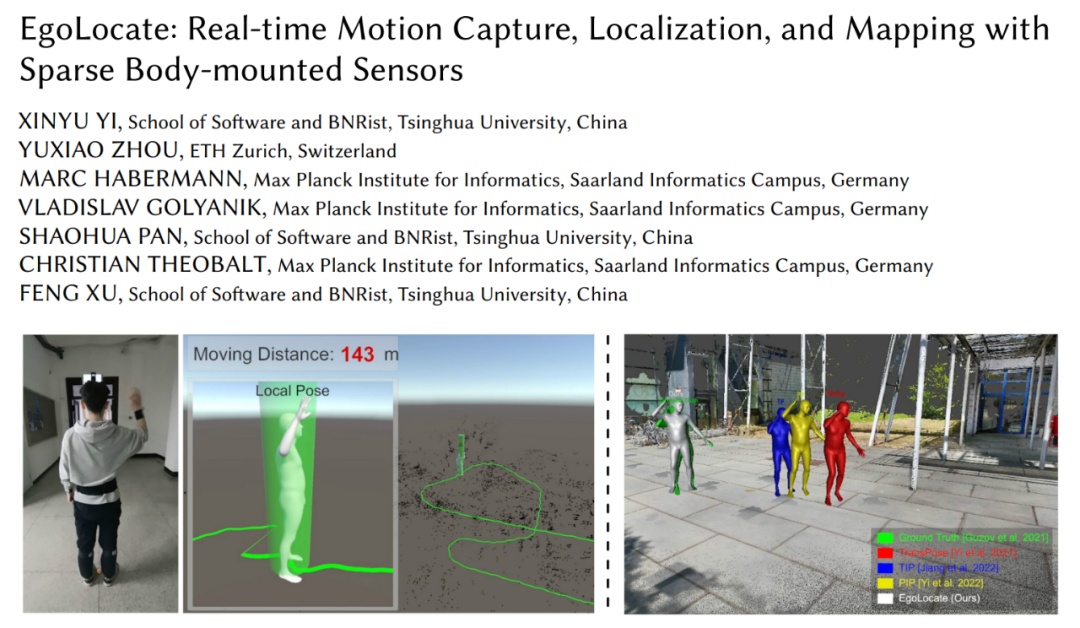

This article attempts to open the "eyes" of inertial motion capture. By additionally wearing a mobile phone camera, our algorithm gains "vision": while capturing human movement, it also perceives the surrounding environment, which enables precise localization of the human body. This research comes from Xu Feng's team at Tsinghua University and has been accepted by SIGGRAPH 2023, the top international conference in computer graphics.

- Paper address: https://arxiv.org/abs/2305.01599

- Project homepage: https://xinyu-yi.github.io/EgoLocate/
- Open source code: https://github.com/Xinyu-Yi/EgoLocate

With the development of computer technology, human body perception and environment perception have become two indispensable parts of modern intelligent applications. Human body sensing captures body movements and actions, enabling applications such as human-computer interaction, intelligent medical care, and games. Environment perception reconstructs scene models, enabling applications such as 3D reconstruction, scene analysis, and intelligent navigation. The two tasks are interdependent, yet most existing technologies, in China and abroad, handle them independently. The research team believes that jointly perceiving human movement and the environment is essential in scenarios where humans interact with their surroundings. First, sensing the body and the environment simultaneously can make human interaction with the environment more efficient and safer; for example, a self-driving car that senses both the driver's behavior and the surrounding environment can better ensure safe and smooth driving. Second, it enables a higher level of human-computer interaction; for example, in virtual and augmented reality, perceiving the user's actions together with the surroundings creates a more immersive experience. Simultaneous perception of the human body and the environment can therefore make human-computer interaction and environment-related applications more efficient, safer, and smarter.

Based on this, Xu Feng's team at Tsinghua University proposed a technique for simultaneous real-time human motion capture, localization, and environment mapping that uses only 6 inertial measurement units (IMUs) and 1 monocular color camera (shown in Figure 1).
Inertial motion capture (mocap) exploits "internal" information such as body motion signals, while simultaneous localization and mapping (SLAM) relies mainly on "external" information, namely the environment captured by the camera. The former is stable, but because it lacks an external reference, global position drift accumulates during long movements. The latter can estimate the global position in the scene with high accuracy, but it easily loses tracking when the visual information is unreliable (for example, in textureless regions or under occlusion).

This work therefore combines these two complementary technologies (mocap and SLAM). By fusing human motion priors with visual tracking in several key algorithmic components, it achieves robust and accurate human localization and map reconstruction.

Figure 1 This article proposes simultaneous human motion capture and environment mapping technology

Specifically, six IMUs are worn on the person's limbs, head, and back, and a monocular color camera is fixed to the head, facing outward. This design is inspired by real human behavior: in a new environment, people observe their surroundings with their eyes to determine where they are and then plan their movements within the scene. In our system, the monocular camera acts as the human eye, providing visual signals for real-time scene reconstruction and self-localization, while the IMUs measure the motion of the limbs and head. This setup is compatible with existing VR equipment: the camera in a VR headset plus additional IMUs can perform stable, drift-free full-body motion capture and environment perception.

For the first time, a system achieves simultaneous human motion capture and sparse point reconstruction of the environment using only 6 IMUs and 1 camera. It runs at 60 fps on a CPU, and its accuracy surpasses state-of-the-art methods in both fields. Real-time examples of the system are shown in Figures 2 and 3.

Figure 2 During a complex 70-meter motion sequence, the system accurately tracks the body's position and captures its movements without obvious position drift.

Figure 3 A real-time example of this system simultaneously reconstructing human motion and scene sparse points.

Introduction to the method

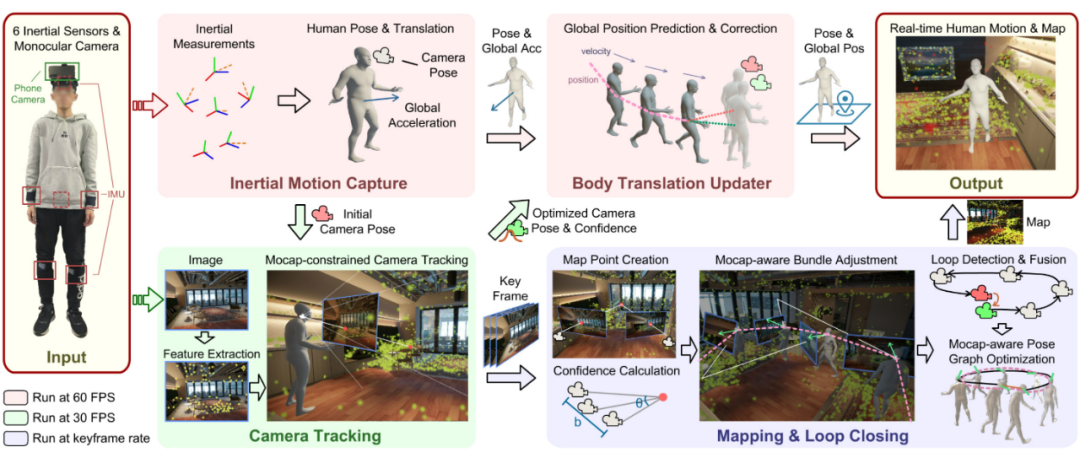

Figure 4 Overall pipeline of the method

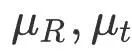

The system reconstructs human motion and a sparse 3D point cloud of the scene in real time from the orientation and acceleration measurements of the six IMUs and the color images captured by the camera, and it localizes the person in the scene. We design a deeply coupled framework to fully exploit the complementary strengths of sparse inertial motion capture and SLAM. In this framework, human motion priors are integrated into several key components of SLAM, and the SLAM localization results are in turn fed back to human motion capture. As shown in Figure 4, the system is divided by function into four modules: the inertial motion capture module (Inertial Motion Capture), the camera tracking module (Camera Tracking), the mapping and loop closing module (Mapping & Loop Closing), and the human motion update module (Body Translation Updater). Each module is introduced below.

Inertial Motion Capture

The inertial motion capture module estimates human pose and motion from the 6 IMU measurements. Its design is based on our previous work PIP [1], but here we no longer assume that the scene is flat ground; instead, we capture free human motion in 3D space. To this end, we adapt the PIP optimization algorithm accordingly.

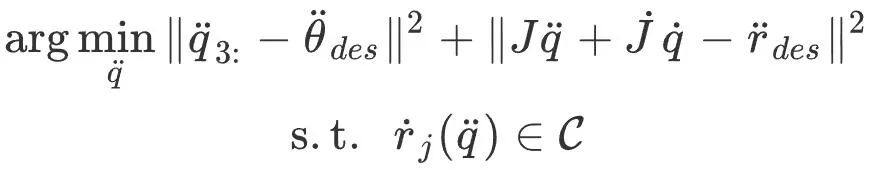

Specifically, this module first uses a multi-stage recurrent neural network to predict joint rotations, joint velocities, and foot-ground contact probabilities from the IMU measurements. The dual PD controller proposed in PIP then computes the desired angular and linear accelerations of the joints. Next, the module optimizes the pose acceleration of the body so that it achieves the accelerations given by the PD controller while satisfying the contact constraint C.

In this optimization, J is the joint Jacobian matrix, and the constraint C requires the linear velocity of any foot in contact with the ground to be small, so that no sliding occurs. For how this quadratic programming problem is solved, please refer to PIP [1]. Integrating the optimized pose acceleration yields the body's pose and motion, from which the pose of the head-mounted camera is obtained for the subsequent modules.
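To make the PD step concrete, here is a minimal Python sketch of a generic PD law that turns a target joint state into a desired acceleration, followed by semi-implicit Euler integration. It is an illustration under stated assumptions, not PIP's dual PD controller: the gains `kp` and `kd`, the time step, and the function names are hypothetical, and the contact-constrained quadratic program is omitted entirely.

```python
from typing import List, Tuple


def pd_acceleration(target_pos: List[float], cur_pos: List[float],
                    target_vel: List[float], cur_vel: List[float],
                    kp: float, kd: float) -> List[float]:
    """Generic PD law: a = kp * (q_target - q) + kd * (v_target - v).

    kp and kd are hypothetical gains; PIP's dual PD controller is not reproduced here.
    """
    return [kp * (tq - q) + kd * (tv - v)
            for tq, q, tv, v in zip(target_pos, cur_pos, target_vel, cur_vel)]


def integrate(pos: List[float], vel: List[float], acc: List[float],
              dt: float) -> Tuple[List[float], List[float]]:
    """Semi-implicit Euler: update velocity first, then position with the new velocity."""
    new_vel = [v + a * dt for v, a in zip(vel, acc)]
    new_pos = [q + v * dt for q, v in zip(pos, new_vel)]
    return new_pos, new_vel


if __name__ == "__main__":
    # Drive a single joint coordinate from 0 toward a target of 1 at 60 Hz.
    kp = 20.0
    kd = 2.0 * kp ** 0.5  # critical damping for a unit-mass system
    pos, vel = [0.0], [0.0]
    for _ in range(300):  # 5 seconds of simulated time
        acc = pd_acceleration([1.0], pos, [0.0], vel, kp, kd)
        pos, vel = integrate(pos, vel, acc, 1.0 / 60.0)
    print(round(pos[0], 3))
```

Critical damping is chosen here only so the toy settles without overshoot; in the real system the PD targets come from the network predictions.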

Camera Tracking

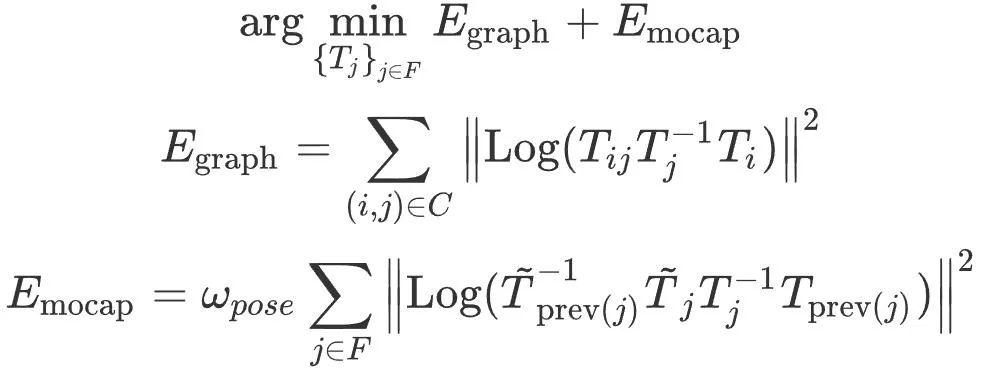

The camera tracking module takes as input the initial camera pose given by the inertial motion capture module and the color image captured by the camera, and uses the image information to optimize the camera pose and eliminate position drift. This module is built on ORB-SLAM3 [2]. It first extracts ORB feature points from the image and matches them, by feature similarity, against the reconstructed sparse map points (described below) to obtain 2D-3D point pairs; it then optimizes the camera pose by minimizing the reprojection error. Optimizing the reprojection error alone, however, is vulnerable to false matches, which can lead to poor camera pose estimates. This work therefore integrates the human motion prior into the camera tracking optimization: the inertial motion capture result serves as a constraint on the reprojection error optimization, which helps detect and remove erroneous feature-to-map-point matches promptly.

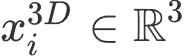

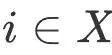

Consider the world coordinates of each map point, the pixel coordinates of its matched 2D image feature point, the set of all matches, and the initial camera pose before optimization (given by motion capture). The module then optimizes the camera pose R, t. The objective sums, over all matches, a robust Huber kernel applied to the reprojection error, where each map point is mapped into the image by the perspective projection operation. It also contains motion capture rotation and translation terms that penalize deviations from the motion capture pose; the rotation term uses a mapping from three-dimensional rotations to three-dimensional vector space, and control coefficients weight the rotation and translation terms.
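The objective described above can be sketched in Python as follows. This is a hedged illustration, not the paper's formula: we assume a world-to-camera pose (p_cam = R p + t), a pinhole camera with hypothetical intrinsics, a g2o-style Huber kernel on the squared pixel error (with the ORB-SLAM-style threshold sqrt(5.991)), and squared-norm motion capture terms weighted by hypothetical coefficients `lam_r` and `lam_t`. It only evaluates the cost; a real tracker would minimize it over R and t.

```python
import math
from typing import List, Sequence, Tuple

Mat3 = List[List[float]]
Vec3 = Sequence[float]


def mat_vec(R: Mat3, v: Vec3) -> List[float]:
    return [sum(R[i][k] * v[k] for k in range(3)) for i in range(3)]


def transpose(R: Mat3) -> Mat3:
    return [[R[j][i] for j in range(3)] for i in range(3)]


def mat_mul(A: Mat3, B: Mat3) -> Mat3:
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def log_so3(R: Mat3) -> List[float]:
    """Map a rotation matrix to its axis-angle vector (not robust for angles near pi)."""
    cos_angle = max(-1.0, min(1.0, (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0))
    angle = math.acos(cos_angle)
    # angle / (2 sin angle) tends to 1/2 as the angle goes to zero.
    factor = 0.5 if angle < 1e-6 else angle / (2.0 * math.sin(angle))
    return [factor * (R[2][1] - R[1][2]),
            factor * (R[0][2] - R[2][0]),
            factor * (R[1][0] - R[0][1])]


def project(p_cam: Vec3, fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Pinhole perspective projection of a camera-frame point."""
    x, y, z = p_cam
    return fx * x / z + cx, fy * y / z + cy


def huber(sq_err: float, delta: float) -> float:
    """Huber kernel on a squared residual: quadratic inside delta, linear outside."""
    e = math.sqrt(sq_err)
    return sq_err if e <= delta else 2.0 * delta * e - delta * delta


def tracking_cost(R, t, R0, t0, points, pixels, intrinsics,
                  lam_r=1.0, lam_t=1.0, delta=math.sqrt(5.991)):
    """Robust reprojection error plus motion-capture rotation/translation priors."""
    cost = 0.0
    for p, (u, v) in zip(points, pixels):
        pc = [a + b for a, b in zip(mat_vec(R, p), t)]
        pu, pv = project(pc, *intrinsics)
        cost += huber((pu - u) ** 2 + (pv - v) ** 2, delta)
    dr = log_so3(mat_mul(transpose(R0), R))  # rotation deviation from the mocap pose
    cost += lam_r * sum(x * x for x in dr)
    cost += lam_t * sum((a - b) ** 2 for a, b in zip(t, t0))
    return cost
```

Because the Huber kernel grows only linearly for large residuals, a single gross mismatch (say 100 pixels off) adds a few hundred to the cost rather than 10,000, so the motion capture terms keep the pose from being dragged toward it.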

This optimization is performed three times. After each run, every 2D-3D match is classified as correct or incorrect based on its reprojection error; the next run uses only the correct matches, and incorrect matches are discarded. Because the motion capture constraints provide strong prior knowledge, the algorithm can better separate correct from incorrect matches, which improves camera tracking accuracy. After solving for the camera pose, the module takes the number of correctly matched map points as the credibility (confidence) of the camera pose.
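The interplay between the motion capture prior and match rejection can be illustrated with a deliberately simplified one-dimensional toy, which is our own construction rather than the paper's method. A scalar position is estimated from noisy "visual" observations with a Huber loss plus a quadratic pull toward the motion capture prediction, solved by iteratively reweighted least squares (IRLS). Three rounds of rejection follow the description above, and the surviving match count is returned as the confidence. The thresholds, weights, and function names are hypothetical.

```python
from typing import List, Tuple


def huber_weight(residual: float, delta: float) -> float:
    """IRLS weight of the Huber loss: 1 inside delta, delta/|r| outside."""
    a = abs(residual)
    return 1.0 if a <= delta else delta / a


def solve_with_prior(obs: List[float], prior: float, lam: float,
                     delta: float, iters: int = 10) -> float:
    """Minimize sum_i huber(x_i - t) + (lam / 2) * (t - prior)^2 by IRLS.

    huber(r) = r^2 / 2 for |r| <= delta, else delta * |r| - delta^2 / 2.
    lam must be > 0 so the result stays defined even with no observations.
    """
    t = prior
    for _ in range(iters):
        w = [huber_weight(x - t, delta) for x in obs]
        t = (lam * prior + sum(wi * xi for wi, xi in zip(w, obs))) / (lam + sum(w))
    return t


def track_with_rejection(obs: List[float], prior: float, lam: float, delta: float,
                         inlier_thresh: float, rounds: int = 3) -> Tuple[float, int]:
    """Each round: optimize on current inliers, then discard matches with large residuals."""
    inliers = list(range(len(obs)))
    t = prior
    for _ in range(rounds):
        t = solve_with_prior([obs[i] for i in inliers], prior, lam, delta)
        inliers = [i for i in inliers if abs(obs[i] - t) <= inlier_thresh]
    return t, len(inliers)


if __name__ == "__main__":
    # Four consistent observations, one gross mismatch, and a slightly drifted mocap prior.
    print(track_with_rejection([1.0, 1.1, 0.9, 1.05, 8.0], prior=1.2,
                               lam=1.0, delta=0.5, inlier_thresh=0.5))
```

In this toy the mismatch at 8.0 is rejected after the first round, the final estimate uses only the four consistent observations plus the prior, and the returned count of 4 plays the role of the tracking confidence.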

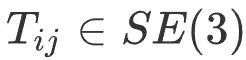

Mapping and loop closure detection

The mapping and loop closing module uses keyframes to reconstruct sparse map points and detects whether the person has returned to a previously visited location, so that accumulated errors can be corrected. During mapping, we use motion-capture-constrained bundle adjustment (BA) to jointly optimize the sparse map point positions and the keyframe camera poses, and we introduce a map point confidence that dynamically balances the relative strength of the motion capture constraint term and the reprojection error term, which improves accuracy. When the person's trajectory closes a loop, motion-capture-assisted pose graph optimization is performed to correct the loop closure error. The resulting optimized sparse map point positions and keyframe poses are then used when the algorithm runs on the next frame.

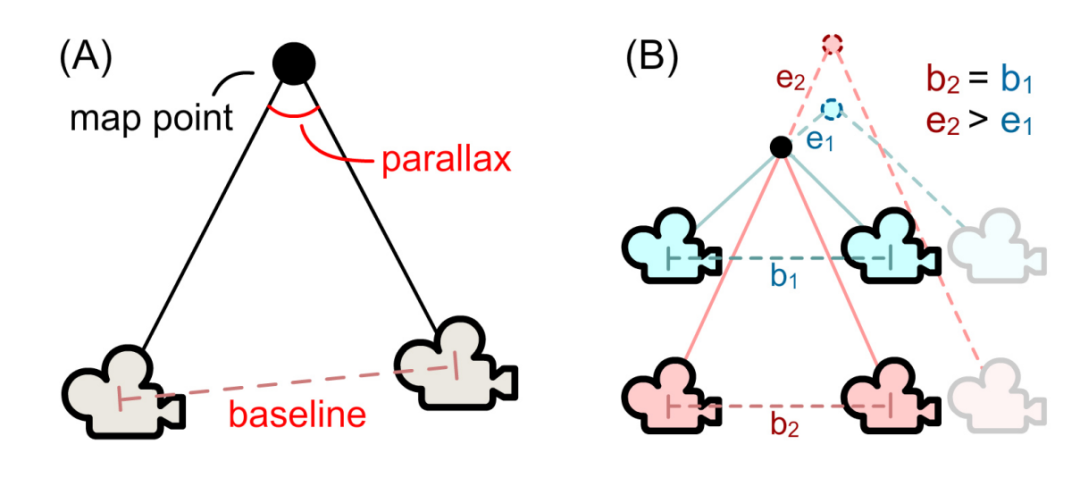

Specifically, this module first computes the confidence of each map point from how it has been observed; this confidence is used in the subsequent BA optimization. As shown in Figure 5 below, based on the positions of the keyframes that observe map point i, the module computes the keyframe baseline length b_i and the observation angle θ_i and uses them to determine the confidence c_i of the map point, where k is a control coefficient.

Figure 5 (a) Map point confidence calculation. (b) With the same baseline length b1=b2, a larger observation angle (blue) can better resist the perturbation of the camera pose, resulting in smaller map point position errors (e1
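The two geometric quantities can be computed as in the following Python sketch for one pair of observing keyframes. It is illustrative only: treating keyframe camera centers as plain 3D points and using a single pair are our assumptions, and the paper's mapping from b_i and θ_i to the confidence c_i (with coefficient k) is not reproduced here.

```python
import math
from typing import Sequence, Tuple


def baseline_and_angle(center_a: Sequence[float], center_b: Sequence[float],
                       point: Sequence[float]) -> Tuple[float, float]:
    """Return (baseline length, observation angle in radians) for a map point.

    The baseline is the distance between the two camera centers; the observation
    angle is the angle between the two viewing rays that meet at the map point.
    """
    baseline = math.dist(center_a, center_b)
    ray_a = [p - c for p, c in zip(point, center_a)]
    ray_b = [p - c for p, c in zip(point, center_b)]
    dot = sum(x * y for x, y in zip(ray_a, ray_b))
    norm_a = math.sqrt(sum(x * x for x in ray_a))
    norm_b = math.sqrt(sum(x * x for x in ray_b))
    cos_angle = max(-1.0, min(1.0, dot / (norm_a * norm_b)))  # guard against rounding
    return baseline, math.acos(cos_angle)


if __name__ == "__main__":
    # Same baseline, two depths: the nearer point is seen under a larger angle.
    near = baseline_and_angle([-1, 0, 0], [1, 0, 0], [0, 0, 1])
    far = baseline_and_angle([-1, 0, 0], [1, 0, 0], [0, 0, 10])
    print(near, far)
```

This matches the intuition of Figure 5(b): with equal baselines, the configuration with the larger observation angle triangulates the point more robustly.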

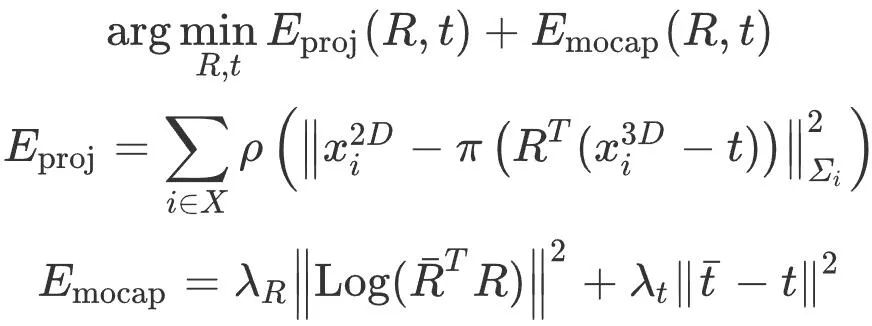

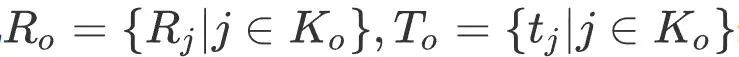

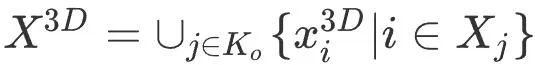

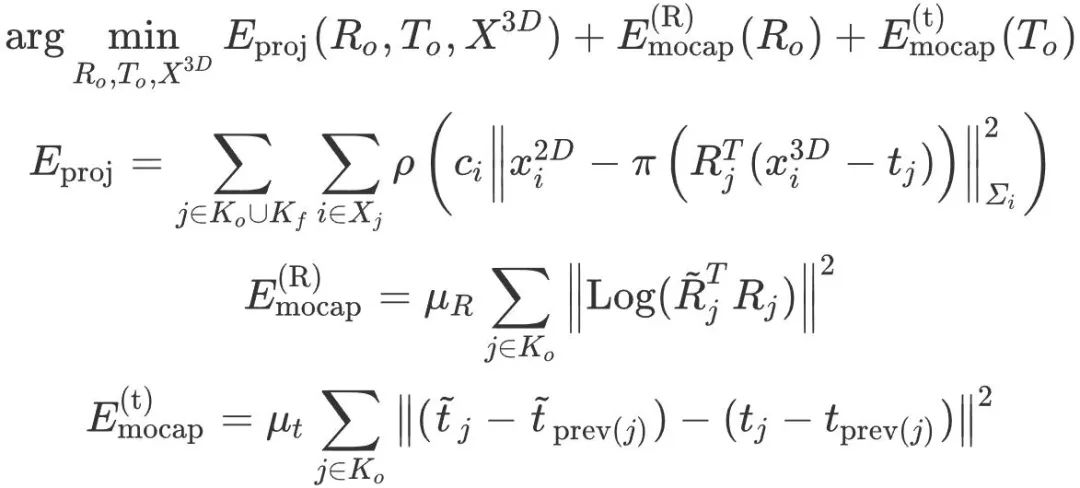

Next, the module jointly optimizes the camera poses of the last 20 keyframes and the map points they observe. Other keyframes that also observe these map points are kept fixed during optimization. Denote the set of optimizable keyframes as K0, the set of fixed keyframes as Kf, and the set of map points observed by keyframe j as Xj. The optimization variables are the orientations and 3D positions of the keyframes to be optimized and the positions of the map points. In the motion-capture-constrained bundle adjustment, each keyframe j is additionally related to its previous keyframe, and a coefficient weights the motion capture constraint term. The optimization requires the reprojection errors of the map points to be small and the rotation and relative position of each keyframe to stay close to the motion capture results. The map point confidence c_i dynamically sets the relative weight between the motion capture constraint term and the map point reprojection terms: in regions that have not yet been fully reconstructed, the system relies more on motion capture; conversely, in regions that have been observed repeatedly, it trusts visual tracking more. The factor graph of this optimization is shown in Figure 6 below.

Figure 6 Factor graph representation of the motion-capture-constrained bundle adjustment.
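The balancing effect of the confidence can be seen in a one-dimensional toy version of this objective, written in Python below. This is a hypothetical simplification, not the paper's formulation: keyframe positions are scalars, each keyframe gets a visual position estimate z_j weighted by a confidence `conf[j]` (a stand-in for the confidence of the map points it observes), and motion capture supplies the displacement `d[j-1]` from the previous keyframe, weighted by `lam`. Because the cost is quadratic, its minimizer solves a tridiagonal linear system, which the Thomas algorithm handles in linear time.

```python
from typing import List


def fuse_chain(z: List[float], conf: List[float], d: List[float],
               lam: float) -> List[float]:
    """Minimize sum_j conf[j]*(x_j - z[j])^2 + lam * sum_{j>=1} (x_j - x_{j-1} - d[j-1])^2.

    z:    visual (map-based) position estimate of each keyframe
    conf: confidence of that visual estimate (hypothetical stand-in for c_i)
    d:    motion-capture displacement from keyframe j-1 to j (length len(z) - 1)
    Requires lam > 0 and at least one conf[j] > 0 so the system is positive definite.
    """
    n = len(z)
    off = -lam  # every sub- and super-diagonal entry of the normal matrix
    diag = [conf[j] + lam * (int(j >= 1) + int(j <= n - 2)) for j in range(n)]
    rhs = [conf[j] * z[j] for j in range(n)]
    for j in range(1, n):
        rhs[j] += lam * d[j - 1]
        rhs[j - 1] -= lam * d[j - 1]
    # Thomas algorithm, forward sweep (no pivoting needed for this SPD system).
    cp = [0.0] * n
    dp = [0.0] * n
    cp[0] = off / diag[0] if n > 1 else 0.0
    dp[0] = rhs[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - off * cp[i - 1]
        cp[i] = off / m if i < n - 1 else 0.0
        dp[i] = (rhs[i] - off * dp[i - 1]) / m
    # Back substitution.
    x = [0.0] * n
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x


if __name__ == "__main__":
    z = [0.0, 5.0, 5.0]  # visual estimates; the last two disagree with mocap
    d = [1.0, 1.0]       # motion capture: one unit between consecutive keyframes
    print(fuse_chain(z, [1.0, 0.0, 0.0], d, lam=1.0))      # low confidence: follows mocap
    print(fuse_chain(z, [1.0, 100.0, 100.0], d, lam=1.0))  # high confidence: last two stay near 5
```

With zero confidence on the last two keyframes, the result is exactly the motion capture chain anchored at the first keyframe; raising their confidence lets the visual evidence dominate, mirroring the behavior described for c_i.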

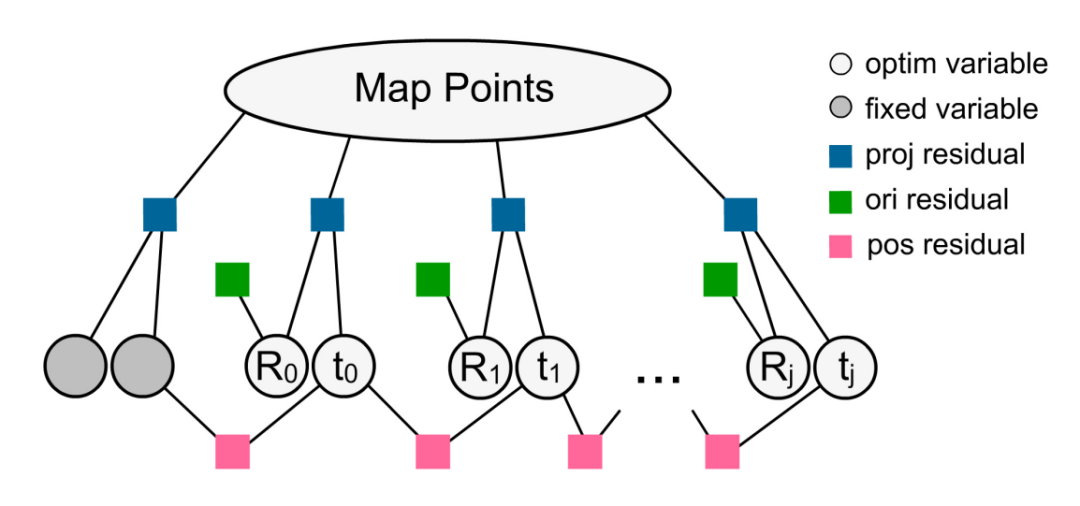

When a loop closure in the trajectory is detected, the system performs loop closure optimization. Following ORB-SLAM3 [2], denote the set of vertices in the pose graph as F and the set of edges as C; the motion-capture-constrained pose graph optimization is then defined over this graph.

In this objective, the optimization variables are the poses of the keyframes. Each edge between keyframes i and j uses their relative pose before pose graph optimization, and the camera poses initially obtained from motion capture serve as a prior; a mapping takes each pose into a six-dimensional vector space, and a relative coefficient weights the motion capture constraint term. Guided by the motion capture prior, this optimization distributes the loop closure error across all keyframes.
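A one-dimensional toy pose graph in Python shows how such an objective spreads a loop closure error. This is our own simplification, not the paper's implementation: poses are scalars, so the six-dimensional mapping reduces to a plain difference, each edge `(i, j, rel, w)` penalizes `w * (x_j - x_i - rel)^2`, and every node is pulled toward its motion capture value with a hypothetical weight `lam`. The quadratic cost is minimized by solving its normal equations with Gaussian elimination.

```python
from typing import List, Tuple

Edge = Tuple[int, int, float, float]  # (i, j, relative measurement, weight)


def solve_linear(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def pose_graph_1d(mocap: List[float], edges: List[Edge], lam: float) -> List[float]:
    """Minimize sum_e w * (x_j - x_i - rel)^2 + lam * sum_k (x_k - mocap_k)^2."""
    n = len(mocap)
    A = [[0.0] * n for _ in range(n)]
    b = [0.0] * n
    for k in range(n):  # motion capture prior on every node
        A[k][k] += lam
        b[k] += lam * mocap[k]
    for i, j, rel, w in edges:  # relative-pose edges, including the loop closure
        A[i][i] += w
        A[j][j] += w
        A[i][j] -= w
        A[j][i] -= w
        b[i] -= w * rel
        b[j] += w * rel
    return solve_linear(A, b)


if __name__ == "__main__":
    # Consecutive edges say each step is 1.0, but the loop closure says x3 - x0 = 2.7.
    edges = [(0, 1, 1.0, 1.0), (1, 2, 1.0, 1.0), (2, 3, 1.0, 1.0), (0, 3, 2.7, 1.0)]
    x = pose_graph_1d([0.0, 1.0, 2.0, 3.0], edges, lam=0.1)
    print([round(v, 3) for v in x])
```

In this example the 0.3 discrepancy is not absorbed by a single edge: every consecutive step shrinks slightly below 1.0, and the loop span settles between the odometry value (3.0) and the loop closure value (2.7).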

Human motion update

The human motion update module uses the optimized camera pose and its credibility from the camera tracking module to update the global position of the body estimated by the motion capture module. It is implemented with the prediction-correction scheme of a Kalman filter. The motion capture module provides the body's motion acceleration with a constant variance, which is used to predict the body's global position (the prior distribution); the camera tracking module provides the camera position observation and its confidence, which are used to correct the global position (the posterior distribution). The covariance matrix of the camera position observation is approximated as a diagonal matrix computed from the number of matched map points, with a small constant in the denominator to avoid division by zero. In other words, the more map points are successfully matched during camera tracking, the smaller the variance of the camera position observation. The Kalman filter then produces the final estimate of the body's global position.
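The predict-correct cycle can be sketched for a single axis in Python as below. It is an assumption-laden illustration, not the paper's filter: the state is position and velocity, the motion capture acceleration drives a constant-acceleration prediction with a hypothetical process variance `sigma_a2`, and the observation variance is taken as `r0 / (num_matches + eps)`, a hypothetical form that only follows the stated trend (more matches, smaller variance). A diagonal observation covariance lets each axis be filtered separately; treating the process noise as independent per axis is our simplification.

```python
from typing import List, Tuple

Vec2 = List[float]
Mat2 = List[List[float]]


def kf_predict(x: Vec2, P: Mat2, accel: float, dt: float,
               sigma_a2: float) -> Tuple[Vec2, Mat2]:
    """Constant-acceleration prediction of [position, velocity] on one axis."""
    p, v = x
    x_new = [p + v * dt + 0.5 * accel * dt * dt, v + accel * dt]
    # P' = F P F^T + Q with F = [[1, dt], [0, 1]].
    fp = [[P[0][0] + dt * P[1][0], P[0][1] + dt * P[1][1]], [P[1][0], P[1][1]]]
    fpf = [[fp[0][0] + dt * fp[0][1], fp[0][1]], [fp[1][0] + dt * fp[1][1], fp[1][1]]]
    q = [[dt ** 4 / 4, dt ** 3 / 2], [dt ** 3 / 2, dt ** 2]]  # white-acceleration noise
    P_new = [[fpf[r][c] + sigma_a2 * q[r][c] for c in range(2)] for r in range(2)]
    return x_new, P_new


def observation_variance(num_matches: int, r0: float = 1.0, eps: float = 1e-3) -> float:
    """Hypothetical form: the variance shrinks as more map points are matched."""
    return r0 / (num_matches + eps)


def kf_update(x: Vec2, P: Mat2, z: float, r: float) -> Tuple[Vec2, Mat2]:
    """Correct with a camera position observation z of variance r (H = [1, 0])."""
    s = P[0][0] + r
    k0, k1 = P[0][0] / s, P[1][0] / s  # Kalman gain
    y = z - x[0]                       # innovation
    x_new = [x[0] + k0 * y, x[1] + k1 * y]
    P_new = [[P[0][0] - k0 * P[0][0], P[0][1] - k0 * P[0][1]],
             [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
    return x_new, P_new
```

For example, with a unit prior variance, an observation backed by 1000 matched points moves the position estimate almost all the way to the camera position, while an observation with zero matches barely moves it, which is the behavior the module relies on when visual tracking degrades.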

For more detailed method introduction and formula derivation, please refer to the original text and appendix of the paper.

Experiment

Comparison with Mocap

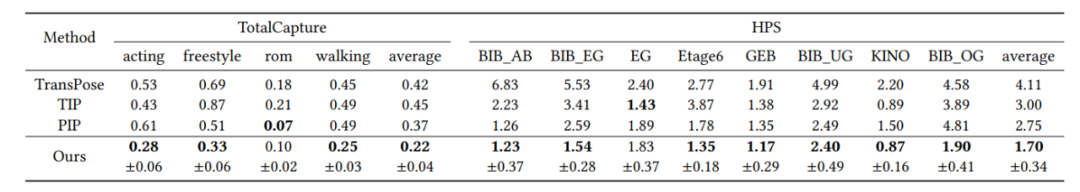

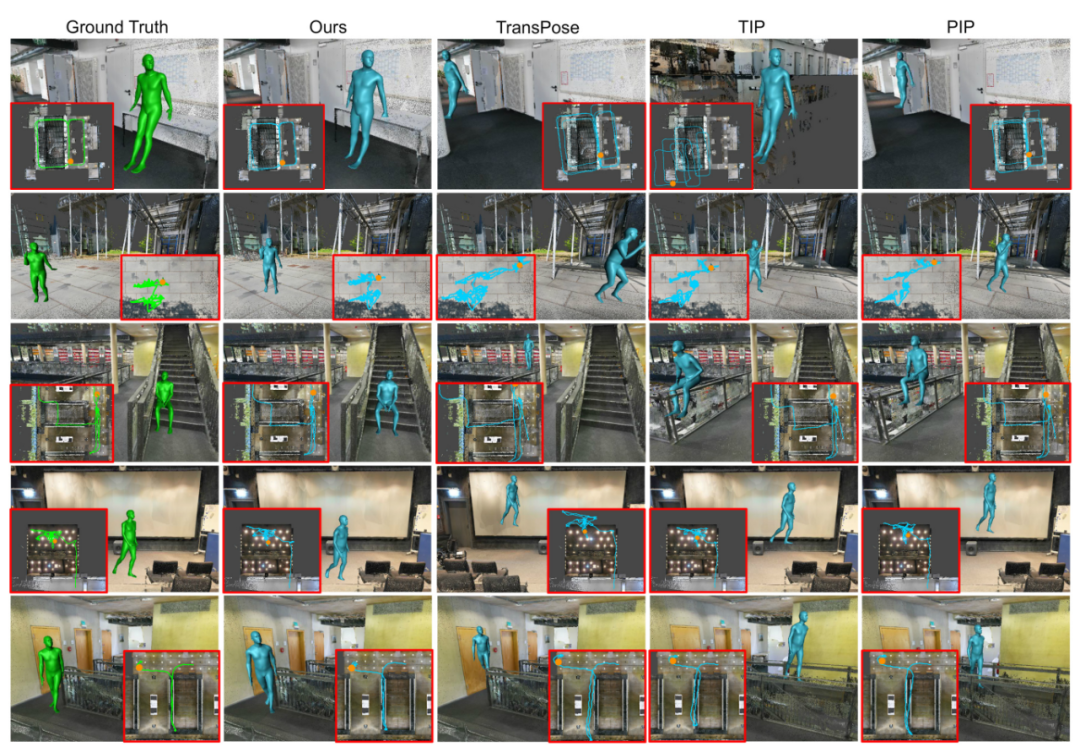

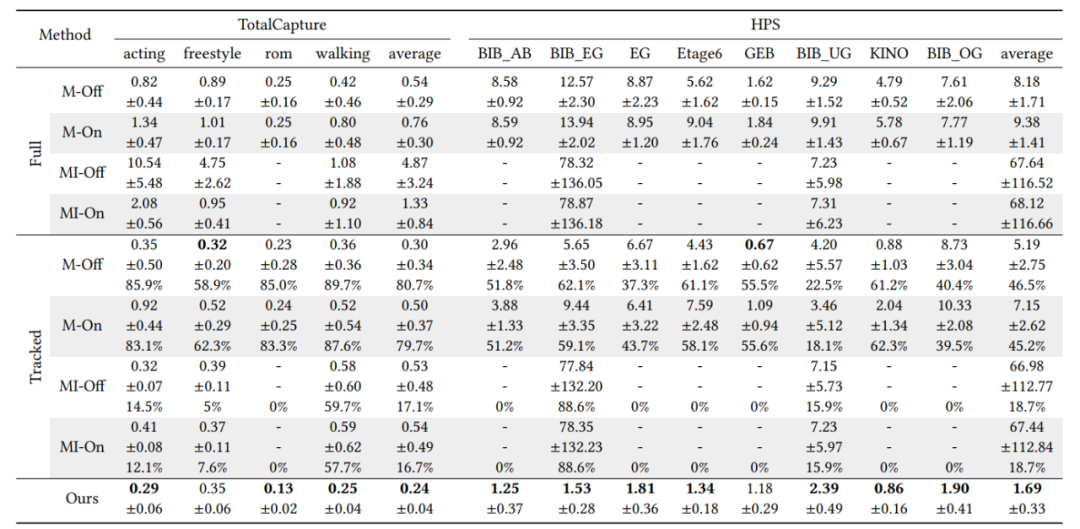

This method mainly addresses global position drift in sparse inertial motion capture (mocap), so the main evaluation metric is the global position error of the human body. Table 1 below compares the quantitative results with the state-of-the-art mocap methods TransPose [3], TIP [4], and PIP [1] on two public datasets, TotalCapture and HPS, and Figures 7 and 8 below show qualitative comparisons. Our method far exceeds previous inertial motion capture methods in global positioning accuracy (improving it by 41% on TotalCapture and 38% on HPS), and its trajectories are the most similar to the ground truth.

Table 1 Quantitative comparison of global position error with inertial motion capture work (unit: meters). The TotalCapture dataset is grouped by action and the HPS dataset by scene. For our method, we run 9 tests and report the median and standard deviation.

Figure 7 Qualitative comparison of global position error with inertial motion capture work. The ground truth is shown in green, and the predictions of the different methods in blue. The body's movement trajectory and current position (orange dot) are shown in the corner of each image.

Figure 8 Qualitative comparison of global position error with inertial motion capture work (video). The ground truth is shown in green, our method in white, and previous methods in other colors (see legend).

Comparison with SLAM

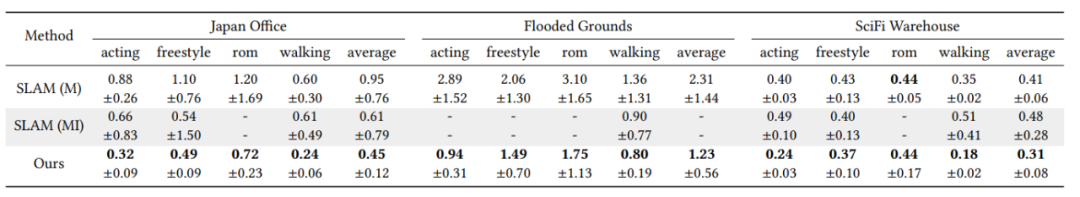

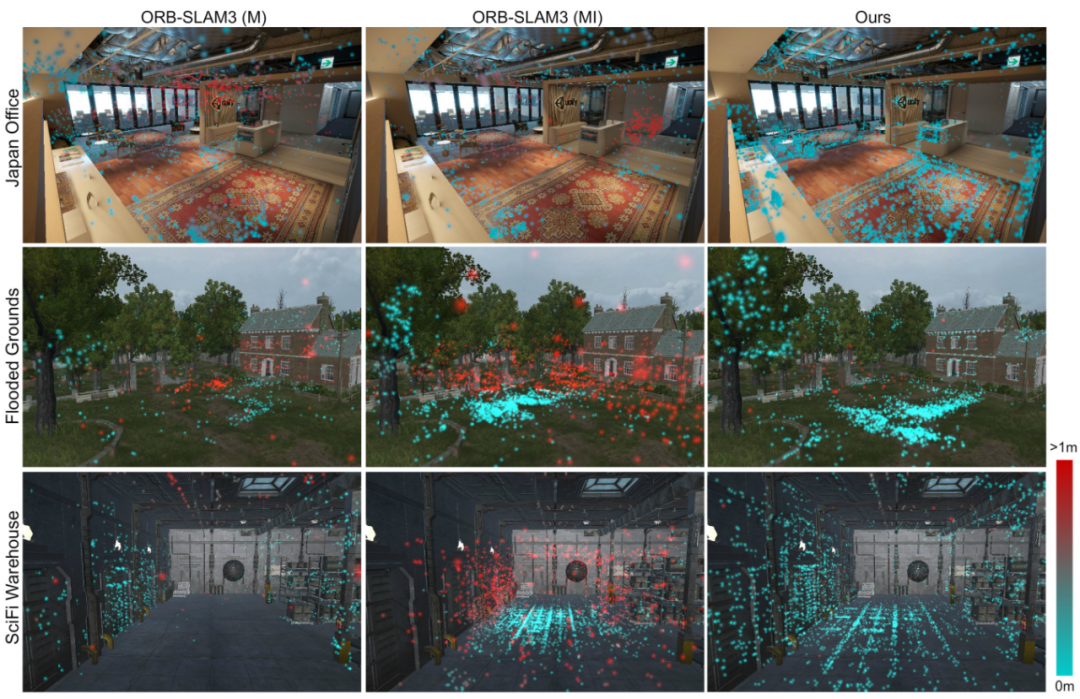

We compare against the state-of-the-art SLAM system ORB-SLAM3 [2], in both its monocular and monocular-inertial versions, in terms of localization accuracy and map reconstruction accuracy. Table 2 shows the quantitative comparison of localization accuracy, Table 3 the quantitative comparison of map reconstruction accuracy, and Figure 9 the qualitative comparison. Compared with SLAM, our method substantially improves system robustness, localization accuracy, and map reconstruction accuracy.

Table 2 Quantitative comparison of localization errors with SLAM work (unit: meters). M/MI denote the monocular/monocular-inertial versions of ORB-SLAM3, and On/Off denote the real-time (online) and offline results of SLAM. Because SLAM often loses tracking, for SLAM we report the mean localization error both on the complete sequence (Full) and on successfully tracked frames (Tracked); our method never loses tracking, so we report its results on the complete sequence. Each method was run 9 times, and the median and standard deviation are reported. For errors on successfully tracked frames, we additionally report the tracking success percentage. If a method fails multiple times, it is marked as failed ("-").

Table 3 Quantitative comparison of map reconstruction errors with SLAM work (unit: meters). M/MI denote the monocular/monocular-inertial versions of ORB-SLAM3. For three scenes (office, outdoor, factory), we measure the mean error of all reconstructed 3D map points with respect to the scene surface geometry. Each method was run 9 times, and the median and standard deviation are reported. If a method fails multiple times, it is marked as failed ("-").

Figure 9 Qualitative comparison of map reconstruction error with SLAM work. We show the scene points reconstructed by different methods, colored by the error of each point.

In addition, by introducing the human motion prior, the system greatly improves robustness against visual tracking loss. When visual features are poor, the system uses the human motion prior to keep tracking, instead of losing track and having to reset or create a new map as other SLAM systems do, as shown in Figure 10 below.

Figure 10 Comparison of occlusion robustness with SLAM work. The ground truth trajectory reference is shown in the upper right corner. Due to the randomness of SLAM initialization, the global coordinate system and timestamp are not completely aligned.

For more experimental results, please refer to the paper, the project homepage, and the paper video.

Summary

This article proposes the first work that combines inertial mocap and SLAM to achieve real-time simultaneous human motion capture, localization, and mapping. The system is lightweight, requiring only a sparse set of body-worn sensors: 6 inertial measurement units and a mobile phone camera. For online tracking, mocap and SLAM are fused through constrained optimization and Kalman filtering to achieve more accurate human localization. For back-end optimization, localization and mapping errors are further reduced by integrating the human motion prior into the bundle adjustment and loop closure optimization of SLAM. This research aims to integrate human body perception with environment perception. Although this work focuses mainly on localization, we believe it represents a first step toward joint motion capture and fine-grained environment perception and reconstruction.
