Let ChatGPT call 100,000+ open-source AI models! Hugging Face's new feature is a hit: large models can now work as multi-modal AI tools

Just chat with ChatGPT, and it can call any of 100,000+ Hugging Face models for you!

This is Transformers Agents, the latest feature from Hugging Face. It has attracted a great deal of attention since its release.

The feature effectively equips large models such as ChatGPT with "multi-modal" capabilities: they are no longer limited to text, but can handle multi-modal tasks involving images, speech, documents, and more.

For example, you can send ChatGPT a "describe this image" request along with a picture of a beaver. ChatGPT then calls an image captioning tool, which outputs "a beaver is swimming".

Then ChatGPT calls a text-to-speech tool, and in no time the sentence is read aloud:

A beaver is swimming in the water.

It supports not only OpenAI's large models such as ChatGPT, but also free models such as OpenAssistant.

Transformers Agents "teaches" these large models to call any AI model on Hugging Face directly and return the processed results.

So how does this new feature work?

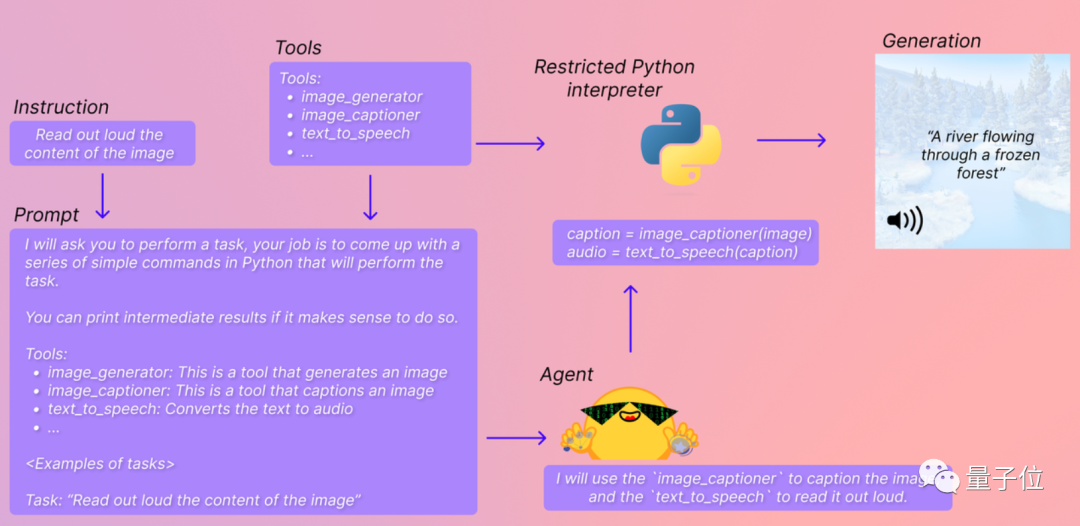

How do large models "command" other AI models?

Simply put, Transformers Agents is a "Hugging Face AI toolkit" built specifically for large models.

AI models of all sizes on Hugging Face are wrapped in this package as tools and classified as "image generator", "image captioner", "text-to-speech tool", and so on.

Each tool also comes with a text description, which helps the large model understand which tool it should call.
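To make the idea of "tools with text descriptions" concrete, here is a minimal sketch of how such descriptions could be assembled into a prompt for the large model. The `Tool` class, `build_prompt`, the tool names, and the prompt wording are all hypothetical illustrations, not the Transformers Agents API; the tool functions are stubs that return placeholder strings.

```python
from dataclasses import dataclass
from typing import Callable, List


# Hypothetical sketch only: this is not the transformers API.
@dataclass
class Tool:
    """A callable plus the plain-text description the language model reads."""
    name: str
    description: str
    func: Callable[..., object]


def build_prompt(tools: List[Tool], task: str) -> str:
    """List every tool's name and description, then the task, so the
    language model can decide which tool(s) to call."""
    lines = ["You can use the following tools:"]
    for tool in tools:
        lines.append(f"- {tool.name}: {tool.description}")
    lines.append(f"Task: {task}")
    lines.append("Answer with the tool calls needed to solve the task.")
    return "\n".join(lines)


# Stub tools: they only return placeholder strings instead of running models.
tools = [
    Tool("image_captioner",
         "Generates a short English description of an input image.",
         lambda image: f"caption of {image}"),
    Tool("text_reader",
         "Reads an English text aloud and returns the audio.",
         lambda text: f"audio of {text!r}"),
]

prompt = build_prompt(tools, "Describe this image and read the description aloud.")
print(prompt)
```

In a real agent, a prompt like this would be sent to the language model, and its reply would determine which tools actually run.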

This way, a short prompt is all it takes for the large model to run AI models for you and return the results in real time. The process has three steps:

First, choose the large model you want to use. You can use an OpenAI model (note that the OpenAI API is paid):

<code>from transformers import OpenAiAgent

agent = OpenAiAgent(model="text-davinci-003", api_key="<your_api_key>")</code>

You can also use free large models such as BigCode's StarCoder or OpenAssistant. To do so, first log in to Hugging Face so you can access the Inference API:

<code>from huggingface_hub import login

login("<your_token>")</code>

Then, instantiate the agent. Here we take the default agent as an example:

<code>from transformers import HfAgent

# StarCoder
agent = HfAgent("https://api-inference.huggingface.co/models/bigcode/starcoder")
# StarCoderBase
# agent = HfAgent("https://api-inference.huggingface.co/models/bigcode/starcoderbase")
# OpenAssistant
# agent = HfAgent(url_endpoint="https://api-inference.huggingface.co/models/OpenAssistant/oasst-sft-4-pythia-12b-epoch-3.5")</code>

Finally, you can run Transformers Agents with either the run() or the chat() method.

run() handles a single instruction in one go, from simple tasks that need one AI tool to more complex, specialized ones that combine several.

It can call a single AI tool.

For example, running agent.run("Draw me a picture of rivers and lakes.") calls the text-to-image tool to generate an image.

It can also chain several AI tools in a single call.

For example, running agent.run("Draw me a picture of the sea then transform the picture to add an island") calls the text-to-image tool and then the image-to-image tool to produce the corresponding image.
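The Transformers Agents documentation describes run() as having the large model write a short Python program that calls the tools, which the agent then executes. The sketch below imitates that flow under heavy simplifying assumptions: `fake_llm` returns hard-coded code instead of querying a model, `image_generator` and `image_transformer` are string-returning stubs, and plain `exec` with a whitelisted namespace stands in for the library's own restricted interpreter.

```python
from typing import Callable, Dict


# Stub tools standing in for real models; they just return descriptive strings.
def image_generator(prompt: str) -> str:
    return f"<image: {prompt}>"


def image_transformer(image: str, prompt: str) -> str:
    return f"<{image} edited to {prompt}>"


TOOLS: Dict[str, Callable[..., str]] = {
    "image_generator": image_generator,
    "image_transformer": image_transformer,
}


def fake_llm(task: str) -> str:
    """Hypothetical stand-in for the language model: ignores the task and
    returns hard-coded code that chains two tools, as an LLM might for the
    sea/island request."""
    return (
        'image = image_generator(prompt="the sea")\n'
        'result = image_transformer(image=image, prompt="add an island")\n'
    )


def run(task: str) -> str:
    """One-shot execution: get code from the (fake) LLM, execute it with only
    the tools in scope, and return the variable named `result`."""
    code = fake_llm(task)
    namespace: Dict[str, object] = {"__builtins__": {}, **TOOLS}
    exec(code, namespace)  # illustration only: this is NOT a security sandbox
    return str(namespace["result"])


print(run("Draw me a picture of the sea then transform the picture to add an island"))
```

Letting the model emit code rather than a fixed list of calls is what allows one instruction to chain tools, with the output of the first call (`image`) feeding the second.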

chat() is suited to completing tasks step by step over a conversation.

For example, first call the text-to-image tool to generate a picture of rivers and lakes: agent.chat("Generate a picture of rivers and lakes")

Then modify that picture with the image-to-image tool: agent.chat("Transform the picture so that there is a rock in there")
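What distinguishes chat() from run() is that results from earlier turns stay available, so "the picture" in the second request can refer to the image produced in the first. Here is a minimal sketch of that idea, assuming a hypothetical `ToyChatAgent` whose "LLM replies" are scripted code strings and whose tools are stubs; it is not the library's implementation.

```python
from typing import Callable, Dict, List


# Stub tools; they return strings instead of real images.
def image_generator(prompt: str) -> str:
    return f"<image: {prompt}>"


def image_transformer(image: str, prompt: str) -> str:
    return f"<{image} edited to {prompt}>"


class ToyChatAgent:
    """Hypothetical chat-style agent: variables created in one turn remain
    available in later turns."""

    def __init__(self, tools: Dict[str, Callable[..., str]], scripted_code: List[str]):
        self.tools = tools
        self.scripted_code = list(scripted_code)  # fake LLM replies, one per turn
        self.state: Dict[str, object] = {}        # memory carried across turns

    def chat(self, message: str) -> object:
        code = self.scripted_code.pop(0)  # a real agent would query the LLM here
        namespace = {"__builtins__": {}, **self.tools, **self.state}
        exec(code, namespace)  # illustration only, not a sandbox
        # Remember every variable the code created or updated (not the tools).
        for key, value in namespace.items():
            if key not in self.tools and key != "__builtins__":
                self.state[key] = value
        return self.state.get("image")


agent = ToyChatAgent(
    {"image_generator": image_generator, "image_transformer": image_transformer},
    [
        'image = image_generator(prompt="rivers and lakes")',
        'image = image_transformer(image=image, prompt="a rock in there")',
    ],
)
print(agent.chat("Generate a picture of rivers and lakes"))
print(agent.chat("Transform the picture so that there is a rock in there"))
```

Merging a persistent dict into each turn's namespace is the simplest way to give the agent memory; starting a fresh conversation would just mean clearing `self.state`.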

You can choose which AI models get called yourself, or use the default set that ships with Hugging Face.

A default set of AI models is built in

Currently, Transformers Agents integrates a default set of tools built on the following models from the Transformers library:

1. Donut, a visual document understanding model. Given a document in image format (including pages converted from PDF), it can answer questions about that document.

For example, if you ask "Where will the TRRF Scientific Advisory Committee meeting be held?", Donut extracts the answer from the document.

2. Flan-T5, a text question answering model. Given a long text and a question, it answers the question, helping with reading comprehension.

3. BLIP, a zero-shot vision-language model. It understands the content of an image and generates a text description for it.

4. ViLT, a multi-modal model that answers questions about a given image.

5. CLIPSeg, a multi-modal image segmentation model. Given an image and a text prompt, it segments the content described by the prompt and returns it as a mask.

6. Whisper, an automatic speech recognition model. It transcribes the speech in a recording into text.

7. SpeechT5, a speech synthesis model used for text-to-speech.

8. BART, an autoencoding language model. Besides classifying a piece of text, it can also summarize text.

9. NLLB, a translation model covering 200 languages. Besides common languages, it also handles less widely spoken ones such as Lao and Kamba.

Together, these models cover image question answering, document understanding, image segmentation, speech-to-text, translation, captioning, text-to-speech, and text classification.

Hugging Face has also included some extras of its own: tools built on models outside the Transformers library, covering downloading text from web pages, text-to-image, image-to-image, and text-to-video.

These tools can be called individually or combined. For example, if you ask the large model to "generate and describe a good-looking photo of a beaver", it calls the text-to-image model and then the image captioning model.

Of course, if you would rather not use these default models and want a toolkit better suited to your needs, you can also build your own by following the steps in the documentation.
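Building your own toolkit boils down to adding new tools or replacing defaults that share a name. The sketch below models that with plain dictionaries; `DEFAULT_TOOLS`, `build_toolbox`, and every tool in it are hypothetical stand-ins. In the actual library, custom tools are handed to the agent (its documentation describes an `additional_tools` argument for this), so treat the official custom-tools guide as the authority on the exact mechanism.

```python
from typing import Callable, Dict


# Hypothetical default tools (stubs, not real models).
def default_captioner(image: str) -> str:
    return f"default caption for {image}"


def default_translator(text: str, tgt_lang: str) -> str:
    return f"[{tgt_lang}] {text}"


DEFAULT_TOOLS: Dict[str, Callable[..., object]] = {
    "image_captioner": default_captioner,
    "translator": default_translator,
}


def build_toolbox(custom: Dict[str, Callable[..., object]]) -> Dict[str, Callable[..., object]]:
    """Start from the defaults; user tools add new entries or replace
    defaults with the same name."""
    toolbox = dict(DEFAULT_TOOLS)  # copy so the defaults are not mutated
    toolbox.update(custom)
    return toolbox


# Hypothetical user-defined tools.
def my_captioner(image: str) -> str:
    return f"my better caption for {image}"


def word_counter(text: str) -> int:
    return len(text.split())


toolbox = build_toolbox({"image_captioner": my_captioner, "word_counter": word_counter})
print(sorted(toolbox))
print(toolbox["image_captioner"]("beaver.png"))
```

Whatever the toolbox contains is what the large model gets to see, so each custom tool still needs a clear text description like the defaults have.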

Some users have pointed out that Transformers Agents looks a bit like a replacement for LangChain agents.

Have you tried these two tools? Which one do you think is more useful?

