From a technical perspective: why are binocular autonomous driving systems difficult to popularize?

Monocular vision is the signature strength of Mobileye (ME). Mobileye did in fact consider binocular vision early on, but ultimately abandoned it.

What do monocular ranging and 3-D estimation rely on? They rely on the bounding box (BB) of the detected target. If the obstacle cannot be detected, the system cannot estimate its distance or its 3-D attitude/orientation. Before deep learning, ME mainly estimated distance from the BB, the camera attitude and height obtained by calibration, and the assumption that the road surface is flat.

With deep learning, a neural network can be trained on 3-D ground truth to estimate 3-D size and attitude. The distance is then obtained from the parallel-line principle (single view metrology). The monocular L3 solution announced by Baidu Apollo not long ago explains this clearly; its reference paper is "3D Bounding Box Estimation by Deep Learning and Geometry".

A binocular system can of course compute disparity and depth. Even if no obstacle is detected, it can still raise an alarm, and with the additional depth information its detector should outperform a monocular one. The problem is that disparity is not easy for a binocular vision system to estimate: stereo matching is a classic hard problem in computer vision. A wide baseline gives accurate ranging for far targets, while a short baseline gives good ranging for near targets, so there is a trade-off.
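The baseline trade-off can be made concrete with the first-order error model of a rectified stereo pair, where a disparity error of `disparity_err_px` pixels at range Z produces a depth error of roughly Z² · Δd / (f · B). The sketch below is illustrative only: the focal length, baselines, matching accuracy and disparity search range are assumed numbers, not values from any system mentioned in this article.

```python
def depth_error(z_m, focal_px, baseline_m, disparity_err_px=0.25):
    """First-order depth uncertainty (metres) at range z_m for a rectified pair."""
    return (z_m ** 2) * disparity_err_px / (focal_px * baseline_m)


def min_range(focal_px, baseline_m, max_disparity_px):
    """Closest distance whose disparity still fits in the search range."""
    return focal_px * baseline_m / max_disparity_px


if __name__ == "__main__":
    # Assumed values: 1400 px focal length, 128 px disparity search range.
    for baseline in (0.12, 0.30, 1.00):
        far_err = depth_error(100.0, 1400.0, baseline)
        near = min_range(1400.0, baseline, 128)
        print(f"B={baseline:.2f} m  error at 100 m: {far_err:.2f} m  nearest range: {near:.2f} m")
```

A wider baseline shrinks the error at long range but pushes the nearest measurable distance outwards, which is why systems that need both may combine several pairs with different baselines.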

The binocular ADAS vision system currently on the market is Subaru EyeSight, and its performance is said to be acceptable.

The Apollo L4 shuttle bus launched by Baidu, of which 100 units were mass-produced, carries a binocular system. The EU autonomous parking project V-Charge also uses a forward binocular vision system, as does the autonomous driving research platform Bertha Benz, where it is integrated with the radar system. Its binocular-matching obstacle detection algorithm, Stixel, is very well known. Tier-1 companies such as Bosch and Conti have also developed binocular vision solutions in the past, but these made no impact on the market and were reportedly axed.

Besides stereo matching, the other difficulty of a binocular system is calibration. A calibrated system will "drift", so online calibration is a must. The same is true for monocular systems: tire deformation and body vibration change the camera's extrinsic parameters, so some parameters, such as the pitch and yaw angles, must be calibrated and corrected online.

Binocular online calibration is more complicated. To reduce binocular matching to a 1-D search as far as possible, stereo rectification is needed to make the optical axes of the two lenses parallel to each other and perpendicular to the baseline. Weighed against the benefit gained, the added complexity and cost lead vendors to give up when the approach is not cost-effective.

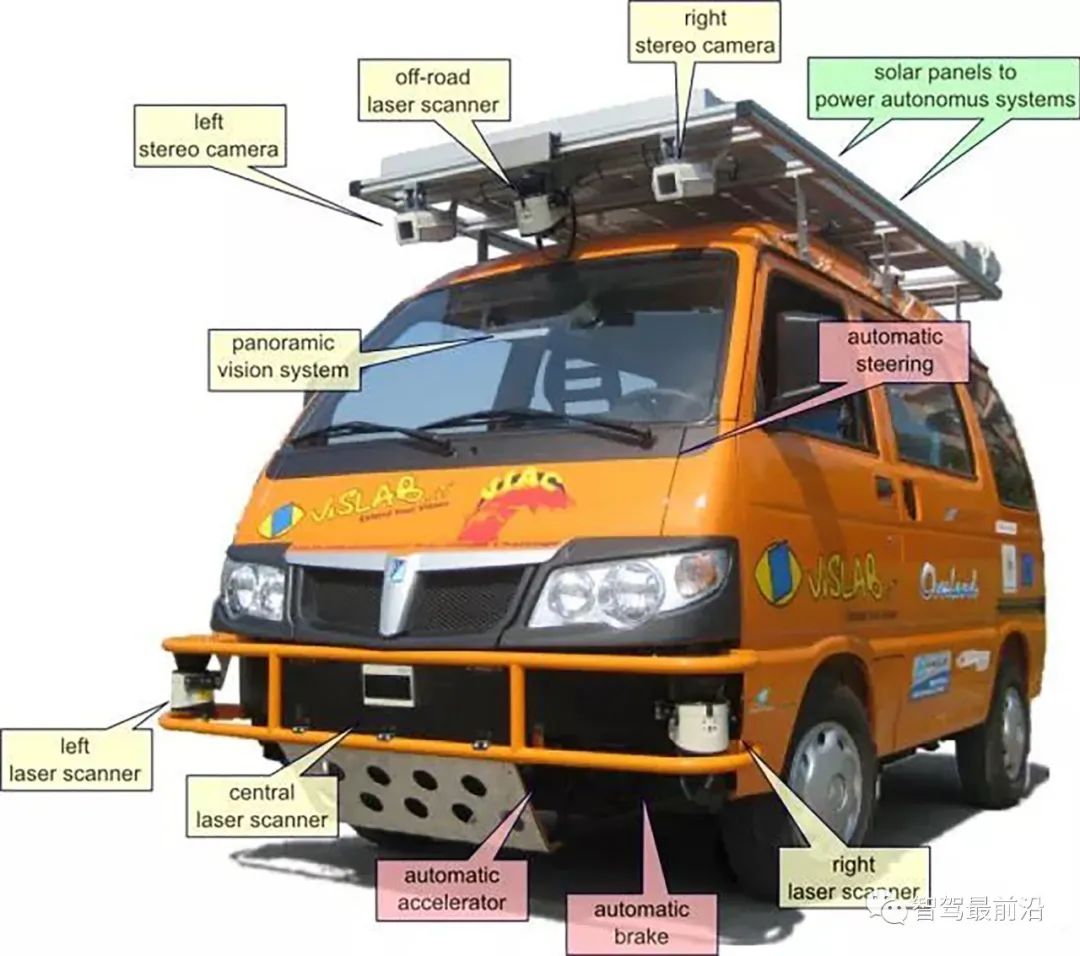

Binocular vision has come up again recently because the Silicon Valley chip company Ambarella acquired VisLab of the University of Parma in Italy in 2014 and developed binocular ADAS and autonomous driving chips. After CES last year, it began approaching car companies and Tier-1 suppliers. Ambarella is also continuing research to improve the performance of the system.

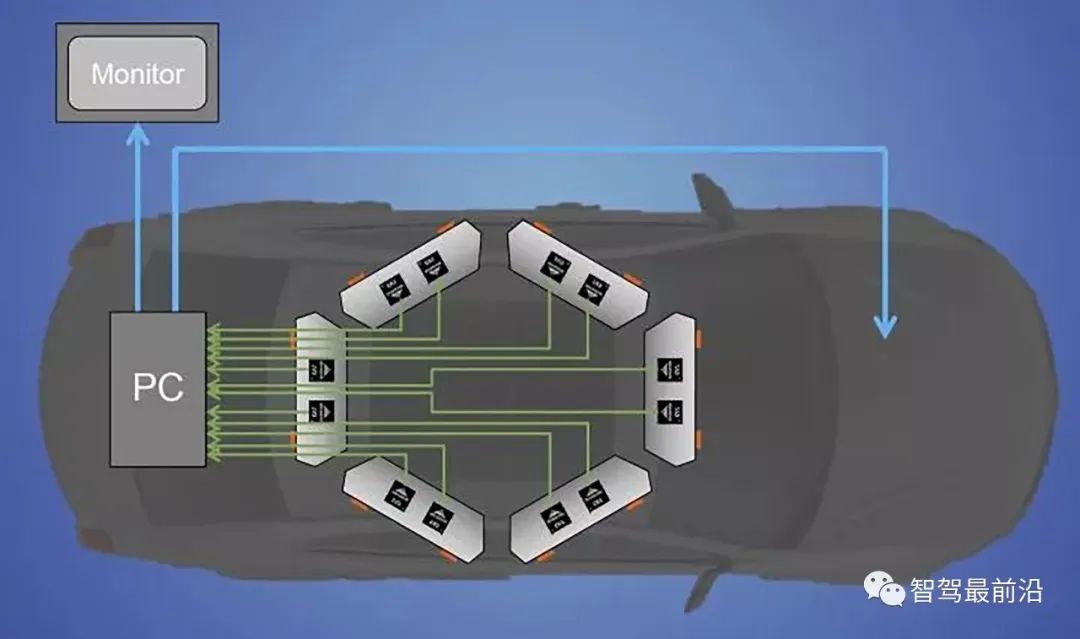

The picture below is a schematic diagram of six pairs of stereo vision systems installed on the roof of the car. Their baseline widths can differ, and their effective detection distances differ accordingly. The author once rode in its self-driving car: it could see as far as 200 meters at long range and 20-30 meters at close range. It can indeed perform online calibration and adjust some binocular vision parameters at any time.

01 Stereo matching

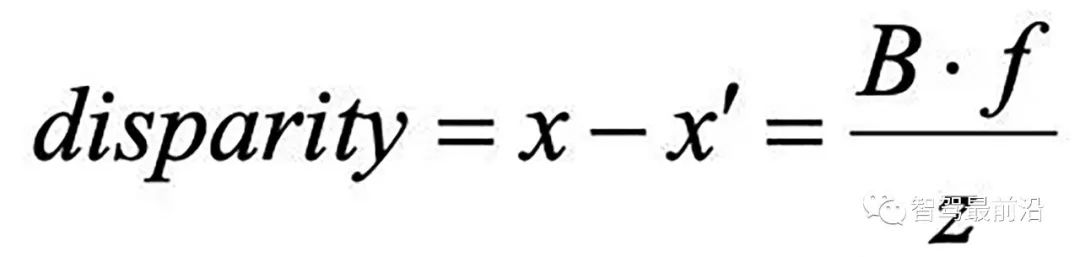

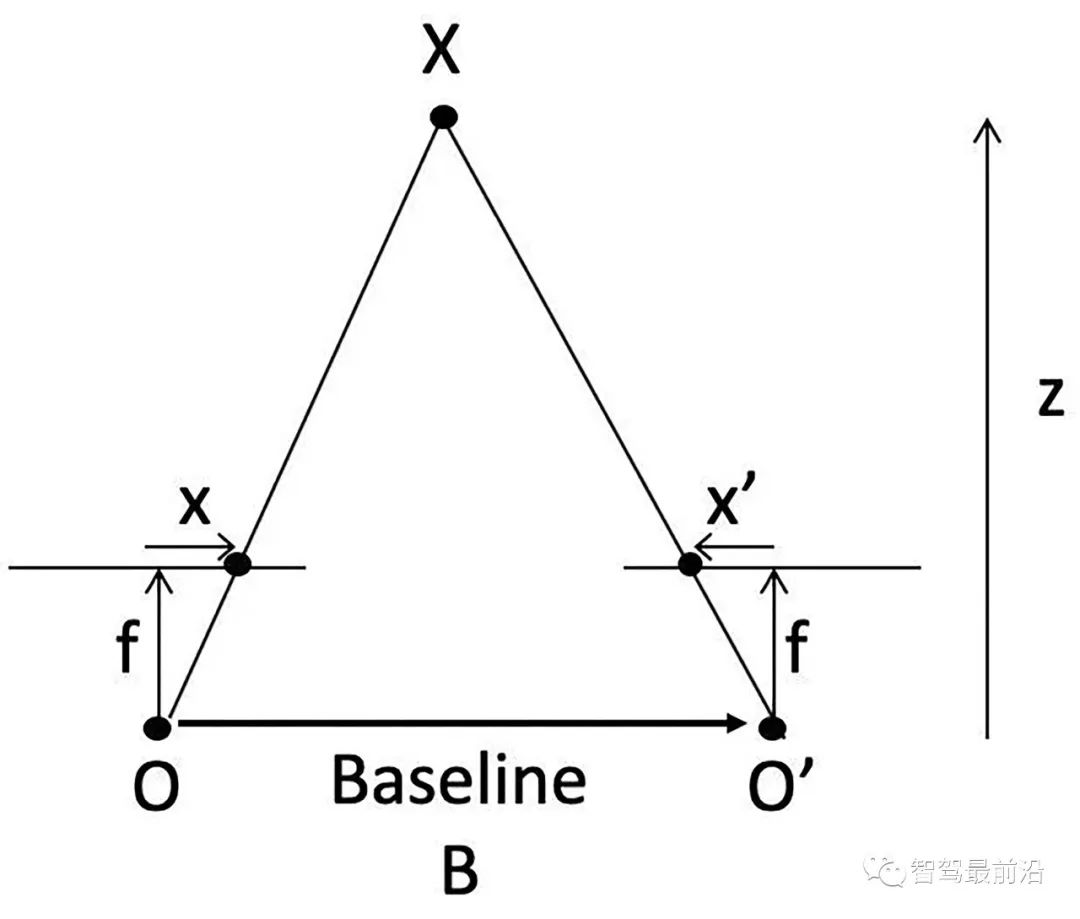

Let's talk about stereo matching first, that is, disparity/depth estimation. As shown in the figure, assume that the focal length of the left and right cameras is $f$ and the width of the baseline (the line connecting the two optical centers) is $B$. If the depth of the 3-D point is $Z$ and its horizontal image coordinates in the left and right images are $x_l$ and $x_r$, then the disparity $d$ satisfies

$$d = x_l - x_r = \frac{fB}{Z}$$

So depth can be computed back from disparity. The hardest part is determining which points in the left and right images belong to the same target, that is, the matching problem.
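As a minimal sketch of the inverse relation between disparity and depth for a rectified pair, Z = f · B / d, the helper below converts a disparity map to depth. The function name and the choice to mark non-positive disparities as infinitely far are assumptions made for illustration.

```python
import numpy as np


def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Convert disparities (pixels) to depth (metres) via Z = f * B / d."""
    disparity_px = np.asarray(disparity_px, dtype=np.float64)
    depth = np.full(disparity_px.shape, np.inf)
    valid = disparity_px > 0          # zero or negative disparity carries no depth
    depth[valid] = focal_px * baseline_m / disparity_px[valid]
    return depth


if __name__ == "__main__":
    # Assumed camera: 1400 px focal length, 0.3 m baseline.
    print(disparity_to_depth([[42.0, 4.2], [0.0, 2.1]], 1400.0, 0.3))
```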

There are two matching methods, global method and local method. There are four steps for binocular matching:

- Matching cost calculation;

- Cost aggregation;

- Disparity calculation/optimization;

- Disparity Refinement.
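The four steps can be sketched with a deliberately simple local method: absolute-difference cost, box-filter aggregation, winner-takes-all selection, and a median filter as refinement. This is an illustrative toy using NumPy only, assuming rectified grayscale images; it is not the pipeline of any product discussed here.

```python
import numpy as np


def box_filter(img, radius):
    """Mean over a (2r+1) x (2r+1) window, with edge replication."""
    k = 2 * radius + 1
    h, w = img.shape
    padded = np.pad(img, radius, mode="edge")
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def local_stereo(left, right, max_disp, radius=2):
    """Return an integer disparity map for the left image of a rectified pair."""
    left = np.asarray(left, dtype=np.float64)
    right = np.asarray(right, dtype=np.float64)
    h, w = left.shape
    # 1) Matching cost: |I_left(x) - I_right(x - d)|; columns with no match get a high cost.
    cost = np.full((max_disp + 1, h, w), 255.0)
    for d in range(max_disp + 1):
        cost[d, :, d:] = np.abs(left[:, d:] - right[:, :w - d])
    # 2) Cost aggregation: average the cost over a local window.
    agg = np.stack([box_filter(c, radius) for c in cost])
    # 3) Disparity calculation: winner-takes-all over the disparity axis.
    disp = np.argmin(agg, axis=0)
    # 4) Disparity refinement: 3x3 median filter to suppress isolated outliers.
    padded = np.pad(disp, 1, mode="edge")
    windows = np.stack([padded[dy:dy + h, dx:dx + w]
                        for dy in range(3) for dx in range(3)])
    return np.median(windows, axis=0).astype(np.int64)
```

Global methods replace steps 2 and 3 with the minimization of an energy defined over the whole image.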

The best-known method is SGM (semi-global matching), which approximates a global optimization with a set of 1-D optimizations. Many products use improved variants of it, and many vision chips implement this algorithm.

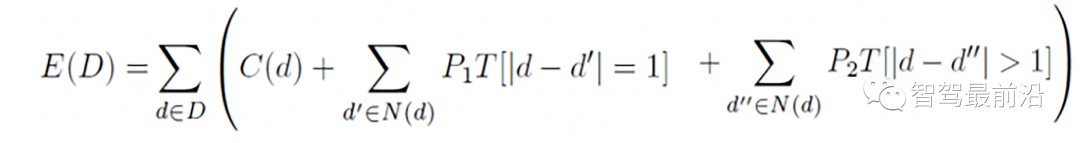

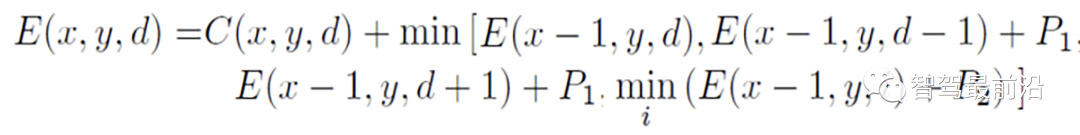

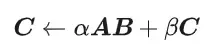

SGM approximates a global optimization by a combination of multiple local optimization problems. The following formula is the optimization objective function of 2-D matching; SGM is implemented as the sum of multiple 1-D optimization paths.

The following figure is the path optimization function along the horizontal direction

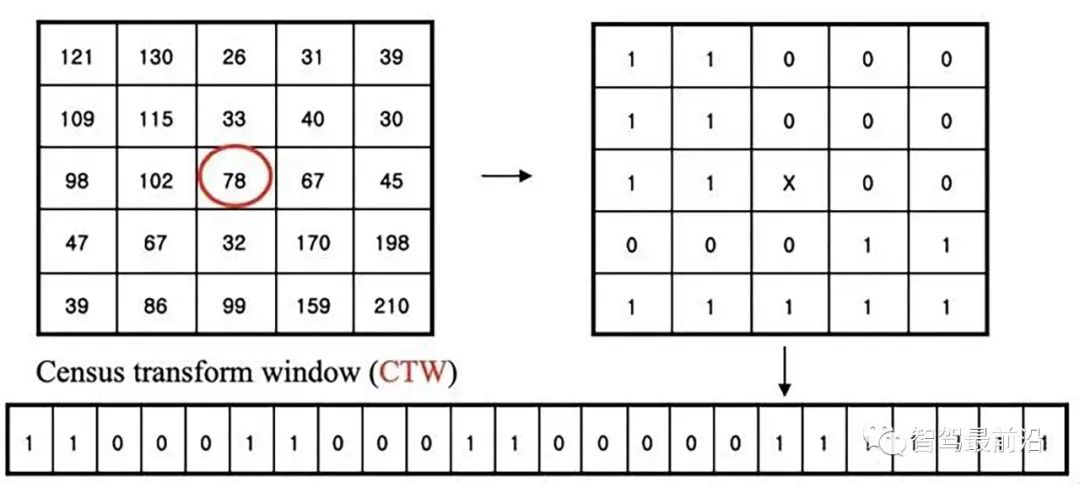
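As a sketch of one such 1-D path, the code below aggregates a cost volume from left to right using the commonly published SGM recurrence, L(p, d) = C(p, d) + min(L(p−r, d), L(p−r, d±1) + P1, min_k L(p−r, k) + P2) − min_k L(p−r, k). The penalty values `p1` and `p2` are assumptions; a full SGM would sum such paths over several directions before choosing the disparity.

```python
import numpy as np


def aggregate_left_to_right(cost, p1=10.0, p2=120.0):
    """cost: (H, W, D) matching-cost volume. Returns the path cost for direction (+1, 0)."""
    cost = np.asarray(cost, dtype=np.float64)
    h, w, d = cost.shape
    path = np.empty_like(cost)
    path[:, 0, :] = cost[:, 0, :]                    # no predecessor at the left border
    for x in range(1, w):
        prev = path[:, x - 1, :]                     # (H, D) costs of the previous pixel
        prev_min = prev.min(axis=1, keepdims=True)   # (H, 1) best previous cost
        from_minus = np.full_like(prev, np.inf)      # predecessor had disparity d - 1
        from_minus[:, 1:] = prev[:, :-1] + p1
        from_plus = np.full_like(prev, np.inf)       # predecessor had disparity d + 1
        from_plus[:, :-1] = prev[:, 1:] + p1
        best = np.minimum(np.minimum(prev, prev_min + p2),
                          np.minimum(from_minus, from_plus))
        # Subtracting prev_min keeps the values bounded without changing the argmin.
        path[:, x, :] = cost[:, x, :] + best - prev_min
    return path
```

The small penalty P1 tolerates slanted surfaces (disparity changes of one pixel), while the larger P2 discourages, but still allows, depth discontinuities.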

The census transform converts 8/24-bit pixels into a binary sequence; another binary feature, LBP (local binary pattern), is similar. Stereo matching algorithms built on this transform turn matching into a search for the minimum Hamming distance. Intel's RealSense is based on this technology: Intel acquired a binocular vision startup founded in 1994, along with several other small companies, and combined them to create it.
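A minimal sketch of the idea: each pixel is encoded by comparing its neighbours with the centre, and two codes are compared by counting differing bits. The window size, bit ordering and the "neighbour darker than centre" convention are assumptions; real implementations differ in these details.

```python
import numpy as np


def census_transform(img, radius=1):
    """Encode each pixel as a bit string: 1 where a neighbour is darker than the centre."""
    img = np.asarray(img, dtype=np.int64)
    h, w = img.shape
    padded = np.pad(img, radius, mode="edge")
    code = np.zeros((h, w), dtype=np.uint64)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            if dy == radius and dx == radius:
                continue                              # skip the centre pixel itself
            neighbour = padded[dy:dy + h, dx:dx + w]
            code = (code << np.uint64(1)) | (neighbour < img).astype(np.uint64)
    return code


def hamming_distance(a, b):
    """Number of differing bits between two arrays of census codes."""
    x = np.bitwise_xor(np.asarray(a, dtype=np.uint64), np.asarray(b, dtype=np.uint64))
    count = np.zeros(x.shape, dtype=np.uint64)
    while np.any(x):
        count += x & np.uint64(1)
        x = x >> np.uint64(1)
    return count
```

Because the code depends only on the ordering of intensities, it is unchanged by monotonic brightness changes between the two cameras, which is one reason it is popular as a matching cost.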

The following figure is a schematic diagram of the census transform:

PatchMatch is an algorithm that accelerates image template matching and is used in optical flow calculation and disparity estimation. Microsoft Research previously did a project on 3-D reconstruction from a monocular mobile phone camera, modeled on the earlier successful RGB-D based KinectFusion and given a similar name, MonoFusion, in which depth map estimation uses a modified PatchMatch method.

The basic idea is to randomly initialize the disparity and plane parameters, and then update the estimates through information propagation between neighboring pixels. The PM algorithm is divided into five steps:

- 1) Spatial propagation: each pixel checks the disparity and plane parameters of its left and upper neighbors, and replaces its current estimate if the matching cost becomes smaller;

- 2) View propagation: transform pixels from the other view, check the estimate at the corresponding image location, and replace the current estimate if the cost becomes smaller;

- 3) Temporal propagation: consider the estimates of the corresponding pixels in the previous and next frames;

- 4) Plane refinement: randomly generate samples, and update if a sample lowers the matching cost;

- 5) Post-processing: a left-right consistency check and a weighted median filter remove outliers.
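The propagation idea can be sketched in a heavily simplified form. The version below works on a precomputed cost volume with integer, fronto-parallel disparities only; it keeps random initialization, spatial propagation and random refinement, and omits plane parameters, view propagation, temporal propagation and post-processing. All names are hypothetical and this is not the MonoFusion implementation.

```python
import numpy as np


def patchmatch_disparity(cost, iterations=3, seed=0):
    """cost: (H, W, D) matching-cost volume. Returns an integer disparity map (H, W)."""
    rng = np.random.default_rng(seed)
    h, w, d = cost.shape
    disp = rng.integers(0, d, size=(h, w))            # random initialization
    for it in range(iterations):
        forward = it % 2 == 0                         # alternate the scan direction
        ys = range(h) if forward else range(h - 1, -1, -1)
        xs = list(range(w)) if forward else list(range(w - 1, -1, -1))
        step = -1 if forward else 1                   # already-visited neighbours
        for y in ys:
            for x in xs:
                best = int(disp[y, x])
                # Spatial propagation: adopt a neighbour's disparity if it is cheaper here.
                for ny, nx in ((y, x + step), (y + step, x)):
                    if 0 <= ny < h and 0 <= nx < w:
                        cand = int(disp[ny, nx])
                        if cost[y, x, cand] < cost[y, x, best]:
                            best = cand
                # Random refinement: sample around the estimate in a shrinking window.
                radius = d // 2
                while radius >= 1:
                    offset = int(rng.integers(-radius, radius + 1))
                    cand = min(max(best + offset, 0), d - 1)
                    if cost[y, x, cand] < cost[y, x, best]:
                        best = cand
                    radius //= 2
                disp[y, x] = best
    return disp
```

Because an estimate is only ever replaced by a cheaper one, the total matching cost cannot increase from one iteration to the next.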

The following picture is a schematic diagram of PM:

02 Online calibration

Next, online calibration.

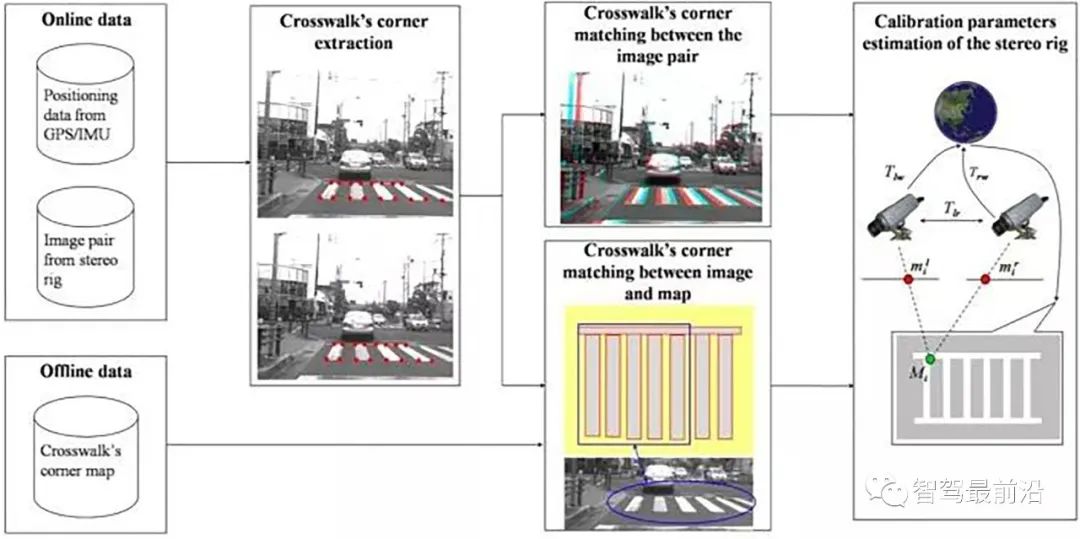

This is a calibration method that uses road markings (zebra crossings). The parallel-line pattern of the zebra crossing is known, so the crossing is detected and its corner points are extracted, and the homography that maps the zebra crossing pattern onto the detected road surface is computed. The calibration parameters are then obtained from the homography parameters.
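The homography step can be sketched with the direct linear transform (DLT): given at least four correspondences between known pattern corners on the road plane and their detected image positions, solve for the 3x3 matrix by SVD. The point values in the usage note are made up, and recovering the actual calibration parameters from the homography (which also needs the camera intrinsics) is not shown.

```python
import numpy as np


def estimate_homography(src_pts, dst_pts):
    """DLT estimate of H such that dst ~ H @ src, from four or more point pairs."""
    src = np.asarray(src_pts, dtype=np.float64)
    dst = np.asarray(dst_pts, dtype=np.float64)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]


def apply_homography(h, pts):
    """Map 2-D points through H, including the perspective division."""
    pts = np.asarray(pts, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]
```

For example, `estimate_homography(pattern_corners_m, detected_corners_px)` would take the stripe corners in metres on the road plane and their pixel positions; both argument names are placeholders. In practice the point coordinates are usually normalized first to improve numerical conditioning.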

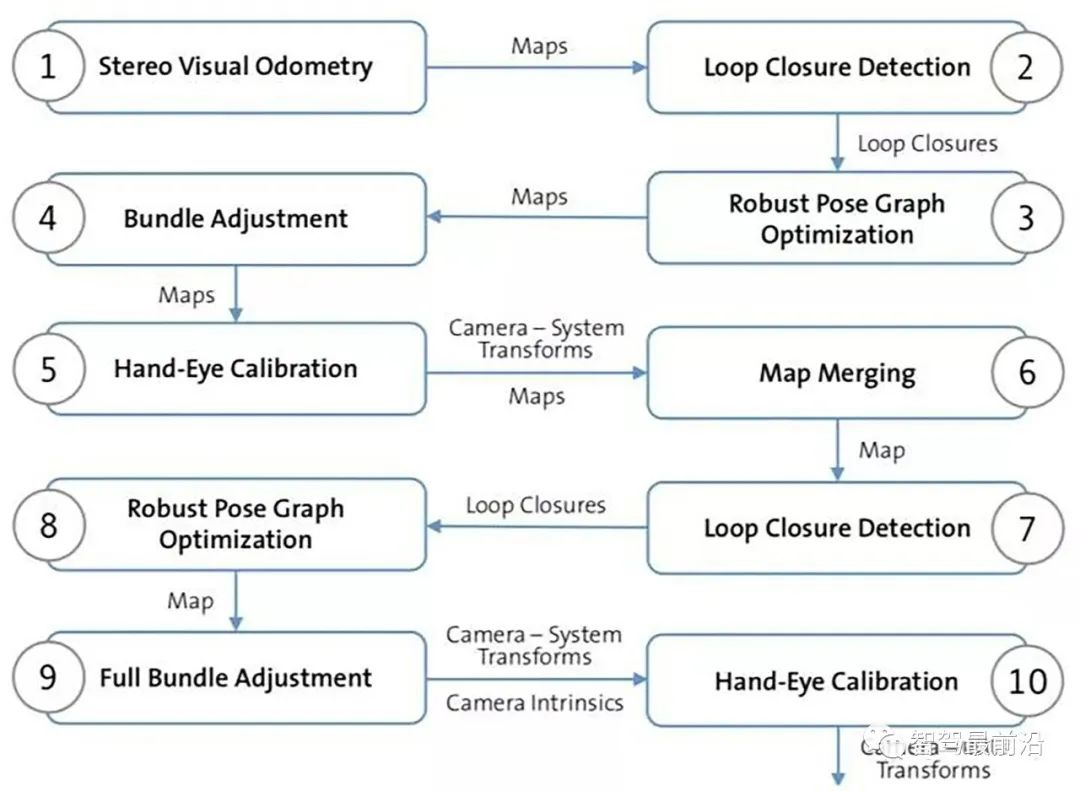

The other method is based on VO and SLAM. It is more complicated, but it can perform map-based localization at the same time. Online calibration with SLAM is not suitable for high-frequency operation. The following figure is the flow chart of the algorithm: steps 1-4 obtain a globally consistent map through stereo vision SLAM; step 5 gives the initial estimate of the binocular camera transformation; step 6 aggregates the maps of all stereo cameras into one map; steps 7-8 obtain the poses between the multiple cameras.

As with the monocular method, online calibration can be completed quickly under the assumptions that the lane lines are parallel and the road is flat, that is, using vanishing point theory. The method assumes a flat road model and clear longitudinal lane lines, with no other objects whose edges are parallel to them. It requires a low driving speed and continuous lane lines, and the binocular configuration requires the elevation/roll angles of the left camera relative to the road surface (yaw/roll angles) to be relatively small. The drift of the binocular extrinsic parameters can then be calculated by comparing the current vanishing point with the initial one obtained from offline calibration (Figure 5-269). The algorithm estimates the camera's elevation/roll angles from the vanishing point.
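A rough sketch of the vanishing-point idea under a pinhole model: intersect two lane lines in the image to get the vanishing point, convert its offset from the principal point into angles, and compare with the angles from offline calibration. The angle names (pitch and yaw here), the sign conventions and the intrinsics are assumptions for illustration and may not match the convention used in the figure.

```python
import math


def line_intersection(l1, l2):
    """Each line is ((x1, y1), (x2, y2)) in pixels. Returns the intersection point."""
    (x1, y1), (x2, y2) = l1
    (x3, y3), (x4, y4) = l2
    den = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if abs(den) < 1e-12:
        raise ValueError("lane lines are parallel in the image")
    a = x1 * y2 - y1 * x2
    b = x3 * y4 - y3 * x4
    return ((a * (x3 - x4) - (x1 - x2) * b) / den,
            (a * (y3 - y4) - (y1 - y2) * b) / den)


def angles_from_vanishing_point(vp, fx, fy, cx, cy):
    """Angles (radians) of the lane direction relative to the optical axis."""
    u, v = vp
    yaw = math.atan2(u - cx, fx)      # positive when the vanishing point is right of centre
    pitch = math.atan2(cy - v, fy)    # positive when the vanishing point is above centre
    return pitch, yaw


def extrinsic_drift(vp_now, vp_initial, fx, fy, cx, cy):
    """Change in (pitch, yaw) between the current and the initial vanishing point."""
    p1, y1 = angles_from_vanishing_point(vp_now, fx, fy, cx, cy)
    p0, y0 = angles_from_vanishing_point(vp_initial, fx, fy, cx, cy)
    return p1 - p0, y1 - y0
```

Running this for the left and right cameras separately and comparing the two drifts would give a quick indication of how the relative orientation has changed.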

03 Typical binocular automatic driving system

The following introduces several typical binocular autonomous driving systems.

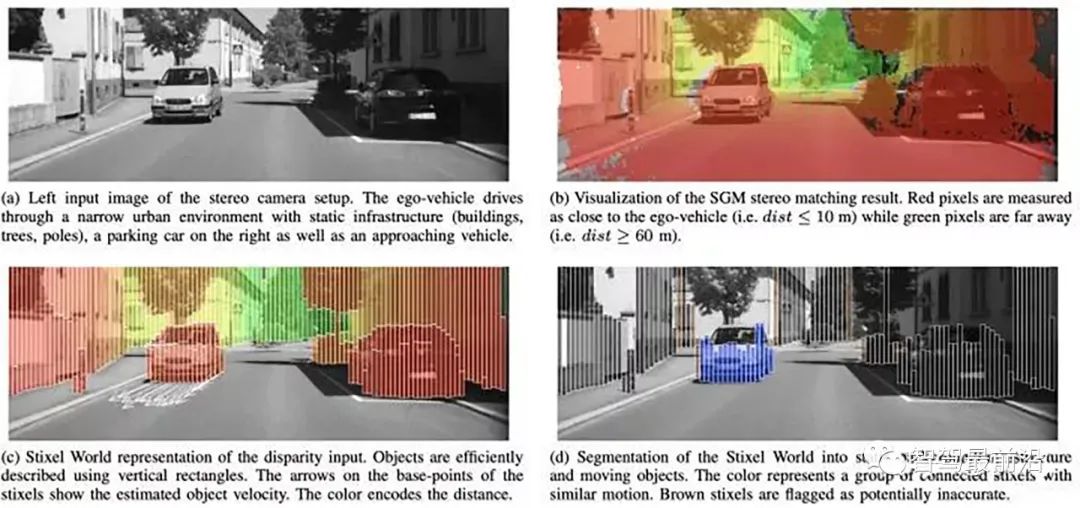

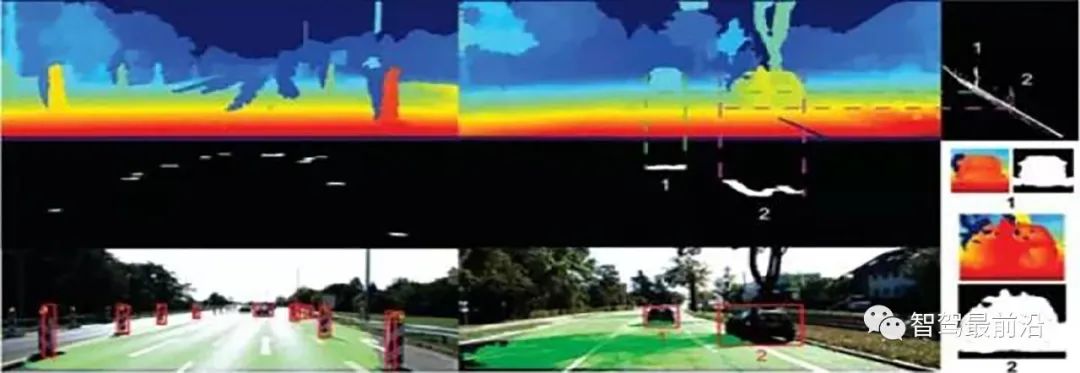

The obstacle detection algorithm Stixel adopted by Bertha Benz is based on the following assumptions: the targets in the scene are described as columns, each target stands upright on the ground, and the upper part of each target has greater depth than the lower part. The following figure (a-d) shows how SGM disparity results are turned into Stixel segmentation results:

The following figure is a schematic diagram of the Stixel computation: (a) free driving space computation based on dynamic programming; (b) attribute values in height segmentation; (c) cost image (grayscale values reversed); (d) height segmentation.

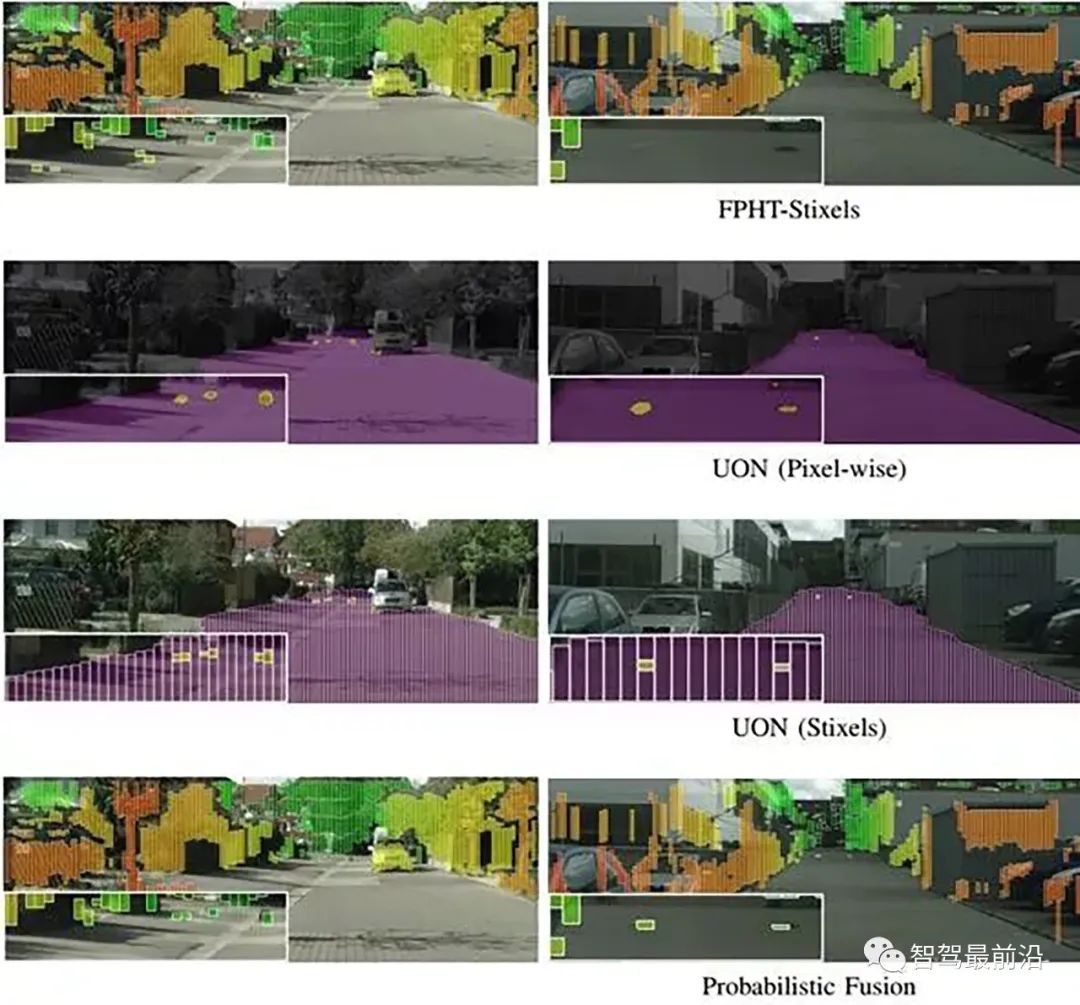

This is the block diagram and newer results of Stixel after deep learning was added for disparity fusion:

Next is an early binocular obstacle algorithm from VisLab, the Generic Obstacle and Lane Detection system (GOLD). Based on IPM (Inverse Perspective Mapping), it detects lane lines and computes obstacles on the road from the difference between the left and right images:

(a) Left. (b) Right. (c) Remapped left. (d) Remapped right. (e) Thresholded and filtered difference between remapped views. (f) In light gray, the road area visible from both cameras.

(a) Original. (b) Remapped. (c) Filtered. (d) Enhanced. (e) Binarized.

GOLD system architecture

This is VisLab's vehicle that took part in the autonomous driving competition VIAC (VisLab Intercontinental Autonomous Challenge). In addition to binocular cameras, the vehicle also carries a lidar as an aid to road classification.

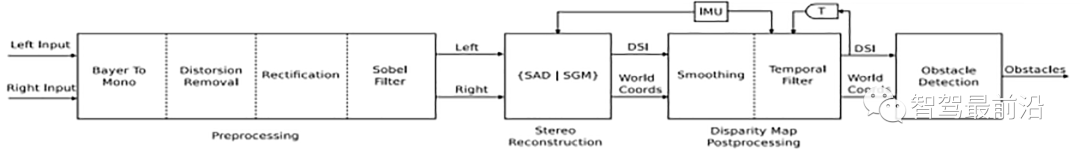

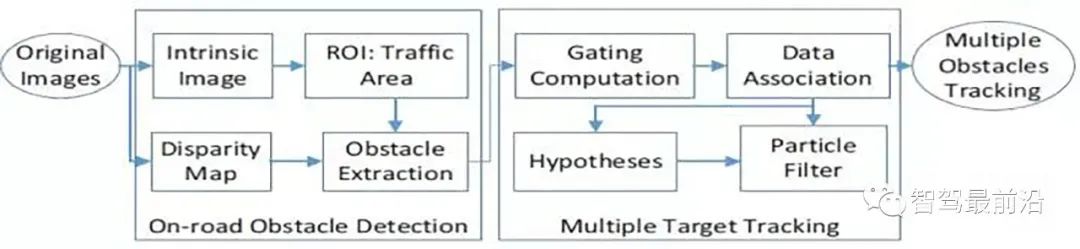

This is its binocular obstacle detection flow chart: disparity estimation uses the SGM algorithm and a SAD-based correlation algorithm.

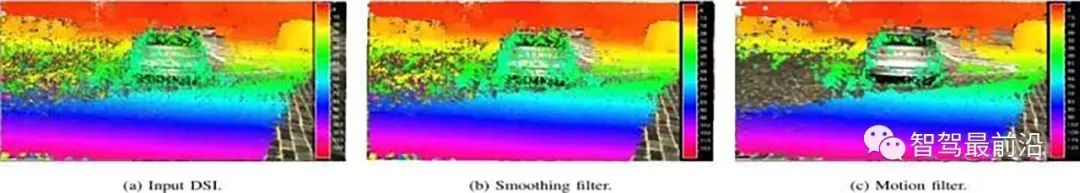

Two DSI (Disparity Space Image) filters are added in post-processing, as shown in Figure 5-274. One performs smoothing; the other performs motion trajectory processing based on the inertial measurement unit (IMU).

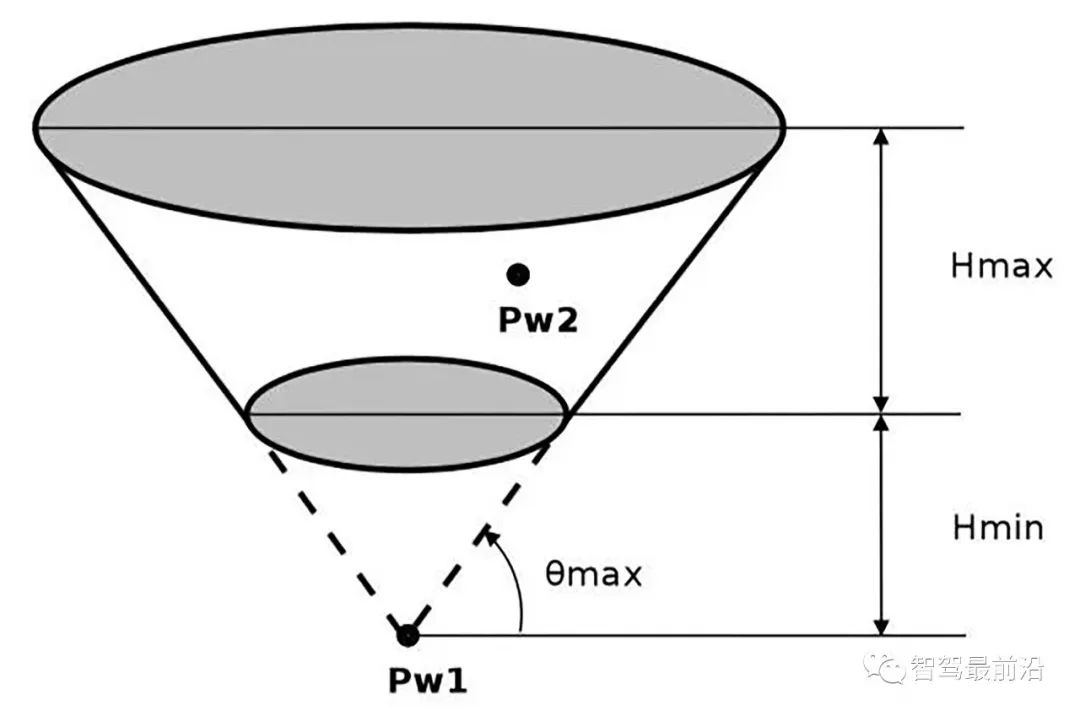

The obstacle detection algorithm adopts the JPL method and clusters obstacles based on the spatial layout and the physical characteristics of the vehicle. The physical characteristics include the maximum height (vehicle), the minimum height (obstacle) and the maximum passable range of the road. These constraints define a truncated cone in space, as shown in the figure; during clustering, every point that falls within the truncated cone is designated as an obstacle.

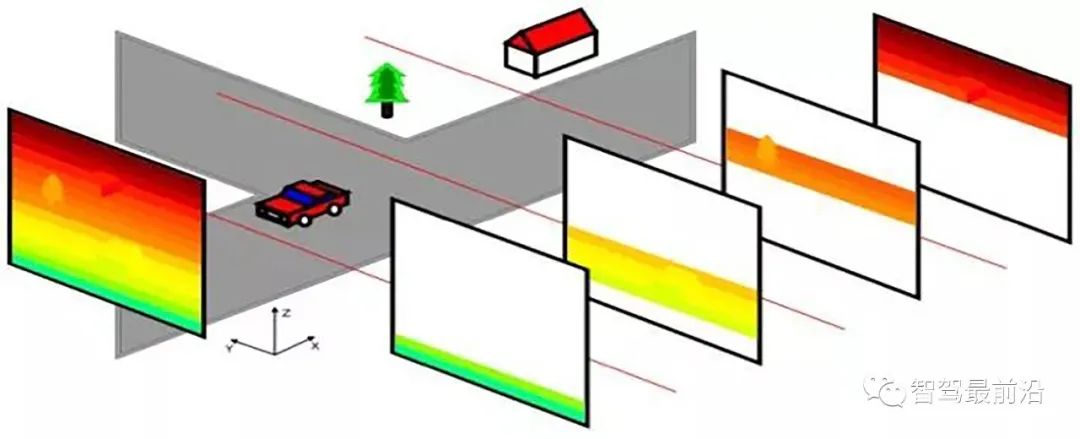
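A sketch of a pairwise test in the spirit of the truncated-cone constraint: two 3-D points are treated as belonging to the same obstacle when their height difference lies between a minimum and a maximum and the line joining them is steeper than a traversable slope. The thresholds, the y-up axis convention and the function names are assumptions, not the parameters of the system described here.

```python
import numpy as np


def compatible(p1, p2, h_min=0.2, h_max=2.0, theta_max_deg=40.0):
    """True if p2 lies inside the truncated cone (upward or downward) anchored at p1."""
    p1 = np.asarray(p1, dtype=np.float64)
    p2 = np.asarray(p2, dtype=np.float64)
    dy = abs(p2[1] - p1[1])                       # height difference, y axis pointing up
    dist = float(np.linalg.norm(p2 - p1))
    if dist == 0.0:
        return False
    steep = dy / dist > np.sin(np.radians(theta_max_deg))
    return bool(h_min < dy < h_max and steep)


def obstacle_mask(points, **thresholds):
    """Mark every point that is compatible with at least one other point."""
    pts = np.asarray(points, dtype=np.float64)
    mask = np.zeros(len(pts), dtype=bool)
    for i in range(len(pts)):
        for j in range(i + 1, len(pts)):
            if compatible(pts[i], pts[j], **thresholds):
                mask[i] = mask[j] = True
    return mask
```

The brute-force pairing is quadratic in the number of points; a practical implementation would restrict the search to the image region onto which each cone projects.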

In order to speed up the disparity estimation algorithm, the method of dividing DSI is adopted:

Another classic method is to obtain the road parallax based on the road equation (stereo vision), and calculate the obstacles on the road based on this:
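As a sketch of the road-disparity idea under strong simplifying assumptions (a flat road, a camera at height `cam_height_m` with zero pitch and roll): a road point at image row v has depth Z = f · h / (v − c_y), so its expected disparity f · B / Z = (B / h)(v − c_y) is linear in the row. Pixels whose measured disparity exceeds that value by a margin are closer than the road and are flagged as obstacles. All parameter names and values are hypothetical.

```python
import numpy as np


def road_disparity(rows, baseline_m, cam_height_m, cy):
    """Expected disparity of a flat road at each image row (zero above the horizon)."""
    rows = np.asarray(rows, dtype=np.float64)
    return np.maximum((baseline_m / cam_height_m) * (rows - cy), 0.0)


def obstacles_above_road(disparity, baseline_m, cam_height_m, cy, margin_px=1.0):
    """Boolean mask of pixels that are closer than the road surface by a margin."""
    disparity = np.asarray(disparity, dtype=np.float64)
    h, _ = disparity.shape
    expected = road_disparity(np.arange(h), baseline_m, cam_height_m, cy)[:, None]
    return (disparity - expected) > margin_px
```

With a pitched camera or a non-flat road, the road profile is usually fitted from the data instead of being predicted from fixed geometry.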

04 Summary

In general, binocular obstacle detection is essentially based on disparity maps, and many methods build on the road disparity. Perhaps with the rapid development of deep learning and more powerful computing platforms, binocular autonomous driving systems will also become popular.
