nginx load balancing: a worked example

nginx load balancing

Note: while our website was in the early stages of development, nginx only needed to proxy a single back-end server. As the site's reputation grew and more people visited, one server could no longer handle the load, so we added more servers. How do we configure the proxy for several servers? Here we use two servers as an example.

1. upstream load balancing module description

Case:

The following sets the load balancing server list.

upstream test.net{

ip_hash;

server 192.168.10.13:80;

server 192.168.10.14:80 down;

server 192.168.10.15:8009 max_fails=3 fail_timeout=20s;

server 192.168.10.16:8080;

}

server {

location / {

proxy_pass http://test.net;

}

}

upstream is the directive provided by nginx's HTTP upstream module. The module uses simple scheduling algorithms to balance client requests across the back-end servers. In the settings above, the upstream directive defines a load-balancing group named test.net. The name is arbitrary and can be referenced directly wherever it is needed later, as proxy_pass does here.

2. Load balancing algorithms supported by upstream

This article covers 4 scheduling algorithms for nginx's load balancing module, introduced one by one below. The last two were originally provided by third-party modules.

Round robin (default). Requests are assigned to the back-end servers one by one, in turn. If a back-end server goes down, it is automatically taken out of rotation so that user access is not affected. weight specifies the round-robin weight: the larger the weight, the larger the share of requests the server receives. It is mainly used when the back-end servers differ in performance.

ip_hash. Each request is assigned according to a hash of the client IP, so that visitors from the same IP always reach the same back-end server. This effectively solves the session-sharing problem of dynamic web pages.

fair. A more intelligent algorithm than the two above. It balances load according to page size and loading time, that is, it assigns requests based on the response time of the back-end servers and gives priority to those that respond quickly. nginx itself does not support fair; to use it, you must download the third-party upstream_fair module.

url_hash. Requests are assigned according to a hash of the requested URL, so that each URL is always directed to the same back-end server, which can further improve the efficiency of back-end cache servers. Older nginx versions did not support url_hash themselves and needed a third-party hash module; since nginx 1.7.2 the built-in hash directive (for example, hash $request_uri;) covers this case.
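To make the weighted default algorithm and url_hash more concrete, here is a minimal Python sketch. It is an illustration under assumptions, not nginx's source: SmoothWeightedRoundRobin and pick_by_url are hypothetical names, the "smooth" weighting scheme is one common way to spread weighted picks evenly, and CRC32 simply stands in for whatever hash function a real module uses.

```python
import zlib


class SmoothWeightedRoundRobin:
    """Illustrative weighted round robin (hypothetical helper, not nginx code)."""

    def __init__(self, weights):
        # weights: mapping of server name -> positive integer weight
        self.weights = dict(weights)
        self.current = {name: 0 for name in self.weights}
        self.total = sum(self.weights.values())

    def pick(self):
        # Every server gains its weight, the current leader is chosen,
        # and the leader is then pushed back by the total weight.
        for name, weight in self.weights.items():
            self.current[name] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


def pick_by_url(url, servers):
    """Map a URL to one server; the same URL always gives the same server."""
    return servers[zlib.crc32(url.encode("utf-8")) % len(servers)]


if __name__ == "__main__":
    rr = SmoothWeightedRoundRobin({"192.168.18.201": 2, "192.168.18.202": 1})
    print([rr.pick() for _ in range(6)])
    print(pick_by_url("/index.html", ["192.168.18.201", "192.168.18.202"]))
```

With weights 2 and 1, the first server receives two of every three requests, interleaved rather than back to back. With equal weights the picks simply alternate.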

3. Upstream supported status parameters

In the http upstream module, the server directive specifies the IP address and port of each back-end server, and can also set the state of that server in load-balancing scheduling. Commonly used states are:

down, the server is temporarily excluded from load balancing.

backup, a reserved standby machine. Requests are sent to it only when all non-backup machines are down or busy, so the standby machine carries the lightest load.

max_fails, the number of failed attempts allowed within fail_timeout before the server is considered unavailable; the default is 1. What counts as a failed attempt is defined by the proxy_next_upstream directive.

fail_timeout, how long the server is taken out of rotation once max_fails failures have occurred; the default is 10 seconds. max_fails and fail_timeout are used together.

Note that when the scheduling algorithm is ip_hash, a back-end server cannot be marked backup. nginx versions before 1.3.1 and 1.2.2 also did not allow weight together with ip_hash.
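The effect of down and backup on which servers may receive a request can be sketched as follows. This is a simplified model with hypothetical names (Server, candidates); the available flag is an assumption standing in for the result of max_fails and fail_timeout accounting, and the "busy" case is not modelled.

```python
from dataclasses import dataclass


@dataclass
class Server:
    address: str
    down: bool = False       # mirrors the `down` parameter
    backup: bool = False     # mirrors the `backup` parameter
    available: bool = True   # assumed runtime health flag (not an nginx parameter)


def candidates(servers):
    """Return the servers that may receive the next request."""
    usable = [s for s in servers if not s.down and s.available]
    primary = [s for s in usable if not s.backup]
    # Backup servers are considered only when no primary server is usable.
    return primary or [s for s in usable if s.backup]


if __name__ == "__main__":
    pool = [
        Server("192.168.10.13:80"),
        Server("192.168.10.14:80", down=True),
        Server("127.0.0.1:8080", backup=True),
    ]
    print([s.address for s in candidates(pool)])
    pool[0].available = False  # simulate the only primary server failing
    print([s.address for s in candidates(pool)])
```

The server marked down is never returned, and the backup entry appears only after the last usable primary server has failed.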

4. Experimental topology

5. Configure nginx load balancing

[root@nginx ~]# vim /etc/nginx/nginx.conf

upstream webservers {

server 192.168.18.201 weight=1;

server 192.168.18.202 weight=1;

}

server {

listen 80;

server_name localhost;

#charset koi8-r;

#access_log logs/host.access.log main;

location / {

proxy_pass http://webservers;

proxy_set_header X-Real-IP $remote_addr;

}

}

Note: upstream must be defined outside server { }, at the http level; it cannot be defined inside server { }. Once upstream is defined, reference it with proxy_pass.

6. Reload the configuration file

[root@nginx ~]# service nginx reload

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

Reloading nginx: [OK]

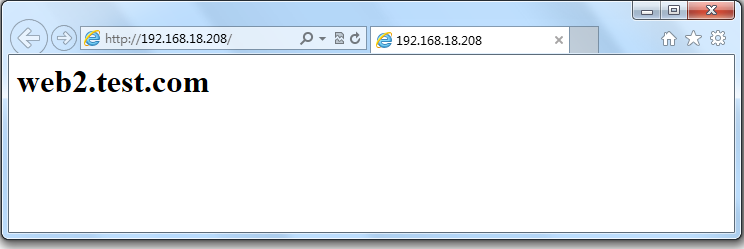

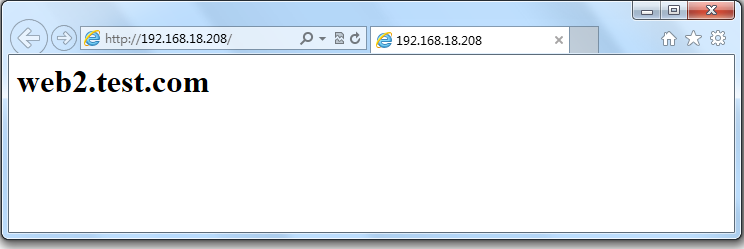

7. Test it

Note: keep refreshing the page and you will see web1 and web2 appear alternately, which shows that load balancing is working.
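The same check can be scripted rather than done by hand in a browser. The sketch below assumes each back-end serves a page whose body identifies it (for example web1 or web2). tally_responses and fetch_body are hypothetical helper names, and the demo at the bottom uses a fake fetcher instead of a real proxy address so that it runs anywhere.

```python
from collections import Counter
from itertools import cycle
from urllib.request import urlopen


def fetch_body(url):
    """Fetch a URL and return its body as stripped text."""
    with urlopen(url, timeout=5) as resp:
        return resp.read().decode("utf-8", errors="replace").strip()


def tally_responses(url, n, fetch=fetch_body):
    """Request `url` n times and count how often each distinct body came back."""
    return Counter(fetch(url) for _ in range(n))


if __name__ == "__main__":
    # Stand-in for the real proxy: alternates between two page bodies,
    # the way an equal-weight round robin over web1 and web2 would.
    fake_pages = cycle(["web1", "web2"])
    counts = tally_responses("http://proxy.invalid/", 10,
                             fetch=lambda _url: next(fake_pages))
    print(counts)
```

Against a real setup, call tally_responses with the proxy's address and the default fetcher; roughly equal counts for the two bodies indicate that requests are being spread evenly.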

8. Check the web servers' access logs

web1:

[root@web1 ~]# tail /var/log/httpd/access_log

192.168.18.138 - - [04/Sep/2013:09:41:58 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:41:58 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:41:59 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:41:59 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:42:00 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:42:00 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:42:00 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:44:21 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:44:22 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:44:22 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

web2:

First, modify the web server's log format.

[root@web2 ~]# vim /etc/httpd/conf/httpd.conf

LogFormat "%{X-Real-IP}i %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" combined

[root@web2 ~]# service httpd restart

Stopping httpd: [OK]

Starting httpd: [OK]

Then visit the site several times and check the log again.

[root@web2 ~]# tail /var/log/httpd/access_log

192.168.18.138 - - [04/Sep/2013:09:50:28 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:50:28 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:50:28 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:50:28 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:50:28 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:50:28 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:50:28 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:50:28 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:50:29 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

192.168.18.138 - - [04/Sep/2013:09:50:29 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0)"

Note: as you can see, the logs of both servers record requests from 192.168.18.138, which shows that the load balancing configuration works.
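To confirm this without reading the log by eye, the client addresses can be counted with a short script. This is a minimal sketch, assuming the log lines follow the format shown above; LOG_LINE and count_clients are names chosen for illustration.

```python
import re
from collections import Counter

# client ident user [time] "request" status size ...
LOG_LINE = re.compile(
    r'^(?P<client>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+)'
)


def count_clients(lines):
    """Count requests per client address; lines that do not match are skipped."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match:
            counts[match.group("client")] += 1
    return counts


if __name__ == "__main__":
    sample = [
        '192.168.18.138 - - [04/Sep/2013:09:50:28 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0"',
        '192.168.18.138 - - [04/Sep/2013:09:50:29 +0800] "GET / HTTP/1.0" 200 23 "-" "Mozilla/5.0"',
        "not a log line",
    ]
    print(count_clients(sample))
```

To run it against a real log, pass an open file object, for example count_clients(open("/var/log/httpd/access_log")).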

9. Configure nginx to perform health status check

max_fails is the number of failed attempts allowed within fail_timeout before a server is considered unavailable; the default is 1. What counts as a failed attempt is defined by the proxy_next_upstream directive.

Once a server reaches max_fails failures, nginx stops sending requests to it for a period of time (fail_timeout). Together, max_fails and fail_timeout provide a health status check.
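As a rough mental model of how max_fails and fail_timeout interact, consider the following sketch. It is a simplification, not nginx's actual bookkeeping: PassiveHealth is a hypothetical class, and timestamps are passed in explicitly so the behaviour is easy to follow.

```python
class PassiveHealth:
    """Simplified model of max_fails / fail_timeout for a single server."""

    def __init__(self, max_fails=1, fail_timeout=10.0):
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout
        self.failures = []            # timestamps of recent failed attempts
        self.unavailable_until = 0.0  # server is skipped before this time

    def record_failure(self, now):
        # Only failures inside the last fail_timeout seconds are counted.
        self.failures = [t for t in self.failures if now - t < self.fail_timeout]
        self.failures.append(now)
        # max_fails=0 disables the accounting, so the server is never skipped.
        if self.max_fails and len(self.failures) >= self.max_fails:
            self.unavailable_until = now + self.fail_timeout
            self.failures.clear()

    def record_success(self):
        self.failures.clear()

    def is_available(self, now):
        return now >= self.unavailable_until


if __name__ == "__main__":
    web1 = PassiveHealth(max_fails=2, fail_timeout=2)
    web1.record_failure(now=0.0)
    web1.record_failure(now=1.0)
    print(web1.is_available(now=1.5))  # inside the 2-second pause
    print(web1.is_available(now=3.0))  # pause is over, server is tried again
```

With max_fails=2 and fail_timeout=2, two failures within two seconds take the server out of rotation for two seconds, after which it becomes eligible again.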

[root@nginx ~]# vim /etc/nginx/nginx.conf

upstream webservers {

server 192.168.18.201 weight=1 max_fails=2 fail_timeout=2;

server 192.168.18.202 weight=1 max_fails=2 fail_timeout=2;

}

10. Reload the configuration file

[root@nginx ~]# service nginx reload

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

Reloading nginx: [OK]

11. Stop a server and test

First stop web1 and test.

[root@web1 ~]# service httpd stop

Stopping httpd: [OK]

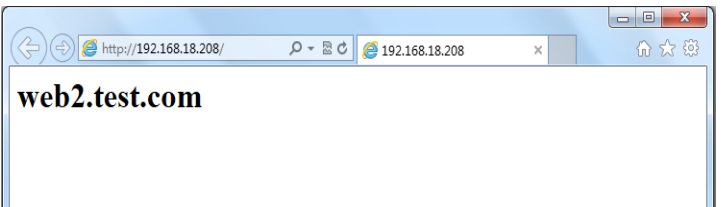

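Note: as you can see, only web2 can be reached now. Start web1 again and visit the site once more.

[root@web1 ~]# service httpd start

Starting httpd: [OK]

Note: as you can see, web1 can be reached again, which shows that nginx's health status check is configured correctly. But consider what happens if, unfortunately, none of the servers can provide service: users who open the page get an error page, which hurts the user experience. Can we configure something like the sorry_server used with LVS? Yes, although in nginx it is configured as backup rather than sorry_server.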

12. Configure the backup server

[root@nginx ~]# vim /etc/nginx/nginx.conf

server {

listen 8080;

server_name localhost;

root /data/www/errorpage;

index index.html;

}

upstream webservers {

server 192.168.18.201 weight=1 max_fails=2 fail_timeout=2;

server 192.168.18.202 weight=1 max_fails=2 fail_timeout=2;

server 127.0.0.1:8080 backup;

}

[root@nginx ~]# mkdir -pv /data/www/errorpage

[root@nginx errorpage]# cat index.html

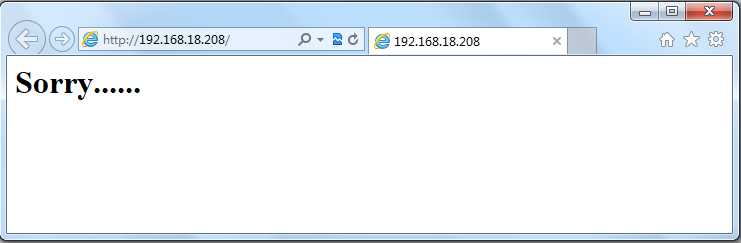

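<h1 id="sorry">sorry......</h1>

13. Reload the configuration file

[root@nginx errorpage]# service nginx reload

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

Reloading nginx: [OK]

14. Shut down the web servers and test

[root@web1 ~]# service httpd stop

Stopping httpd: [OK]

[root@web2 ~]# service httpd stop

Stopping httpd: [OK]

Note: as you can see, when none of the servers can work, the backup server takes over. That completes the backup server configuration. Next, we configure ip_hash load balancing.

15. Configure ip_hash load balancing

ip_hash: each request is assigned according to a hash of the client IP, so that visitors from the same IP always reach the same back-end server. This effectively solves the session-sharing problem of dynamic web pages. (It is commonly used by e-commerce websites.)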

[root@nginx ~]# vim /etc/nginx/nginx.conf

upstream webservers {

ip_hash;

server 192.168.18.201 weight=1 max_fails=2 fail_timeout=2;

server 192.168.18.202 weight=1 max_fails=2 fail_timeout=2;

#server 127.0.0.1:8080 backup;

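}

Note: when the scheduling algorithm is ip_hash, a back-end server cannot be marked backup. (Why? Think about it: if the hash assigned you to the backup server, you would only ever get the error page instead of the site, so a backup server cannot be configured here.)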
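The ip_hash idea can be sketched in a few lines. For IPv4, nginx uses the first three octets of the client address as the hashing key, so clients in the same /24 network land on the same server. The function names below are hypothetical, and CRC32 is only a stand-in for nginx's internal hash function.

```python
import zlib


def ip_hash_key(client_ip):
    """Return the hashing key: the first three octets of an IPv4 address."""
    return ".".join(client_ip.split(".")[:3])


def pick_by_ip(client_ip, servers):
    """Map a client address to one server; the same key gives the same server."""
    key = ip_hash_key(client_ip)
    return servers[zlib.crc32(key.encode("ascii")) % len(servers)]


if __name__ == "__main__":
    backends = ["192.168.18.201", "192.168.18.202"]
    # Both clients share the key 192.168.18, so they reach the same back-end.
    print(pick_by_ip("192.168.18.138", backends))
    print(pick_by_ip("192.168.18.7", backends))
```

This also explains why a test from a single client machine keeps hitting one back-end: every request carries the same key.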

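16. Reload the configuration

[root@nginx ~]# service nginx reload

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

Reloading nginx: [OK]

17. Test it

Note: as you can see, no matter how many times you refresh the page, it always shows web2, which shows that ip_hash load balancing is configured correctly. Next, let's count the connections to web2.

18. Count the connections to web2

[root@web2 ~]# netstat -an | grep :80 | wc -l

304

Note: as you keep refreshing, the number of connections keeps growing.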
