ChatGPT application is booming. Where to find a secure big data base?

There is no doubt that AIGC is bringing a profound change to human society.

Strip away its dazzling appearance, and its core operation turns out to depend on the support of massive amounts of data.

ChatGPT’s “intrusion” has caused concerns about content plagiarism in all walks of life, as well as increased awareness of network data security.

Although AI technology is neutral, that neutrality is no excuse for evading responsibilities and obligations.

Recently, Britain's intelligence agency, the Government Communications Headquarters (GCHQ), warned that ChatGPT and other artificial intelligence chatbots could pose a new security threat.

Although the concept of ChatGPT has not been around for long, the threats to network security and data security have become the focus of the industry.

Regarding ChatGPT, which is still in its early stages of development, are such worries unfounded?

Security threats may be occurring

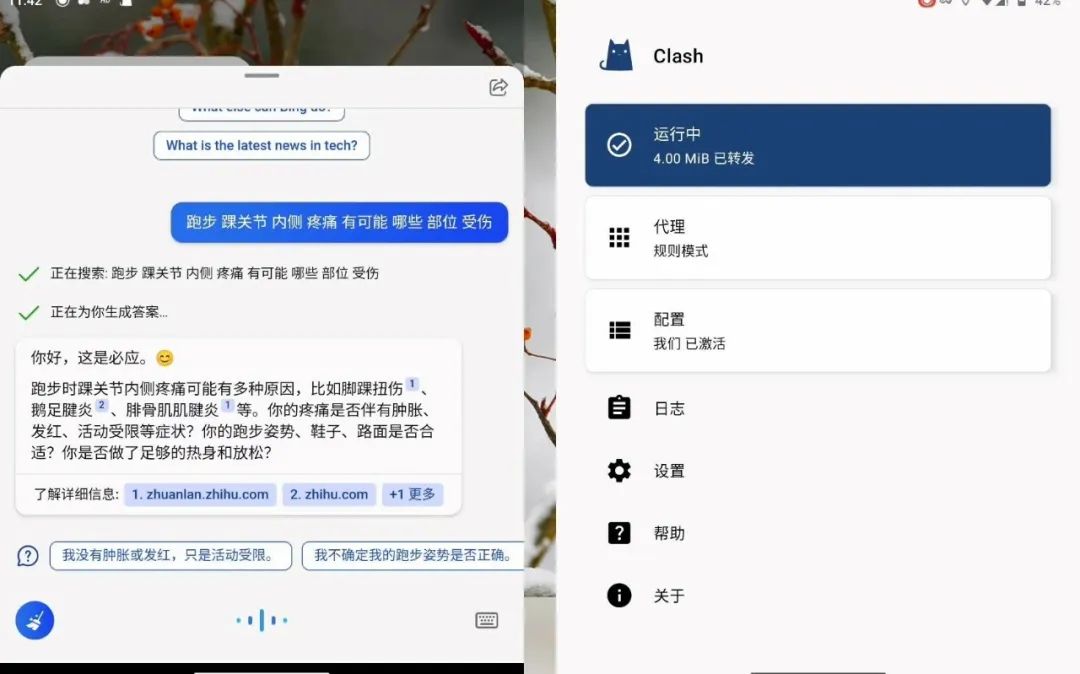

At the end of last year, the startup OpenAI launched ChatGPT. This year, its investor Microsoft launched Bing Chat, a chatbot based on ChatGPT technology.

Because this type of software can provide human-like conversations, this service has become popular all over the world.

GCHQ’s cybersecurity arm noted that companies that provide AI chatbots can see the content of queries entered by users. As far as ChatGPT is concerned, its developer OpenAI can see this.

ChatGPT is trained through a large number of text corpora, and its deep learning capabilities rely heavily on the data behind it.

Due to concerns about information leakage, many companies and institutions have issued "ChatGPT bans".

City of London law firm Mishcon de Reya has banned its lawyers from entering client data into ChatGPT over concerns that legally privileged information could be compromised.

International consulting firm Accenture warned its 700,000 employees worldwide not to use ChatGPT for similar reasons, fearing that confidential client data could end up in the wrong hands.

Japan’s SoftBank Group, the parent company of British computer chip company Arm, also warned its employees not to enter company personnel’s identifying information or confidential data into artificial intelligence chatbots.

In February this year, JPMorgan Chase became the first Wall Street investment bank to restrict the use of ChatGPT in the workplace.

Citigroup and Goldman Sachs followed suit, with the former banning employees from company-wide access to ChatGPT and the latter restricting employees from using the product on the trading floor.

Earlier, to prevent employees from leaking secrets when using ChatGPT, Amazon and Microsoft prohibited them from sharing sensitive data with it, because this information may be used as training data for further iterations.

In fact, behind these artificial intelligence chatbots are large language models (LLMs), and users' queries will be stored and, at some point in the future, used to develop LLM services or models.

This means that the LLM provider can read related queries and possibly incorporate them into future releases in some way.

Although LLM operators should take steps to protect data, the possibility of unauthorized access cannot be completely ruled out. Therefore, enterprises need to ensure that they have strict policies and provide technical support to monitor the use of LLM to minimize the risk of data exposure.
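One form such technical support could take is a filter that redacts obviously sensitive strings before a prompt leaves the company network. The following is a minimal sketch under stated assumptions, not a complete data loss prevention tool: the `PATTERNS` table and the `redact` function are hypothetical names, and the two regular expressions only illustrate the idea (a real policy would cover many more data types).

```python
import re

# Hypothetical patterns for illustration; a real policy would be far broader.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(prompt: str) -> tuple[str, list[str]]:
    """Replace sensitive matches with placeholders.

    Returns the redacted prompt and the labels of the patterns that matched,
    so the caller can log or block the request.
    """
    found = []
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            found.append(label)
    return prompt, found


if __name__ == "__main__":
    text = "Contact alice@example.com about card 4111 1111 1111 1111"
    clean, labels = redact(text)
    print(clean)   # the redacted prompt
    print(labels)  # which kinds of data were found
```

Returning the matched labels alongside the redacted text lets the same function serve both purposes named above: protecting the data and monitoring how often employees attempt to submit it.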

In addition, although ChatGPT itself cannot directly attack network or data security, its ability to generate and understand natural language means it can be used to fabricate false information, carry out social engineering attacks, and so on.

In addition, attackers can also use natural language to let ChatGPT generate corresponding attack code, malware code, spam, etc.

AI can therefore enable people who previously lacked the ability to launch attacks to generate them, and it can greatly increase the success rate of attacks.

With the support of technologies and models such as automation, AI, and "attack as a service", network security attacks have shown a skyrocketing trend.

Before ChatGPT became popular, there had been many cyber attacks by hackers using AI technology.

In fact, it is not uncommon for users to steer an artificial intelligence off course. Six years ago, Microsoft launched the chatbot Tay. When it went online, Tay behaved politely, but in less than 24 hours it was "led astray" by unscrupulous users. It spewed rude and vulgar language, and its remarks even involved racism, pornography, and Nazism, full of discrimination, hatred, and prejudice, so it had to be taken offline, ending its short life.

On the other hand, the risk closer to the user is that when using AI tools such as ChatGPT, users may inadvertently enter private data into the cloud model. This data may become training data and may also become part of the answers provided to others, leading to data breaches and compliance risks.

AI applications must lay a secure foundation

As a large language model, the core logic of ChatGPT is actually the collection and processing of data and the output of computed results.

In general, these links may be associated with risks in three aspects: technical elements, organizational management, and digital content.

Although ChatGPT has stated that it will strictly abide by privacy and security policies when storing the data required for training and running models, problems such as cyber attacks and data crawling may still arise in the future, and these data security risks cannot be ignored.

Especially when it comes to the capture, processing, and combined use of national core data, local and industry important data, and personal privacy data, it is necessary to balance data security protection and flow sharing.

In addition to the hidden dangers of data and privacy leaks, AI technology also has problems such as data bias, false information, and difficulty in interpreting models, which may lead to misunderstanding and distrust.

The trend has arrived, and the wave of AIGC is coming. Against the backdrop of a promising future, it is crucial to move forward and establish a data security protection wall.

Especially when AI technology gradually improves, it can not only become a powerful tool for productivity improvement, but it can also easily become a tool for illegal crimes.

Monitoring data from the Qi’anxin Threat Intelligence Center shows that from January to October 2022, more than 95 billion pieces of Chinese institutional data were illegally traded overseas, of which more than 57 billion pieces were personal information.

Therefore, how to ensure the security of data storage, calculation, and circulation is a prerequisite for the development of the digital economy.

From an overall perspective, top-level design and industrial development should go hand in hand. On the basis of the "Cybersecurity Law", the risk and responsibility analysis system should be refined and a security accountability mechanism should be established.

At the same time, regulatory authorities can carry out regular inspections, and companies in the security field can work together to build a full-process data security system.

Regarding the issues of data compliance and data security, especially after the introduction of the "Data Security Law", data privacy is becoming more and more important.

If data security and compliance cannot be guaranteed during the application of AI technology, it may cause great risks to the enterprise.

In particular, small and medium-sized enterprises have relatively little knowledge about data privacy security and do not know how to protect data from security threats.

Data security compliance is not a matter for a certain department, but the most important matter for the entire enterprise.

Enterprises should train employees so that they understand that everyone who uses data has an obligation to protect it, including IT personnel, AI departments, data engineers, developers, and people who use reports. People and technology need to work together.

Faced with the aforementioned potential risks, how can regulators and relevant companies strengthen data security protection in the AIGC field from the institutional and technical levels?

Compared with regulatory measures such as directly restricting use on user terminals, it will be more effective to clearly require companies that research and develop AI technology to follow scientific and technological ethical principles, because these companies can limit users' scope of use at the technical level.

At the institutional level, it is necessary to establish and improve a data classification and hierarchical protection system based on the characteristics and functions of the data required by AIGC's underlying technology.

For example, the data in the training data set can be categorized and managed by data subject, degree of data processing, data rights attributes, and so on, and graded according to the value of the data to the data rights holder and the degree of harm to the data subject if the data is tampered with or destroyed.

On the basis of data categorization and grading, establish data protection standards and sharing mechanisms that match the data type and security level.
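The categorize-then-grade idea above can be sketched in a few lines. This is only an illustration under stated assumptions: the three levels, the 1 to 3 scoring scale, the rule of taking the higher of the two scores, and the `POLICY` table are all hypothetical and are not drawn from any regulation or from the article.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical security levels, lowest to highest."""
    GENERAL = 1
    IMPORTANT = 2
    CORE = 3


@dataclass(frozen=True)
class DataAsset:
    name: str
    subject: str          # category, e.g. "personal", "enterprise", "public"
    value_to_owner: int   # 1 (low) to 3 (high), assumed scale
    harm_if_damaged: int  # 1 (low) to 3 (high), assumed scale


def grade(asset: DataAsset) -> Level:
    """Grade an asset by the higher of its value and potential harm.

    Raises ValueError if either score is outside the 1 to 3 scale.
    """
    for score in (asset.value_to_owner, asset.harm_if_damaged):
        if score not in (1, 2, 3):
            raise ValueError(f"score out of range: {score}")
    return Level(max(asset.value_to_owner, asset.harm_if_damaged))


# Hypothetical protection and sharing rules matched to each level.
POLICY = {
    Level.GENERAL: "may be shared for model training",
    Level.IMPORTANT: "share only after de-identification",
    Level.CORE: "do not share; restricted access only",
}

if __name__ == "__main__":
    logs = DataAsset("support chat logs", "personal", 2, 3)
    level = grade(logs)
    print(level.name, "->", POLICY[level])
```

Taking the maximum of the two scores is a deliberately conservative choice: an asset is protected at the level demanded by its worst-case dimension.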

Focusing on enterprises, it is also necessary to accelerate the application of "privacy computing" (privacy-preserving computation) technology in the AIGC field.

This type of technology allows multiple data owners to share, interoperate on, compute over, and model data by sharing an SDK or opening SDK permissions, without exposing the data itself. It ensures that AIGC can provide services normally while the data is not leaked to other participants.
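To make "computing without exposing the data itself" concrete, here is a minimal sketch of additive secret sharing, one well-known privacy-preserving computation technique. It is not the SDK-based approach the article refers to, and the names `share`, `reconstruct`, `secure_sum`, and the modulus `PRIME` are assumptions chosen for illustration. The sketch assumes non-negative integer inputs whose total is smaller than `PRIME`, and it simulates all parties in one process.

```python
import secrets

# Assumed modulus; all arithmetic is done modulo this prime.
PRIME = 2**61 - 1


def share(value: int, n_parties: int) -> list[int]:
    """Split a value into n random shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: list[int]) -> int:
    """Recombine shares; any proper subset reveals nothing about the value."""
    return sum(shares) % PRIME


def secure_sum(private_values: list[int]) -> int:
    """Compute the total of all owners' values without pooling raw inputs."""
    n = len(private_values)
    # Each owner splits its value into n shares, one destined for each party.
    all_shares = [share(v, n) for v in private_values]
    # Party j only ever sees the j-th share from each owner and adds them up.
    partial_sums = [sum(s[j] for s in all_shares) % PRIME for j in range(n)]
    # Only the partial sums are published and combined.
    return reconstruct(partial_sums)


if __name__ == "__main__":
    # Three data owners with private counts; only the total is revealed.
    print(secure_sum([120, 45, 300]))
```

In a real deployment each party would run on its own machine and exchange shares over authenticated channels; the single-process simulation here only shows why the arithmetic works.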

In addition, the importance of full-process compliance management has become increasingly prominent.

Enterprises should first pay attention to whether the data resources they use comply with legal and regulatory requirements. Second, they should ensure that the entire process of algorithm and model operation is compliant. Their innovative research and development should also meet the public's ethical expectations as far as possible.

At the same time, enterprises should formulate internal management standards and set up supervision departments to oversee data in every AI application scenario, ensuring that data sources, processing, and output are all legal, and thereby ensuring their own compliance.

The key to AI application lies in weighing deployment method against cost. However, it must be noted that if security compliance and privacy protection are not done well, this may create "an even greater risk."

AI is a double-edged sword. If used well, enterprises will be even more powerful; if used improperly, neglecting security, privacy and compliance will bring greater losses to the enterprise.

Therefore, before AI can be applied, it is necessary to build a more stable "data base". As the saying goes, only stability can lead to long-term development.

The above is the detailed content of ChatGPT application is booming. Where to find a secure big data base?. For more information, please follow other related articles on the PHP Chinese website!
