Your Vision-Language Model Might Be a Bag of Words


Translator | Zhu Xianzhong

Reviewer | Chonglou

Multimodal artificial intelligence has become a hot topic. With the recent release of GPT-4, we are seeing countless possible new applications and future technologies that were unimaginable just six months ago. Visual language models are useful for many different tasks. For example, you can use CLIP (Contrastive Language-Image Pre-training, link: https://www.php.cn/link/b02d46e8a3d8d9fd6028f3f2c2495864) for zero-shot image classification on unseen datasets; in this setting, good performance can usually be obtained without any task-specific training.

At the same time, visual language models are not perfect. In this article, we explore the limitations of these models, highlighting where and why they may fail. This article is a short, high-level description of our recent paper, which will be presented as an ICLR 2023 Oral. If you want to view the complete source code, see https://www.php.cn/link/afb992000fcf79ef7a53fffde9c8e044.

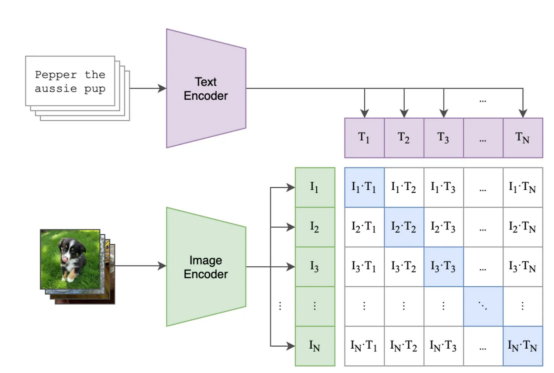

Introduction

What is a visual language model?

Visual language models exploit the synergy between visual and language data to perform a variety of tasks, and they have revolutionized the field. Although many visual language models have been introduced in the literature, CLIP (Contrastive Language-Image Pre-training) is still the best-known and most widely used. By embedding images and captions in the same vector space, CLIP allows cross-modal reasoning, enabling users to perform tasks such as zero-shot image classification and text-to-image retrieval with good accuracy. CLIP learns its image and caption embeddings with contrastive learning.

Introduction to contrastive learning

Contrastive learning allows CLIP to learn to associate images with their corresponding captions by minimizing the distance between them in a shared vector space. The impressive results achieved by CLIP and other contrastive models show that this approach is very effective.

The contrastive loss is computed over batches of image-caption pairs. The model is optimized to maximize the similarity between the embeddings of matching image-text pairs and to reduce the similarity between all other image-text pairs in the batch.

The figure below shows an example of a possible batch and training step, where:

- The purple squares contain the embeddings of all captions, and the green squares contain the embeddings of all images.

- The squares of the matrix contain the dot products between all image embeddings and all text embeddings in the batch (read these as cosine similarities, because the embeddings are normalized).

- The blue squares contain the dot products for the matching image-text pairs, whose similarity the model must maximize. The white squares are the similarities we want to minimize, because each of them pairs an image with a caption that does not match it, such as an image of a cat and the description "my vintage chair".

(The blue squares are the image-text pairs whose similarity we want to maximize.)
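The batch matrix described above can be made concrete with a small sketch. The code below is a minimal pure-Python illustration of a symmetric contrastive loss over a batch of image-caption embeddings; it is not CLIP's actual training code. The function names, the toy two-dimensional embeddings, and the temperature value of 0.07 are assumptions chosen for illustration.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so dot products equal cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def diagonal_cross_entropy(similarities, temperature):
    """Average cross-entropy where the correct column for row i is column i."""
    total = 0.0
    for i, row in enumerate(similarities):
        logits = [s / temperature for s in row]
        largest = max(logits)  # subtract the max for numerical stability
        log_sum_exp = largest + math.log(sum(math.exp(l - largest) for l in logits))
        total += log_sum_exp - logits[i]
    return total / len(similarities)


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss for a batch of matching image-caption pairs."""
    images = [normalize(v) for v in image_embs]
    texts = [normalize(v) for v in text_embs]
    # sim[i][j] is the cosine similarity between image i and caption j.
    sim = [[sum(a * b for a, b in zip(img, txt)) for txt in texts] for img in images]
    sim_transposed = [list(column) for column in zip(*sim)]
    image_to_text = diagonal_cross_entropy(sim, temperature)
    text_to_image = diagonal_cross_entropy(sim_transposed, temperature)
    return 0.5 * (image_to_text + text_to_image)


# Toy batch of two pairs: caption i is meant to describe image i.
toy_images = [[1.0, 0.0], [0.0, 1.0]]
aligned_texts = [[0.9, 0.1], [0.1, 0.9]]
swapped_texts = [[0.1, 0.9], [0.9, 0.1]]

loss_aligned = contrastive_loss(toy_images, aligned_texts)
loss_swapped = contrastive_loss(toy_images, swapped_texts)
print(loss_aligned < loss_swapped)
```

The diagonal of `sim` plays the role of the blue squares and the off-diagonal entries play the role of the white squares: the loss is low when the diagonal dominates each row and each column, and high when captions are paired with the wrong images.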

After training, you have a meaningful vector space that encodes both images and captions. Once you have an embedding for each image and each text, you can do a number of tasks, such as finding which images best match a caption (e.g. finding "dogs on the beach" in your 2017 summer vacation photo album) or finding which text label best fits a given image (e.g. you have a bunch of images of your dog and your cat and you want to identify which is which).

Visual language models such as CLIP have become powerful tools for solving complex AI tasks by integrating visual and linguistic information. Their ability to embed both types of data into a shared vector space has led to unprecedented accuracy and strong performance in a wide range of applications.
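Both tasks mentioned above reduce to a nearest-neighbour search in the shared space. The following is a minimal sketch under stated assumptions: the three-dimensional embeddings are hand-made stand-ins for what a trained model would produce, and the file names, captions, and helper functions are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def best_match(query, candidates):
    """Return the key of the candidate embedding most similar to the query."""
    return max(candidates, key=lambda name: cosine(query, candidates[name]))


# Hypothetical embeddings; a real model would compute these from pixels and text.
image_embeddings = {
    "beach_dog.jpg": [0.9, 0.1, 0.2],
    "sofa_cat.jpg": [0.1, 0.9, 0.1],
}
text_embeddings = {
    "a photo of a dog": [0.8, 0.2, 0.1],
    "a photo of a cat": [0.2, 0.8, 0.1],
}

# Text-to-image retrieval: which image best matches a caption?
retrieved = best_match(text_embeddings["a photo of a dog"], image_embeddings)

# Zero-shot classification: which text label best fits an image?
label = best_match(image_embeddings["sofa_cat.jpg"], text_embeddings)

print(retrieved, "|", label)
```

The same `best_match` helper serves both directions, which is the practical benefit of embedding images and captions in one space.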

Can visual language models understand language?What we

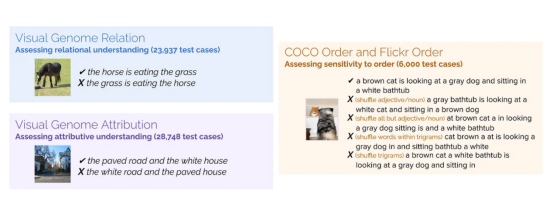

’s workis exactly trying to take some measures to answer this question. Regarding the question of whether or to what extent deep models can understand language, There are still significant debates. Here, our goal is to study visual language models and their synthesis capabilities.We first propose a new dataset to test ingredient understanding; this new benchmark is called ARO (Attribution,

Relations,and Order: Attributes, relationships and orders). Next, we explore why contrast loss may be limited in this case. Finally , we propose a simple but promising solution to this problem.New benchmark: ARO (Attributes, Relations, and Order) How well do models like CLIP (and Salesforce's recent BLIP) do at understanding language

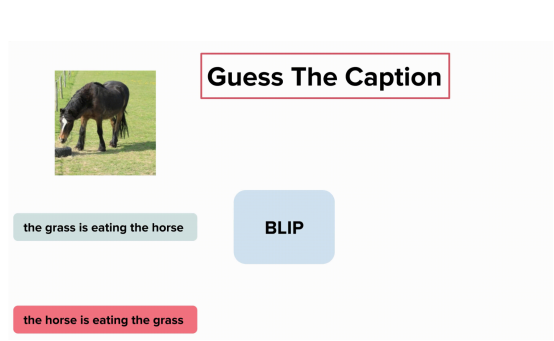

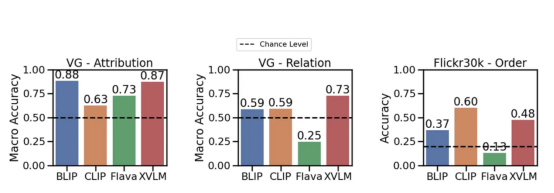

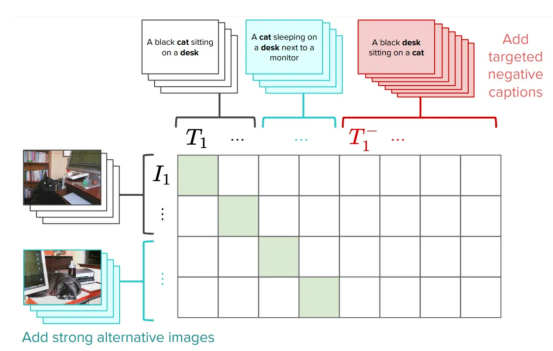

? We have collected a set of attribute-based compositions titles (e.g. "the red door and the standing man"(red door and standing person)) and a set of relationship-based composition title( For example "the horse is eating the grass" (马在吃草)) and matching images. Then, we generate a fake title instead of , such as "the grass is eating the horse" ( The grass is eating the horse). Can the models find the right title? We also explored the effect of shuffling words: Does the model prefer unshuffled Title to shuffled Title? Attributes, Relationships and Orders (ARO) #The four datasets created are shown below (please note that the sequence part contains two datasets): include Relation, Attribution and Order. For each dataset, we show an image example and a different title. Among them, only one title is correct, and the model must identify this correct title. Well, maybe it’s the BLIP model, because it can’t understand the difference between “the horse is eating grass” and “the grass is eating grass”: The BLIP model does not understand the difference between "the grass is eating grass" and "the horse is eating grass"(where Contains elements from the Visual Genome dataset, Image provided by the author) Now,let’s seeexperimentresult: Few models can go beyond the possibility of understanding relationships to a large extent (e.g., eating——Have a meal). However, CLIPModel is in Attributes and Relationships The edge aspect is slightly higher than this possibility. This actually shows that the visual language modelstillhas a problem. Different models have different attributes in attributes, relationships and order (Flick30k ) performance on benchmarks. used CLIP, BLIP and other SoTA models One of the main results of this work is that we may need more than the standard contrastive loss to learn language. This is why? titles, which TitleRequires understanding of composition (for example, "the orange cat is on the red table": The orange cat is on the red table). 
So, if the captions are complex, why can't the models learn compositional understanding? Retrieval on these datasets does not necessarily require compositional understanding.

To check this, we tested the models on retrieval with scrambled captions. Can a model still find the right image for a caption like "books the looking at people are"? If the answer is yes, that means no word-order information is needed to find the correct image.

(The task we test the models on is retrieval using scrambled captions. Even if we scramble the captions, the models can correctly find the corresponding image, and vice versa. This suggests that the retrieval task may be too simple. Image provided by the author.)

We tested different shuffling procedures, and the answer is yes: whichever shuffling technique is used, retrieval performance is largely unaffected.

Let us say it again: visual language models achieve high retrieval performance on these datasets even when word-order information is inaccessible. These models might behave like a bag of words, where order does not matter. If a model does not need to understand word order to perform well at retrieval, what are we actually measuring with retrieval?

What to do?

Now that we know there is a problem, we might want to look for a solution. The simplest approach is to make CLIP understand that "the cat is on the table" and "the table is on the cat" are different.

One of the ways we suggest is to improve CLIP training by adding hard negatives made specifically to address this problem. This is a very simple and efficient solution: it requires very small edits to the original CLIP loss and does not affect overall performance (you can read some caveats in the paper). We call this version of CLIP NegCLIP.

(Introducing hard negatives into CLIP. We add both image and text hard negatives. Image provided by the author.)

Basically, we ask NegCLIP to place an image of a black cat near the sentence "a black cat sitting on a desk" but far away from the sentence "a black desk sitting on a cat".
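To make the idea of a text hard negative concrete, here is a minimal sketch that builds one by exchanging two words in a caption. The helper `swap_words` is hypothetical, and the two words to swap are passed in by hand, which is an assumption made for illustration; it is not how the authors' pipeline selects them.

```python
from collections import Counter


def swap_words(caption, first, second):
    """Build a hard negative caption by exchanging two words of the caption."""
    words = caption.split()
    i, j = words.index(first), words.index(second)
    words[i], words[j] = words[j], words[i]
    return " ".join(words)


positive = "a black cat sitting on a desk"
hard_negative = swap_words(positive, "cat", "desk")
print(hard_negative)

# An order-blind view of the two captions cannot tell them apart:
# both contain exactly the same words the same number of times.
same_bag_of_words = Counter(positive.split()) == Counter(hard_negative.split())
print(same_bag_of_words)
```

Because the positive and the hard negative share the same bag of words, a model can only separate them by using word order, which is the property the hard negatives are meant to train.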
Note that the hard negative captions are generated automatically using POS tags. The effect of this fix is that it improves performance on the ARO benchmark without harming performance on downstream tasks such as retrieval and classification. Results on different benchmarks are summarized in the figure (see the paper for details).

(NegCLIP and CLIP on different benchmarks. The blue benchmarks are the ones we introduced, and the green benchmarks come from the literature. Image provided by the author.)

You can see a huge improvement on the ARO benchmarks, along with marginal improvements or similar performance on the other downstream tasks.

Mert (the lead author of the paper) has done a great job creating a small library for testing visual language models. You can use his code to replicate our results or to experiment with new models. All it takes to download the datasets and start running is a few lines of Python; see the "Programming implementation" section at the end of this article.

The code for NegCLIP (which is actually an updated copy of OpenCLIP) is available at https://github.com/vinid/neg_clip.

Conclusion

Visual language models can already do a lot of things. We can't wait to see what future models like GPT-4 can do!

Original title: Your Vision-Language Model Might Be a Bag of Words, Author: Federico Bianchi



Programming implementation

```python
import clip
from dataset_zoo import VG_Relation, VG_Attribution

# Load CLIP together with its image preprocessing transform.
model, preprocess = clip.load("ViT-B/32", device="cuda")

root_dir = "/path/to/aro/datasets"

# Setting download=True downloads the dataset to `root_dir` if it is not already there.
# For VG-R and VG-A, this is a 1 GB zip file that is a subset of GQA.
vgr_dataset = VG_Relation(image_preprocess=preprocess, download=True, root_dir=root_dir)
vga_dataset = VG_Attribution(image_preprocess=preprocess, download=True, root_dir=root_dir)

# You can do anything with the datasets. Each item has a form like this:
# item = {"image_options": [image], "caption_options": [false_caption, true_caption]}
```
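Given items in the form shown above, one simple way to score a model is to check how often it rates the true caption above the false one. The sketch below assumes that form and nothing else about the library: `aro_accuracy`, both scoring functions, and the toy item are hypothetical, and the "image" is replaced by a set of tags so the example runs without a model.

```python
def aro_accuracy(items, score_fn):
    """Fraction of items where the true caption (index 1) outscores the false one (index 0)."""
    correct = 0
    for item in items:
        image = item["image_options"][0]
        false_score, true_score = (score_fn(image, c) for c in item["caption_options"])
        if true_score > false_score:
            correct += 1
    return correct / len(items)


def bag_of_words_score(image_tags, caption):
    """Order-blind stand-in scorer: counts caption words that appear among the image tags."""
    return sum(1 for word in caption.split() if word in image_tags)


def order_aware_score(image_tags, caption):
    """Stand-in scorer that happens to know the subject of this toy image."""
    return 1.0 if caption.startswith("the horse") else 0.0


# Toy item: the image is represented by a set of tags instead of pixels.
toy_items = [
    {
        "image_options": [{"horse", "grass", "eating"}],
        "caption_options": [
            "the grass is eating the horse",  # false caption
            "the horse is eating the grass",  # true caption
        ],
    }
]

bow_accuracy = aro_accuracy(toy_items, bag_of_words_score)
ordered_accuracy = aro_accuracy(toy_items, order_aware_score)
print(bow_accuracy, ordered_accuracy)
```

The order-blind scorer gives both captions the same score, so it never prefers the true one; with a real model, `score_fn` would instead compare the image embedding with each caption embedding.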
